A few weeks ago, I published an article about the idea of “smart bezels” in iOS devices as a way to avoid conflicts with third-party applications that use multitouch gestures. With Apple playing around with the concept of “multitasking gestures” in the latest iOS 4.3 betas, developers now have to find a way to enable three-, four-, or five-finger gestures without interfering with Apple’s own implementation:

The problem with the new gestures is that Apple decided to make them system-wide, activated with a preference panel in the Settings app. Once gestures are enabled, they override any other four- or five-finger gesture developers may have implemented in their applications. Personally, I have experienced issues trying to use multi-touch gestures in Edovia’s Screens and in piano apps, software that makes extensive use of gestures involving more than two or three fingers. Apple’s implementation overrides options set by developers, and there is no way to let the iPad know whether a user wants to perform an app-specific gesture or a system one, like “open the multitasking tray”.

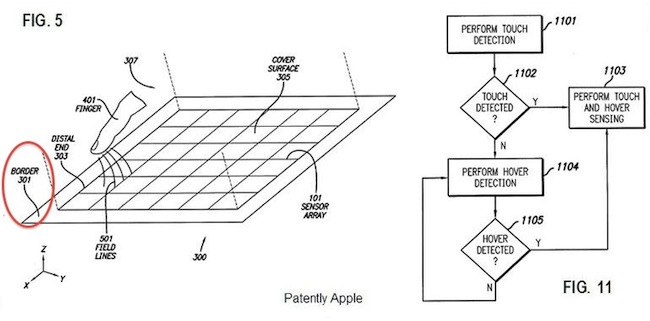

We’ve also seen recent patents awarded to Apple that confirm engineers and designers in Cupertino have been thinking about implementing new gestures (such as the hitchhiker one) and hover-sensitive surfaces in future iOS devices. A new patent uncovered by Patently Apple today provides further insight into Apple’s plans to create mobile devices that can recognize input without physical contact, as well as actions performed on smart bezels:

In this particular patent, Apple sets out to improve capacitive touch and hover sensing. A capacitive sensor array could be driven with electrical signals, such as alternating current (AC) signals, to generate electric fields that extend outward from the sensor array through a touch surface to detect a touch on the touch surface or an object hovering over the touch surface of a touch screen device, for example.

That’s the technological gist of the patent. Here’s the interesting part about bezels, or “borders”:

The hover position of an object in a border area outside the sensor array may be measured multiple times to determine multiple hover positions. The motion of the object could be determined corresponding to the multiple measured hover positions, and an input could be detected based on the determined motion of the object. For example, a finger detected moving upwards in a border area may be interpreted as a user input to increase the volume of music currently being played.

In some embodiments, the user input may control a GUI. For example, a finger detected moving in a border area may control a GUI item, such as an icon, a slider, a text box, a cursor, etc., in correspondence with the motion of the finger.
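
To make the patent's description more concrete, here is a minimal sketch of how repeated hover measurements in a border area could be turned into an input. This is not Apple's implementation: the `HoverSample` type, the `detect_border_input` function, the millimeter units, and the `min_travel` threshold are all hypothetical names and values we made up for illustration. The patent only mentions upward motion raising the volume; the downward case is our own assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HoverSample:
    """One measured hover position in the border area (hypothetical type)."""
    x: float  # horizontal position, in millimeters (assumed unit)
    y: float  # vertical position, in millimeters; larger means higher
    t: float  # timestamp, in seconds


def detect_border_input(samples: List[HoverSample],
                        min_travel: float = 10.0) -> Optional[str]:
    """Map a series of hover positions to an input, or None if unclear.

    The motion is estimated from the first and last samples only, which is
    a deliberate simplification. `min_travel` is an assumed threshold that
    filters out small, accidental movements.
    """
    if len(samples) < 2:
        return None  # a single measurement cannot describe motion

    dx = samples[-1].x - samples[0].x
    dy = samples[-1].y - samples[0].y

    # Ignore motion that is too short or mostly horizontal.
    if abs(dy) < min_travel or abs(dy) <= abs(dx):
        return None

    # Upward motion raises the volume, as in the patent's example.
    # Downward motion lowering it is our assumption.
    return "volume_up" if dy > 0 else "volume_down"


if __name__ == "__main__":
    swipe_up = [
        HoverSample(x=2.0, y=0.0, t=0.00),
        HoverSample(x=2.5, y=8.0, t=0.05),
        HoverSample(x=3.0, y=15.0, t=0.10),
    ]
    print(detect_border_input(swipe_up))
```

A real device would likely also look at speed, hover height, and noise in the measurements, but the basic flow stays the same: measure several positions, derive the motion, then map that motion to an action.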

The possibility of having touch-sensitive bezels on mobile devices (especially the iPad) makes a lot of sense in our opinion, particularly for games and apps that require several control buttons and actions that are usually displayed on screen, cluttering the view. Patents are usually a good indication of the concepts Apple is exploring and, unlike the mythical touch iMac, these patents about smart bezels could point to the feature actually becoming available in next-generation iOS devices.