Apple has traditionally prided itself on the tight integration of hardware and software it achieves in its products. As a company that builds devices and creates the software that runs on them, Apple can control fundamental aspects of the user experience, such as Siri relying on dedicated noise-reduction technology and iOS ignoring accidental touches on the iPad mini’s narrower bezels. It can also control subtle details, such as OS X stopping a Mac’s fans when Dictation is active or an iPad quickly muting if you hold the volume-down button for a few seconds.

The “interplay” of Apple’s hardware and software is nothing new, but I believe it was more apparent than ever today with the iPhone 5s, iOS 7, the A7 and M7 chips, and Touch ID.

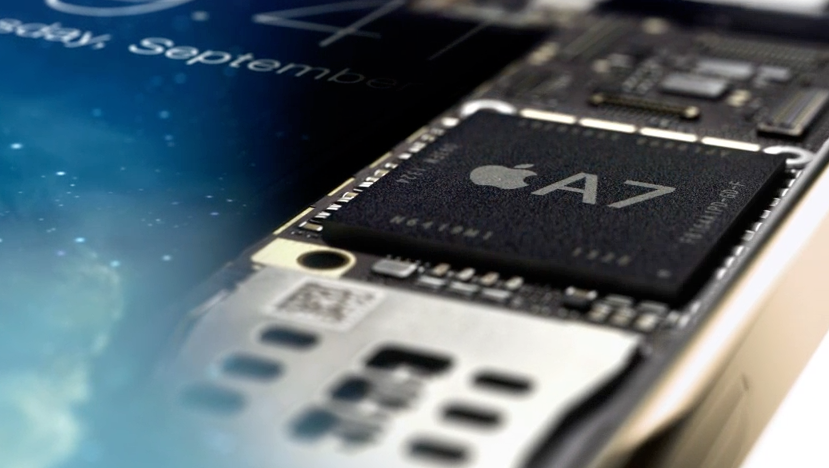

Apple makes no secret of their focus on integrating hardware components with complex software algorithms and processing techniques that lead to powerful end-user features that appear to “just work”. With today’s announcements, the concept is clear at the most basic level: the new A7 is a 64-bit processor, and, according to Apple, “iOS 7 was built specifically for 64-bit”, so it is “uniquely designed to take advantage of the A7 chip”. While this wording may be open to interpretation, I don’t think it’s far-fetched to guess that iOS 7 was always meant for the A7’s 64-bit architecture and then scaled back to 32-bit processors for older devices. As developers transition their apps to the new architecture and 64-bit CPUs progressively trickle down to the rest of the iOS line-up, users will end up with apps and games that are more powerful and advanced thanks to Apple’s custom silicon.

CPU advancements, however, are somewhat expected at this point, especially in the iPhone’s “S” (now retroactively known as “s”) refreshes. While 64-bit impresses developers and tech-savvy users, the average consumer only knows that the new iPhone is faster and more efficient, which isn’t surprising anymore. This is not to downplay the importance of 64-bit in iOS’ future, but I don’t think it’s the feature my parents and friends will be talking about tomorrow.

There are more futuristic and forward-looking examples of Apple’s interplay of hardware and software in today’s keynote. Take the camera, for instance. It is one of the iPhone’s biggest selling points, and certainly the one feature that has been upgraded every year since the iPhone 3GS. The 5s’ camera has a new five-element lens with a larger sensor and a wider aperture for better pictures. It’s advanced technology for a mobile phone. But, as Apple notes in a promotional video for the 5s, for users it simply means better-looking pictures, thanks to components under the hood they don’t need to know about. With a dual-LED flash system that pairs a white and an amber LED next to the 5s’ camera, users can take pictures in low light and end up with more natural skin tones and accurate, “true-to-life” colors. That’s a simple, almost obvious idea – of course photos should look natural! – but it’s only made possible by advancements in hardware that become invisible when they are used.

The Camera app’s 5s-only features are worth a mention as well. With the 5s’ A7 chip, iOS 7 can automatically adjust the camera’s white balance and exposure. It can also run algorithms that pick the best shot out of several frames captured behind the scenes when the shutter button is pressed, and provide automatic image stabilization, all without the user ever knowing what’s going on with the CPU, optics, and camera software. When all the pieces are combined, the user knows that the iPhone 5s can take slow-motion videos, shoot up to 10 photos per second, render people’s faces more naturally when the flash is on, remove shakiness, and zoom in on live video. Behind the scenes? iOS 7, the A7, and the camera sensor work in tandem to capture more light, analyze details such as closed eyes and motion, and then present the result through the interface. All the user needs to know is that the 5s takes better photos and has cool new features.
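Without knowing Apple’s actual criteria, here is one common heuristic for the “pick the best shot” step. It scores each burst frame by sharpness, computed as the variance of a discrete Laplacian over a grayscale image, and keeps the sharpest one. This is a minimal sketch under stated assumptions, not how the 5s does it. Frames are plain lists of pixel rows, and the names `laplacian_variance` and `pick_best_frame` are hypothetical.

```python
from statistics import pvariance
from typing import List

# Hypothetical representation: a grayscale frame as rows of pixel intensities.
Frame = List[List[float]]


def laplacian_variance(frame: Frame) -> float:
    """Sharpness score: variance of the 4-neighbour Laplacian over interior pixels.

    Sharp edges produce large positive and negative responses; a blurry or
    flat frame produces responses clustered near zero, hence a low variance.
    """
    responses = []
    for y in range(1, len(frame) - 1):
        for x in range(1, len(frame[0]) - 1):
            responses.append(frame[y - 1][x] + frame[y + 1][x]
                             + frame[y][x - 1] + frame[y][x + 1]
                             - 4 * frame[y][x])
    return pvariance(responses) if responses else 0.0


def pick_best_frame(burst: List[Frame]) -> int:
    """Return the index of the sharpest frame in a burst (first one on ties)."""
    return max(range(len(burst)), key=lambda i: laplacian_variance(burst[i]))


# Usage: a flat gray frame versus a high-contrast checkerboard.
flat = [[128.0] * 5 for _ in range(5)]
checker = [[255.0 if (x + y) % 2 else 0.0 for x in range(5)] for y in range(5)]
print(pick_best_frame([flat, checker]))  # prints 1
```

A real pipeline would weigh more than sharpness (the closed eyes and motion mentioned above, for example), but the shape is the same: score every frame, keep the best.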

Touch ID is the (expected) protagonist of a large portion of today’s news, but it shouldn’t be dismissed just because several leaks and rumor blogs predicted it. Based on a capacitive sensor built into the Home button, Touch ID matters for two key reasons: it hardens the iPhone’s security with an additional and unique piece of information, and it does so through iOS’ most common gesture, touching the Home button. Apple could have embedded the 5s’ fingerprint scanner in a separate area of the device, making it look “newer” as a standalone, visible component that demanded attention. Instead, they went for the obvious but, again, more complex route: building the sensor into the button everyone knows and leveraging the gesture everyone is familiar with. That choice also avoided a separate placement that could have broken the iPhone’s design simplicity. It’s a genius implementation because, like it or not, you’re going to know how to use Touch ID (and iOS 7 will prompt you to set it up during a 5s’ initial setup).

The way Touch ID works is even more emblematic of Apple’s persistent hardware/software push. A fingerprint scanner registers a template of your unique fingerprint and allows you to unlock an iPhone just by touching the Home button, skipping an entire step of the iPhone experience: slide to unlock. Anyone who has been around long enough to remember Steve Jobs’ original iPhone presentation at Macworld 2007 remembers the cheering that accompanied Jobs’ first “slide to unlock” gesture. It’s become so iconic that people have even made doormats based on it. And now it is being phased out because the iPhone 5s provides a more elegant, secure, natural way of unlocking the device. You can still set up passcodes and slide to unlock, but Touch ID is the Way of the Future™. I wouldn’t be surprised to learn that Apple shipped the first beta of iOS 7 with a more confusing layout of “slide to unlock” and Control Center arrows because they were testing iOS 7’s Lock screen primarily through Touch ID (a theory that circles back to the “built for 64-bit” aspect mentioned above).

Apple could have used iCloud or other servers to store a user’s fingerprint. Instead, they chose a dedicated area of the A7 processor, which they call the “Secure Enclave”, to act as the sole keeper of an encrypted (or, as Rich Mogull suggests, probably “hashed”) version of the fingerprint’s template. With the template stored locally inside the A7, iOS 7 can match the data read by the fingerprint sensor against the fingerprint it knows and allow users to unlock a device or authorize a purchase on iTunes. Touch ID would presumably have been possible with a scanner outside the Home button and data stored in iCloud, but, thanks to Apple’s invisible interplay, it’s better, easier, and safer than that. Touch ID may one day expand Apple’s reach into other markets that could benefit from secure, personal authorization, such as payments outside of iTunes. If that time comes, you can rest assured that Apple will rely on the combination of hardware and software they can control. In the more immediate future, it’s interesting to imagine how Touch ID could work with iBeacons – an upcoming feature that allows iOS 7 to better communicate with external devices…through hardware and software.

Last: the M7 motion coprocessor and the CoreMotion API. Described by Apple as a “sidekick” to the primary A7 processor, the M7 continuously monitors motion data. Essentially, it is an additional component that processes data recorded by the 5s’ gyroscope, compass, and accelerometer and feeds it to system and third-party apps through an API called CoreMotion.

From my iPhone 5s overview:

> According to Apple, the M7 will be power-efficient and gather data even when the iPhone 5s is asleep. By offloading work that would typically fall onto the CPU, the M7 is a “sidekick” that can make apps that use the accelerometer all day consume less power while providing more accurate data thanks to Apple’s algorithms and APIs.
>
> Another upside of contextual awareness is that Apple apps will use the M7 coprocessor in interesting new ways. For instance, the iOS 7 Maps app will be able to automatically switch from driving to walking directions if you park your car and continue on foot. When you’re driving, the iPhone 5s will understand that it’s in a moving car and won’t ask to join Wi-Fi networks. If the M7 tells the iPhone 5s that you’re likely asleep because the iPhone hasn’t moved in a while, network pings will be reduced to extend battery life.

Think about that for a second. iOS 7 and the iPhone 5s are now aware of data points like “the user is walking” or “the user is in a moving vehicle”. On a purely technical level, that involves plenty of jargon: algorithms, Earth’s axis, data parsing, location tracking, and power efficiency. For the end user, it will simply mean that the health and fitness apps she likes will know more accurately how much she walked or ran during the day. The iPhone will be smarter at gracefully disabling features when they’re not needed based on M7-powered data. Thanks to the sidekick approach, the impact on battery life will be minimal, if not completely unnoticeable. More importantly, developers of third-party apps that aren’t necessarily fitness- or health-related will be able to take advantage of this data without having to write their own parsers or directly query an iPhone’s accelerometer or gyroscope.
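The context-aware behaviors described above come down to simple rules keyed on an activity label. In iOS 7, Core Motion exposes labels such as stationary, walking, running, and automotive through `CMMotionActivityManager`, with the M7 doing the classification. The Python below is a platform-neutral sketch under that assumption, not Apple’s code. The `Activity` enum, the `adjustments` function, and the one-hour “likely asleep” threshold are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Activity(Enum):
    """Activity labels of the kind Core Motion reports (names are illustrative)."""
    STATIONARY = "stationary"
    WALKING = "walking"
    RUNNING = "running"
    AUTOMOTIVE = "automotive"
    UNKNOWN = "unknown"


# Hypothetical threshold: this long without movement counts as "likely asleep".
ASLEEP_AFTER_SECONDS = 60 * 60


@dataclass
class Adjustments:
    offer_wifi_networks: bool
    switch_directions_to: Optional[str]  # None means keep the current mode
    reduce_network_polling: bool


def adjustments(previous: Activity, current: Activity,
                stationary_seconds: float) -> Adjustments:
    """Map an activity transition to context-aware system behaviors."""
    parked_and_on_foot = (previous is Activity.AUTOMOTIVE
                          and current in (Activity.WALKING, Activity.RUNNING))
    return Adjustments(
        # Don't prompt to join Wi-Fi networks from a moving car.
        offer_wifi_networks=current is not Activity.AUTOMOTIVE,
        # Driving directions become walking directions once the user is on foot.
        switch_directions_to="walking" if parked_and_on_foot else None,
        # A long stationary stretch suggests the user is asleep: ping less often.
        reduce_network_polling=(current is Activity.STATIONARY
                                and stationary_seconds >= ASLEEP_AFTER_SECONDS),
    )


# Usage: the user parks the car and continues on foot.
parked = adjustments(Activity.AUTOMOTIVE, Activity.WALKING, stationary_seconds=0)
print(parked.switch_directions_to, parked.offer_wifi_networks)  # prints walking True
```

The sidekick design shows up in the function signature: the rules consume a ready-made label rather than raw accelerometer or gyroscope readings, which is exactly the parsing work developers no longer have to do.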

Combined with iOS 7’s new Background App Refresh system, context awareness will bring apps that launch with your data already available at the right time, and apps that are more flexible in their interfaces and UX choices. This is a potential game-changer for several ideas behind modern app making. Imagine a health-tracking app that suggests taking a walk on days when you’ve spent too much time at your desk, but a shower after you’ve run for two hours. It also fits well with iOS 7’s focus on stripping away ornamentation to provide a UI that is more versatile and focused on content. The fact that Apple, and not a third-party hardware maker, is providing easy access to data natively tracked and parsed by an iPhone’s chip will be a huge boon for developers of all kinds of iPhone apps. Nike is already on board, and look at what people who don’t depend on external hardware think of the M7 and CoreMotion. The implications for new app genres, software features[1], and potential new product categories for Apple are vast, long-term, and firmly in the realm of the futuristic.
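The health-app idea above can be sketched as a rule over a day’s activity totals, such as totals an app might derive from motion history recorded by the M7. Everything here is illustrative. The `DaySummary` fields, the `suggest` function, and the thresholds are hypothetical; only the two-hour running figure comes from the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DaySummary:
    # Hypothetical daily totals, in minutes, per activity type.
    stationary_minutes: float
    walking_minutes: float
    running_minutes: float


# Hypothetical thresholds, for illustration only.
RUN_FOR_SHOWER_MINUTES = 120            # "after you've run for 2 hours"
DESK_DAY_STATIONARY_MINUTES = 8 * 60    # assumed "too much time at your desk"
DESK_DAY_WALKING_MINUTES = 30           # assumed "barely walked today"


def suggest(day: DaySummary) -> Optional[str]:
    """Return a context-aware suggestion, or None if nothing stands out."""
    # A long run takes priority over everything else.
    if day.running_minutes >= RUN_FOR_SHOWER_MINUTES:
        return "Time for a shower."
    # Mostly sitting and barely walking: nudge the user outside.
    if (day.stationary_minutes >= DESK_DAY_STATIONARY_MINUTES
            and day.walking_minutes < DESK_DAY_WALKING_MINUTES):
        return "You've been at your desk a lot today. How about a walk?"
    return None


# Usage: nine hours stationary, ten minutes of walking, no running.
print(suggest(DaySummary(540, 10, 0)))
```

The interesting part is not the thresholds but the input: the app reasons over labeled activity time instead of sensor samples.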

There is one common thread in today’s announcements: an invisible interplay of hardware and software. Today, we’ve seen Apple doing one of the things they do best: creating native apps and features that are uniquely built for Apple’s components and OS while laying the groundwork for third-party developers to start figuring out what’s next.

To paraphrase and mix Ive and Jobs, technology for technology’s sake is not enough. The iPhone 5s and iOS 7 offer a glimpse of a promising, smarter future.

[1] Free app idea: music playlists based on driving/walking context.