At its Google I/O developer conference, Google announced a series of new features coming to Google Maps and to the Google Lens feature of its Photos and Assistant apps in the coming weeks and months.

During the Google I/O keynote, the company demonstrated augmented reality navigation that combines a camera view of your location with superimposed walking directions. The feature, which works with a device’s camera, can also point out landmarks and overlay other information about the surrounding environment.

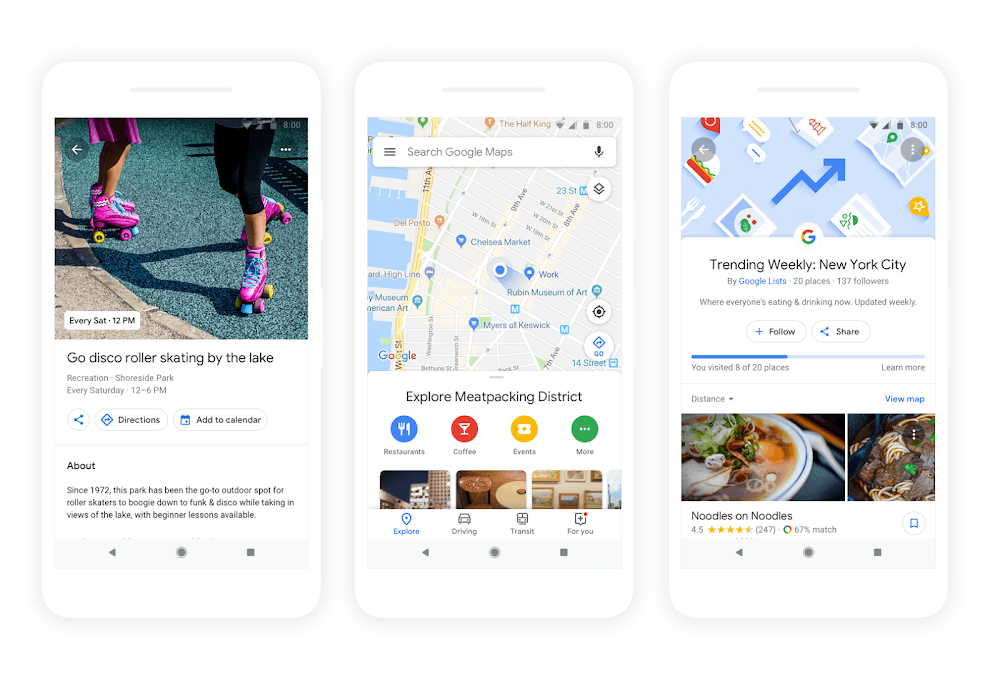

Google Maps is gaining a dedicated ‘For You’ tab too. The new tab will suggest nearby businesses, restaurants, and other activities based on things you’ve rated, places you’ve visited, and other input. The same sorts of inputs will be used in Maps’ new match score, which will predict how much you will like a particular destination and is designed to help make picking between multiple destinations easier. Maps will also allow users to quickly create lists of suggested destinations, share them with friends, and vote on where to go.

Google Lens, which is incorporated into the Google Photos and Assistant apps, is also gaining new features. Much like the iOS app Prizmo Go, Lens will be able to recognize text in books and documents viewed through the camera, allowing you to highlight, copy, and paste the text into other apps. Lens is also adding a Style Match feature, which allows users to point a camera at something and see similar items. In a demonstration, Google pointed Lens at a lamp, which generated a list of similar lamps almost instantly.

More than ever, Google is showing what can be accomplished with the vast amount of data it can bring to bear in real time on mobile devices. The insights that are possible may seem creepy to some people, but if used responsibly, they allow Google to provide powerful contextual information to its users.