Apple is releasing iOS and iPadOS 18.2 today, and with those software updates, the company is rolling out the second wave of Apple Intelligence features as part of their previously announced roadmap that will culminate with the arrival of deeper integration between Siri and third-party apps next year.

In today’s release, users will find native integration between Siri and ChatGPT, more options in Writing Tools, a smarter Mail app with automatic message categorization, generative image creation in Image Playground, Genmoji, Visual Intelligence, and more. It’s certainly a more ambitious rollout than the somewhat disjointed debut of Apple Intelligence with iOS 18.1, and one that will garner more attention if only by virtue of Siri’s native access to OpenAI’s ChatGPT.

And yet, despite the long list of AI features in these software updates, I find myself mostly underwhelmed – if not downright annoyed – by the majority of the Apple Intelligence changes, but not for the reasons you may expect coming from me.

Some context is necessary here. As I explained in a recent episode of AppStories, I’ve embarked on a bit of a journey lately in terms of understanding the role of AI products and features in modern software. I’ve been doing a lot of research, testing, and reading about the different flavors of AI tools that we see pop up on almost a daily basis now in a rapidly changing landscape. As I discussed on the show, I’ve landed on two takeaways, at least for now:

- I’m completely uninterested in generative products that aim to produce images, video, or text to replace human creativity and input. I find products that create fake “art” sloppy, distasteful, and objectively harmful for humankind because they aim to replace the creative process with a thoughtless approximation of what it means to be creative and express one’s feelings, culture, and craft through genuine, meaningful creative work.

- I’m deeply interested in the idea of assistive and agentic AI as a means to remove busywork from people’s lives and, well, assist people in the creative process. In my opinion, this is where the more intriguing parts of the modern AI industry lie:

  - agents that can perform boring tasks for humans with a higher degree of precision and faster output;

  - coding assistants to put software in the hands of more people and allow programmers to tackle higher-level tasks;

  - RAG-infused assistive tools that can help academics and researchers; and

  - protocols, such as Anthropic’s Model Context Protocol, that can map an LLM to external data sources.

I see these tools as a natural evolution of automation and, as you can guess, that has inevitably caught my interest. The implications for the Accessibility community in this field are also something we should keep in mind.

To put it more simply, I think empowering LLMs to be “creative” with the goal of displacing artists is a mistake, and also a distraction – a glossy facade largely amounting to a party trick that gets boring fast and misses the bigger picture of how these AI tools may practically help us in the workplace, healthcare, biology, and other industries.

This is how I approached my tests with Apple Intelligence in iOS and iPadOS 18.2. For the past month, I’ve extensively used Claude to assist me with the making of advanced shortcuts, used ChatGPT’s search feature as a Google replacement, indexed the archive of my iOS reviews with NotebookLM, relied on Zapier’s Copilot to more quickly spin up web automations, and used both Sonnet 3.5 and GPT-4o to rethink my Obsidian templating system and note-taking workflow. I’ve used AI tools for real, meaningful work that revolved around me – the creative person – doing the actual work and letting software assist me. And at the same time, I tried to add Apple’s new AI features to the mix.

Perhaps it’s not “fair” to compare Apple’s newfangled efforts to products by companies that have been iterating on their LLMs and related services for the past five years, but when the biggest tech company in the world makes bold claims about their entrance into the AI space, we have to take them at face value.

It’s been an interesting exercise to see how far behind Apple is compared to OpenAI and Anthropic in terms of the sheer capabilities of their respective assistants; at the same time, I believe Apple has some serious advantages in the long term as the platform owner, with untapped potential for integrating AI more deeply within the OS and apps in a way that other AI companies won’t be able to. There are parts of Apple Intelligence in 18.2 that hint at much bigger things to come in the future that I find exciting, as well as features available today that I’ve found useful and, occasionally, even surprising.

With this context in mind, in this story you won’t see any coverage of Image Playground and Image Wand, which I believe are ridiculously primitive and perfect examples of why Apple may think they’re two years behind their competitors. Image Playground in particular produces “illustrations” that you’d be kind to call abominations; they remind me of the worst Midjourney creations from 2022. Instead, I will focus on the more assistive aspects of AI and share my experience with trying to get work done using Apple Intelligence on my iPhone and iPad alongside its integration with ChatGPT, which is the marquee addition of this release.

Let’s dive in.

ChatGPT Integration: Siri and Writing Tools

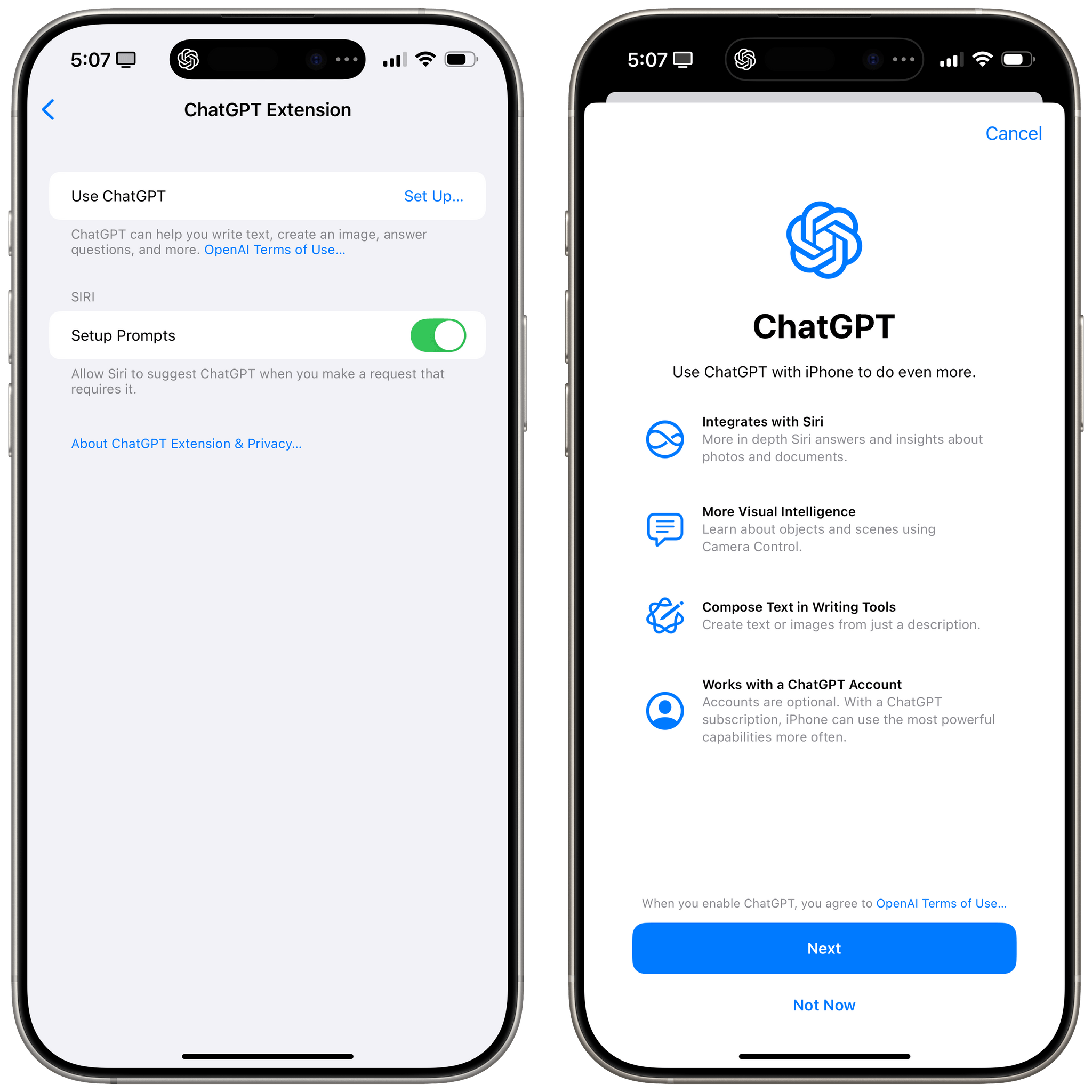

Apple Intelligence in iOS and iPadOS 18.2 offers direct integration with OpenAI’s ChatGPT using the GPT-4o model. This is based on a ChatGPT extension that can be enabled in Settings ⇾ Apple Intelligence & Siri ⇾ Extensions.

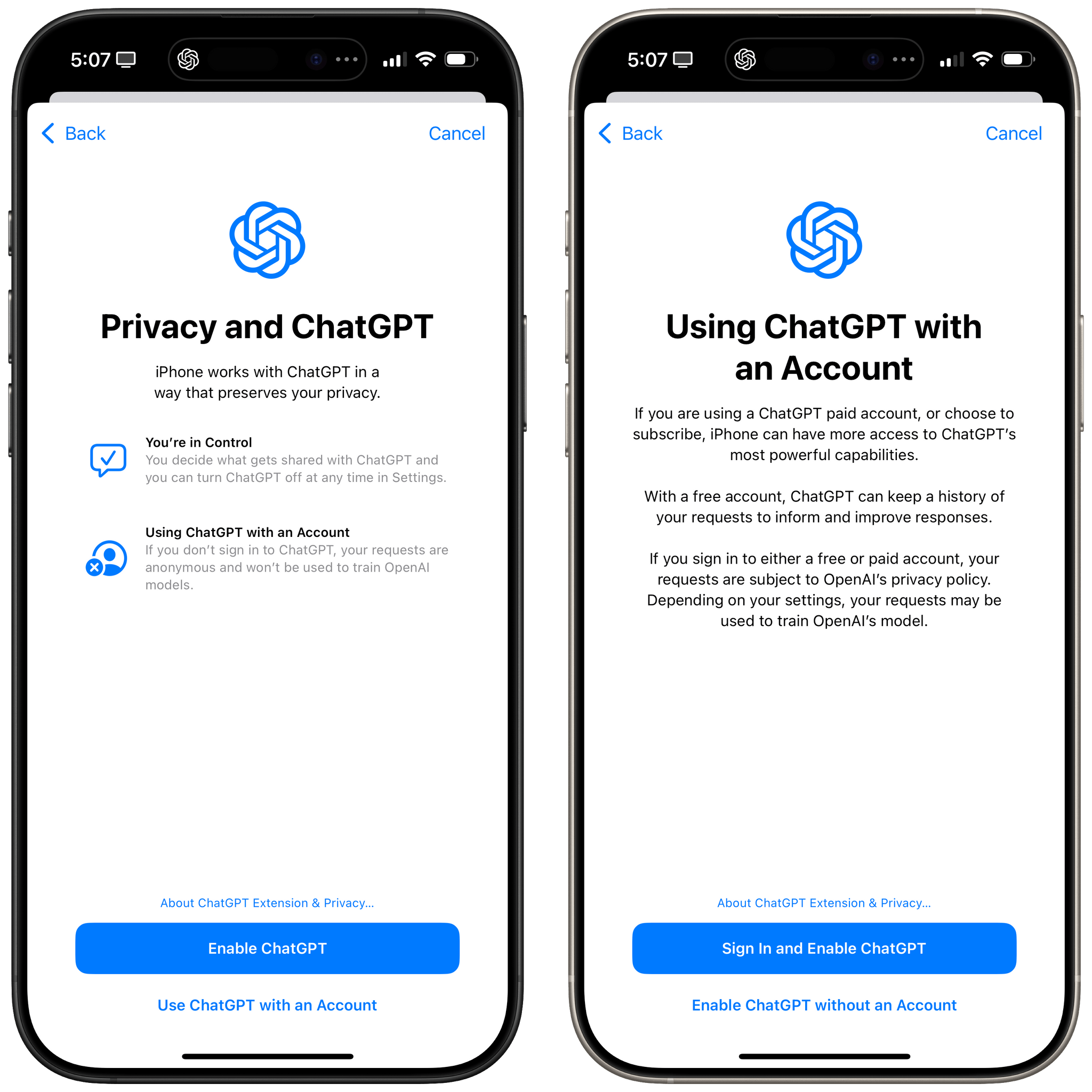

The mere existence of an ‘Extensions’ section seems to confirm that Apple may consider offering other LLMs in the future in addition to ChatGPT, but that’s a story for another time. For now, you can only choose to activate the ChatGPT extension (it’s turned off by default), and in doing so, you have two options. You can choose to use ChatGPT as an anonymous, signed-out user. In this case, your IP address will be obscured on OpenAI’s servers, and only the contents of your request will be sent to ChatGPT. According to Apple, while in this mode, OpenAI must process your request and discard it afterwards; furthermore, the request won’t be used to improve or train OpenAI’s models.

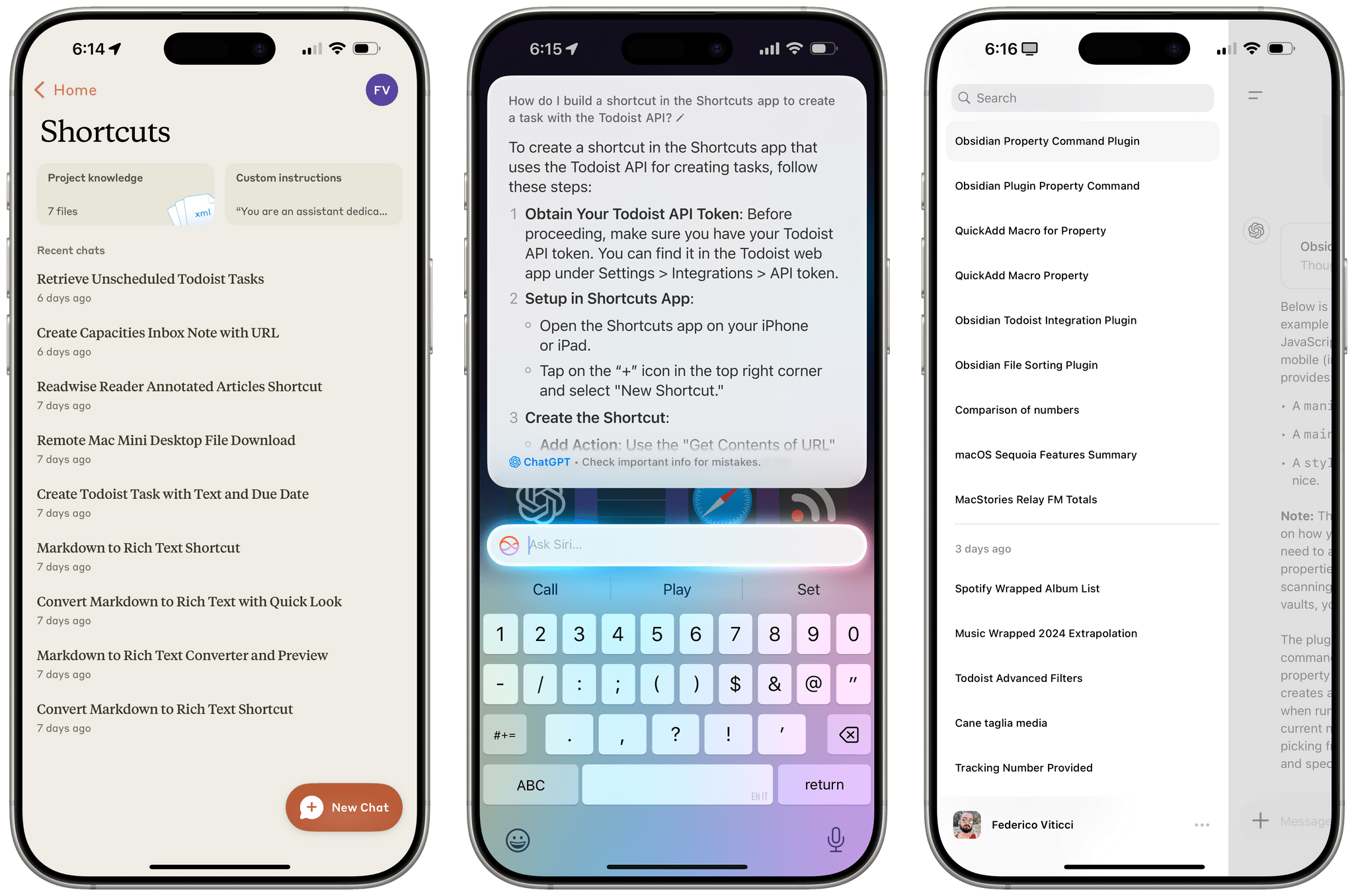

You can also choose to log in with an existing ChatGPT account directly from the Settings app. When logged in, OpenAI’s data retention policies will apply, and your requests may be used for training of the company’s models. Furthermore, your conversations with Siri that involve ChatGPT processing will be saved in your OpenAI account, and you’ll be able to see your previous Siri requests in ChatGPT’s conversation sidebar in the ChatGPT app and website.

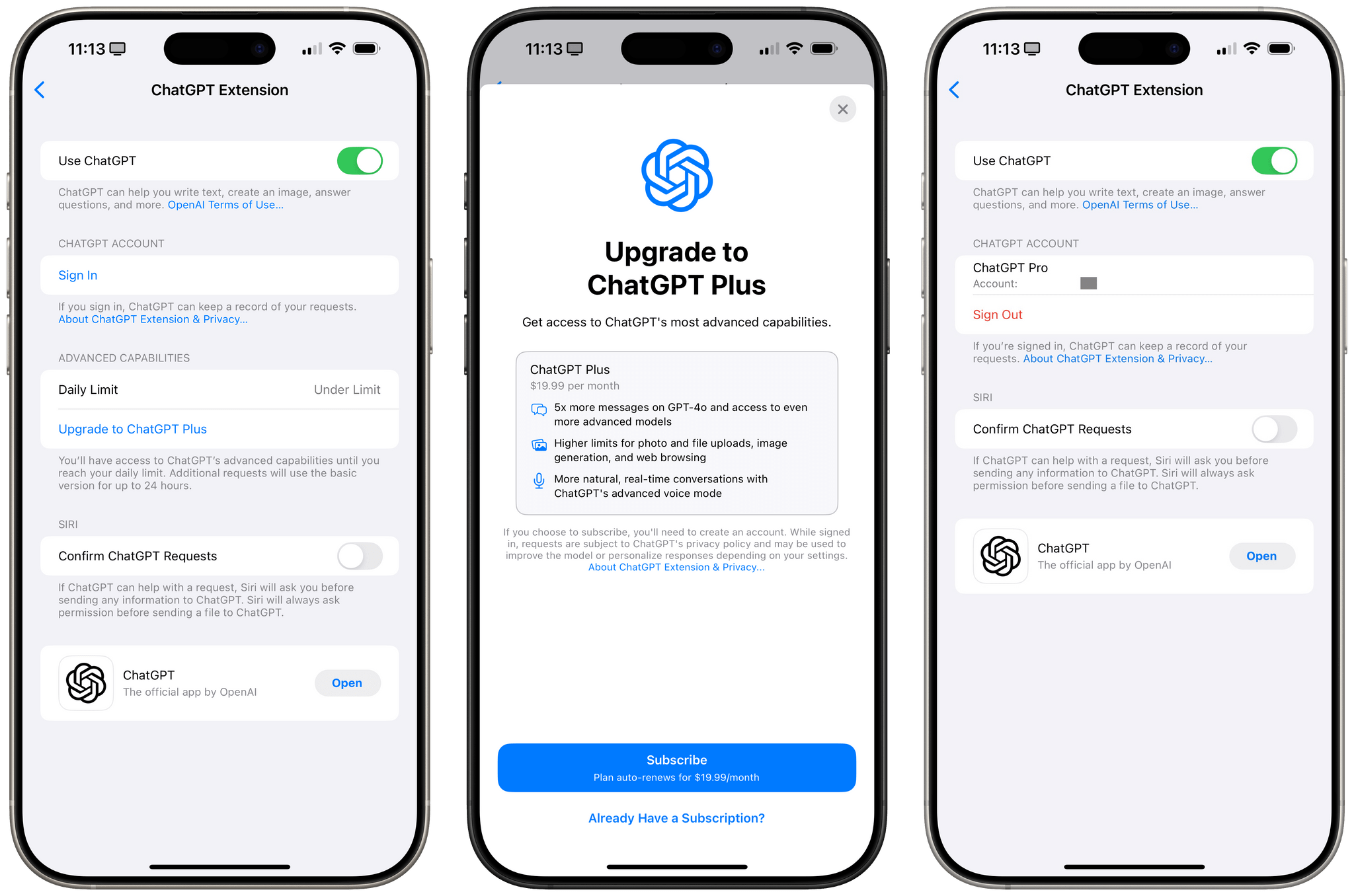

You have the option to use ChatGPT for free or with your paid ChatGPT Plus account. In the ChatGPT section of the Settings app, Apple shows the limits that are in place for free users and offers an option to upgrade to a Plus account directly from Settings. According to Apple, only a small number of requests that use the latest GPT-4o and DALL-E 3 models can be processed for free before having to upgrade. For this article, I used my existing ChatGPT Plus account, so I didn’t run into any limits.

But how does Siri actually determine if ChatGPT should swoop in and answer a question on its behalf? There are more interesting caveats and implementation details worth covering here.

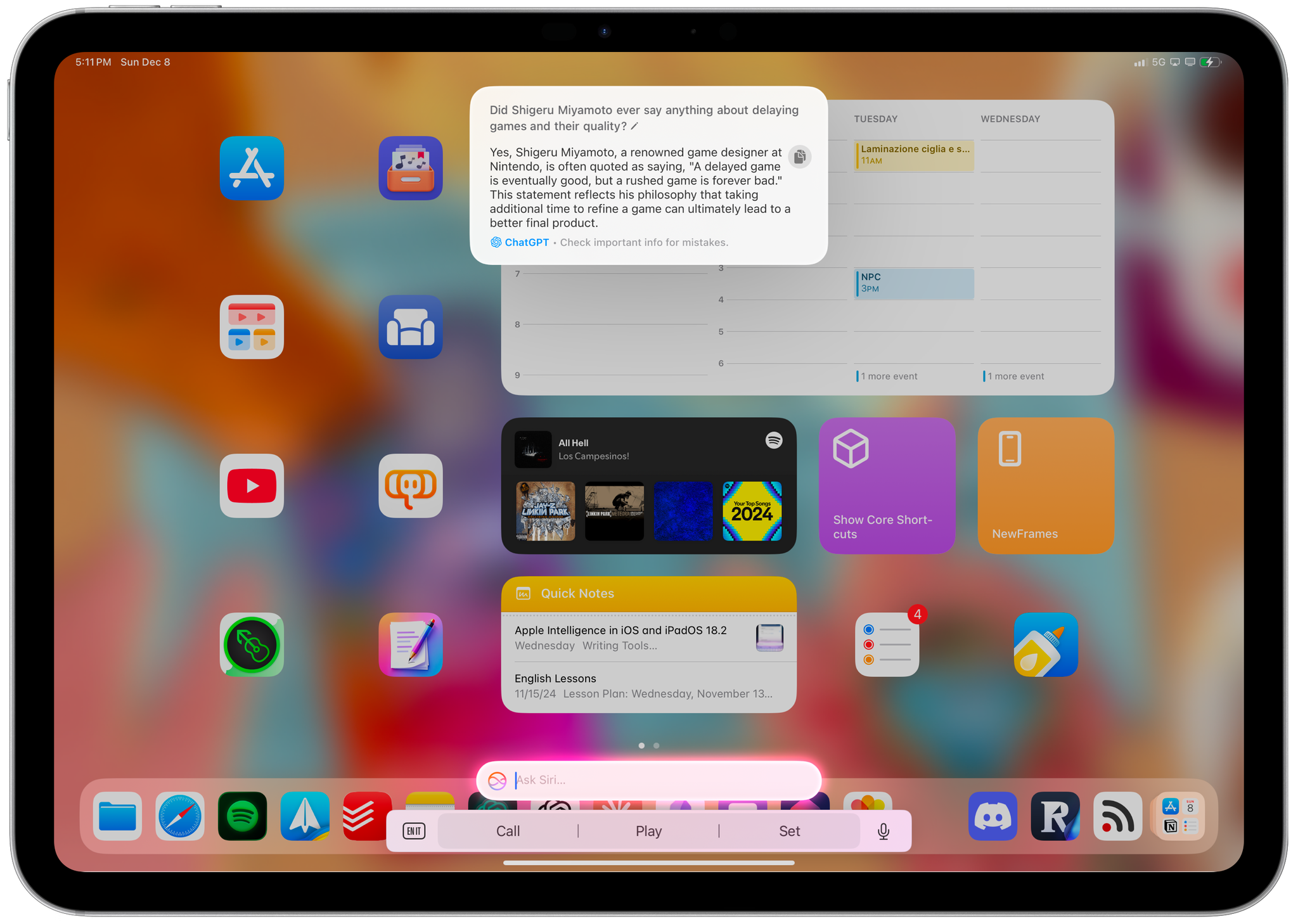

By default, Siri tries to determine if any regular request may be best answered by ChatGPT rather than Siri itself. In my experience, this usually means that more complicated questions or those that pertain to “world knowledge” outside of Siri’s domain get handed off to ChatGPT and are subsequently displayed by Siri with its new “snippet” response style in iOS 18 that looks like a taller notification banner.

For instance, if I ask “What’s the capital of Italy?”, Siri can respond with a rich snippet that includes its own answer accompanied by a picture. However, if I ask “What’s the capital of Italy, and has it always been the capital of Italy?”, the additional information required causes Siri to automatically fall back to ChatGPT, which provides a textual response.

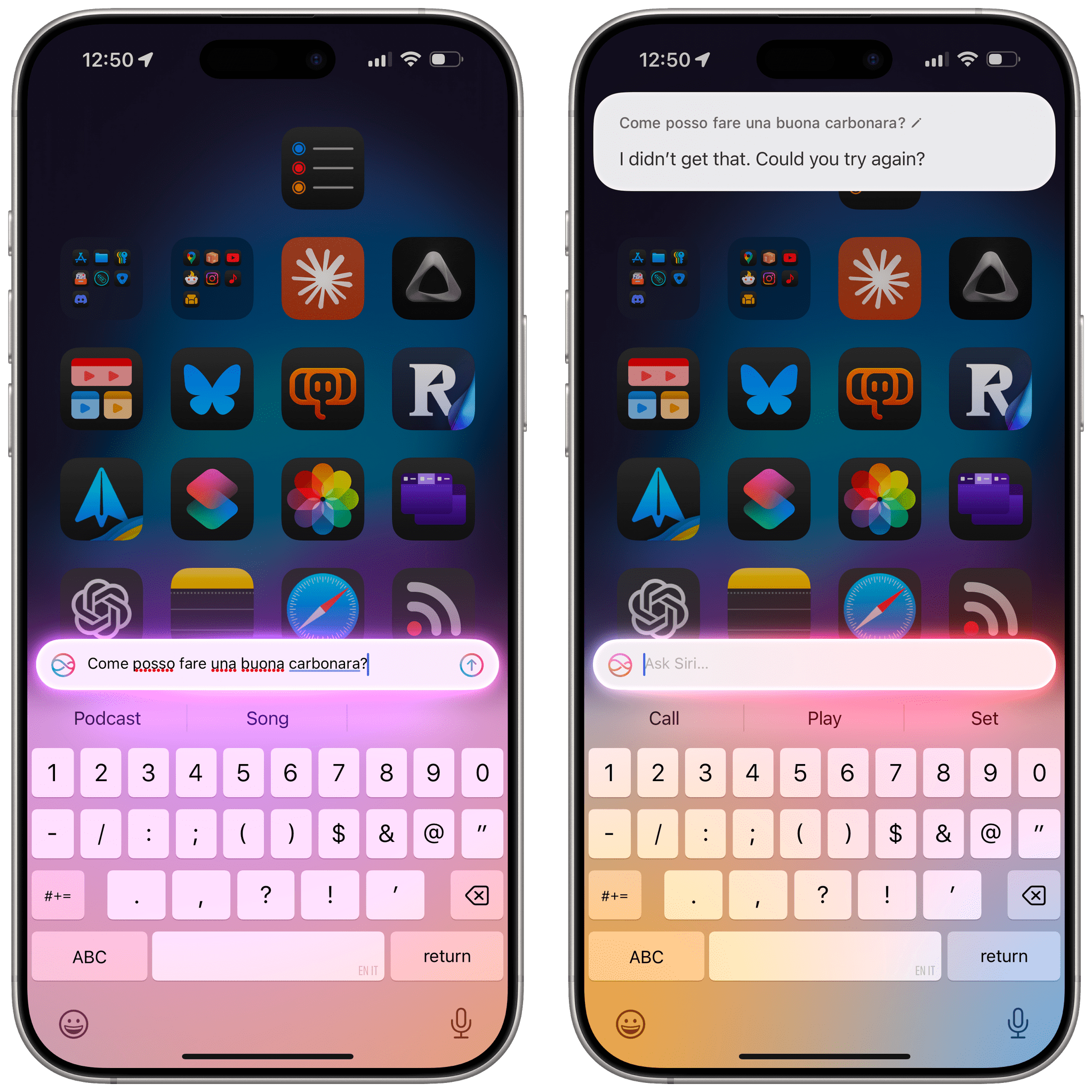

Basic questions (left) can still be answered by Siri itself; ask for more details, however, and ChatGPT comes in.

Siri knows its limits; effectively, ChatGPT has replaced the “I found this on the web” results that Siri used to bring up before it had access to OpenAI’s knowledge. In the absence of a proper Siri LLM (more on this later), I believe this is a better compromise than the older method that involved Google search results. At the very least, now you’re getting an answer instead of a bunch of links.

You can also format your request to explicitly ask Siri to query ChatGPT. Starting your request with “Ask ChatGPT…” is a foolproof technique to go directly to ChatGPT, and you should use it any time you’re sure Siri won’t be able to answer immediately.

I should also note that, by default, Siri in iOS 18.2 will always confirm with you whether you want to send a request to ChatGPT. There is, however, a way to turn off these confirmation prompts: on the ChatGPT Extension screen in Settings, turn off the ‘Confirm ChatGPT Requests’ option, and you’ll no longer be asked if you want to pass a request to ChatGPT every time. Keep in mind, though, that this preference is ignored when you’re sending files to ChatGPT for analysis, in which case you’ll always be asked to confirm your request since those files may contain sensitive information.
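To make the rules above easier to follow, here is a toy model of the handoff as I understand it from my tests. This is a sketch under stated assumptions, not Apple's implementation: the `Request` type, the `siri_can_answer` flag (a stand-in for whatever Siri does internally to judge a request), and the `route` function are all hypothetical names I made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    text: str
    has_file: bool = False         # e.g. a PDF or screenshot attached to the request
    siri_can_answer: bool = False  # hypothetical stand-in for Siri's own opaque judgment


def route(request: Request, confirm_requests: bool = True) -> tuple[str, bool]:
    """Return (handler, needs_confirmation) for a request.

    Assumed rules, based only on the behavior described in the article:
    - "Ask ChatGPT..." always goes straight to ChatGPT.
    - Requests about a file are handed to ChatGPT.
    - Otherwise Siri answers if it can, and falls back to ChatGPT if it can't.
    - The 'Confirm ChatGPT Requests' setting is ignored when a file is involved.
    """
    explicit = request.text.lower().startswith("ask chatgpt")
    if not explicit and not request.has_file and request.siri_can_answer:
        return ("siri", False)
    needs_confirmation = confirm_requests or request.has_file
    return ("chatgpt", needs_confirmation)
```

With confirmations turned off, `route(Request("Summarize this", has_file=True), confirm_requests=False)` still asks for confirmation, which matches what I saw when sending files.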

By default, you’ll be asked to confirm if you want to use ChatGPT to answer questions. You can turn this off.

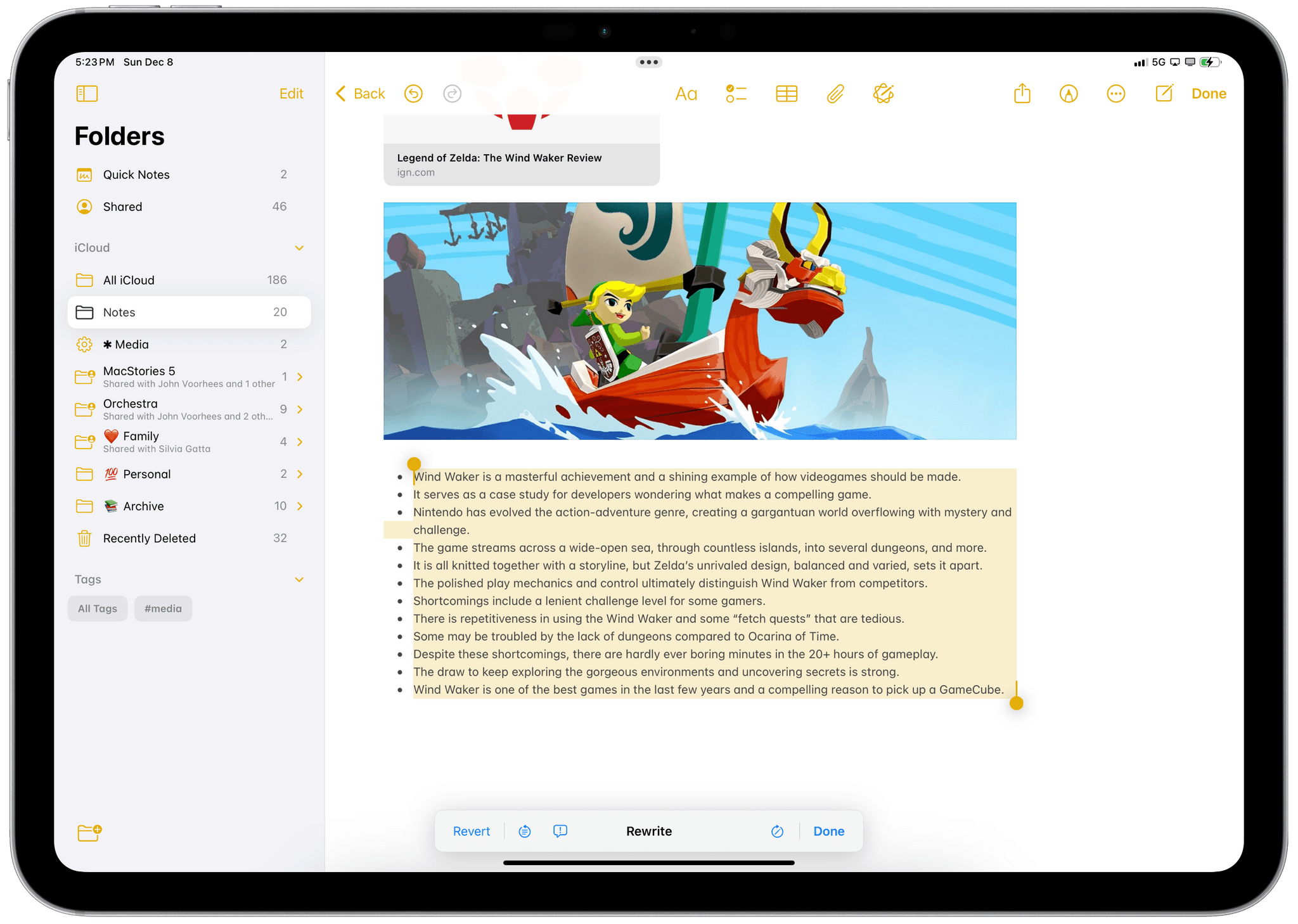

The other area of iOS and iPadOS that is receiving ChatGPT integration today is Writing Tools, which debuted in iOS 18.1 as an Apple Intelligence-only feature. As we know, Writing Tools are now prominently featured system-wide in any text field thanks to their placement in the edit menu, and they’re also available directly in the top toolbar of the Notes app.

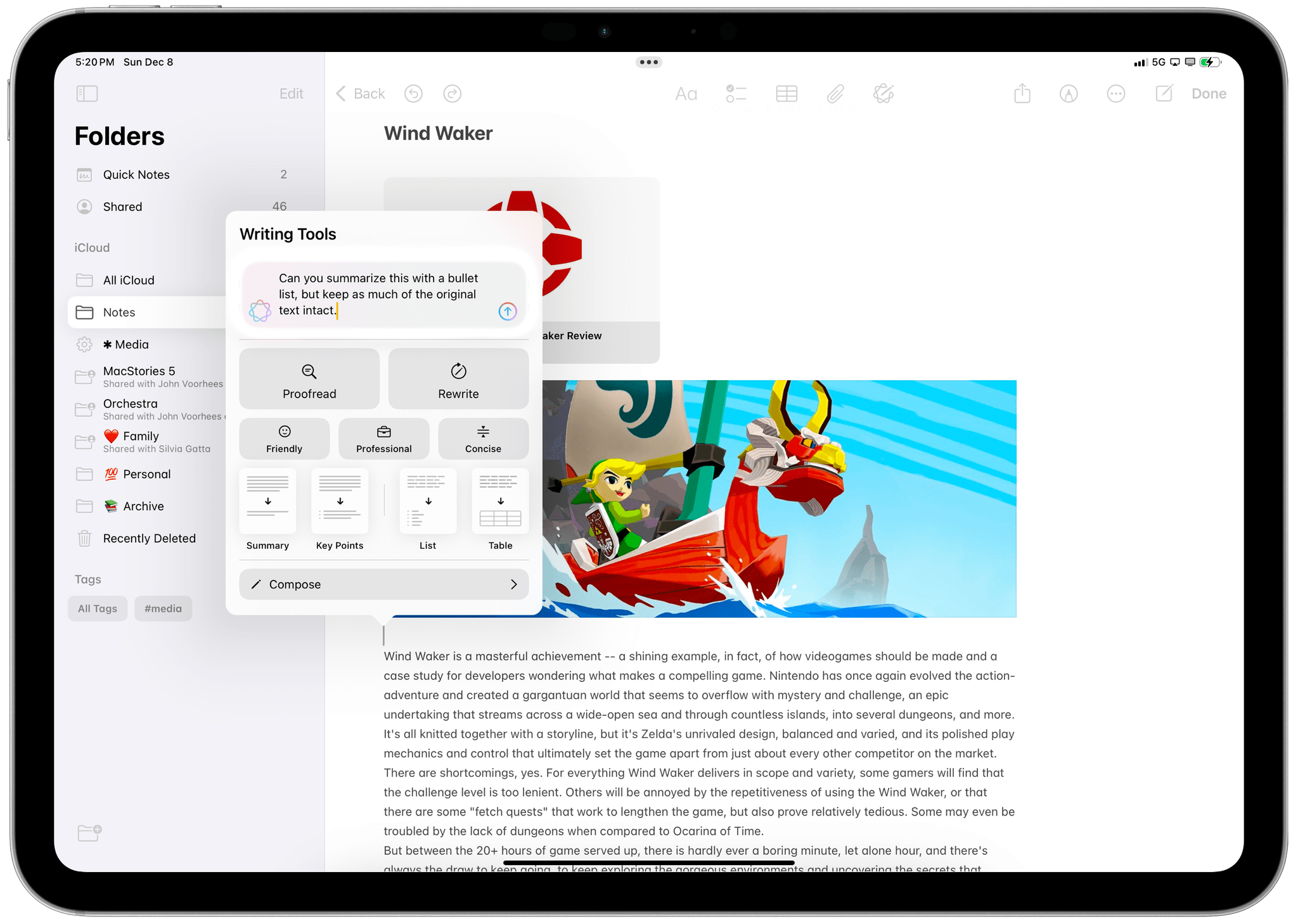

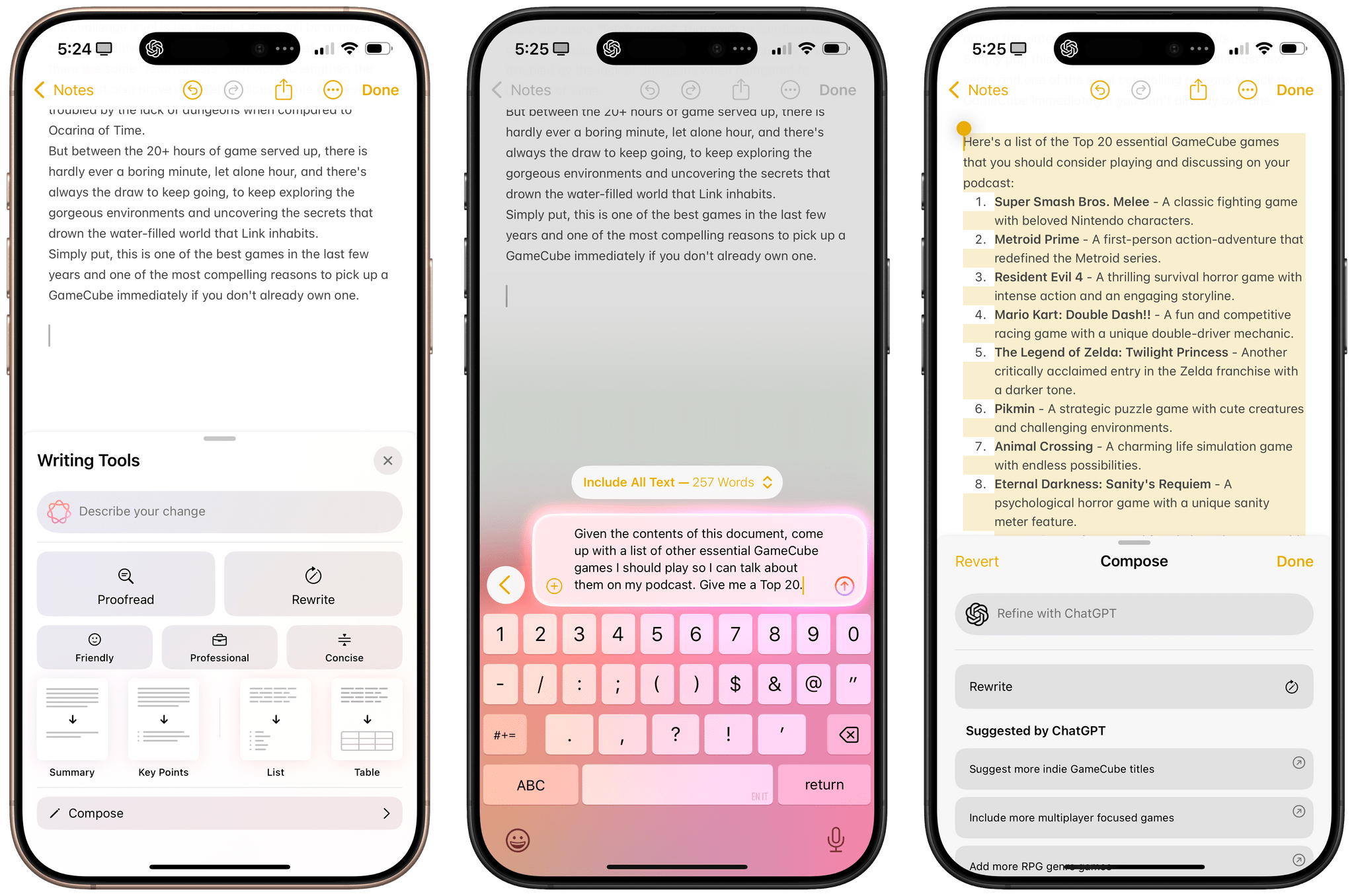

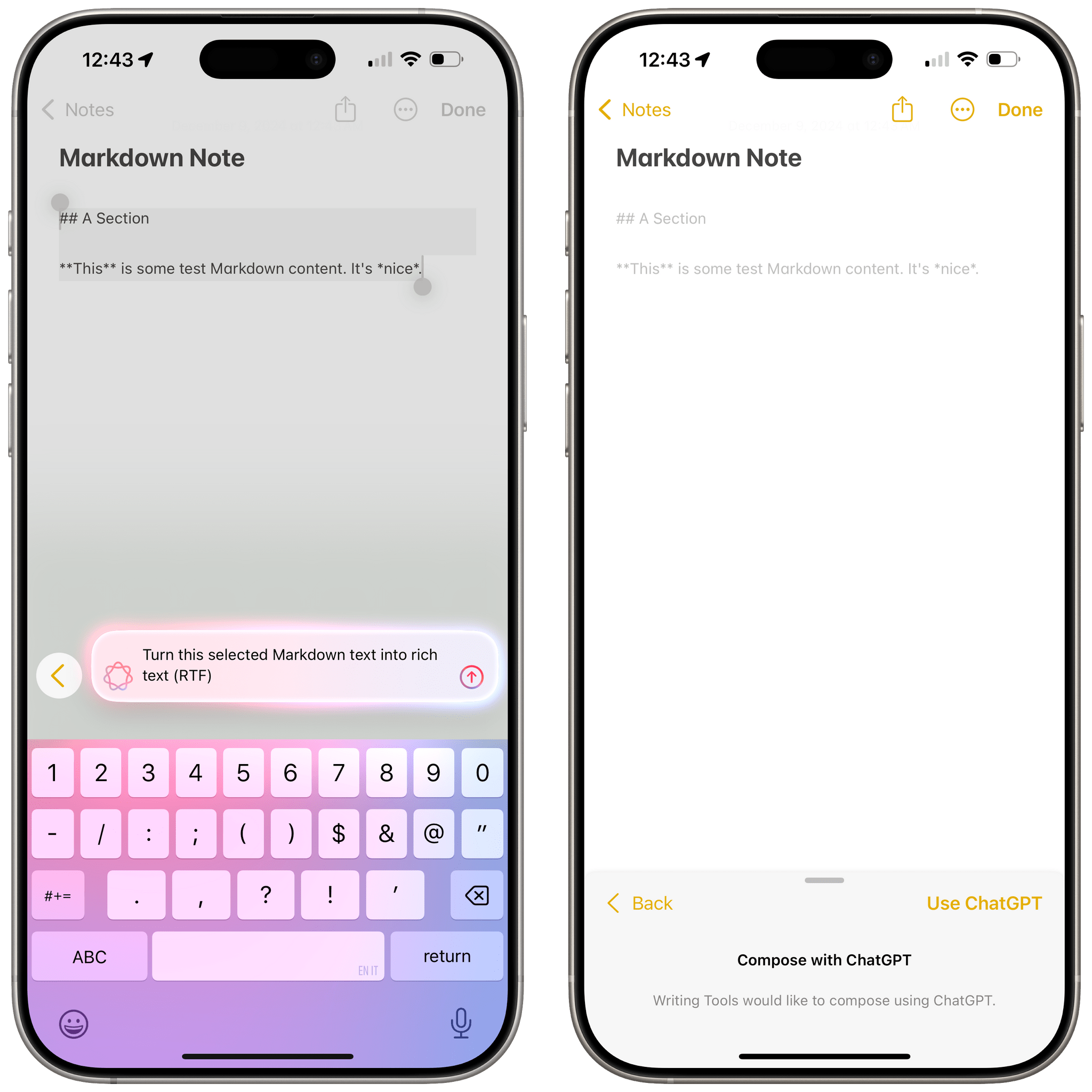

In iOS 18.2, Writing Tools gain the ability to refine text by letting you describe changes you want made, and they also come with a new ‘Compose’ submenu powered by ChatGPT, which lets you ask OpenAI’s assistant to write something for you based on the content of the document you’re working on.

If the difference between the two sounds confusing, you’re not alone. Here’s how you can think about it, though: the ‘Describe your change’ text field at the top of Writing Tools defaults to asking Apple Intelligence, but may fall back to ChatGPT if Apple Intelligence doesn’t know what you mean; the Compose menu always uses ChatGPT. It’s essentially just like Siri, which tries to answer on its own, but may rely on ChatGPT and also includes a manual override to skip Apple Intelligence altogether.

The ability to describe changes is a more freeform way to rewrite text beyond the three default buttons available in Writing Tools for Friendly, Professional, and Concise tones.

With Compose, you can use the contents of a note as a jumping-off point to add any other content you want via ChatGPT.

You can also refine results in the Compose screen with follow-up questions while retaining the context of the current document. In this case, ChatGPT composed a list of more games similar to Wind Waker, which was the main topic of the note.

In testing the updated Writing Tools with ChatGPT integration, I’ve run into some limitations that I will cover below, but I also had two very positive experiences with the Notes app that I want to mention here since they should give you an idea of what’s possible.

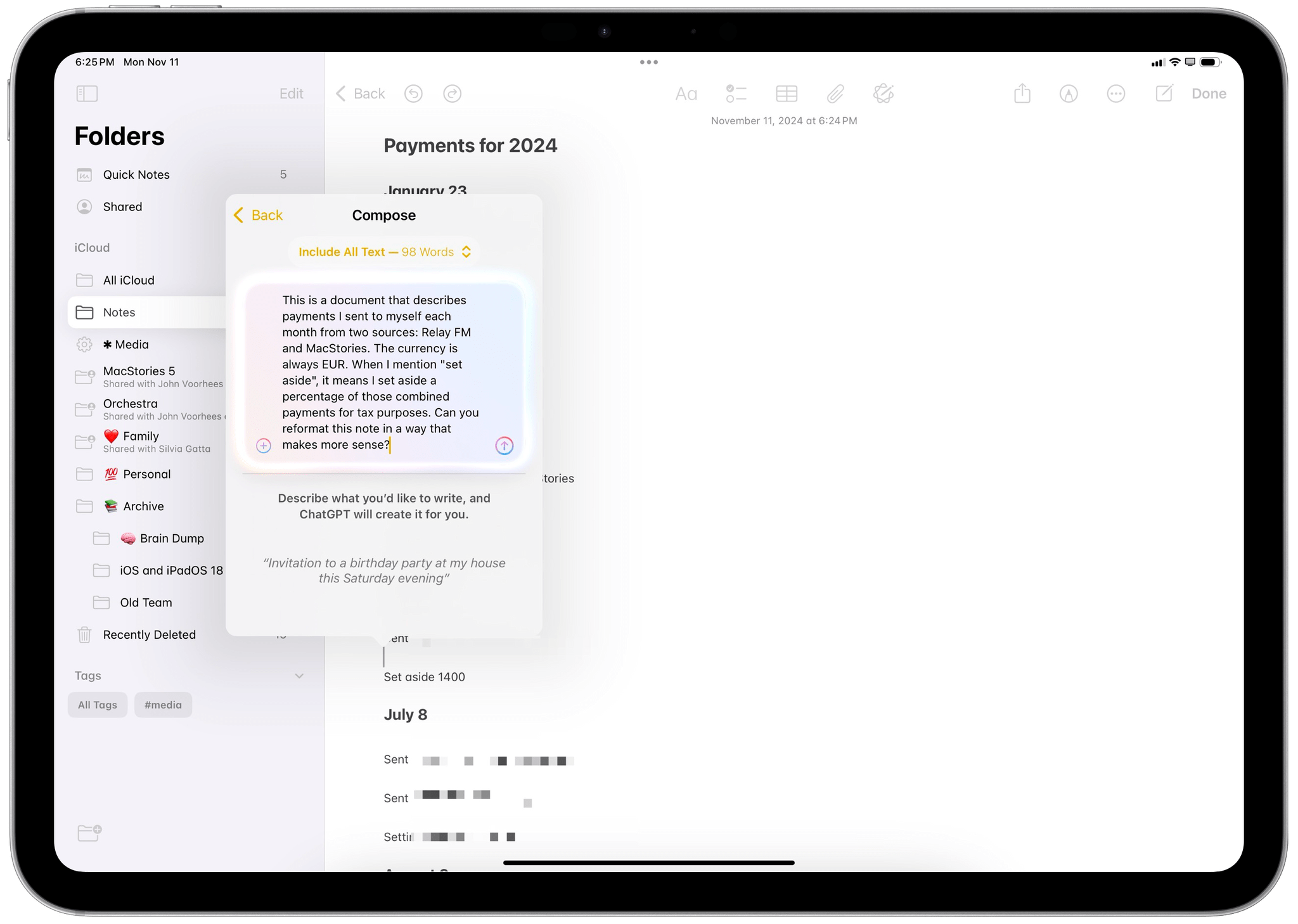

In my first test, I was working with a note that contained a list of payments for my work at MacStories and Relay FM, plus the amount of taxes I was setting aside each month. The note originated in Obsidian, and after I pasted it into Apple Notes, it lost all its formatting.

There were no proper section headings, the formatting was inconsistent between paragraphs, and the monetary amounts had been entered with different currency symbols for EUR. I wanted to make the note look prettier with consistent formatting, so I opened the ‘Compose’ field of Writing Tools and sent ChatGPT the following request:

This is a document that describes payments I sent to myself each month from two sources: Relay FM and MacStories. The currency is always EUR. When I mention “set aside”, it means I set aside a percentage of those combined payments for tax purposes. Can you reformat this note in a way that makes more sense?

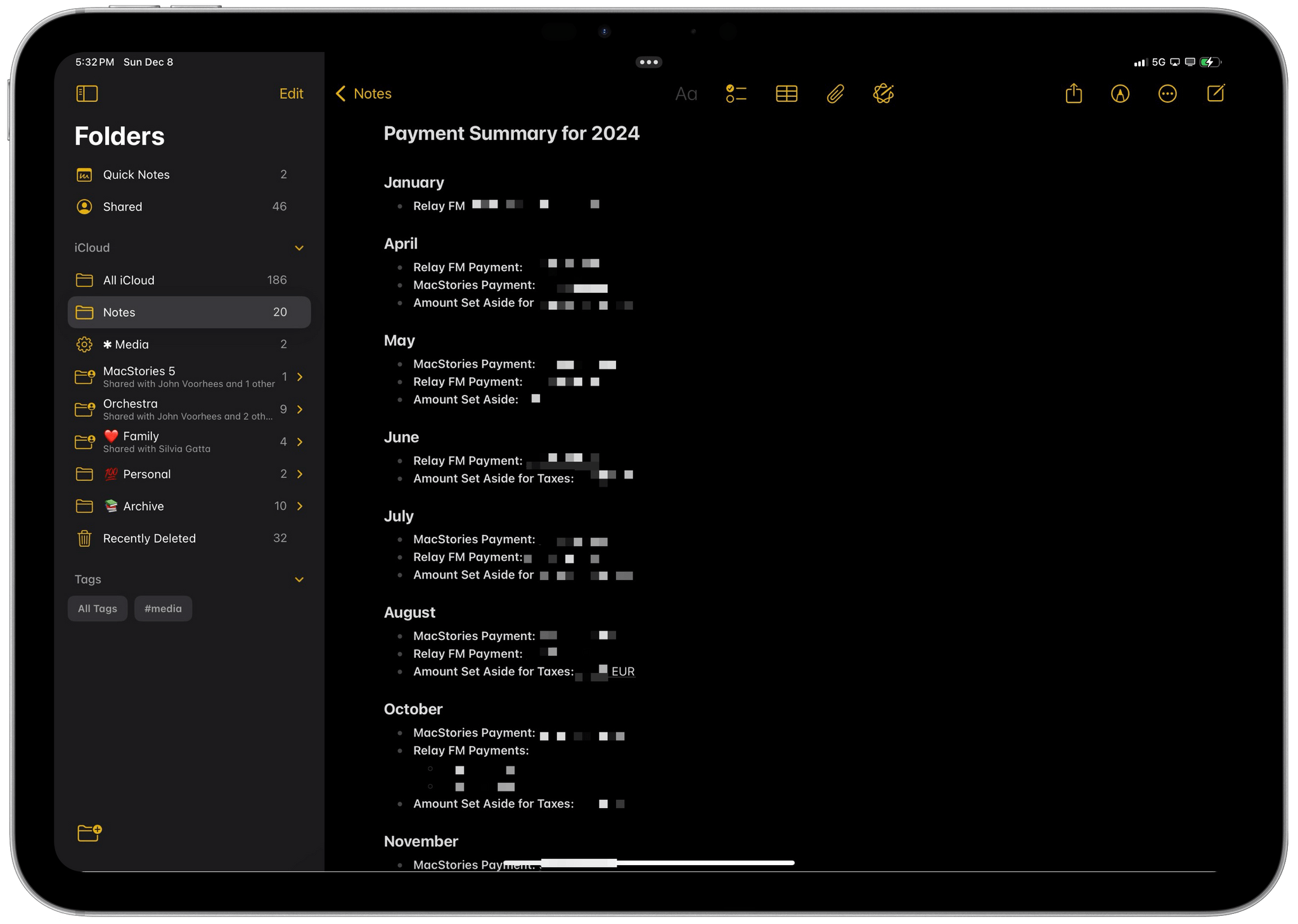

I hit Return, and after a few seconds, ChatGPT reworked my text with a consistent structure organized into sections with bullet points and proper currency formatting. I was immediately impressed, so I accepted the suggested result, and I ended up with the same note, elegantly formatted just like I asked.

This shouldn’t come as a surprise: ChatGPT – especially the GPT-4o model – is pretty good at working with numbers. Still, this is the sort of use case that makes me optimistic about this flavor of AI integration; I could have done this manually by carefully selecting text and making each line consistent, but it was going to be boring busywork that would have wasted a bunch of my time. And that’s time that is, frankly, better spent doing research, writing, or promoting my work on social media. Instead, Writing Tools and ChatGPT worked with my data, following a natural language query, and modified the contents of my note in seconds. Even better, after the note had been successfully updated, I was able to ask for additional information including averages, totals for each revenue source, and more. I could have done this in a spreadsheet, but I didn’t want to (and I also never understood formulas), and it was easier to do so with natural language in a popup menu of the Notes app.
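For comparison, the follow-up questions I asked (totals and averages per revenue source) boil down to very little arithmetic. Here is a minimal sketch in Python; the figures are hypothetical placeholders, not the numbers from my note, and `summarize` is a name I made up.

```python
from statistics import mean

# Hypothetical monthly payments in EUR; these are NOT real figures from the note.
payments = {
    "Relay FM": [1000.0, 1200.0, 1100.0],
    "MacStories": [2000.0, 2100.0, 1900.0],
}


def summarize(payments: dict[str, list[float]]) -> dict[str, dict[str, float]]:
    """Compute the total and the monthly average for each revenue source."""
    return {
        source: {"total": sum(amounts), "average": mean(amounts)}
        for source, amounts in payments.items()
    }
```

The point is not that this is hard to write, but that I didn't have to write it: the same questions worked as plain sentences typed into a popup in Notes.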

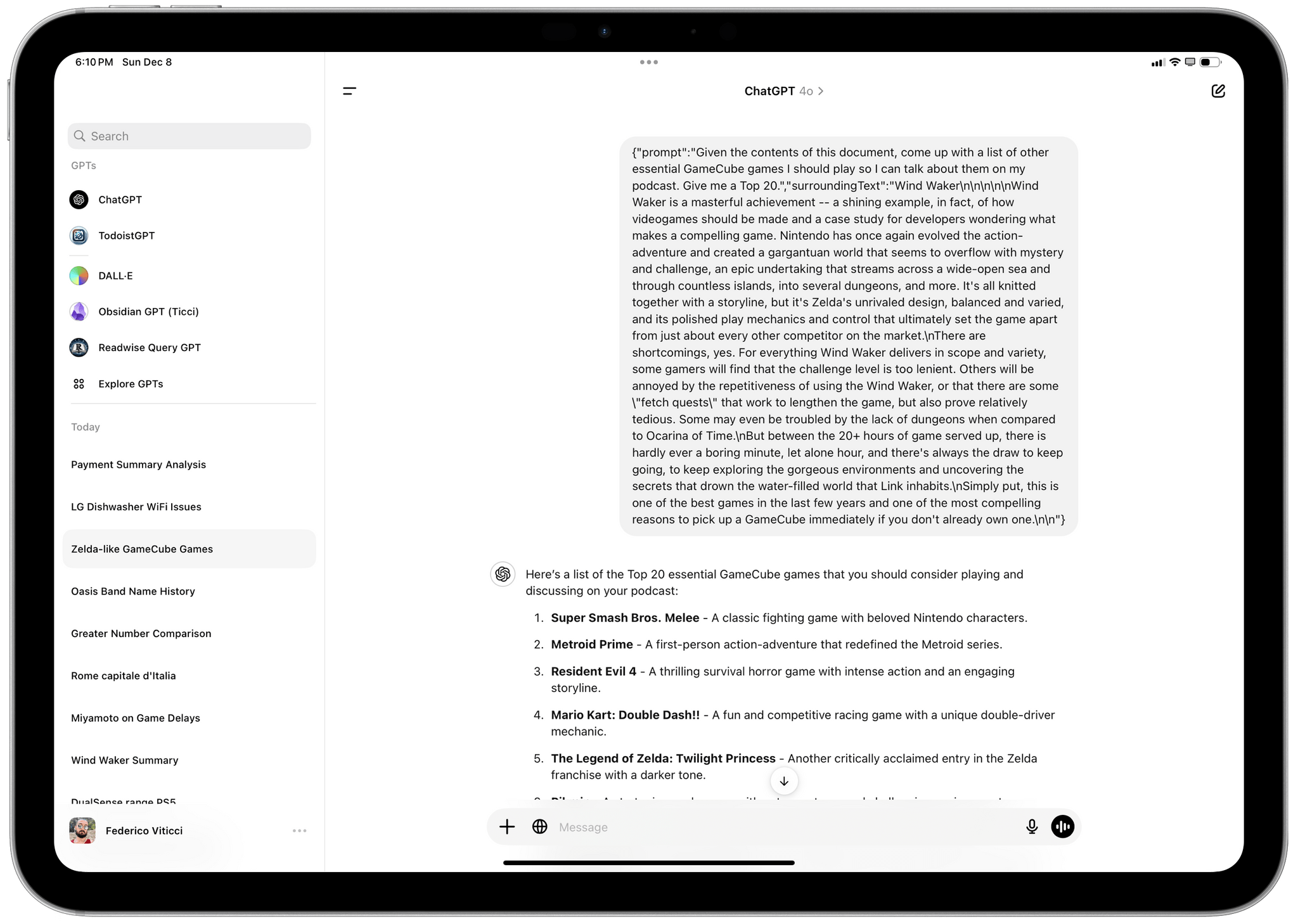

Fun detail: here’s how a request initiated from the Notes app gets synced to your ChatGPT account. Note the prompt and surroundingText keys of the JSON object the Notes app sends to ChatGPT.
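As a rough illustration of that shape, here is a sketch that builds an object with those two keys. Only the key names `prompt` and `surroundingText` come from what I saw in my ChatGPT account; the `build_payload` helper, the example values, and the assumption that there are no other fields are mine.

```python
import json


def build_payload(prompt: str, surrounding_text: str) -> str:
    """Serialize a request in the shape observed in the synced conversation.

    Assumption: the real object may carry more fields than these two.
    """
    payload = {
        "prompt": prompt,                     # what was typed in the Compose field
        "surroundingText": surrounding_text,  # the note's content, sent as context
    }
    return json.dumps(payload, ensure_ascii=False, indent=2)


print(build_payload("Can you reformat this note?", "Payments for this month..."))
```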

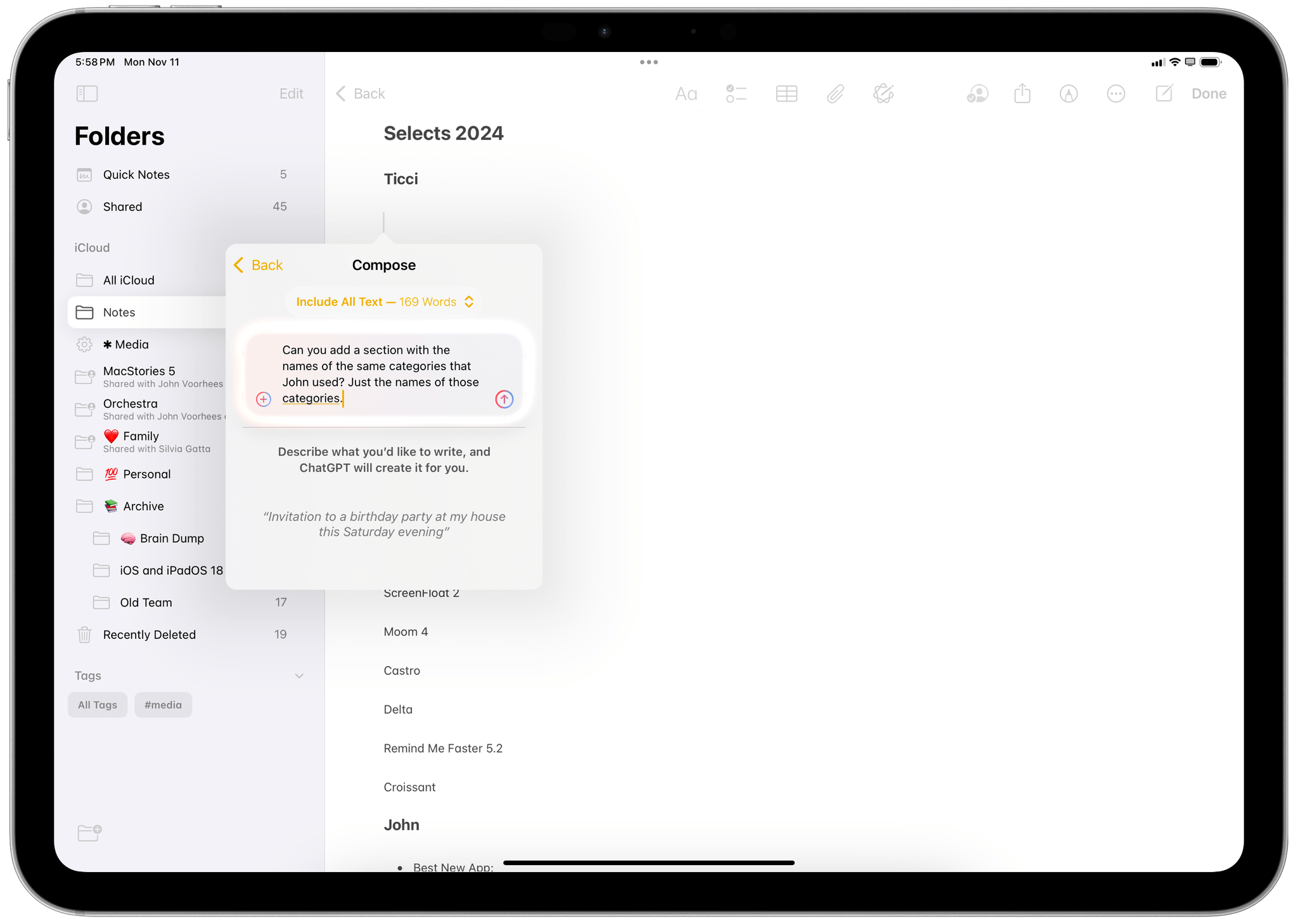

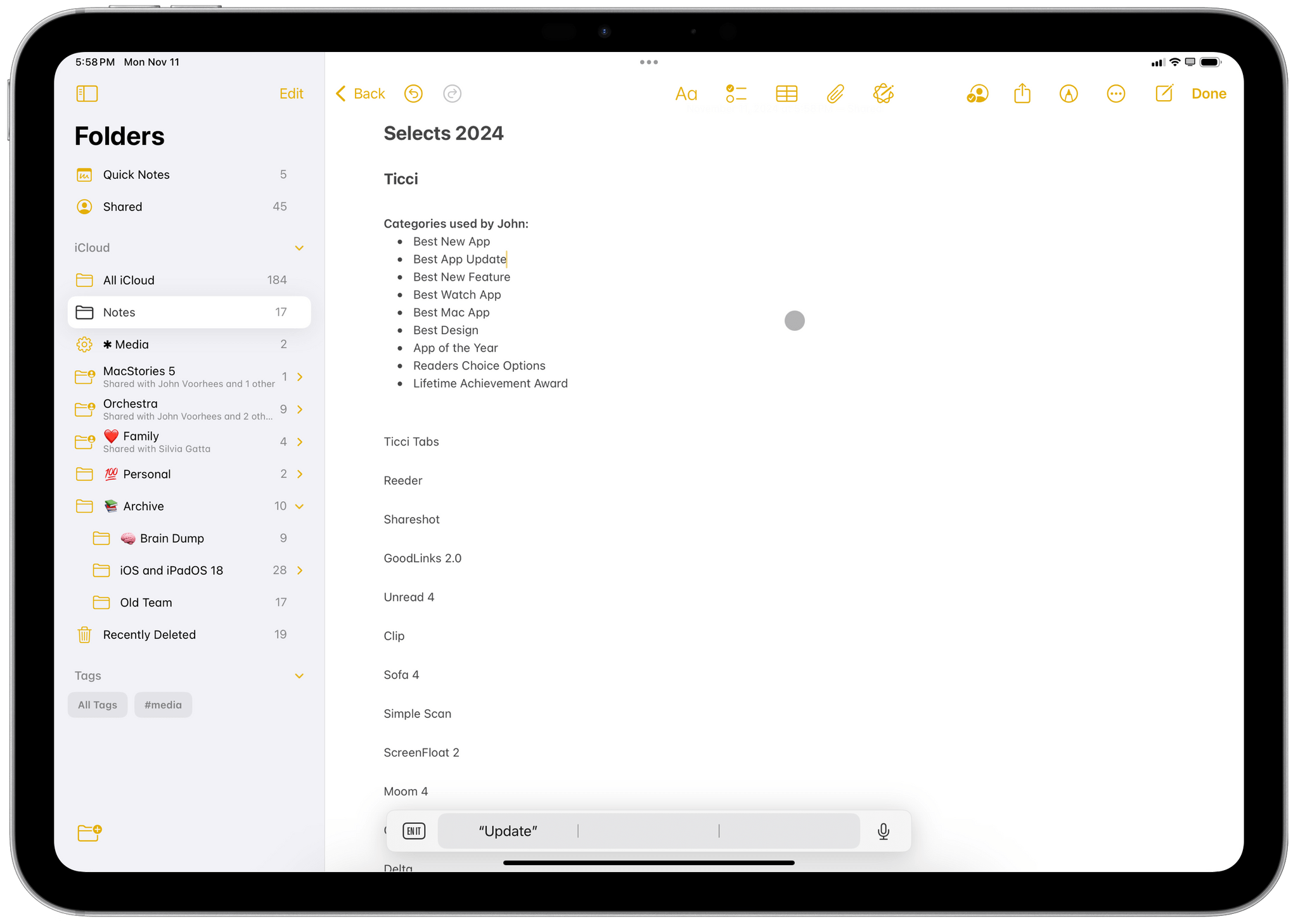

The second example of ChatGPT and Writing Tools applied to regular MacStories work involves our annual MacStories Selects awards. Before getting together with the MacStories team on a Zoom call to discuss our nominees and pick winners, we created a shared note in Apple Notes where different writers entered their picks. When I opened the note, I realized that I was behind others and forgot to enter the different categories of awards in my section of the document. So I invoked ChatGPT’s Compose menu under a section heading with my name and asked:

Can you add a section with the names of the same categories that John used? Just the names of those categories.

A few seconds later, Writing Tools pasted this section below my name:

This may seem like a trivial task, but I don’t think it is. ChatGPT had to evaluate a long list of sections (all formatted differently from one another), understand where the sections entered by John started and ended, and extract the names of categories, separating them from the actual picks under each category. Years ago, I would have had to do a lot of copying and pasting, type it all out manually, or write a shortcut with regular expressions to automate this process. Now, the “automation” takes place as a natural language command that has access to the contents of a note and can reformat it accordingly.
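To give you an idea of what the old regular expression approach might have looked like, here is a minimal sketch in Python. It assumes a much tidier note than the one we actually had: each writer's section starts with a line containing only their name, each category is written as `Category: Pick`, and sections are separated by a blank line. The `category_names` function and that format are hypothetical.

```python
import re


def category_names(note: str, author: str) -> list[str]:
    """Return the category names listed under one author's section.

    Assumes the hypothetical format described above; real shared notes
    are rarely this consistent, which is why this approach is brittle.
    """
    section = re.search(
        rf"^{re.escape(author)}[ \t]*\n(.*?)(?=\n[ \t]*\n|\Z)",
        note,
        flags=re.MULTILINE | re.DOTALL,
    )
    if section is None:
        return []
    # Keep only the text before the first colon on each line.
    return [
        match.group(1).strip()
        for match in re.finditer(r"^(.+?):", section.group(1), flags=re.MULTILINE)
    ]


note = "John\nBest New App: Foo\nBest App Update: Bar\n\nFederico\n"
print(category_names(note, "John"))
```

A pattern like this breaks as soon as someone formats their section differently, which is exactly the situation ChatGPT handled without complaint.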

As we’ll see below, there are plenty of scenarios in which Writing Tools, despite the assistance from ChatGPT, fails at properly integrating with the Notes app and understanding some of the finer details behind my requests. But given that this is the beginning of a new way to think about working with text in any text field (third-party developers can integrate with Writing Tools), I’m excited about the prospect of abstracting app functionalities and formatting my documents in a faster, more natural way.

The Limitations – and Occasional Surprises – of Siri’s Integration with ChatGPT

Having used ChatGPT extensively via its official app on my iPhone and iPad for the past month, one thing is clear to me: Apple has a long way to go if they want to match what’s possible with the standalone ChatGPT experience in their own Siri integration – not to mention with Siri itself without the help from ChatGPT.

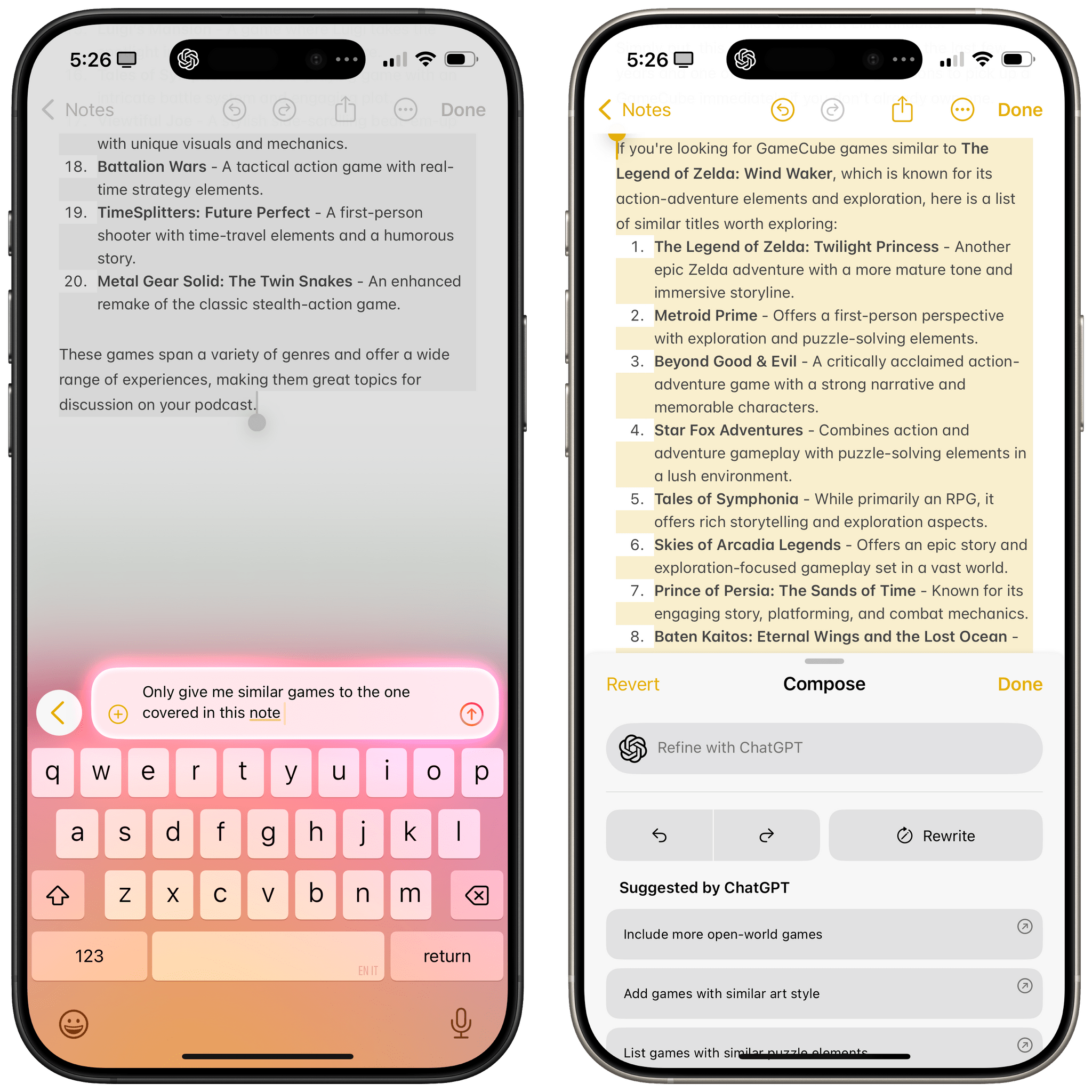

The elephant in the room here is the lack of a single, self-contained Siri LLM experience in the form of an app that can remember all of your conversations and keep the context of an ongoing conversation across multiple sessions. Today, Apple’s efforts to infuse Siri with more “Apple Intelligence” result in a scattershot implementation composed of disposable interactions that forgo the true benefits of LLMs, lacking a cohesive vision. It’s quite telling that the best part of the “new” Siri experience is the ChatGPT integration in 18.2, and even then, it’s no replacement for the full-featured ChatGPT app.

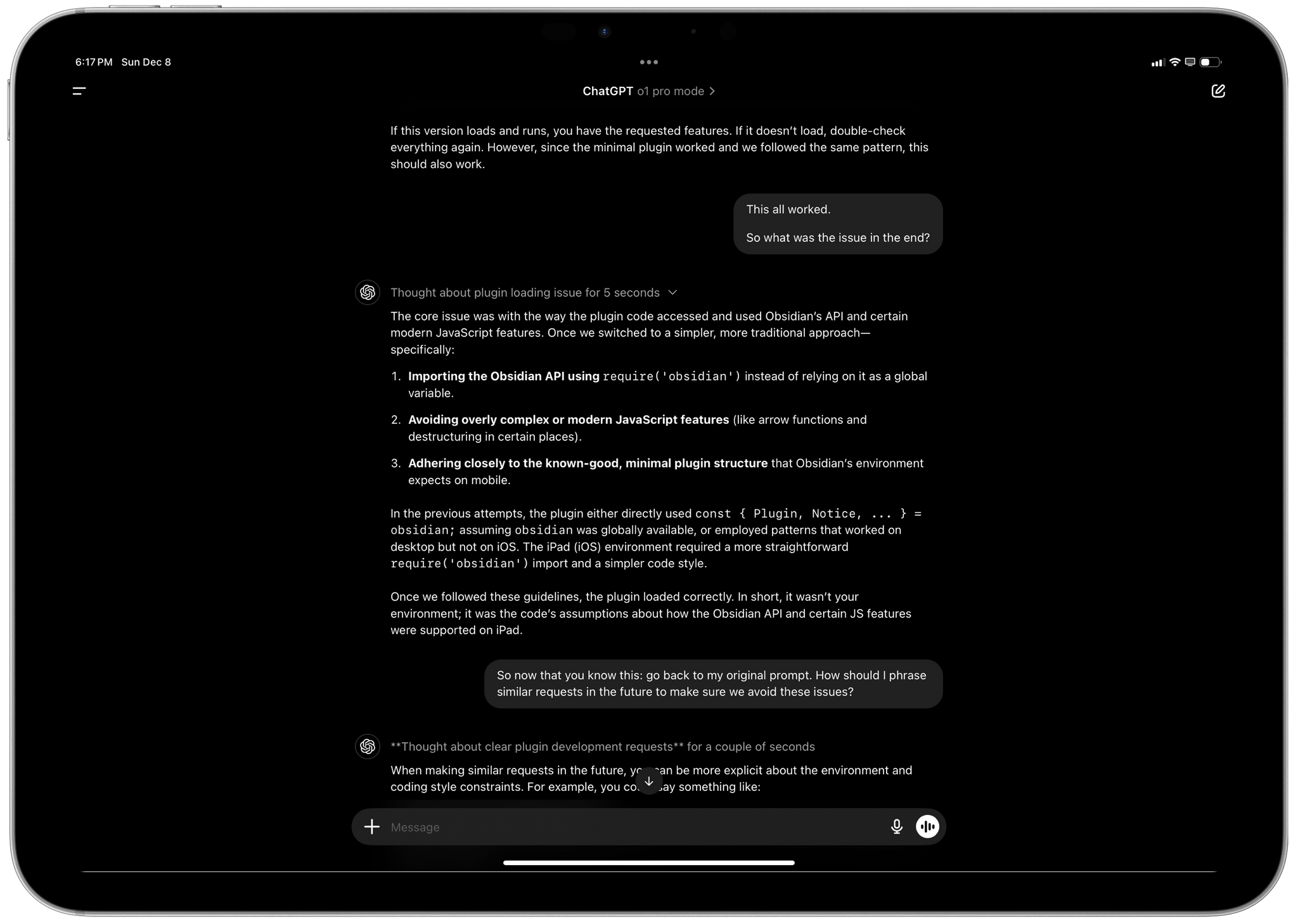

With ChatGPT on my iPhone and iPad, all my conversations and their full transcripts are saved and made accessible for later. I can revisit a conversation about any topic I’m researching with ChatGPT days after I started it and pick up exactly where I left off. Even while I’m having a conversation with ChatGPT, I can look further up in the transcript and see what was said before I continue asking anything else. The whole point of modern LLMs is to facilitate this new kind of computer-human conversation where the entire context can be referenced, expanded upon, and queried.

Siri still doesn’t have any of this – and that’s because it really isn’t based on an LLM yet. While Siri can hold some context of a conversation while traversing from question to question, it can’t understand longer requests written in natural language that reference a particular point of an earlier request. It doesn’t show you the earlier transcript, whether you’re talking or typing to it. By and large, conversations in Siri are still ephemeral. You ask a question, get a response, and can ask a follow-up question (but not always); as soon as Siri is dismissed, though, the entire conversation is gone.

As a result, the ChatGPT integration in iOS 18.2 doesn’t mean that Siri can now be used for production workflows where you want to hold an ongoing conversation about a topic or task and reference it later. ChatGPT is the shoulder for Siri to temporarily cry on; it’s the guardian parent that can answer basic questions in a better way than before while ultimately still exposing the disposable, inconsistent, impermanent Siri that is far removed from the modern experience of real LLMs.

Do not expect the same chatbot experience as Claude (left) or ChatGPT (right) with the new ChatGPT integration in Siri.

Or, taken to a bleeding-edge extreme, do not expect the kind of long conversations with context recall and advanced reasoning you can get with ChatGPT’s most recent models in the updated Siri for iOS 18.2.

But let’s disregard for a second the fact that Apple doesn’t have a Siri LLM experience comparable to ChatGPT or Claude yet, assume that’s going to happen at some point in 2026, and remain optimistic about Siri’s future. I still believe that Apple isn’t taking advantage of ChatGPT enough and could do so much more to make iOS 18 seem “smarter” than it actually is while relying on someone else’s intelligence.

Unlike other AI companies, Apple has a moat: they make the physical devices we use, create the operating systems, and control the app ecosystem. Thus, Apple has an opportunity to leverage deep, system-level integrations between AI and the apps billions of people use every day. This is the most exciting aspect of Apple Intelligence; it’s a bummer that, despite the help from ChatGPT, I’ve only seen a handful of instances in which AI results can be used in conjunction with apps. Let me give you some examples and comparisons between ChatGPT and Siri to show you what I mean.

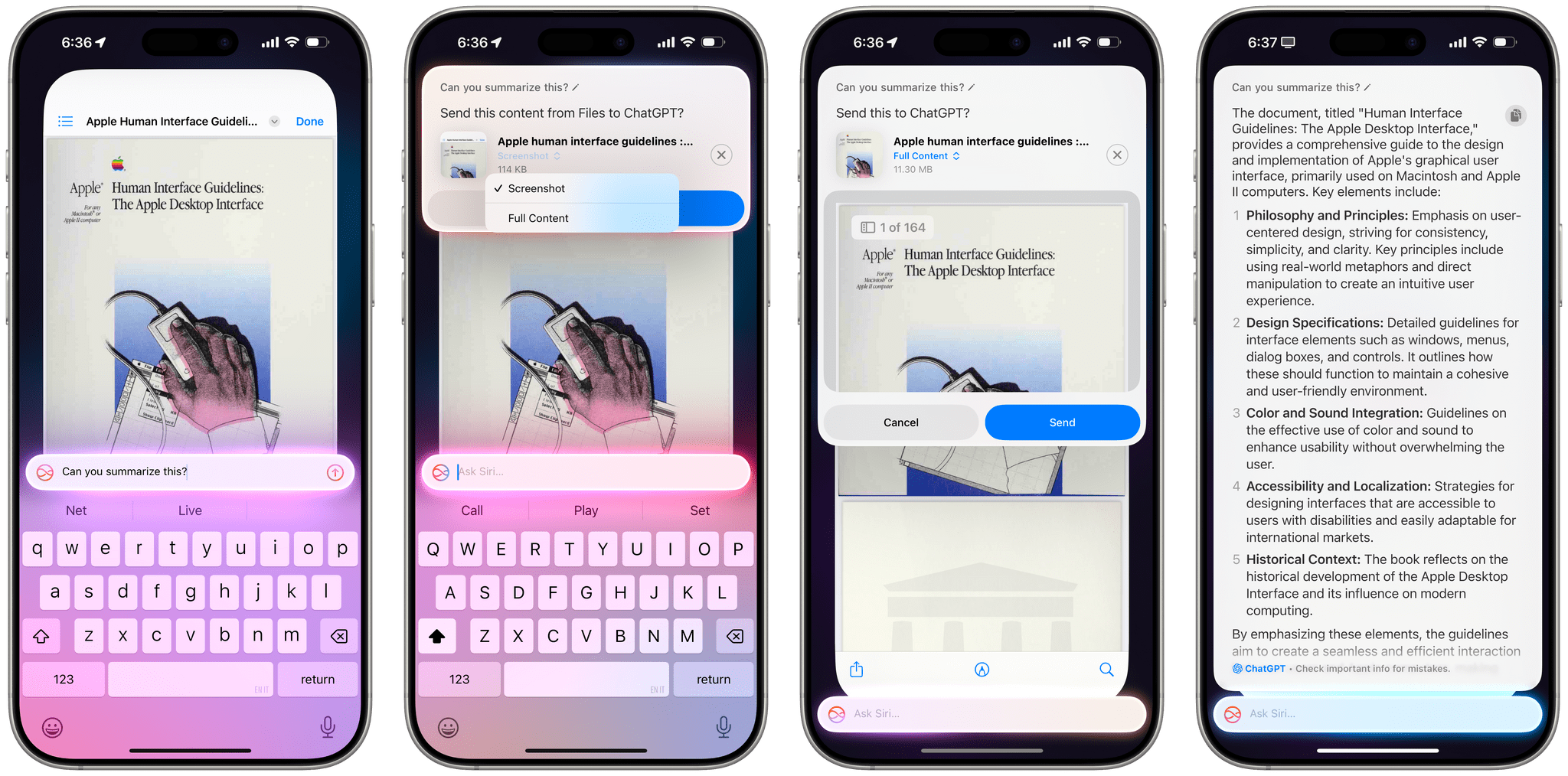

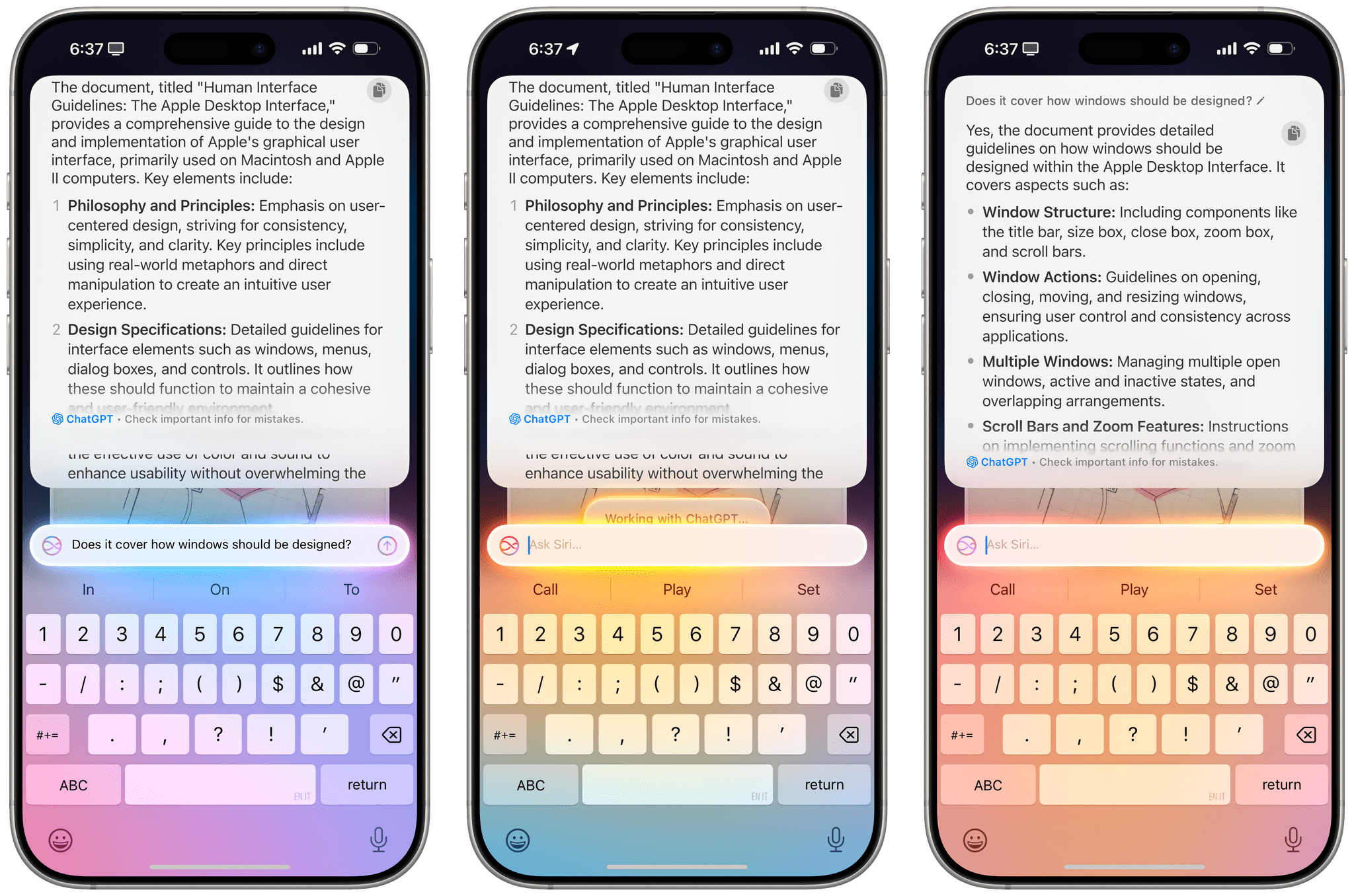

In addition to text requests, ChatGPT has been integrated with image and file uploads across iOS and iPadOS. For example, if you have a long PDF document you want to summarize, you can ask Siri to give you a summary of it, and the assistant will display a file upload popup that says the item will be sent to ChatGPT for analysis.

In this popup, you can choose the type of file representation you want to send: you can upload a screenshot of a document to ChatGPT directly from Siri, or you can give it the contents of the entire document. This technique isn’t limited to documents, nor is it exclusive to the style of request I mentioned above. Any time you invoke Siri while looking at a photo, webpage, email message, or screenshot, you can make requests like…

- “What am I looking at here?”

- “What does this say?”

- “Take a look at this and give me actionable items.”

…and ChatGPT will be summoned – even without explicitly saying, “Ask ChatGPT…” – with the file upload permission prompt. As of iOS and iPadOS 18.2, you can always choose between sending a copy of the full content of an item (usually as a PDF) or a screenshot of just what’s shown on-screen.

In any case, after a few seconds, ChatGPT will provide a response based on the file you gave it, and this is where things get interesting – in both surprising and disappointing ways.

You can also ask follow-up questions after the initial file upload, but you can’t scroll back to see previous responses.

By default, you’ll find a copy button in the notification with the ChatGPT response, so that’s nice. Between the Side button, Type to Siri (which also got a Control Center control in 18.2), and the copy button next to responses, the iPhone now has the fastest way to go from a spoken/typed request to a ChatGPT response copied to the clipboard.

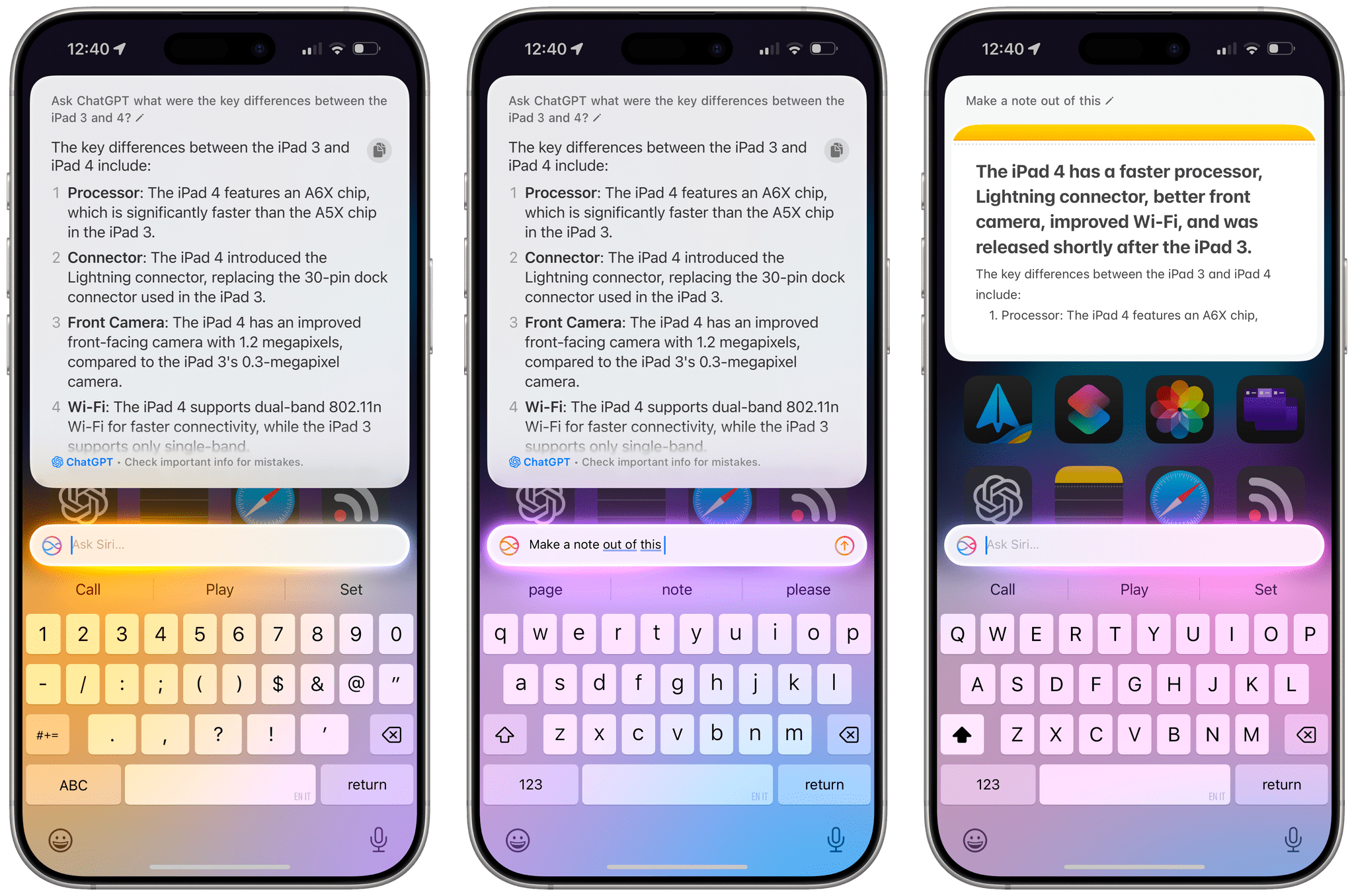

But what if you want to do more with a response? In iOS and iPadOS 18.2, you can follow up on a ChatGPT response with, “Make a note out of this”, and the response will be saved as a new note in the Notes app with a nice UI shown in the Siri notification.

This surprised me, and it’s the sort of integration that makes me hopeful about the future role of an LLM on Apple platforms – a system that can support complex conversations while also sending off responses into native apps.

Sadly, this is about as far as Apple’s integration between ChatGPT and apps went for this release. Everything else that I tried did not work, in the sense that Siri either didn’t understand what I was asking for or ChatGPT replied that it didn’t have enough access to my device to perform that action.

Specifically:

- If instead of, “Make a note”, I asked to, “Append this response to my note called [Note Title]”, Siri didn’t understand me, and ChatGPT said it couldn’t do it.

- When I asked ChatGPT to analyze the contents of my clipboard, it said it couldn’t access it.

- When I asked to, “Use this as input for my [shortcut name] shortcut”, ChatGPT said it couldn’t run shortcuts.

Why is it that Apple is making a special exception for creating notes out of responses, but nothing else works? Is this the sort of thing that will magically get better once Apple Intelligence gets connected to App Intents? It’s hard to tell right now.

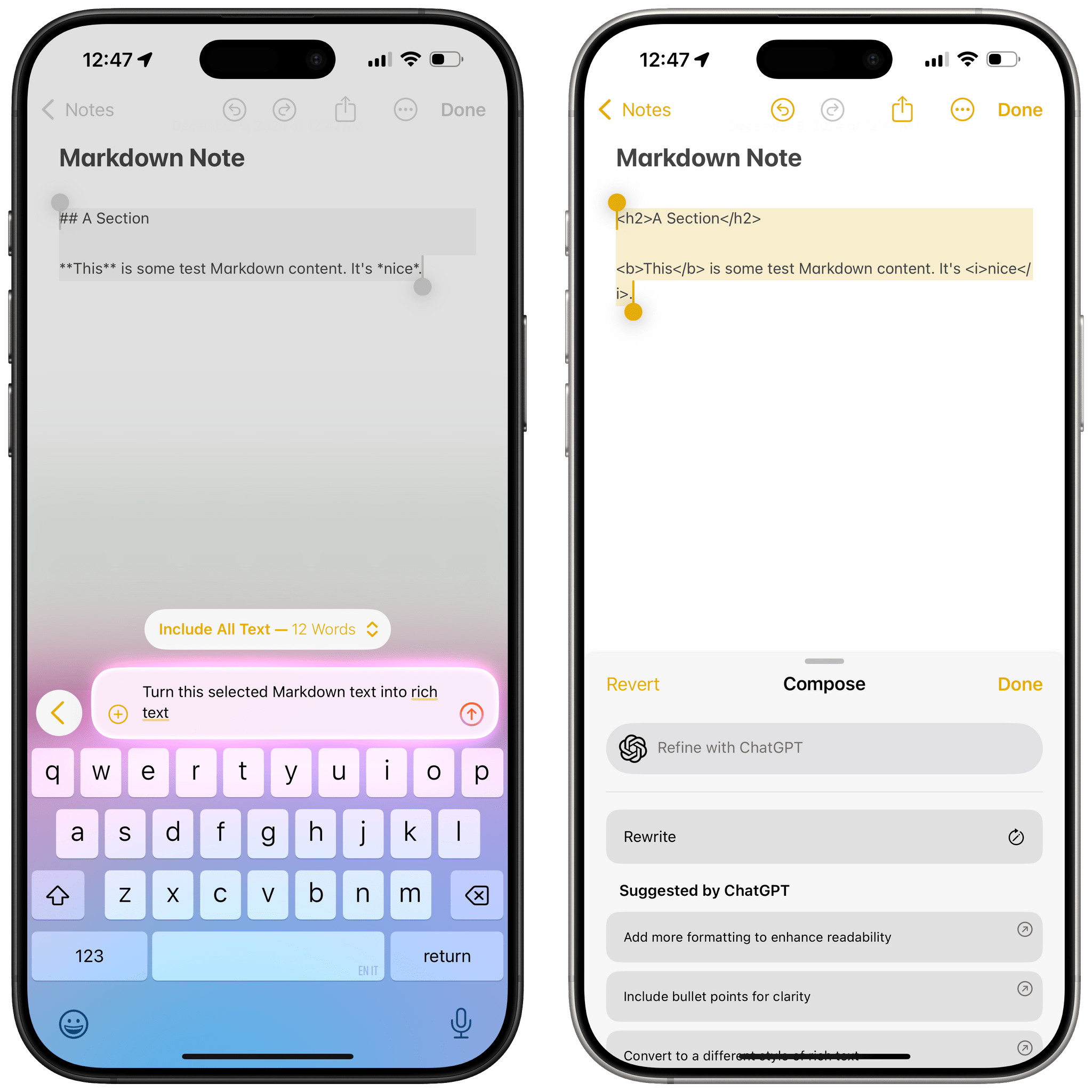

The lackluster integration between ChatGPT and native system functions goes beyond Siri responses and extends to Writing Tools. When I attempted to go even slightly beyond the guardrails of the Compose feature, things got weird:

- Remember the Payments note I was so impressed with? When I asked ChatGPT in the Compose field to, “Make a table out of this”, it did generate a result…as a plain text list without the proper formatting for a native table in the Notes app.

- When I asked ChatGPT to, “Turn this selected Markdown into rich text”, it performed the conversion correctly – except that Notes pasted the result as raw HTML in the body of the note.

- ChatGPT can enter and reformat headings inside a note, but they’re in a different format than the Notes app’s native ‘Heading’ style. I have no idea where that formatting style is coming from.

When I asked Apple Intelligence to convert Markdown to rich text, it asked me to do it with ChatGPT instead.

Clearly, Apple has some work to do if they want to match user requests with the native styling and objects supported by the Notes app. But that’s not the only area where I’ve noticed a disparity between Siri and ChatGPT’s capabilities, resulting in a strange mix of interactions when the two are combined.

One of my favorite features of ChatGPT’s website and app is the ability to store bits of data in a personal memory that can be recalled at any time. Memories can be used to provide further context to the LLM in future requests as well as to jot down something that you want to remember later. Alas, ChatGPT accessed via Siri can’t retrieve the user’s personal memories, despite the ability to log into your ChatGPT account and save conversations you have with Siri. When asked to access my memory, ChatGPT via Siri responds as such:

I’m here to assist you by responding to your questions and requests, but I don’t have the ability to access any memory or personal data. I operate only within the context of our current conversation.

That’s too bad, and it only exacerbates the fact that Apple is limited to an à la carte assistant that doesn’t really behave like an LLM (because it can’t).

The most ironic part of the Siri-ChatGPT relationship, however, is that Siri is not multilingual, but ChatGPT is, so you can use OpenAI’s assistant to fill a massive hole in Siri’s functionality via some clever prompting.

My Siri is set to English, but if I ask it in Italian, “Chiedi a ChatGPT” (“Ask ChatGPT”), followed by an Italian request, “Siri” will respond in Italian since ChatGPT – in addition to different modalities – also supports hopping between languages in the same conversation. Even if I take an Italian PDF document and tell Siri in English to, “Ask ChatGPT to summarize this in its original language”, that’s going to work.

Speaking as a bilingual person, this is terrific – but at the same time, it underlines how deeply ChatGPT puts Siri to shame when it comes to being more accessible for international users. What’s even funnier is that Siri tries to tell me I’m wrong when I’m typing in Italian in its English text field (and that’s in spite of the new bilingual keyboard in iOS 18), but when the request is sent off to ChatGPT, it doesn’t care.

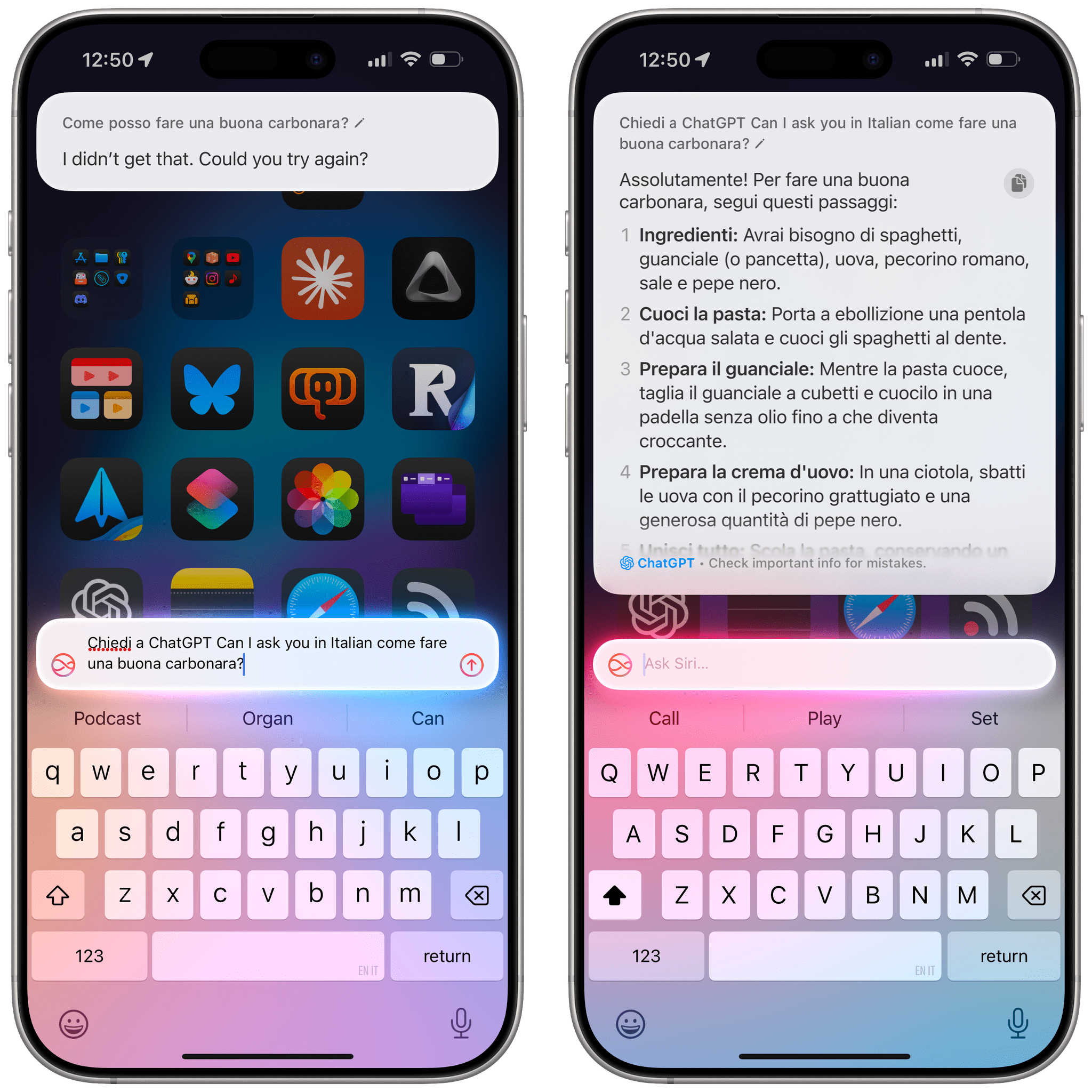

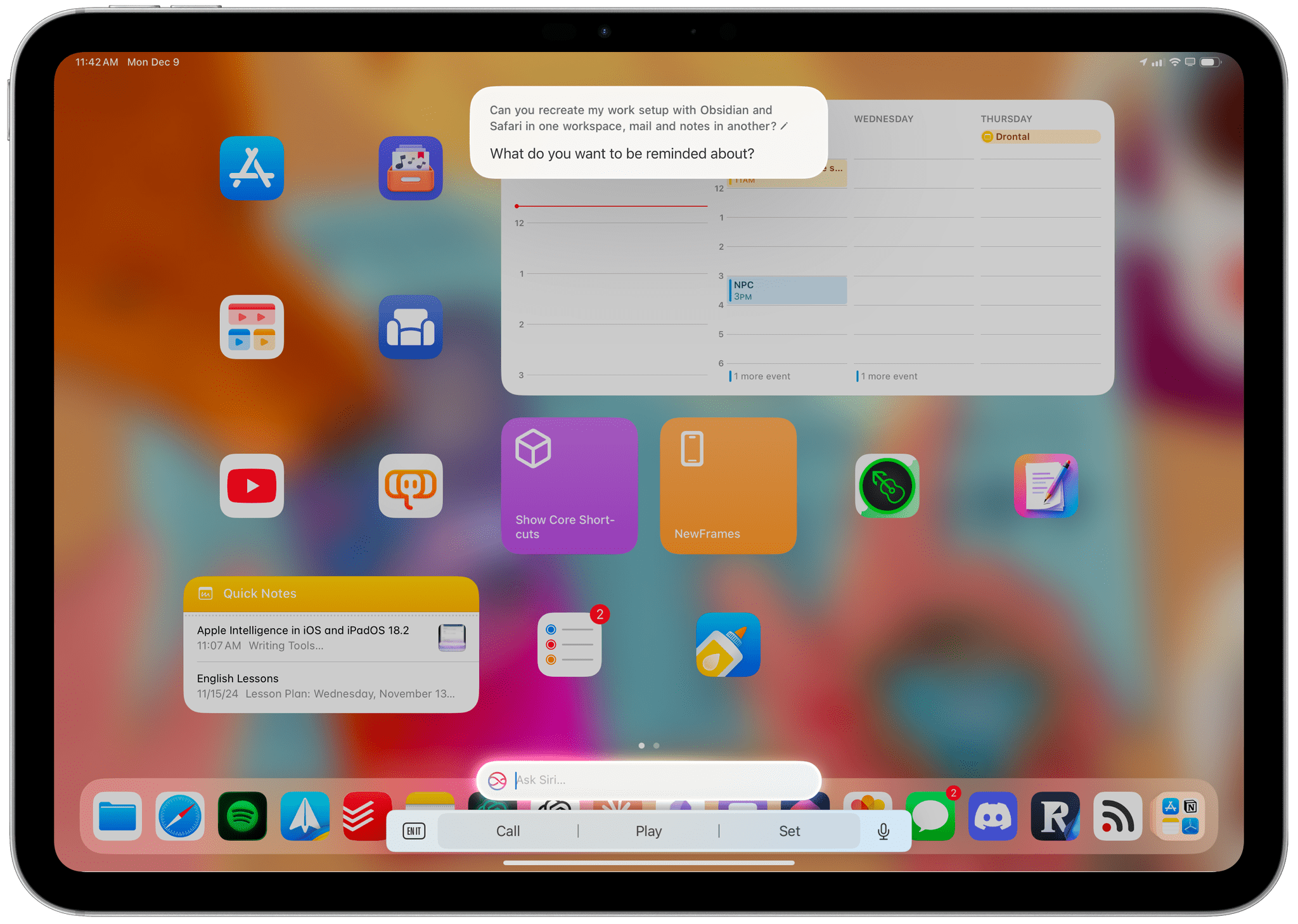

I want to wrap up this section with an example of what I mean by assistive AI with regard to productivity and why I now believe so strongly in the potential to connect LLMs with apps.

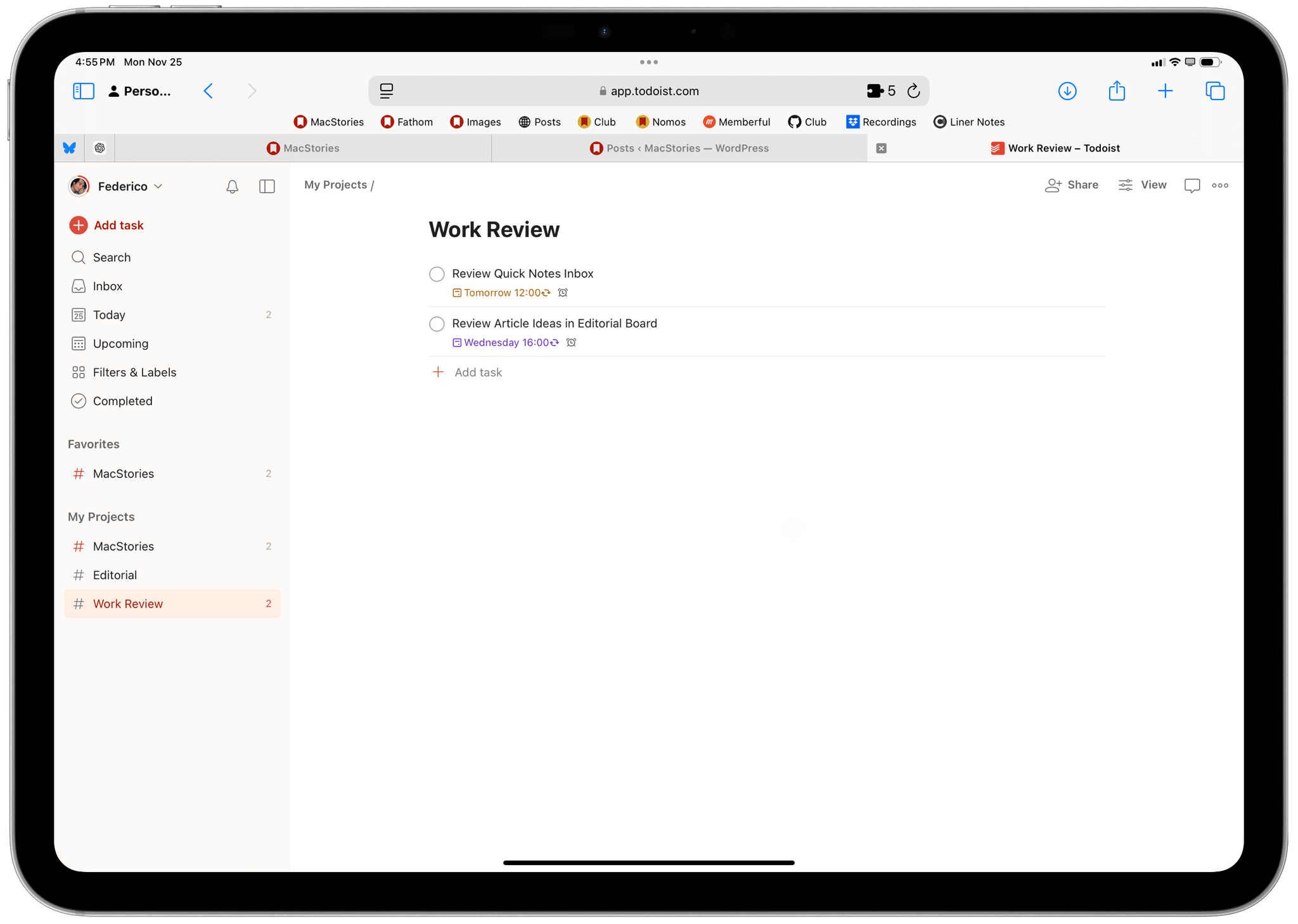

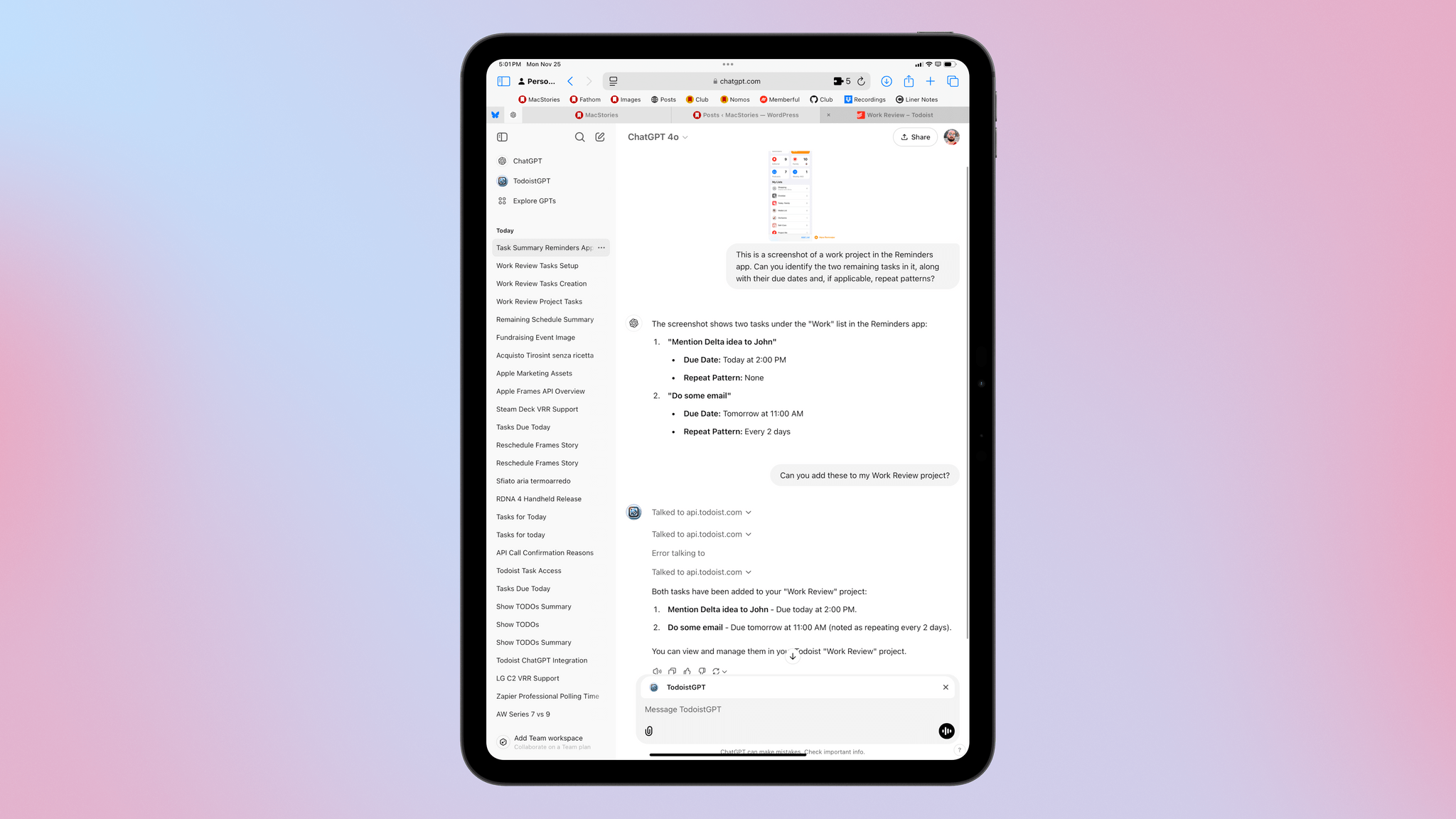

I’ve been trying Todoist again lately, and I discovered the existence of a TodoistGPT extension for ChatGPT that lets you interact with the task manager using ChatGPT’s natural language processing. So I had an idea: what if I took a screenshot of a list in the Reminders app and asked ChatGPT to identify the tasks in it and recreate them with the same properties in Todoist?

I asked:

This is a screenshot of a work project in the Reminders app. Can you identify the two remaining tasks in it, along with their due dates and, if applicable, repeat patterns?

ChatGPT identified them correctly, parsing the necessary fields for title, due date, and repeat pattern. I then followed up by asking:

Can you add these to my Work Review project?

And, sure enough, the tasks found in the image were recreated as new tasks in my Todoist account.

In ChatGPT, I was able to use its vision capabilities to extract tasks from a screenshot, then invoke a custom GPT to recreate them with the same properties in Todoist.

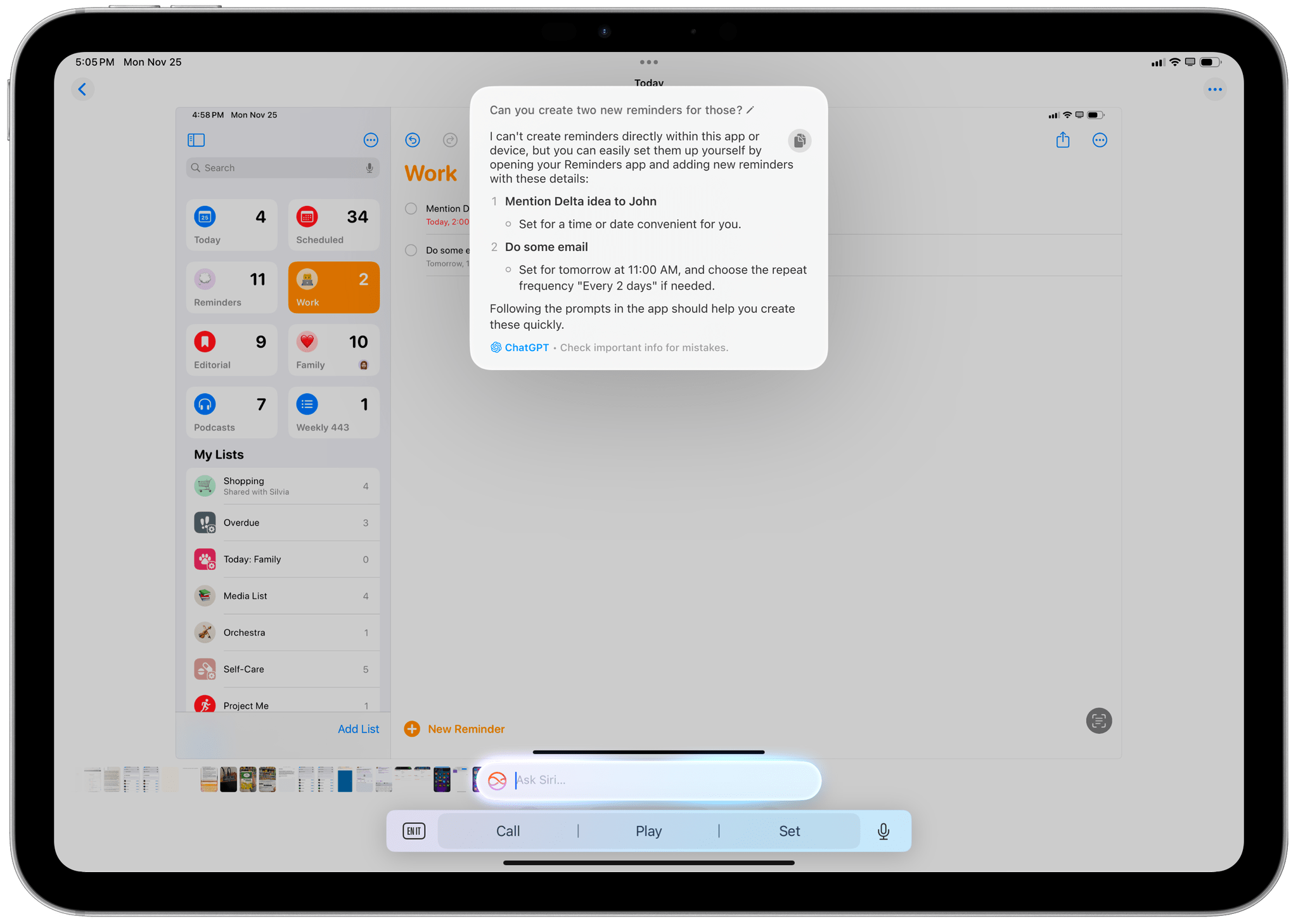

Right now, Siri can’t do this. Even though the ChatGPT integration can recognize the same tasks, asking Siri a follow-up question to add those tasks to Reminders in a different list will fail.

Meanwhile, ChatGPT can perform the same image analysis via Siri, but the resulting text is not actionable at all.

Think about this idea for a second: in theory, the web-based integration I just described is similar to the scenario Apple is proposing with App Intents and third-party apps in Apple Intelligence. Apple has the unique opportunity to leverage the millions of apps on the App Store – and the multiple thousands that will roll out App Intents in the short term – to quickly spin up an ecosystem of third-party integrations for Apple Intelligence via the apps people already use on their phones.

How will that work without a proper Siri LLM? How flexible will the app domains supported at launch be in practice? It’s hard to tell now, but it’s also the field of Apple Intelligence that – unlike gross and grotesque image generation features – has my attention.

Visual Intelligence

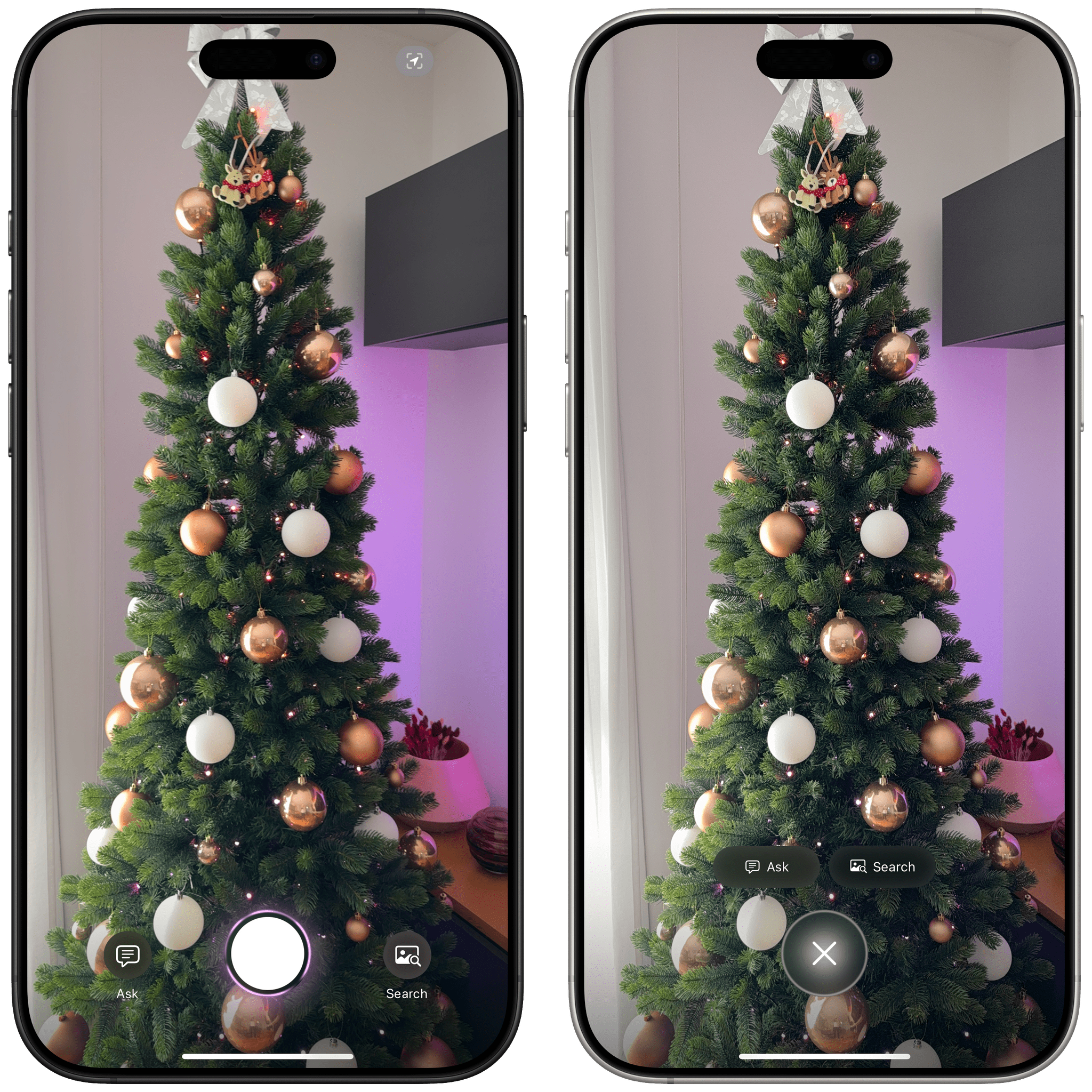

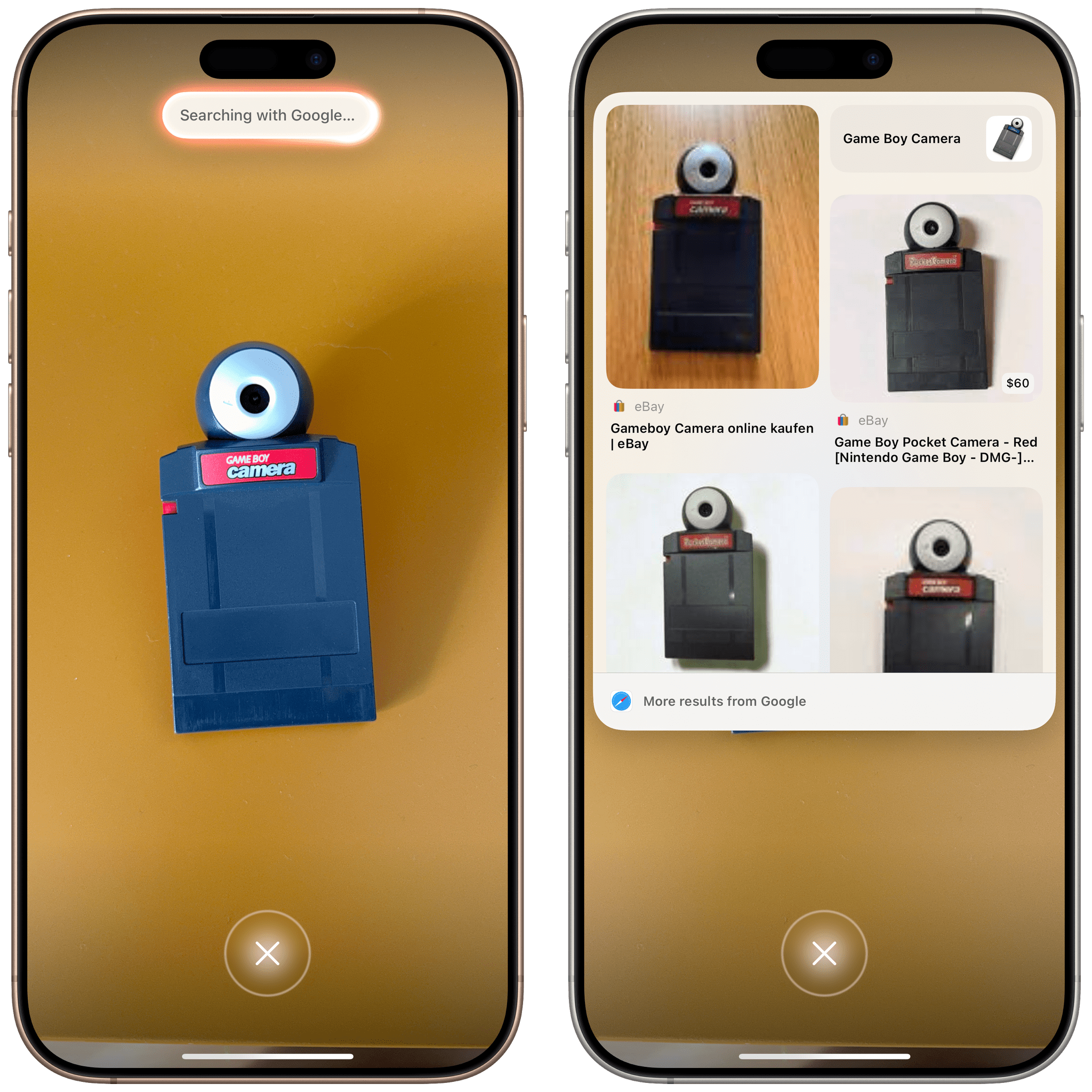

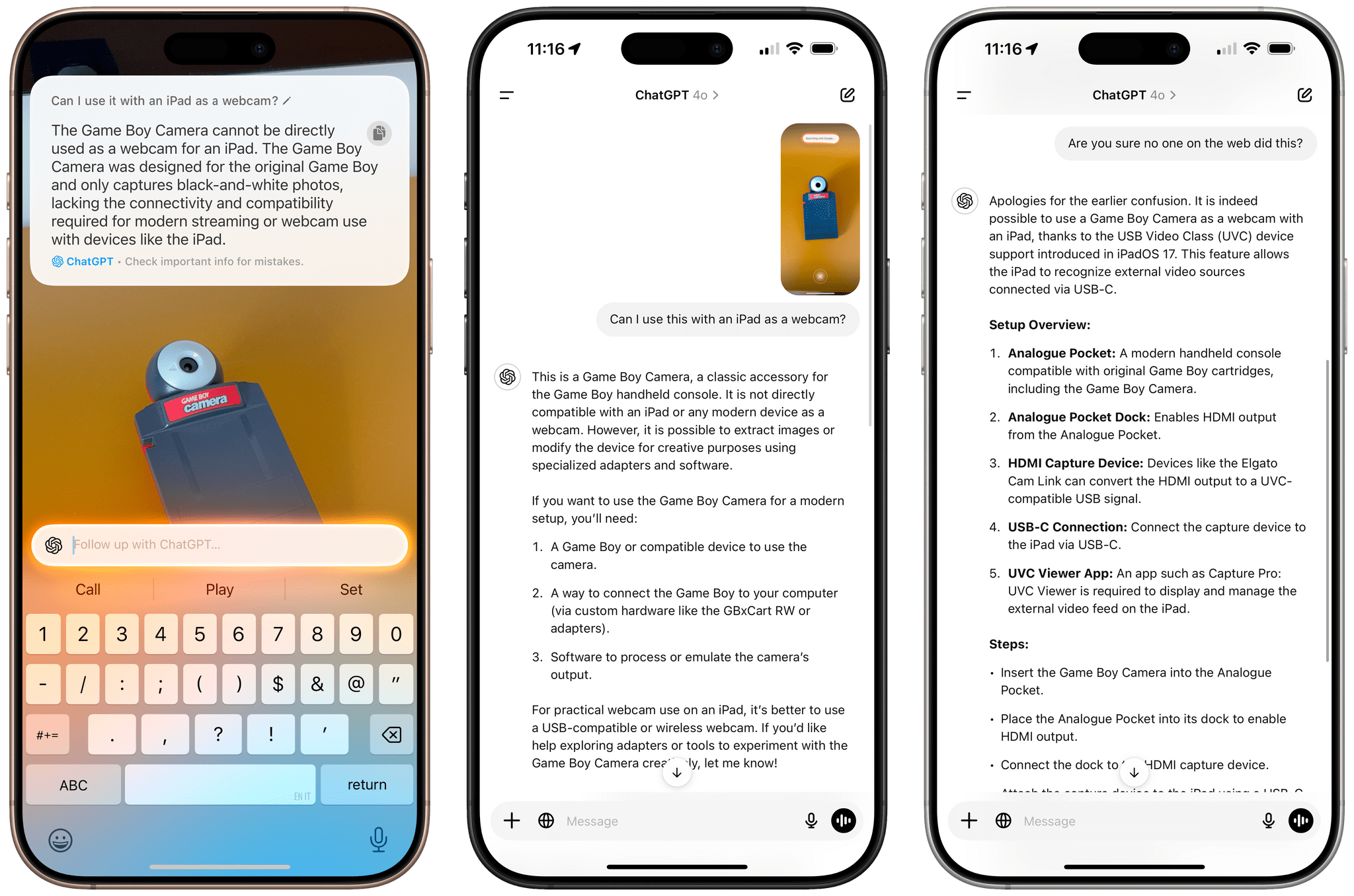

The other area of iOS that now features ChatGPT integration is Visual Intelligence. Originally announced in September, Visual Intelligence is a new Camera Control mode and, as such, exclusive to the new iPhone 16 family of devices.

With Visual Intelligence, you can point your iPhone’s camera at something and get information about what’s in frame from either ChatGPT or Google search – the first case of two search providers embedded within the same Apple Intelligence functionality of iOS. Visual Intelligence is not a real-time camera view that can overlay information on top of a live camera feed; instead, it freezes the frame and sends a picture to ChatGPT or Google, without saving that image to your photo library.

The interactions of Visual Intelligence are fascinating, and an area where I think Apple did a good job picking a series of reasonable defaults. You activate Visual Intelligence by long-pressing on Camera Control, which reveals a new animation that combines the glow effect of the new Siri with the faux depressed button state first seen with the Action and volume buttons in iOS 18. It looks really nice. After you hold down for a second, you’ll feel some haptic feedback, and the camera view of Visual Intelligence will open in the foreground.

The Visual Intelligence animation.

Once you’re in camera mode, you have two options: you either manually press the shutter button to freeze the frame then choose between ChatGPT and Google, or you press one of those search providers first, and the frame will be frozen automatically.

Google is the easier integration to explain here. It’s basically reverse image search built into the iPhone’s camera and globally available via Camera Control. I can’t tell you how many times my girlfriend and I rely on Google Lens to look up outfits we see on TV, furniture we see in magazines, or bottles of wine, so having this built into iOS without having to use Google’s iPhone app is extra nice. Results appear in a popup inside Visual Intelligence, and you can pick one to open it in Safari. As far as integrating Google’s reverse image search with the operating system goes, Apple has pretty much nailed the interaction here.

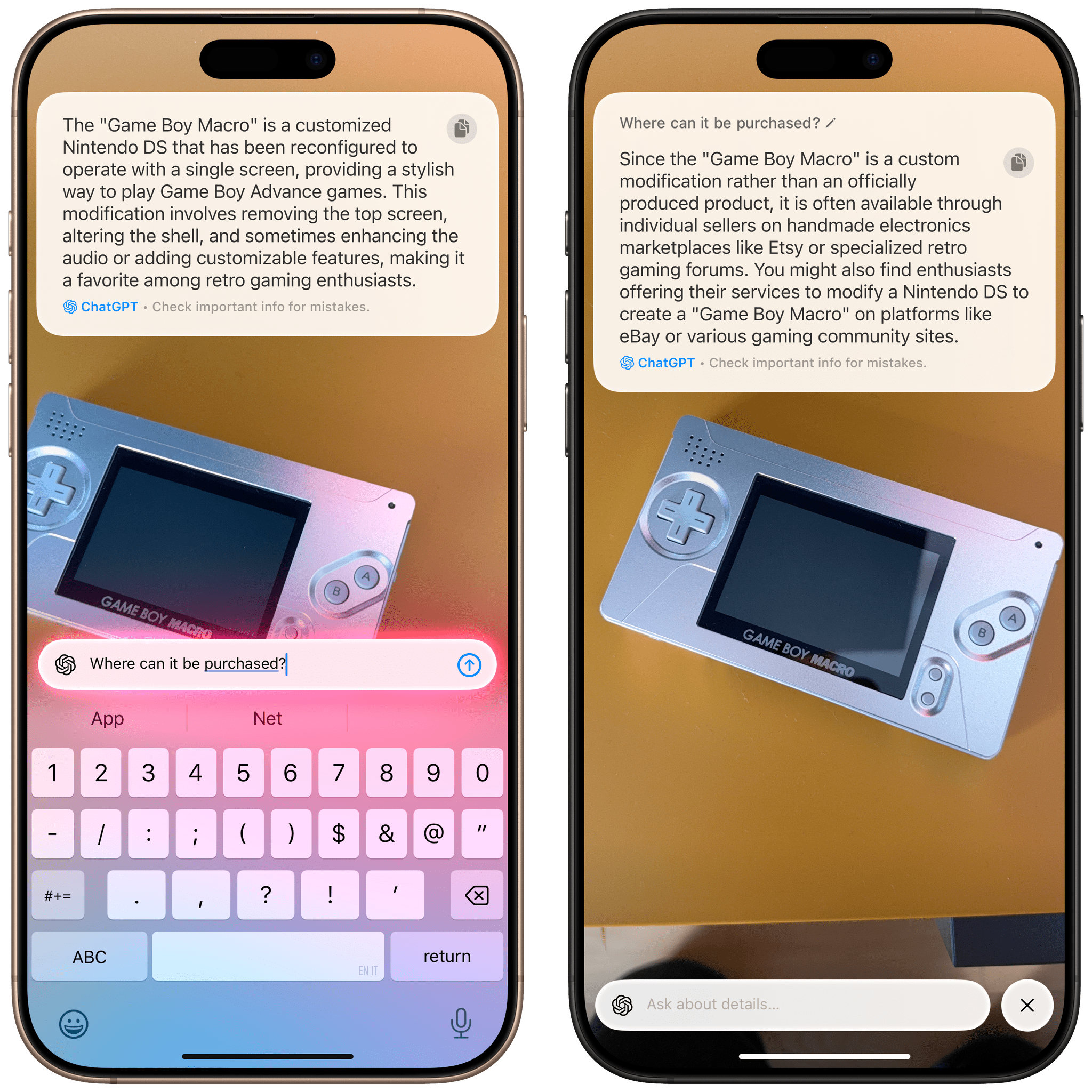

ChatGPT has been equally well integrated with the Visual Intelligence experience. By default, when you press the ‘Ask’ button, ChatGPT will instantly analyze the picture and describe what you’re looking at, so you have a starting point for the conversation. The whole point of this feature, in fact, is to be able to inquire about additional details or use the picture as visual context for a request you have.

![My NPC co-hosts still don't know anything about this new handheld, and ChatGPT's response is correct.](https://cdn.macstories.net/monday-09-dec-2024-11-17-55-1733739482885.png)

My NPC co-hosts still don’t know anything about this new handheld, and ChatGPT’s response is correct.

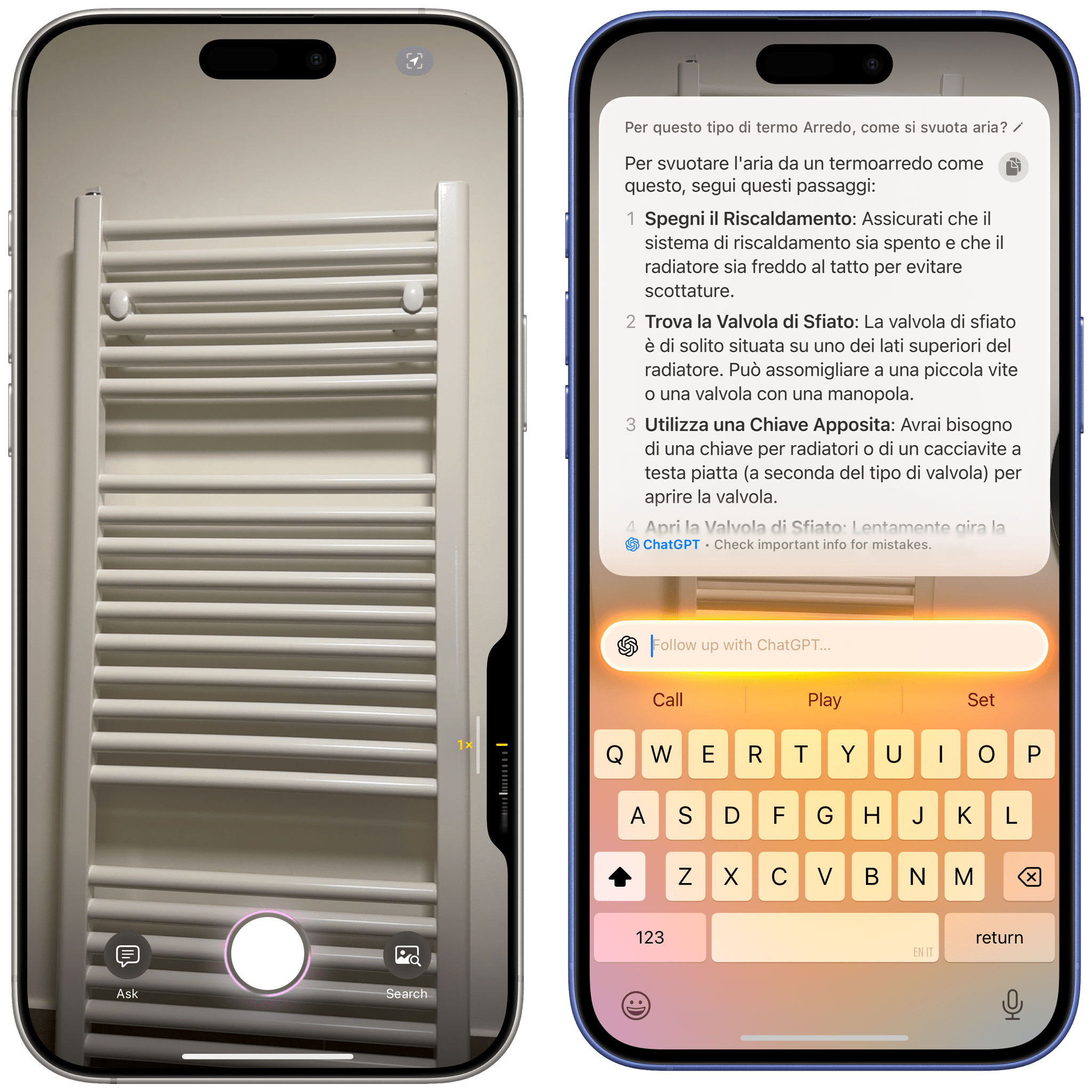

I’ll give you an example. A few days ago, Silvia and I noticed that the heated towel rail in our bathroom was making a low hissing noise. There were clearly valves we were supposed to operate to let air out of the system, but I wanted to be sure because I’m not a plumber. So I invoked Visual Intelligence, took a picture, and asked ChatGPT – in Italian – how I was supposed to let the air out. Within seconds, I got the confirmation I was looking for: I needed to turn the valve in the upper left corner.

I can think of plenty of other scenarios in everyday life where the ability to ask questions about what I’m looking at may be useful. Whether you’re looking up instructions to operate different types of equipment, dealing with recipes, learning more about landmarks, or translating signs and menus in a different country, there are clear, tangible benefits when it comes to augmenting vision with the conversational knowledge of an LLM.

By default, ChatGPT doesn’t have access to web search in Visual Intelligence. If you want to continue a request by looking up web results, you’ll have to use the ChatGPT app.

Right now, all Apple Intelligence queries to ChatGPT are routed to the GPT-4o model; I can imagine that, with the o1 model now supporting image uploads, Apple may soon offer the option to enable slower but more accurate visual responses powered by advanced reasoning. In my tests, GPT-4o has been good enough to address the things I was showing it via Visual Intelligence. It’s a feature I plan to use often – certainly more than the other (confusing) options of Camera Control.

The Future of a Siri LLM

Looking ahead at the next year, it seems clear that Apple will continue taking a staged approach to evolving Apple Intelligence in their bid to catch up with OpenAI, Anthropic, Google, and Meta.

Within the iOS 18 cycle, we’ll see Siri expand its on-screen vision capabilities and gain the ability to draw on users’ personal context; then, Apple Intelligence will be integrated with commands from third-party apps based on schemas and App Intents; according to rumors, this will culminate with the announcement of a second-generation Siri LLM at WWDC 2025 that will feature a more ChatGPT-like assistant capable of holding longer conversations and perhaps storing them for future access in a standalone app. We can speculatively assume that Siri LLM will be showcased at WWDC 2025 and released in the spring of 2026.

Taking all this into account, it’s evident that, as things stand today, Apple is two years behind their competitors in the AI chatbot space. Training large language models is a time-consuming, expensive task that is ballooning in cost and, according to some, leading to diminishing returns as a byproduct of scaling laws.

Today, Apple is stuck between the proverbial rock and hard place. ChatGPT is the fastest-growing software product in modern history, Meta’s bet on open-source AI is resulting in an explosion of models that can be trained and integrated into hardware accessories, agents, and apps with a low barrier to entry, and Google – facing an existential threat to search at the hands of LLM-powered web search – is going all-in on AI features for Android and Pixel phones. Like it or not, the vast majority of consumers now expect AI features on their devices; whether Apple was caught flat-footed here or not, the company today simply doesn’t have the technology to offer a Siri-powered experience comparable to ChatGPT, Llama-based models, Claude, or Gemini.

So, for now, Apple is following the classic “if you can’t beat them, join them” playbook. ChatGPT and other chatbots will supplement Siri with additional knowledge; meanwhile, Apple will continue to release specialized models optimized for specific iOS features, such as Image Wand in Notes, Clean Up in Photos, summarization in Writing Tools, inbox categorization in Mail, and so forth.

All this raises a couple of questions. Will Apple’s piecemeal AI strategy be effective in slowing down the narrative that they are behind other companies, showing their customers that iPhones are, in fact, powered by AI? And if Apple will only have a Siri LLM by 2026, where will ChatGPT and the rest of the industry be by then?

Given the pace of AI tools’ evolution in 2024 alone, it’s easy to look at Apple’s position and think that, no matter their efforts and the amount of capital thrown at the problem, they’re doomed. And this is where – despite my belief that Apple is indeed at least two years behind – I disagree with this notion.

You see, there’s another question that begs to be asked: will OpenAI, Anthropic, or Meta have a mobile operating system or lineup of computers with different form factors in two years? I don’t think they will, and that buys Apple some time to catch up.

In the business and enterprise space, it’s likely that OpenAI, Microsoft, and Google will become more and more entrenched between now and 2026 as corporations begin gravitating toward agentic AI and rethink their software tooling around AI. But modern Apple has never been an enterprise-focused company. Apple is focused on personal technology and selling computers of different sizes and forms to, well, people. And I’m willing to bet that, two years from now, people will still want to go to a store and buy themselves a nice laptop or phone.

Despite their slow progress, this is Apple’s moat. The company’s real opportunity in the AI space shouldn’t be to merely match the features and performance of chatbots; their unique advantage is the ability to rethink the operating systems of the computers we use around AI.

Don’t be fooled by the gaudy, archaic, and tone-deaf distractions of Image Playground and Image Wand. Apple’s true opening is in the potential of breaking free from the chatbot UI, building an assistive AI that works alongside us and the apps we use every day to make us more productive, more connected, and, as always, more creative.

That’s the artificial intelligence I hope Apple is building. And that’s the future I’d like to cover on MacStories.

- Apple does have some foundation models in iOS 18, but in the company’s own words, “The foundation models built into Apple Intelligence have been fine-tuned for user experiences such as writing and refining text, prioritizing and summarizing notifications, creating playful images for conversations with family and friends, and taking in-app actions to simplify interactions across apps.”