In the two years since my girlfriend and I moved into our new apartment, my desk has been in a constant state of flux. Those who have been reading MacStories for a while know why. There were two reasons: I couldn’t figure out how to use my iPad Pro for everything I do, specifically for recording podcasts the way I like, and I couldn’t find an external monitor that would let me both work with the iPad Pro and play videogames when I wasn’t working.

This article – which has been six months in the making – is the story of how I finally did it.

Over that time, I completely rethought my setup around the 11” iPad Pro and a monitor that gives me the best of both worlds: a USB-C connection for when I want to work with iPadOS at my desk and multiple HDMI inputs for when I want to play my PS5 Pro or Nintendo Switch. Getting to this point has been a journey, which I have documented in detail on the MacStories Setups page.

This article started as an in-depth examination of my desk, the accessories I use, and the hardware I recommend. As I was writing it, however, I realized that it had turned into something bigger. It’s become the story of not only how, after more than a decade of working on the iPad, I was able to figure out how to accomplish the last remaining task in my workflow, but also how I fell in love with the 11” iPad Pro all over again thanks to its nano-texture display.

I started using the iPad as my main computer 12 years ago. Today, I am finally able to say that I can use it for everything I do on a daily basis.

Here’s how.

Table of Contents

- iPad Pro for Podcasting, Finally

- iPad Pro at a Desk: Embracing USB-C with a New Monitor

- The 11” iPad Pro with Nano-Texture Glass

- iPad Pro and Video Recording for MacStories’ Podcasts

- iPad Pro and the Vision Pro

- iPad Pro as a Media Tablet for TV and Game Streaming…at Night

- Hardware Mentioned in This Story

- Back to the iPad

iPad Pro for Podcasting, Finally

If you’re new to MacStories, I’m guessing that you could probably use some additional context.

Through my ups and downs with iPadOS, I’ve been using the iPad as my main computer for over a decade. I love the iPad because it’s the most versatile and modular computer Apple makes. I’ve published dozens of stories about why I like working on the iPad so much, but there was always one particular task that I just couldn’t use the device for: recording podcasts while saving a backup of a Zoom call alongside my local audio recording.

I tried many times over the years to make this possible, sometimes with ridiculous workarounds that involved multiple audio interfaces and a mess of cables. In the end, I always went back to my Mac and the trusty Audio Hijack app since it was the easiest, most reliable way to ensure I could record my microphone’s audio alongside a backup of a VoIP call with my co-hosts. As much as I loved my iPad Pro, I couldn’t abandon my Mac completely. At one point, out of desperation, I even found a way to use my iPad as a hybrid macOS/iPadOS machine and called it the MacPad.

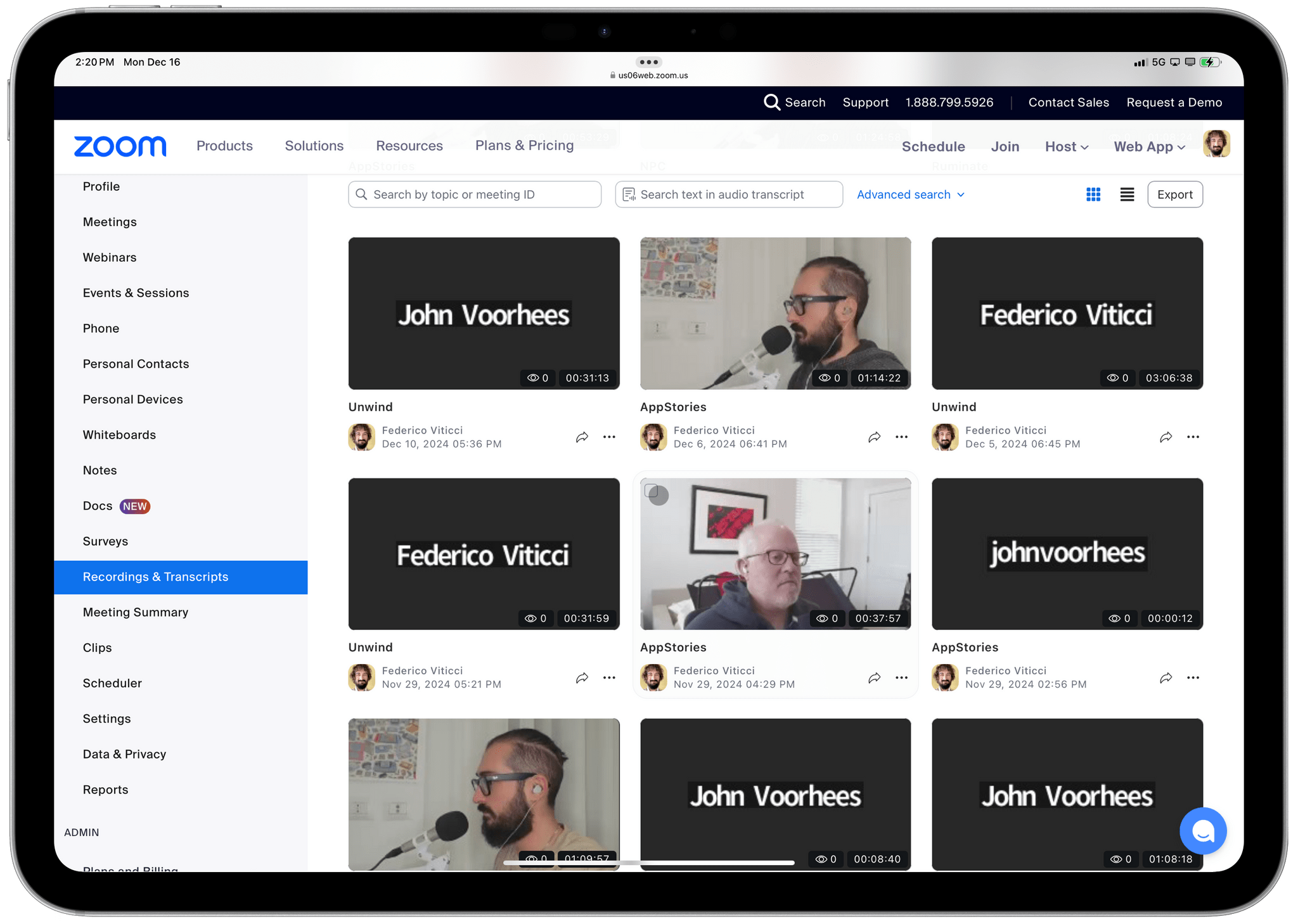

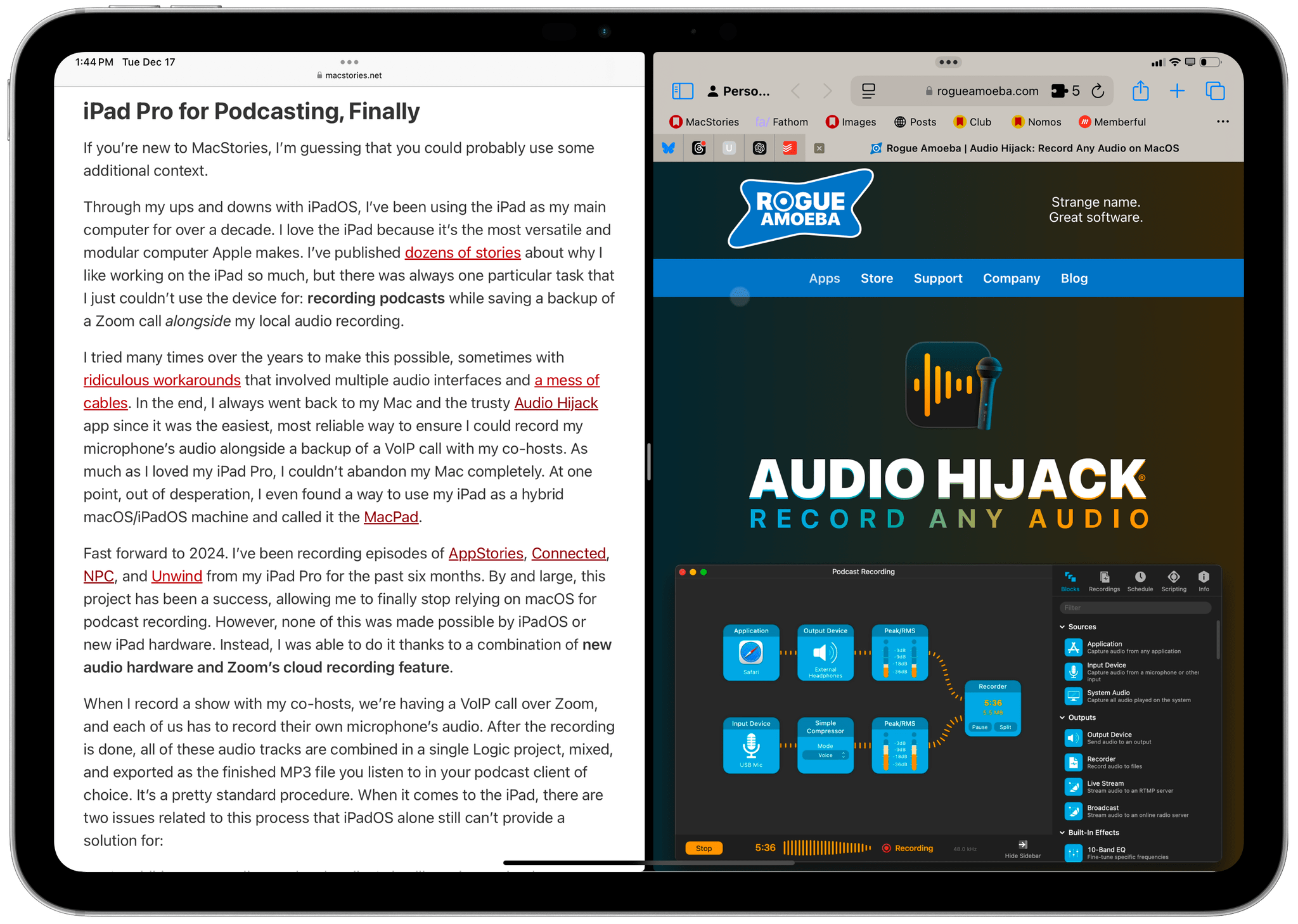

Fast forward to 2024. I’ve been recording episodes of AppStories, Connected, NPC, and Unwind from my iPad Pro for the past six months. By and large, this project has been a success, allowing me to finally stop relying on macOS for podcast recording. However, none of this was made possible by iPadOS or new iPad hardware. Instead, I was able to do it thanks to a combination of new audio hardware and Zoom’s cloud recording feature.

When I record a show with my co-hosts, we’re having a VoIP call over Zoom, and each of us has to record their own microphone’s audio. After the recording is done, all of these audio tracks are combined in a single Logic project, mixed, and exported as the finished MP3 file you listen to in your podcast client of choice. It’s a pretty standard procedure. When it comes to the iPad, there are two issues related to this process that iPadOS alone still can’t provide a solution for:

- In addition to recording my local audio, I also like to have a backup recording of the entire call on Zoom – you know, just as a precaution. On the Mac, I can easily do this with a session in Audio Hijack. On the iPad, there’s no way to do it because the system can’t capture audio from two different sources at once.

- Backups aside, the bigger issue is that, due to how iPadOS is architected, if I’m on a Zoom call, I can’t record my local audio at the same time, period.

As you can see, if I were to rely on iPadOS alone, I wouldn’t be able to record podcasts the way I like to at all. This is why I had to employ additional hardware and software to make it happen.

For starters, thanks to Jason Snell, I found out that Zoom now supports a cloud recording feature that automatically uploads and saves each participant’s audio track. This is great. I enabled this feature for all the scheduled meetings in my Zoom account, and now, as soon as an AppStories call starts, the automatic cloud recording also kicks in. If anything goes wrong with my microphone, audio interface, or iPad at any point, I know there will be a backup waiting for me in my Zoom account a few minutes after the call is finished. I turned this option on months ago, and it’s worked flawlessly so far, giving me the peace of mind that a backup is always happening behind the scenes whenever we record on Zoom.

But what about recording my microphone’s audio in the first place? This is where hardware comes in. As I was thinking about this limitation of iPadOS again earlier this year, I realized that the solution had been staring me in the face this entire time: instead of recording my audio via iPadOS, I should offload that task to external hardware. And a particular piece of gear that does exactly this has been around for years.

Enter Sound Devices’ MixPre-3 II, a small, yet rugged, USB audio interface that lets you plug in up to three microphones via XLR, output audio to headphones via a standard audio jack, and – the best part – record your microphone’s audio to an SD card. (I use this one.)

That was my big realization a few months ago: rather than trying to make iPadOS hold a Zoom call and record my audio at the same time, what if I just used iPadOS for the call and delegated recording to a dedicated accessory?

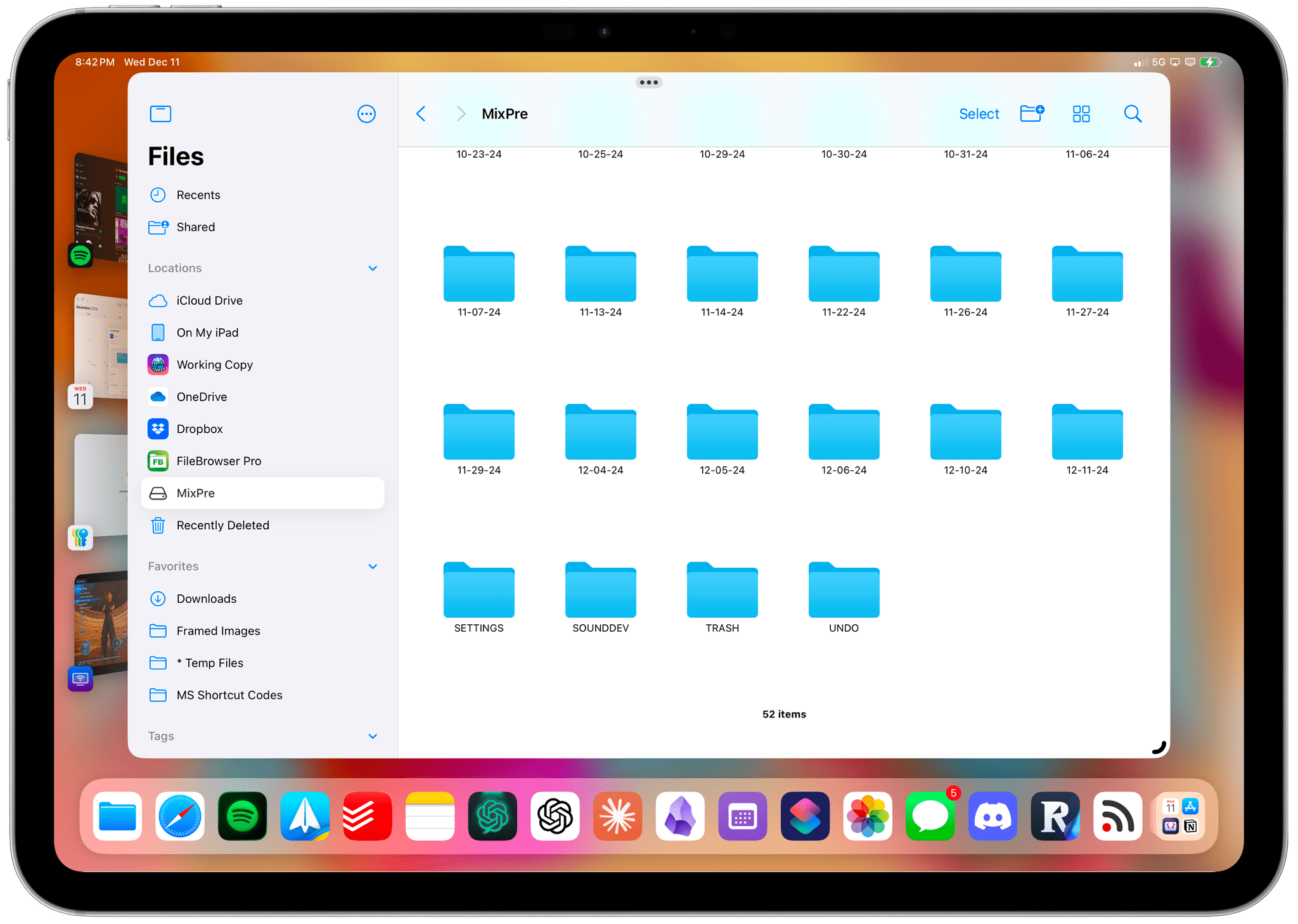

I’m here to tell you that, after some configuration, this works splendidly. Here’s the idea: the MixPre-3 acts as a USB interface for the iPad Pro, but at the same time, it can also record its microphone input to a WAV track that is kept completely separate from iPadOS. The recording feature is built into the MixPre’s software itself; the iPad has no idea that it’s happening. When I finish recording and press the stop button on the MixPre, I can then switch the device’s operating mode from USB audio interface to USB drive, and my iPad will see the MixPre’s SD card as an external source in the Files app.

Then, from the Files app, I can grab the audio file and upload it to Dropbox. For all my issues with the Files app (which has only marginally improved in iPadOS 18 with a couple of additions), I have to say that transferring heavy files from the MixPre’s SD card has been reliable.

The trickiest aspect of using the MixPre with my iPad has been configuring it to record audio locally from a microphone plugged in via XLR while also passing that audio over USB to the iPad and sending the iPad’s audio to the headphones connected to the MixPre. Long story short, while there are plenty of YouTube guides you can follow, I configured my MixPre in advanced mode so that it records audio using channel 3 (where my microphone is plugged in) and passes audio back and forth over USB using the USB 1 and 2 channels.

It’s difficult for me right now to encapsulate how happy I am that I was finally able to devise a solution for recording podcasts with call backups on my iPad Pro. Sure, the real winners here are Zoom’s cloud backup feature and the MixPre’s excellent USB support. However, I think it should be noted that, until a few years ago, not only was transferring files from an external drive on the iPad impossible, but some people were even suggesting that it was “wrong” to assert that an iPad should support that feature.

As I’ll explore throughout this story, the “an iPad isn’t meant to do certain things” ship sailed years ago. It’s time to accept the reality that some people, including me, simply prefer getting their work done on a machine that isn’t a MacBook.

iPad Pro at a Desk: Embracing USB-C with a New Monitor

A while back, I realized that I like the idea of occasionally taking breaks from work by playing a videogame for a few minutes in the same space where I get my work done. This may seem like an obvious idea, but what you should understand about me is that I’d never done this in the 15 years since I started MacStories. My office has always been the space for getting work done; all game consoles stayed in the living room, where I’d spend some time in the evening or at night if I wasn’t working. Otherwise, I’d play on one of my handhelds, usually in bed before going to sleep.

This year, however, the concept of taking a quick break from writing (like, say, 20 minutes) without having to switch locations altogether has been growing on me. So I started looking for alternatives to Apple’s Studio Display that would allow me to easily hop between the iPad Pro, PlayStation 5 Pro, and Nintendo Switch with minimal effort.1

Before you tell me: yes, I tried to make the Studio Display work as a gaming monitor. Last year, I went deep down the rabbit hole of USB-C/HDMI switches that would be compatible with the Studio Display. While I eventually found one, the experience was still not good enough for high-performance gaming; the switch was finicky to set up and unreliable. Plus, even if I did find a great HDMI switch, the Studio Display is always going to be limited to a 60Hz refresh rate. The Studio Display is a great productivity monitor, but I don’t recommend it for gaming. I had to find something else.

After weeks of research, I settled on the Gigabyte M27U as my desk monitor. I love this display: it’s 4K at 27” (I didn’t want to go any bigger than that), refreshes at 160Hz (which is sweet), has an actual OSD menu to tweak settings and switch between devices, and, most importantly, lets me connect computers and consoles over USB-C, HDMI, or DisplayPort.

There have been some downgrades coming from the Studio Display. For starters, my monitor doesn’t have a built-in webcam, which means I had to purchase an external one that’s compatible with my iPad Pro. (More on this later.) The speakers don’t sound nearly as good as the Studio Display’s, either, so I often find myself simply using the iPad Pro’s (amazing) built-in speakers or my new AirPods Max, which I surprisingly love after a…hack.

Furthermore, the M27U offers 400 nits of brightness compared to the Studio Display’s 600 nits. I notice the difference, and it’s my only real complaint about this monitor, which is slim enough and doesn’t come with the useless RGB bells and whistles that most gaming monitors feature nowadays.

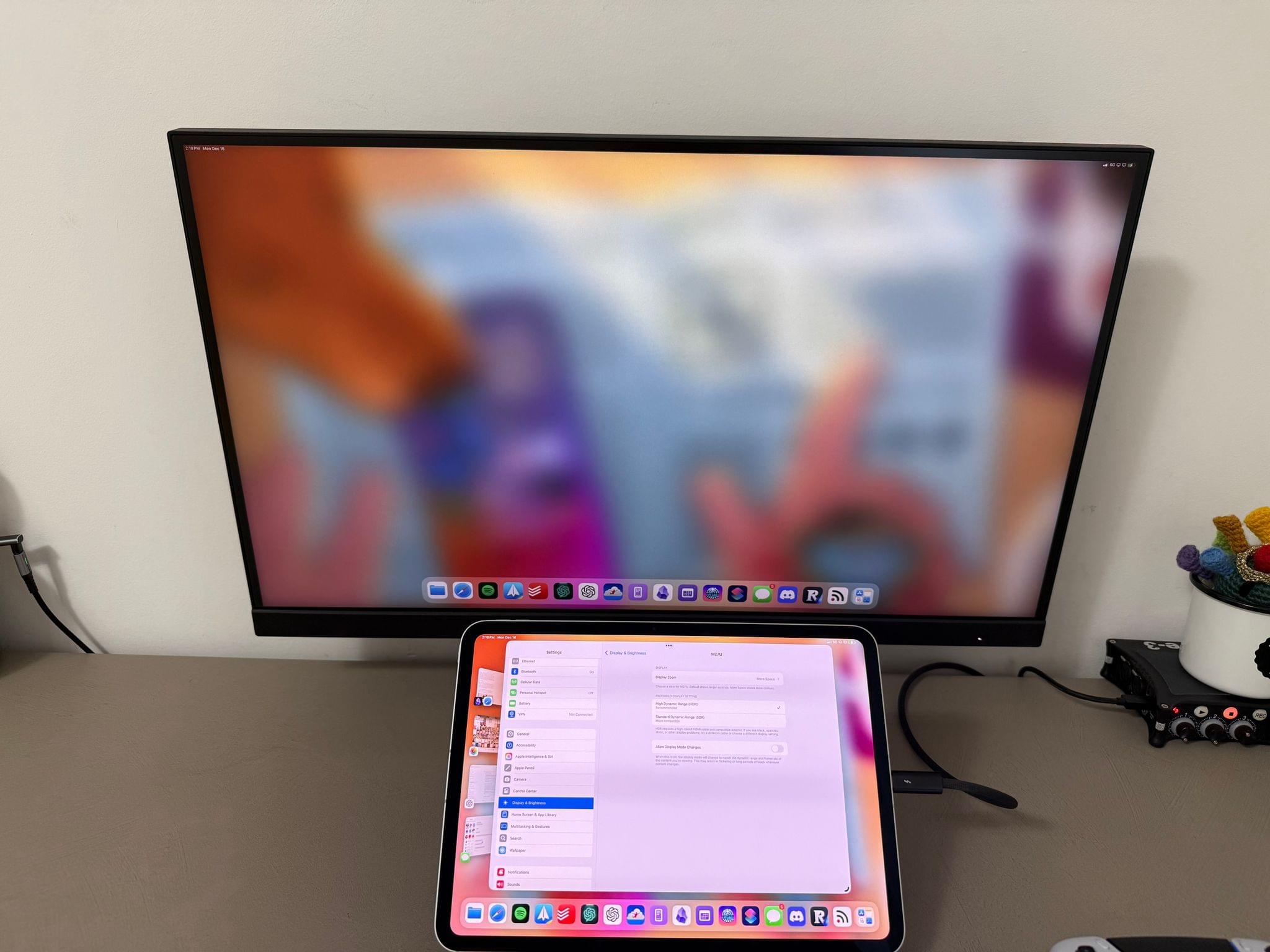

In using the monitor, I’ve noticed something odd about its handling of brightness levels. By default, the iPad Pro connected to my CalDigit TS4 dock (which is then connected over USB-C to the monitor) wants to use HDR for the external display, but that results in a very dim image on the M27U.

The most likely culprit is the fact that this monitor doesn’t properly support HDR over USB-C. If I choose SDR instead of HDR for the monitor, the result is a much brighter panel that doesn’t make me miss the Studio Display that much.

Another downside of using an external monitor over USB-C rather than Thunderbolt is the lack of brightness and volume control via the Magic Keyboard’s function keys. Neither of these limitations is a dealbreaker; I don’t care about volume control since I prefer the iPad Pro’s built-in speakers regardless, and I always keep the brightness set to 100% anyway.

The shortcomings of this monitor for Apple users are more than compensated for by its astounding performance when gaming. Playing games on the M27U is a fantastic experience: colors look great, and the high refresh rate is terrific to see in real life, especially for PS5 games that support 120Hz and HDR. Nintendo Switch games aren’t nearly as impressive from a pure graphical standpoint (there is no 4K output on the current Switch, let alone HDR or 120Hz), but they usually make up for it in art direction and vibrant colors. I’ve had a lovely time playing Astro Bot and Echoes of Wisdom on the M27U, especially because I could dip in and out of those games without having to switch rooms.

What truly sells the M27U as a multi-device monitor isn’t performance alone, though; it’s the ease of switching between multiple devices connected to different inputs. On the back of the monitor, there are two physical buttons: a directional nub that lets you navigate various menus and a KVM button that cycles through currently active inputs. When one of my consoles is awake and the iPad Pro is connected, I can press the KVM button to instantly toggle between the USB-C input (iPad) and whichever HDMI input is active (either the PS5 or Switch). Alternatively, if – for whatever reason – everything is connected and active all at once, I can press the nub on the back and open the ‘Input’ menu to select a specific one.

I recognize that this sort of manual process is probably antithetical to what the typical Apple user expects. But I’m not your typical Apple user or pundit. I love the company’s minimalism, but I also like modularity and using multiple devices. The M27U is made of plastic, its speakers are – frankly – terrible, and it’s not nearly as elegant as the Apple Studio Display. At the same time, quickly switching between iPadOS and The Legend of Zelda makes it all worth it.

Looking ahead at what’s coming in desktop monitor land, I think my next upgrade (sometime in late 2025, most likely) is going to be a 27” 4K OLED panel (ideally with HDMI and Thunderbolt 5?). For now, and for its price, the M27U is an outstanding piece of gear that transformed my office into a space for work and play.

The 11” iPad Pro with Nano-Texture Glass

You may remember that, soon after Apple’s event in May, I decided to purchase a 13” iPad Pro with standard glass. I used that iPad for about a month, and despite my initial optimism, something I was concerned about came true: even with its reduction in weight and thickness, the 13” model was still too unwieldy to use as a tablet outside of the Magic Keyboard. I was hoping its slimmer profile and lighter body would help me take it out of the keyboard case and use it as a pure tablet more often; in reality, nothing can change the fact that you’re holding a 13” tablet in your hands, which can be too much when you just want to watch some videos or read a book.

I had slowly begun to accept that unchanging reality of the iPad lineup when Apple sent me two iPad Pro review units: a 13” iPad Pro with nano-texture glass and a smaller 11” model with standard glass. A funny thing happened then. I fell in love with the 11” size all over again, but I also wanted the nano-texture glass. So I sold my original 13” model and purchased a top-of-the-line 11” iPad Pro with cellular connectivity, 1 TB of storage, and nano-texture glass.

I was concerned the nano-texture glass would take away the brilliance of the iPad’s OLED display. I was wrong.

It’s no exaggeration when I say that this is my favorite iPad of all time. It has reignited a fire inside of me that had been dormant for a while, weakened by years of disappointing iPadOS updates and multitasking debacles.

I have been using this iPad Pro every day for six months now. I wrote and edited the entire iOS and iPadOS 18 review on it. I record podcasts with it. I play and stream videogames with it. It’s my reading device and my favorite way to watch movies and YouTube videos. I take it with me everywhere I go because it’s so portable and lightweight, plus it has a cellular connection always available. The new 11” iPad Pro is, quite simply, the reason I’ve made an effort to go all-in on iPadOS again this year.

There were two key driving factors behind my decision to move from the 13” iPad Pro back to the 11”: portability and the display. In terms of size, this is a tale as old as the iPad Pro. The large model is great if you primarily plan to use it as a laptop, and it comes with superior multitasking that lets you see more of multiple apps at once, whether you’re using Split View or Stage Manager. The smaller version, on the other hand, is more pleasant to use as a tablet. It’s easier to hold and carry around with one hand, still big enough to support multitasking in a way that isn’t as cramped as an iPad mini, and, of course, just as capable as its bigger counterpart when it comes to driving an external display and connected peripherals. With the smaller iPad Pro, you’re trading screen real estate for portability; in my tests months ago, I realized that was a compromise I was willing to make.

As a result, I’ve been using the iPad Pro more, especially at the end of the workday, when I can take it out of the Magic Keyboard to get some reading done in Readwise Reader or catch up on my queue in Play. In theory, I could also accomplish these tasks with the 13” iPad Pro; in practice, I never did because, ergonomically, the larger model just wasn’t that comfortable. I always ended up reaching for my iPhone instead of the iPad when I wanted to read or watch something, and that didn’t feel right.

Much to my surprise, using the 11” iPad Pro with old-school Split View and Slide Over has also been a fun, productive experience.

When I’m working at my desk, I have to use Stage Manager on the external monitor, but when I’m just using the iPad Pro, I prefer the classic multitasking environment. There’s something to the simplicity of Split View with only two apps visible at once that is, at least for me, conducive to writing and focusing on the current task. Plus, there’s also the fact that Split View and Slide Over continue to offer a more mature, fleshed-out take on multitasking: there are fewer keyboard-related bugs, there’s a proper window picker for apps that support multiwindowing, and replacing apps on either side of the screen is very fast via the Dock, Spotlight, or Shortcuts actions (which Stage Manager still doesn’t offer). Most of the iOS and iPadOS 18 review was produced with Split View; if you haven’t played around with “classic” iPadOS multitasking in a while, I highly recommend checking it out again.

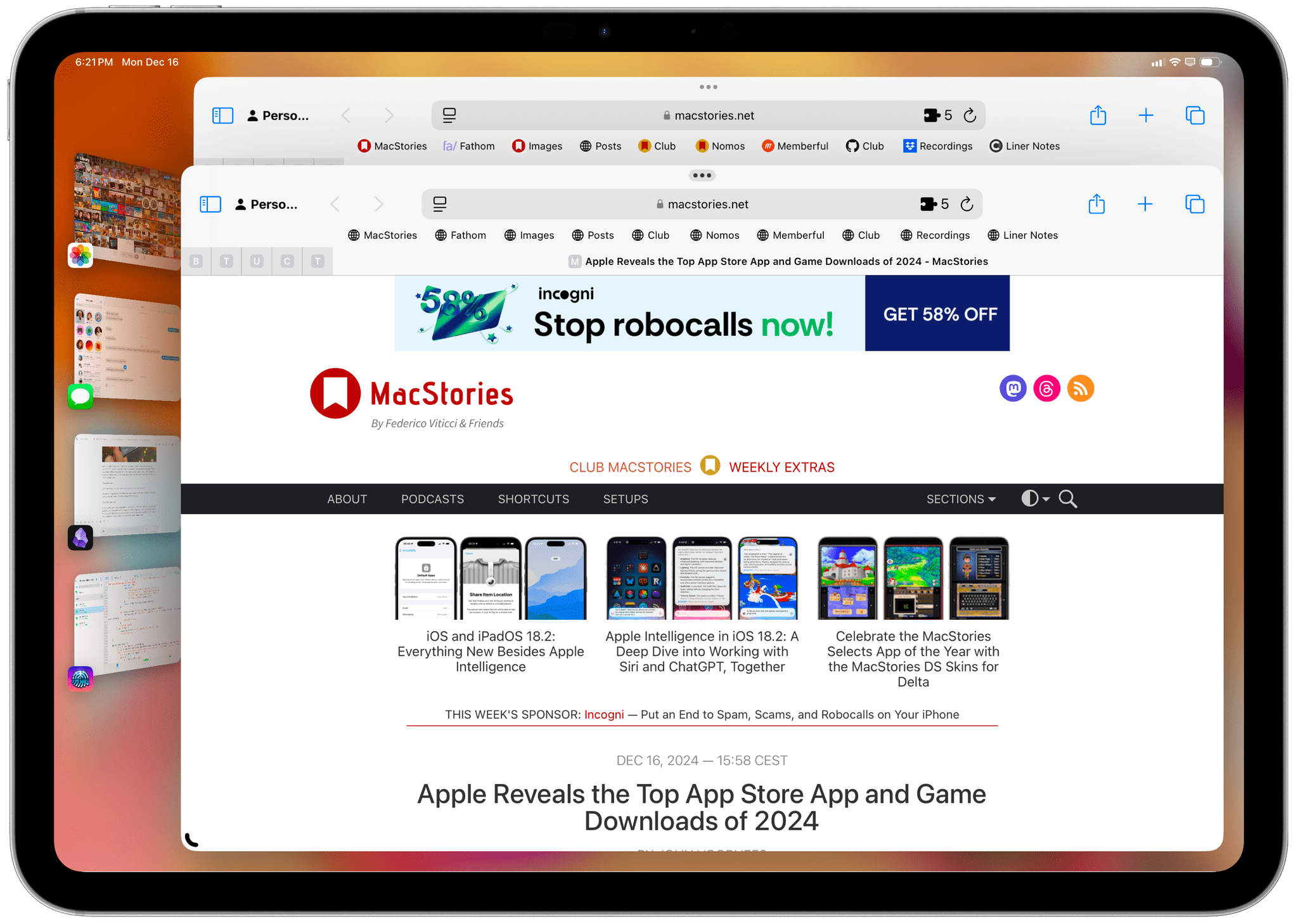

One of the other nice perks of Split View – a feature that has been around for years now2, but I’d forgotten about – is the ease of multitasking within Safari. When I’m working in the browser and want to compare two webpages side by side, taking up equal parts of the screen, I can simply drag a tab to either side of the screen to create a new Safari Split View:

When I drag a link to the side, Split View instantly splits the screen in half with two Safari windows.

Conversely, doing the same with Stage Manager opens a new Safari window, which I then have to manually resize if I want to compare two webpages.

So far, I’ve focused on the increased portability of the 11” iPad Pro and how enjoyable it’s been to use a tablet with one hand again. Portability, however, is only one side of this iPad Pro’s story. In conjunction with its portable form factor, the other aspect of the 11” iPad Pro that makes me enjoy using it so much is its nano-texture glass.

Long story short, I’m a nano-texture glass convert now, and it’s become the kind of technology I want everywhere.

My initial concern with the nano-texture glass was that it would substantially diminish the vibrancy and detail of the iPad Pro’s standard glass. I finally had an OLED display on my iPad, and I wanted to make sure I’d fully take advantage of all its benefits over mini-LED. After months of daily usage, I can say not only that my concerns were misplaced and this type of glass is totally fine, but that this option has opened up new use cases for the iPad Pro that just weren’t possible before.

For instance, I discovered the joy of working with my iPad Pro outside, without the need to chase down a spot in the shade so I can see the display more clearly. One of the many reasons we bought this apartment two years ago is the beautiful balcony, which faces south and gets plenty of sunlight all year long. We furnished the balcony so we could work on our laptops there when it’s warm outside, but in practice, I never did because it was too bright. Everything reflected on the screen, making it barely readable. That doesn’t happen anymore with the nano-texture iPad Pro. Without any discernible image or color degradation compared to the standard iPad Pro, I am – at long last – able to sit outside, enjoy some fresh air, and bask in the sunlight with my dogs while also typing away at my iPad Pro using a screen that remains bright and legible.

Sure, I’m talking about the display now. But I just want to stop for a second and appreciate how elegant and impossibly thin the M4 iPad Pro is.

If you know me, you also know where this is going. After years of struggle and begrudging acceptance that it just wasn’t possible, I took my iPad Pro to the beach earlier this year and realized I could work in the sun, with the waves crashing in front of me as I wrote yet another critique of iPadOS. I’ve been trying to do this for years: every summer since I started writing annual iOS reviews 10 years ago, I’ve attempted to work from the beach and consistently given up because it was impossible to see text on the screen under the hot August sun of the Italian Riviera. That’s not been the case with the 11” iPad Pro. Thanks to its nano-texture glass, I got to have my summer cake and eat it too.

I can see the comments on Reddit already – “Italian man goes outside, realizes fresh air is good” – but believe me, to say that this has been a quality-of-life improvement for me would be selling it short. Most people won’t need the added flexibility of the nano-texture glass, nor want to pay its extra cost. But for me, being unable to efficiently work outside was antithetical to the nature of the iPad Pro itself. I’ve long sought to use a computer that I could take with me anywhere I went. Now, thanks to the nano-texture glass, I finally can.

iPad Pro and Video Recording for MacStories’ Podcasts

I struggled to finish this story for several months because there was one remaining limitation of iPadOS that kept bothering me: I couldn’t figure out how to record audio and video for MacStories’ new video podcasts while also using Zoom.

What I’m about to describe is the new aspect of my iPad workflow I’m most proud of figuring out. After years of waiting for iPadOS to eventually improve when it comes to simultaneous audio and video streams, I used some good old blue ocean strategy to fix this problem. As it turns out, the solution had been staring me in the face the entire time.

Consider again, for a second, the setup I described above. The iPad is connected to a CalDigit Thunderbolt dock, which in turn connects it to my external monitor and the MixPre audio interface. My Neumann microphone is plugged into the MixPre, as are my in-ear buds; as I’ve explained, this allows me to record my audio track separately on the MixPre while coming through to other people on Zoom with great voice quality and also hearing myself back. For audio-only podcasts, this works well, and it’s been my setup for months.

As MacStories started growing its video presence as a complement to text and audio, however, I suddenly found myself needing to record video versions of NPC and AppStories in addition to audio. When I started recording video for those shows, I was using an Elgato FaceCam Pro 4K webcam; the camera had a USB-C connection, so thanks to UVC support, it was recognized by iPadOS, and I could use it in my favorite video-calling apps. So far, so good.

The problem, of course, was that when I was also using the webcam for Zoom, I couldn’t record a video in Camo Studio at the same time. It was my audio recording problem all over again: iPadOS cannot handle concurrent media streams, so if the webcam was being used for the Zoom call, then Camo Studio couldn’t also record its video feed.

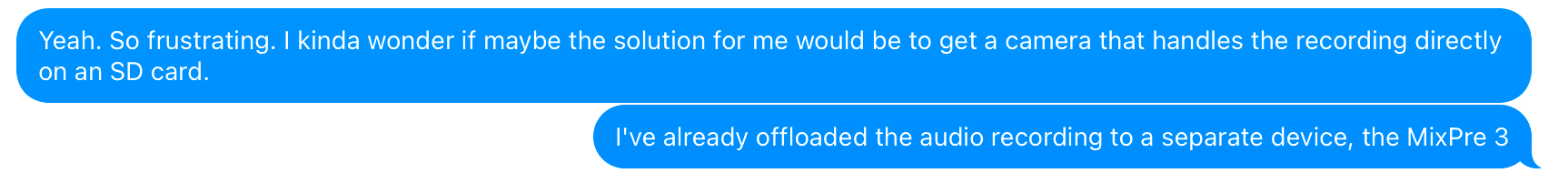

Once again, I felt powerless. I’d built this good-looking setup with a light and a microphone arm and a nice poster on the wall, and I couldn’t do it all with my iPad Pro because of some silly software limitation. I started talking to my friend (and co-host of Comfort Zone) Chris Lawley, who’s also been working on the iPad for years, and that’s when it dawned on me: just like I did with audio, I should offload the recording process to external hardware.

My theory was simple. I needed to find the equivalent of the MixPre, but for video: a camera that I could connect over USB-C to the iPad Pro and use as a webcam in Zoom (so my co-hosts could see me), but which I could also operate to record video on its own SD card, independent of iPadOS. At the end of each recording session, I would grab the audio file from the MixPre, import the video file from the camera, and upload them both to Dropbox – no Mac involved in the process at all.

If the theory was correct – if iPadOS could indeed handle both the MixPre and a UVC camera at the same time while on a Zoom call – then I would be set. I could get rid of my MacBook Air (or what’s left of it, anyway) for good and truly say that I can do everything on my iPad Pro after more than a decade of iPad usage.

And well…I was right.

I did a lot of research on what could potentially be a very expensive mistake, and the camera I decided to go with is the Sony ZV-E10 II. This is a mirrorless Sony camera that’s advertised as made for vlogging and is certified under the Made for iPhone and iPad accessory program. After watching a lot of video reviews and walkthroughs, it seemed like the best option for me for a variety of reasons:

- I know nothing about photography and don’t plan on becoming a professional photographer. I just wanted a really good camera with fantastic image quality for video recording that could work for hours at a time while recording in 1080p. The ZV-E10 II is specifically designed with vlogging in mind and has an ‘intelligent’ shooting mode that doesn’t require me to tweak any settings for exposure or ISO.

- The ZV-E10 II supports USB-C connections to the iPad – and, specifically, UVC – out of the box. USB connections are automatically detected, so the camera gets picked up on the iPad by apps like Zoom, FaceTime, and Camo Studio.

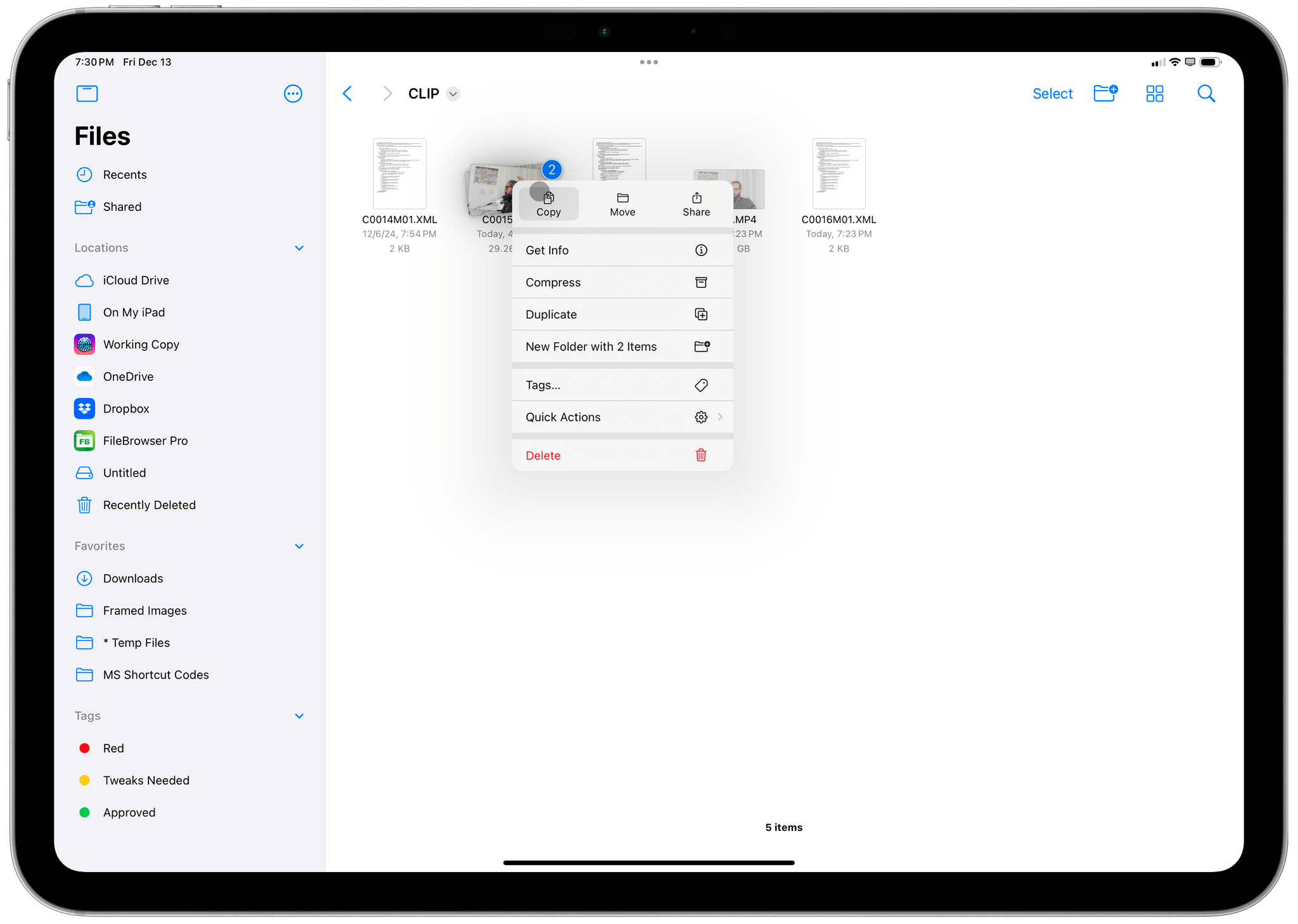

- The camera can record video to an SD card while also streaming over USB to an iPad. The recording is completely separate from iPadOS, and I can start it by pressing a physical button on the camera, which plays a helpful sound to confirm when it starts and stops recording. Following Chris’ recommendation, I got this SD card from Lexar, which I plan to rotate on a regular basis to avoid storage degradation.

- The ZV-E10 II has a flip-out display that can swivel to face me. This allows me to keep an eye on what I look like in the video and has the added benefit of helping the camera run cooler. (More on this below.)

The ZV-E10 II seemed to meet all my requirements for an iPad-compatible mirrorless USB camera, so I ordered one in white (of course, it had to match my other accessories) with the default 16-50mm lens kit. The camera arrived about two months ago, and I’ve been using it to record episodes of AppStories and NPC entirely from my iPad Pro, without using a Mac anywhere in the process.

To say that I’m happy with this result would be an understatement. There are, however, some implementation details and caveats worth covering.

For starters, the ZV-E10 II notoriously overheats when recording long sessions at 4K, and since NPC tends to be longer than an hour, I had to make sure this wouldn’t happen. Following a tip from Chris, we decided to record all of our video podcasts in 1080p and upscale them to 4K in post-production. This is good enough for video podcasts on YouTube, and it allows us to work with smaller files while preventing the camera from running into any 4K-related overheating issues. Second, to let heat dissipate more easily and quickly while recording, I’m doing two things:

- I always keep the display open, facing me. This way, heat from the display isn’t transferred back to the main body of the camera.

- I’m using a “dummy battery”. This is effectively an empty battery that goes into the camera but actually gets its power from a wall adapter. There are plenty available on Amazon, and the one I got works perfectly. With this approach, the camera can stay on for hours at a time since heat is actually produced in the external power supply rather than inside the camera’s battery slot.

In terms of additional hardware, I’m also using a powerful 12” Neewer ring light for proper lighting with an adjustable cold shoe mount to get my angle just right. I tried a variety of ring lights and panels from Amazon; this one had the best balance of power and price for its size. (I didn’t want to get something that was too big since I want to hide its tripod in a closet when not in use.)

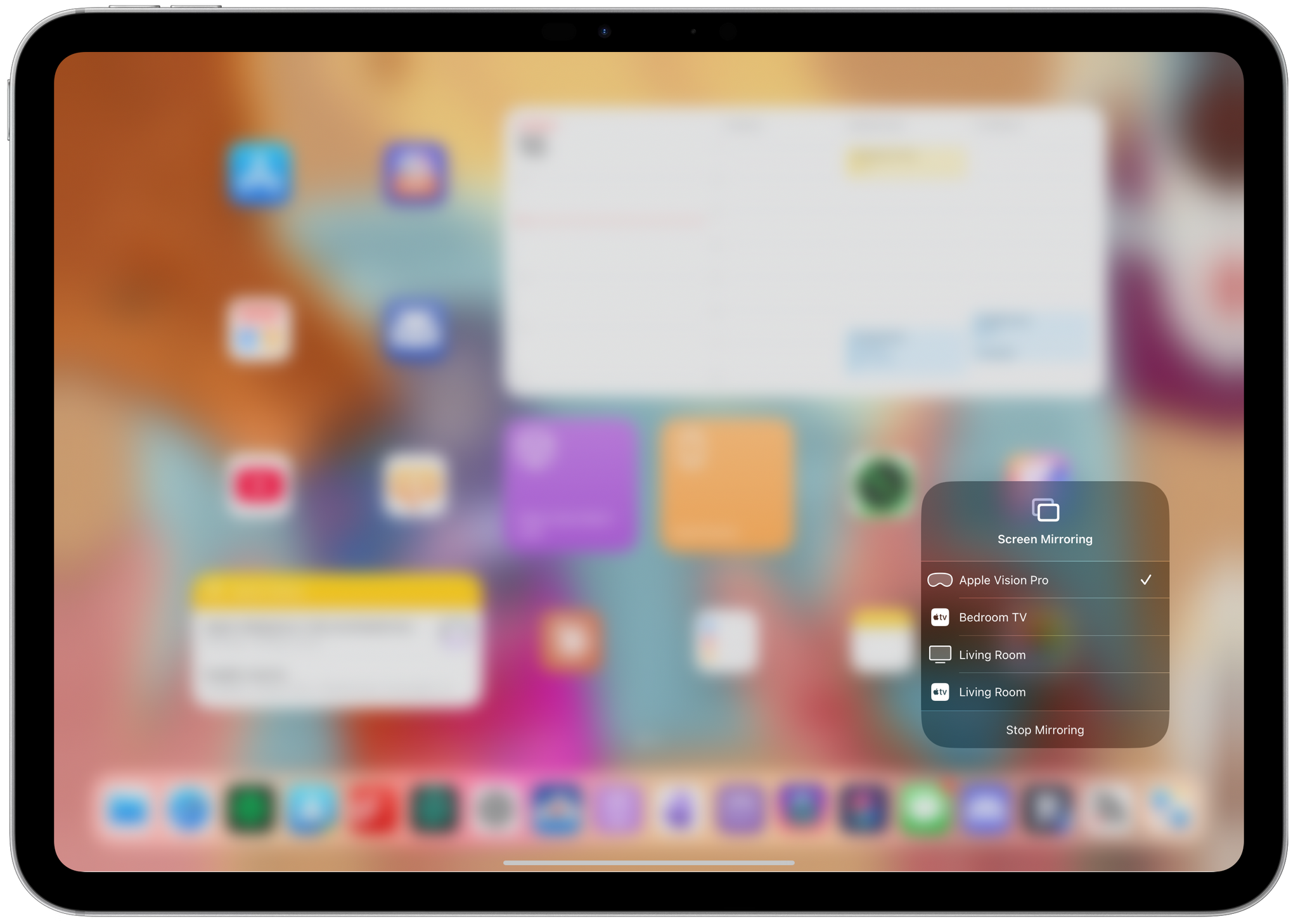

The software story is simpler, and right in line with the limitations of iPadOS we’re familiar with. If you’ve followed along with the story so far, you know that I have to plug both my MixPre-3 II and ZV-E10 II into the iPad Pro. To do this, I’m using a CalDigit TS4 dock in the middle that also handles power delivery, Ethernet, and the connection to my monitor. The only problem is that I have to remember to connect my various accessories in a particular order; specifically, I have to plug in my audio interface last, or people on Zoom will hear me speaking through the camera’s built-in microphone.

This happens because, unlike macOS, iPadOS doesn’t have a proper ‘Sound’ control panel in Settings to view and assign different audio sources and output destinations. Instead, everything is “managed” from the barebones Control Center UI, which doesn’t let me choose the MixPre-3 II for microphone input unless it is plugged in last. This isn’t a dealbreaker, but seriously, how silly is it that I can do all this work with an iPad Pro now and its software still doesn’t match my needs?

When streaming USB audio and video to Zoom on the iPad from two separate devices, I also have to remember that if I accidentally open another camera app while recording, video in Zoom will be paused. This is another limitation of iPadOS: an external camera signal can only be active in one app at a time, so if I want to, say, take a selfie while recording on the iPad, I can’t – unless I’m okay with video being paused on Zoom while I do so.

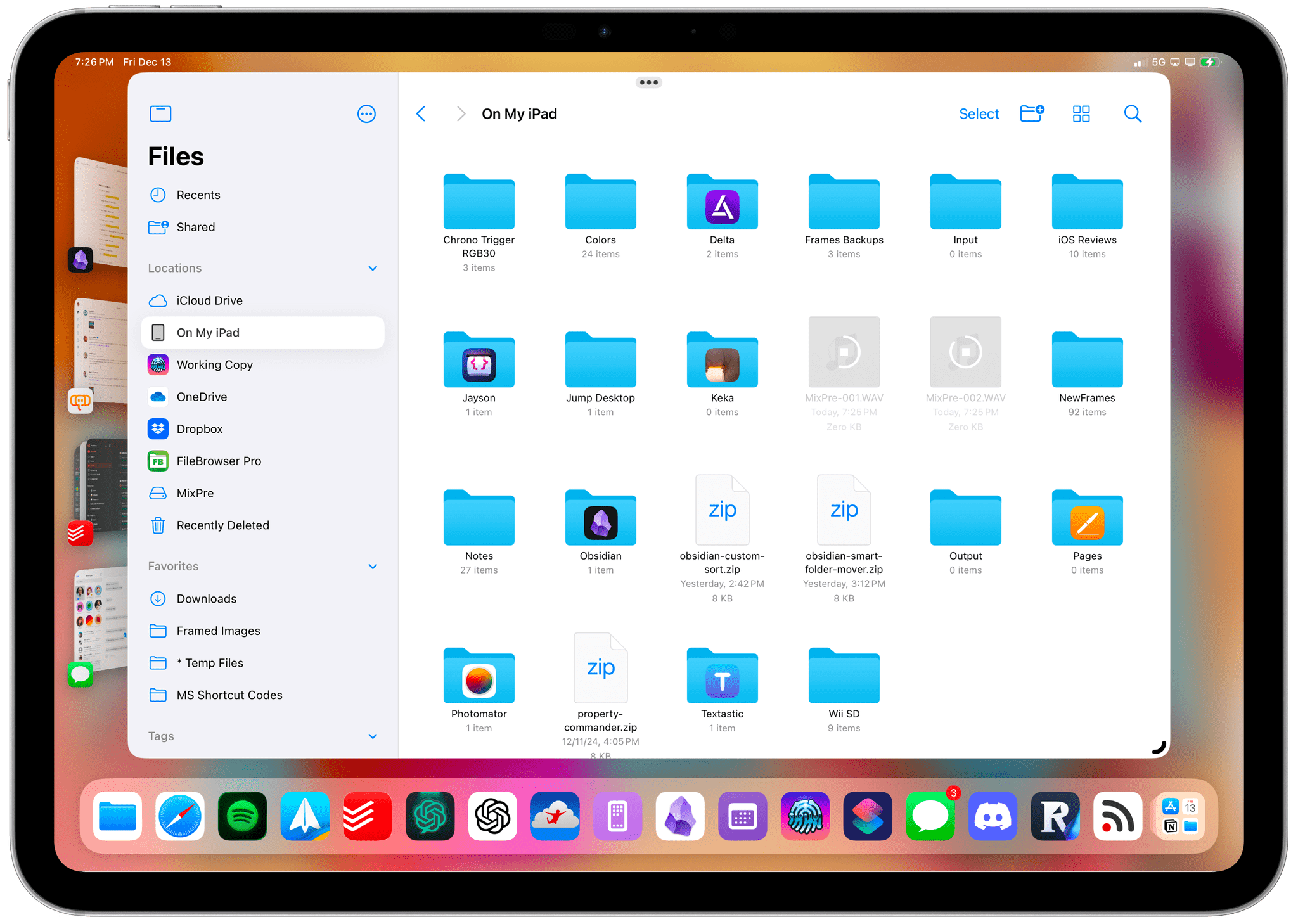

When I’m done recording a video, I press the stop button on the camera, grab its SD card, put it in Apple’s USB-C SD card adapter, and plug it into the iPad Pro. To do this, I have to disconnect the Thunderbolt cable that connects my iPad Pro to the CalDigit TS4. I can’t plug the adapter into the Magic Keyboard’s secondary USB-C port since it’s used for power delivery only, something that I hope will change eventually. In any case, the Files app does a good enough job copying large video files from the SD card to my iPad’s local storage. On a Mac, I would create a Hazel automation to grab the latest file from a connected storage device and upload it to Dropbox; on an iPad, there are no Shortcuts automation triggers for this kind of task, so it has to be done manually.

And that’s pretty much everything I have to share about using a fancy webcam with the iPad Pro. It is, after all, a USB feature that was enabled in iPadOS 17 thanks to UVC; it’s nothing new or specific to iPadOS 18 this year. While I wish I had more control over the recording process and didn’t have to use another SD card to save videos, I’m happy I found a solution that works for me and allows me to keep using the iPad Pro when I’m recording AppStories and NPC.

iPad Pro and the Vision Pro

I’m on the record saying that if the Vision Pro offered an ‘iPad Virtual Display’ feature, my usage of the headset would increase tenfold, and I stand by that. Over the past few weeks, I’ve been rediscovering the joy of the Vision Pro as a (very expensive) media consumption device and stunning private monitor. I want to use the Vision Pro more, and I know that I would if only I could control its apps with the iPad’s Magic Keyboard while also using iPadOS inside visionOS. But I can’t; nevertheless, I persist in the effort.

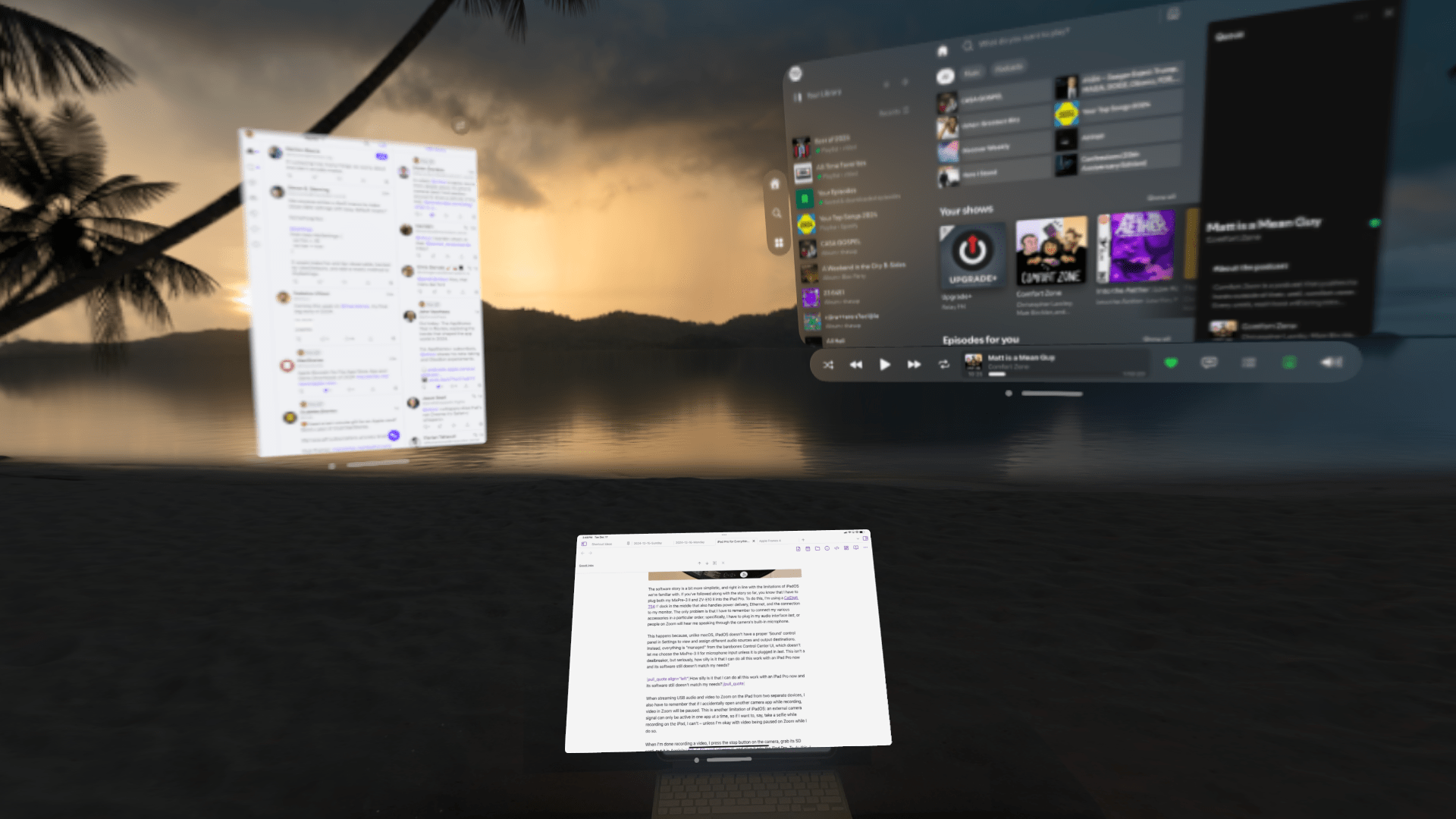

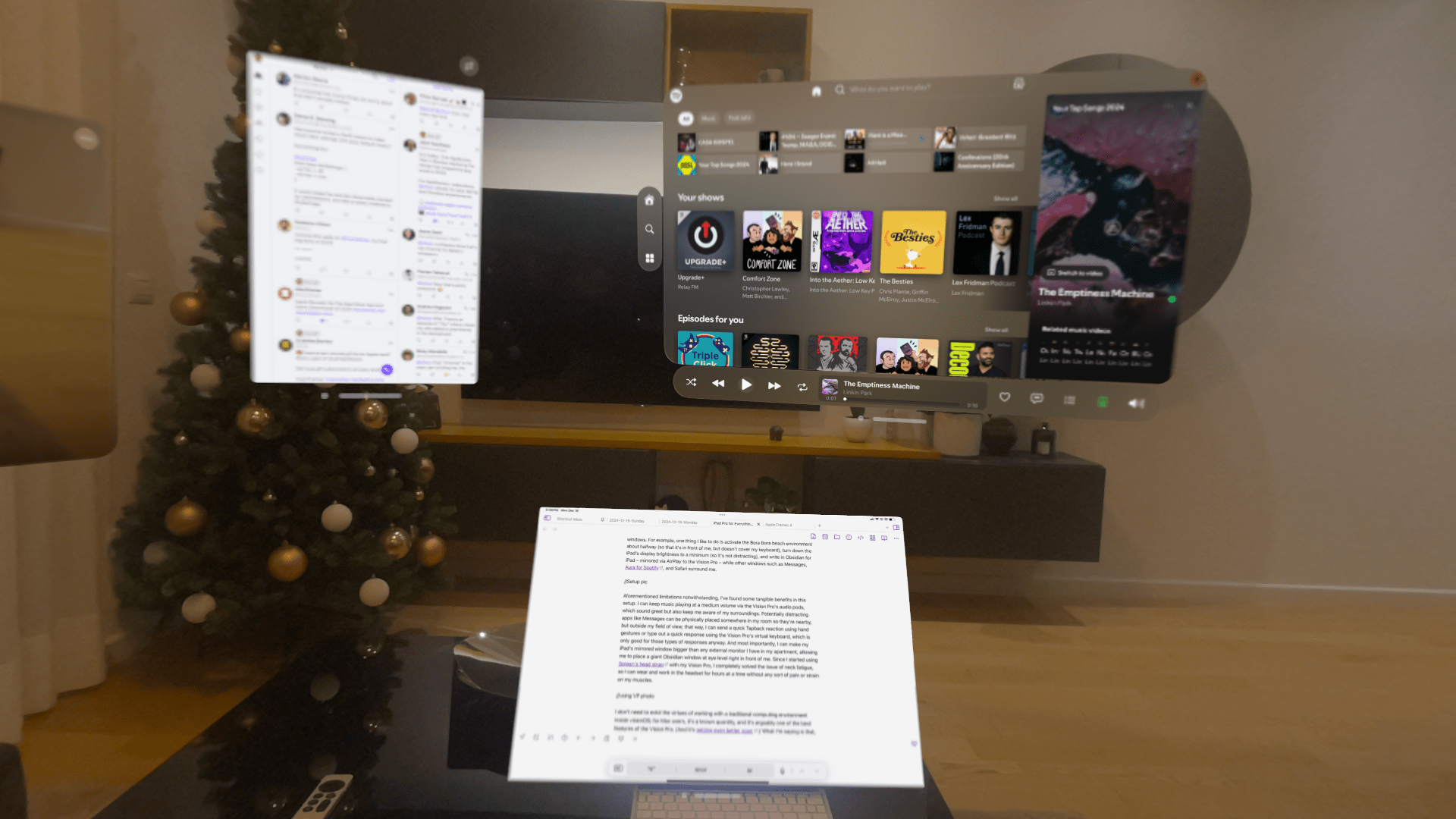

As Devon covered in his review of visionOS 2, one of the Vision Pro’s new features is the ability to turn into a wireless AirPlay receiver that can mirror the screen of a nearby Apple device. That’s what I’ve been doing lately when I’m alone in the afternoon and want to keep working with my iPad Pro while also immersing myself in an environment or multitasking outside of iPadOS: I mirror the iPad to the Vision Pro and work with iPadOS in a window surrounded by other visionOS windows.

Now, I’ll be honest: this is not ideal, and Apple should really get around to making the iPad a first-class citizen of its $3,500 spatial computer just like the Mac can be. If I don’t own a Mac and use an iPad as my main computer instead, I shouldn’t be penalized when I’m using the Vision Pro. I hope iPad Virtual Display is in the cards for 2025 as Apple continues to expand the Vision line with more options. But for now, despite the minor latency that comes with AirPlay mirroring and the lack of true integration between the iPad’s Magic Keyboard and visionOS, I’ve been occasionally working with my iPad inside the Vision Pro, and it’s fun.

There’s something appealing about the idea of a mixed computing environment where the “main computer” becomes a virtual object in a space that is also occupied by other windows. For example, one thing I like to do is activate the Bora Bora beach environment about halfway (so that it’s in front of me, but doesn’t cover my keyboard), turn down the iPad’s display brightness to a minimum (so it’s not distracting), and write in Obsidian for iPad – mirrored via AirPlay to the Vision Pro – while other windows such as Messages, Aura for Spotify, and Safari surround me.

This is better multitasking than Stage Manager – which is funny, because most of these are also iPad apps.

Aforementioned limitations notwithstanding, I’ve found some tangible benefits in this setup. I can keep music playing at a medium volume via the Vision Pro’s audio pods, which sound great but also keep me aware of my surroundings. Potentially distracting apps like Messages can be physically placed somewhere in my room so they’re nearby, but outside my field of view; that way, I can send a quick Tapback reaction using hand gestures or type out a quick response using the Vision Pro’s virtual keyboard, which is only good for those types of responses anyway. And most importantly, I can make my iPad’s mirrored window bigger than any external monitor I have in my apartment, allowing me to place a giant Obsidian window at eye level right in front of me.

Since I started using Spigen’s head strap with my Vision Pro, I’ve completely solved the issue of neck fatigue, so I can wear and work in the headset for hours at a time without any sort of pain or strain on my muscles.

I don’t need to extol the virtues of working with a traditional computing environment inside visionOS; for Mac users, it’s a known quantity, and it’s arguably one of the best features of the Vision Pro. (And it’s only gotten better with time.) What I’m saying is that, even with the less flexible and not as technically remarkable AirPlay-based flavor of mirroring, I’ve enjoyed being able to turn my iPad’s diminutive display into a large, TV-sized virtual monitor in front of me. Once again, it goes back to the same idea: I have the most compact iPad Pro I can get, but I can make it bigger via physical or virtual displays. I just wish Apple would take things to the next level here for iPad users as well.

iPad Pro as a Media Tablet for TV and Game Streaming…at Night

In the midst of working with the iPad Pro, something else happened: I fell in love with it as a media consumption device, too. Despite my appreciation for the newly “updated” iPad mini, the combination of a software feature I started using and some new accessories made me completely reevaluate the iPad Pro as a computer I can use at the end of the workday as well. Basically, this machine is always with me now.

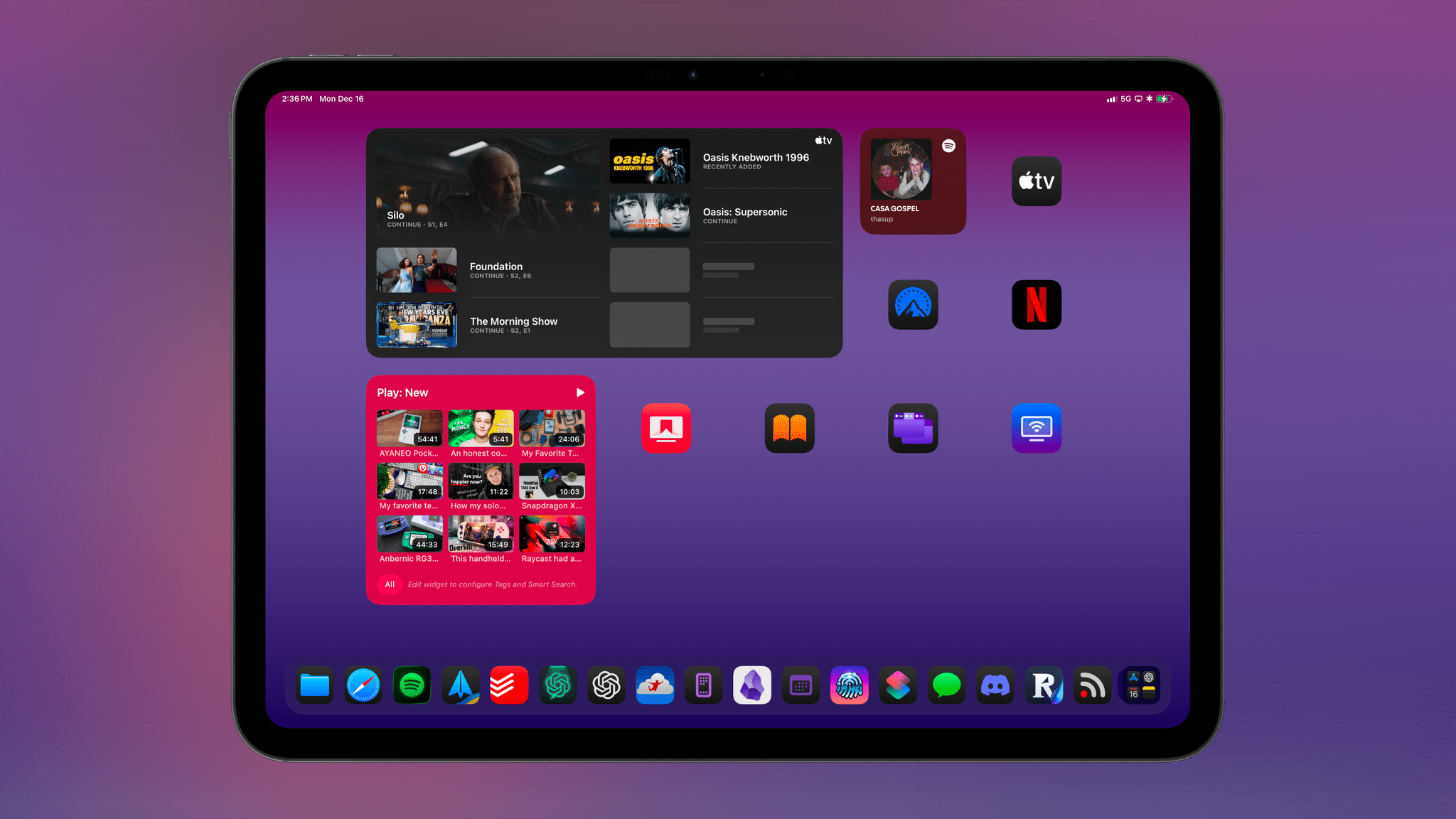

Let’s start with the software. This may sound obvious to several MacStories readers, but I recently began using Focus modes again, and this change alone allowed me to transform my iPad Pro into a different computer at night.

Specifically, I realized that I like to use my iPad Pro with a certain Home and Lock Screen configuration during the day and use a different combo with dark mode icons at night, when I’m in bed and want to read or watch something. So after ignoring them for years, I created two Focus modes: Work Mode and Downtime. The first Focus is automatically enabled every morning at 8:00 AM and lasts until 11:59 PM; the other one activates at midnight and lasts until 7:59 AM.³ This way, I have a couple of hours with a media-focused iPad Home Screen before I go to sleep at night, and when I wake up around 9:00 AM, the iPad Pro is already configured with my work apps and widgets.

I don’t particularly care about silencing notifications or specific apps during the day; all I need from Focus is a consistent pair of Home and Lock Screens with different wallpapers for each. As you can see from the images in this story, the Work Mode Home Screen revolves around widgets for tasks and links, while the Downtime Home Screen prioritizes media apps and entertainment widgets.

This is something I suggested in my iPad mini review, but the idea here is that software, not hardware, is turning my iPad Pro into a third place device. With the iPad mini, the act of physically grabbing another computer with a distinct set of apps creates a clear boundary between the tools I use for work and play; with this approach, software transforms the same computer into two different machines for two distinct times of day.

I also used two new accessories to smooth out the transition from business during the day to relaxation at night with the iPad Pro. A few weeks back, I was finally able to find the kind of iPad Pro accessory I’d been looking for since the debut of the M4 models: a back cover with a built-in kickstand. Last year, I used a similar cover for the M2 iPad Pro, and the idea is the same: this accessory only protects the back of the device, doesn’t have a cover for the screen, and comes with an adjustable kickstand to use the iPad in landscape at a variety of viewing angles.

The reason I wanted this product is simple. This is not a cover I use for protecting the iPad Pro; I only want to attach it in the evening, when I’m relaxing with the iPad Pro on my lap and want to get some reading done or watch some TV. In fact, this cover never leaves my nightstand. When I’m done working for the day, I leave the Magic Keyboard on my desk, bring the iPad Pro into the bedroom, and put it in the cover, leaving it there for later.

I know what you’re thinking: couldn’t I just use a Magic Keyboard for the same exact purpose? Yes, I could. But the thing is, because it doesn’t have a keyboard on the front, this cover facilitates the process of tricking my brain into thinking I’m no longer in “work mode”. Even if I wanted to, I couldn’t easily type with this setup. By making the iPad Pro more like a tablet than a laptop, the back cover – combined with my Downtime Focus and different Home Screen – reminds me that it’s no longer time to get work done with this computer. Once again, it’s all about taking advantage of modularity to transform the iPad Pro into something else – which is precisely what a traditional MacBook could never do.

But I went one step further.

If you recall, a few weeks ago on NPC, my podcast about portable gaming, I mentioned a “gaming pillow” – a strange accessory that promises to provide you with a more comfortable experience when playing with a portable console by combining a small mounting clasp with a soft pillow to put on your lap. Instead of feeling the entire weight of a Steam Deck or Legion Go in your hand, the pillow allows you to mount the console on its arm, offload the weight to the pillow, and simply hold the console without feeling any weight on your hands.

Fun, right? Well, as I mentioned in the episode, that pillow was a no-brand version of a similar accessory that the folks at Mechanism had pre-announced, and which I had pre-ordered and was waiting for. In case you’re not familiar, Mechanism makes a suite of mounting accessories for handhelds, including the popular Deckmate, which I’ve been using for the past year. With the Mechanism pillow, I could combine the company’s universal mounting system for my various consoles with the comfort of the pillow to use any handheld in bed without feeling its weight on my wrists.

I got the Mechanism pillow a few weeks ago, and not only do I love it (it does exactly what the company advertised, and I’ve been using it with my Steam Deck and Legion Go), but I also had the idea of pairing it with the iPad Pro’s back cover for the ultimate iPad mounting solution…in bed.

All I had to do was take one of Mechanism’s adhesive mounting clips and stick it to the back of the aforementioned iPad cover. Now, if I want to use the iPad Pro in bed without having to hold it myself, I can attach the cover to the gaming pillow, then attach the iPad Pro to the cover, and, well, you can see the result in the photo above. Believe me when I say this: it looks downright ridiculous, Silvia makes fun of me every single day for using it, and I absolutely adore it. The pillow’s plastic arm can be adjusted to the height and angle I want, and the whole structure is sturdy enough to hold everything in place. It’s peak laziness and iPad comfort, and it works incredibly well for reading, watching TV, streaming games with a controller in my hands, and catching up on my YouTube queue in Play.

Speaking of streaming games, there is one final – and very recent – addition to my iPad-centric media setup I want to mention: NDI streaming.

NDI (which stands for Network Device Interface) is a streaming protocol created by NewTek that allows high-quality video and audio to be transmitted over a local network in real time. Typically, this is done through hardware (an encoder) that gets plugged into the audio/video source and transmits data across your local network for other clients to connect to and view that stream. The advantages of NDI are its plug-and-play nature (clients can automatically discover NDI streamers on the network), high-bandwidth delivery, and low latency.

We initially covered NDI in the context of game streaming on MacStories back in February, when John explained how to use the Kiloview N40 to stream games to a Vision Pro with better performance and less latency than a typical PlayStation Remote Play or Moonlight environment. In his piece, John covered the excellent Vxio app, which remains the premier utility for NDI streaming on both the Vision Pro and iPad Pro. He ended up returning the N40 because of performance issues on his network, but I’ve stuck with it since I had a solid experience with NDI thanks to my fancy ASUS gaming router.

Since that original story on NDI was published, I’ve upgraded my setup even further, and it has completely transformed how I can enjoy PS5 games on my iPad Pro without leaving my bed at night. For starters, I sold my PS5 Slim and got a PS5 Pro. I wouldn’t recommend this purchase to most people, but given that I sit very close to my monitor to play games and can appreciate the graphical improvements enabled by the PS5 Pro, I figured I’d get my money’s worth with Sony’s latest and greatest PS5 revision. So far, I can confirm that the upgrade has been incredible: I can get the best possible graphics in FFVII Rebirth or Astro Bot without sacrificing performance.

Secondly, I switched from the Kiloview N40 to the bulkier and more expensive Kiloview N60. I did it for a simple reason: it’s the only Kiloview encoder that, thanks to a recent firmware upgrade, supports 4K HDR streaming. The lack of HDR was my biggest complaint about the N40; I could see that colors were washed out and not nearly as vibrant as when I was playing games on my TV. It only seemed appropriate that I would pair the PS5 Pro with the best possible version of NDI encoding out there.

After following developer Chen Zhang’s tips on how to enable HDR input for the N60, I opened the Vxio app, switched to the correct color profile, and was astounded:

The image quality with the N60 is insane. This is Astro Bot being streamed at 4K HDR to my iPad Pro with virtually no latency.

The image above is a native screenshot of Astro Bot being streamed to my iPad Pro using NDI and the Vxio app over my network. Here, let me zoom in on the details even more:

Now, picture this: it’s late at night, and I want to play some Astro Bot or Final Fantasy VII before going to sleep. I grab my PS5 Pro’s DualSense Edge controller⁴, wake up the console, switch the controller to my no-haptics profile, and attach the iPad Pro to the back cover mounted on the gaming pillow. With the pillow on my lap, I can play PS5 games at 4K HDR on an OLED display in front of me, directly from the comfort of my bed. It’s the best videogame streaming experience I’ve ever had, and I don’t think I have to add anything else.

If you had told me years ago that a future story about my iPad Pro usage would wrap up with a section about a pillow and HDR, I would have guessed I’d lost my mind in the intervening years. And here we are.

Hardware Mentioned in This Story

Here’s a recap of all the hardware I mentioned in this story:

- iPad Pro (M4, 11”, with nano-texture glass, 1 TB, Wi-Fi and Cellular)

- Gigabyte M27U monitor

- AirPods Max (USB-C)

- CalDigit TS4 Thunderbolt dock

- Sound Devices MixPre-3 II

- Neumann KMS 105 microphone

- Sony ZV-E10 II camera

- SD card (for MixPre and camera)

- Dummy battery for camera

- Neewer 12” ring light with stand and cold shoe mount

- Apple SD card adapter

- Spigen head strap for Vision Pro

- TineeOwl iPad Pro back cover

- Gaming pillow

- Mechanism Deckmate bundle

- ASUS ROG Rapture WiFi 6E Gaming Router GT-AXE16000 central router + ASUS AXE7800 satellite

- PS5 Pro

- Kiloview N60 NDI encoder

Back to the iPad

After months of research for this story, and after years of experiments trying to get more work done from an iPad, I’ve come to a conclusion:

Sometimes, you can throw money at a problem on the iPad and find a solution that works.

I can’t stress this enough, though: with my new iPad workflow, I haven’t really fixed any of the problems that afflict iPadOS. I found new solutions thanks to external hardware; realistically, I have to thank USB-C more than iPadOS for making this possible. The fact that I’m using my iPad Pro for everything now doesn’t mean I approve of the direction Apple has taken with iPadOS or the slow pace of its development.

As I was wrapping up this story, I found myself looking back and reminiscing about my iPad usage over the past 12 years. One way to look at it is that I’ve been trying to get work done on the iPad for a third of my entire life. I started in 2012, when I was stuck in a hospital bed and couldn’t use a laptop. I persisted because I fell in love with the iPad’s ethos and astounding potential; the idea of using a computer that could transform into multiple things thanks to modularity latched onto my brain over a decade ago and never went away.

I did, however, spend a couple of years in “computer wilderness” trying to figure out if I was still the same kind of tech writer and if I still liked using the iPad. I worked exclusively with macOS for a while. Then I secretly used a Microsoft Surface for six months and told no one about it. Then I created a hybrid Mac/iPad device that let me operate two platforms at once. For a brief moment, I even thought the Vision Pro could replace my iPad and become my main computer.

I’m glad I did all those things and entertained all those thoughts. When you do something for a third of your life, it’s natural to look outside your comfort zone and ask yourself if you really still enjoy doing it.

And the truth is, I’m still that person. I explored all my options – I frustrated myself and my readers with the not-knowing for a while – and came out at the end of the process believing even more strongly in what I knew years ago:

The iPad Pro is the only computer for me.

Even with its software flaws, scattershot evolution, and muddled messaging over the years, only Apple makes this kind of device: a thin, portable slab of glass that can be my modular desktop workstation, a tablet for reading outside, and an entertainment machine for streaming TV and videogames. The iPad Pro does it all, and after a long journey, I found a way to make it work for everything I do.

I’ve stopped using my MacPad, I gave up thinking the Vision Pro could be my main computer, and I’m done fooling myself into thinking that, if I wanted to, I could get my work done on Android or Windows.

I’m back on the iPad. And now more than ever, I’m ready for the next 12 years.

- NPC listeners know this already, but I recently relocated my desktop-class eGPU (powered by an NVIDIA 4090) to the living room. There are two reasons behind this. First, when I want to play PC games with high performance requirements, I can do so with the most powerful device I own on the best gaming monitor I have (my 65” LG OLED television). And second, I have a 12-meter USB4 cable that allows me to rely on the eGPU while playing on my Legion Go in bed. Plus, thanks to their support for instant sleep and resume, both the PS5 and Switch are well-suited for the kind of shorter play sessions I want to have in the office. ↩︎

- Remember when split view for tabs used to be a Safari-only feature? ↩︎

- Oddly enough, despite the fact that I set all my Focus modes to sync between devices, the Work Mode Focus wouldn’t automatically activate on my iPad Pro in the morning (though it would on the iPhone). I had to set up a secondary automation in Shortcuts on the iPad Pro to make sure it switches to that Focus before I wake up. ↩︎

- When you’re streaming with NDI, you don’t pair a controller with your iPad since you’re merely observing the original video source. This means that, in the case of my PS5 Pro, its controller needs to be within range of the console when I’m playing in another room. Thankfully, the DualSense has plenty of range, and I haven’t run into any input latency issues. ↩︎