I enjoyed this explanation by The Verge’s Tom Warren on how Microsoft’s Phone Link app – which has long allowed Android users to connect their smartphones to a Windows PC – has been updated to support iOS notifications and sending texts via iMessage. From the story:

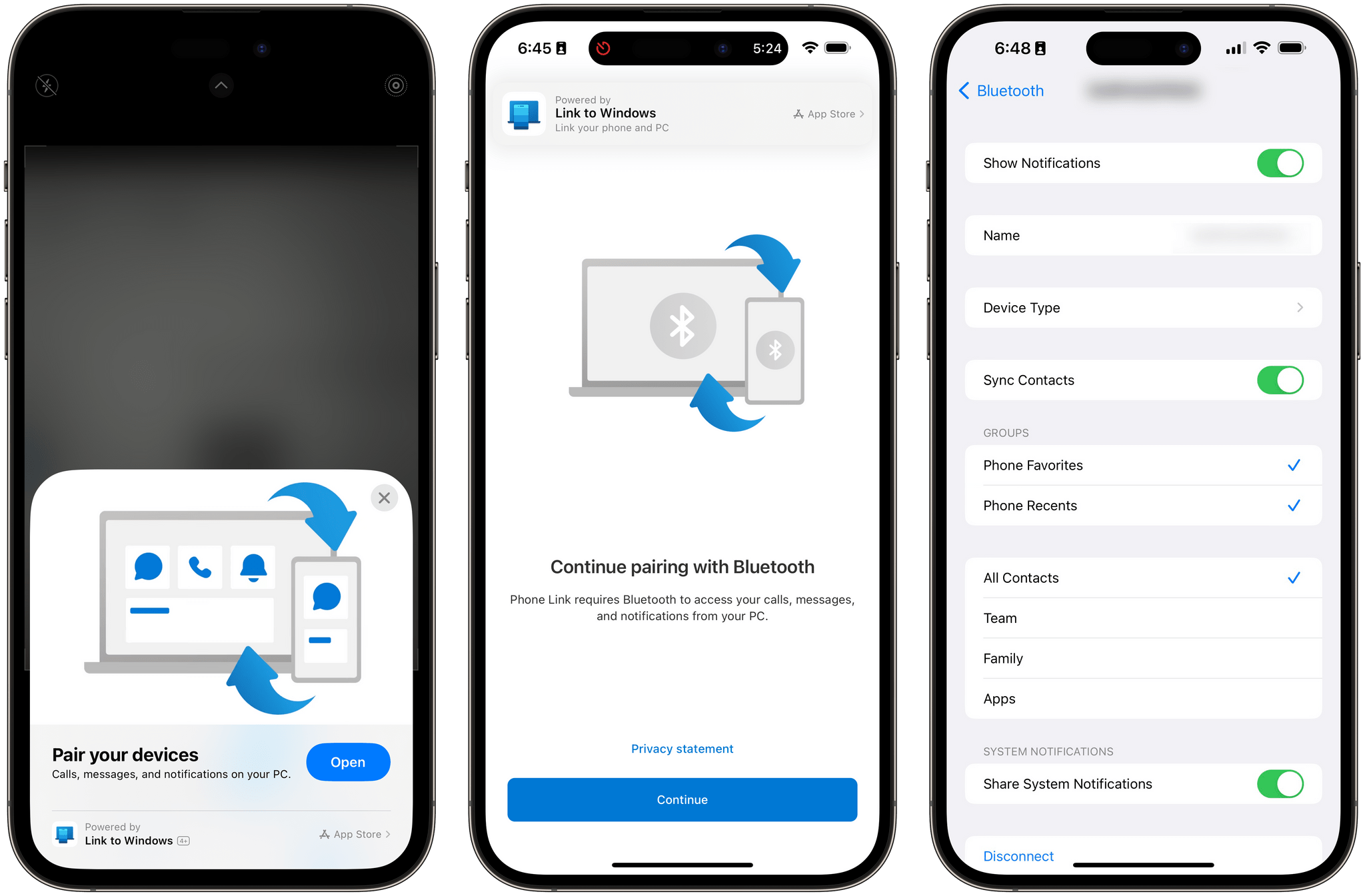

> The setup process between iPhone and PC is simple. Phone Link prompts you to scan a QR code from your iPhone to link it to Windows, which automatically opens a lightweight App Clip version of Phone Link on iOS to complete the Bluetooth pairing. Once paired, you have to take some important steps to enable contact sharing over Bluetooth, enable “show notifications,” and allow system notifications to be shared to your PC over Bluetooth. These settings are all available in the Bluetooth options for the device you paired to your iPhone.

And:

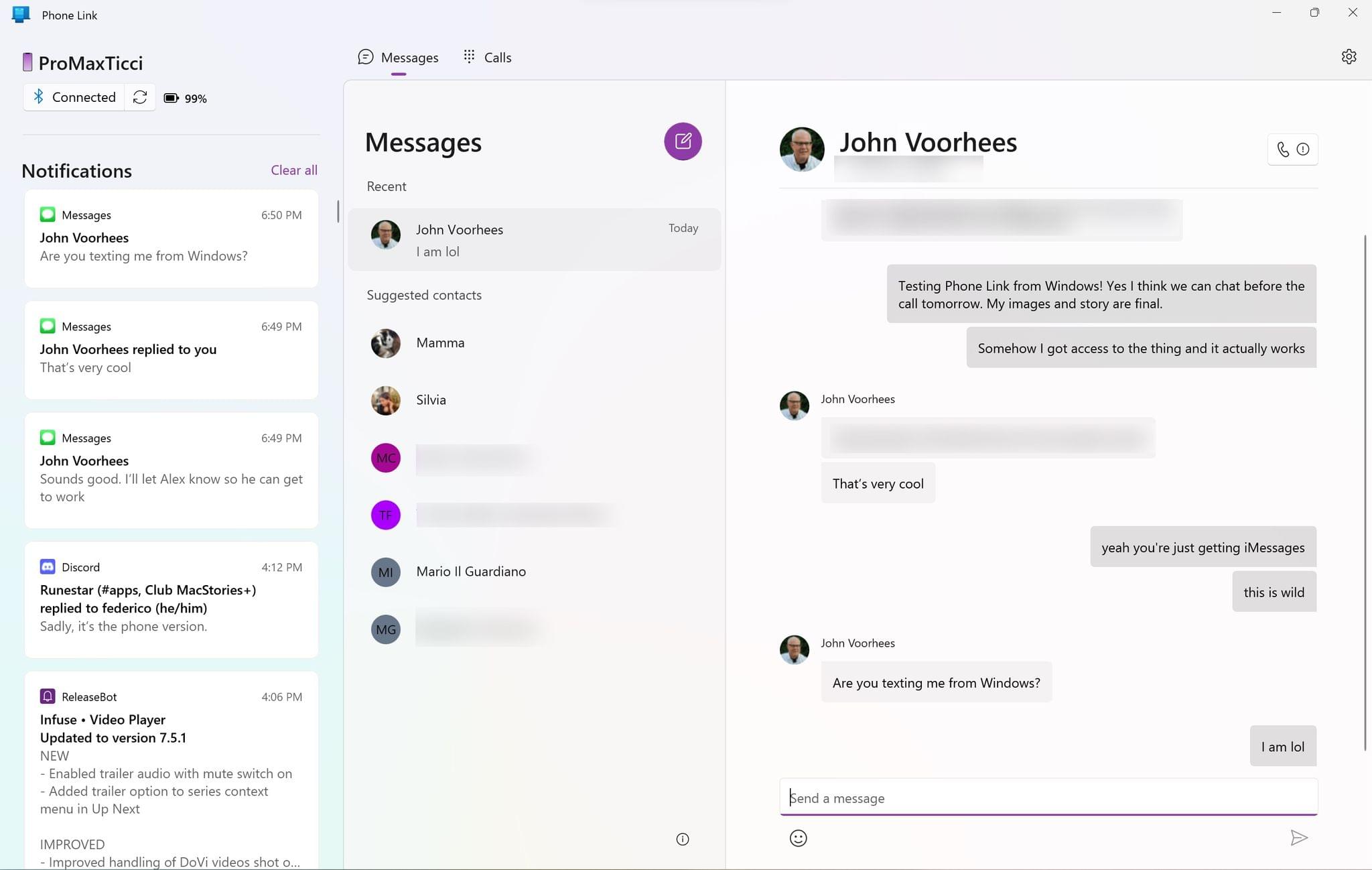

> Microsoft’s Phone Link works by sending messages over Bluetooth to contacts. Apple’s iOS then intercepts these messages and forces them to be sent over iMessage, much like how it will always automatically detect when you’re sending a message to an iPhone and immediately switch it to blue bubbles and not the green ones sent via regular SMS. Phone Link intercepts the messages you receive through Bluetooth notifications and then shows these in the client on Windows.

I got access to the updated version of Phone Link on my PC today. The integration is pretty wild, and it actually works, albeit with several limitations.

First, the setup process is entirely based on an App Clip by Microsoft, which is the first time I’ve seen and used an App Clip in real life. My understanding is that this works similarly to how an iPhone can pair with an old-school Bluetooth car system: the iPhone and PC pair via Bluetooth, and you can then give the PC access to your notifications and contacts from iOS’ Bluetooth settings. This is the same UI I have for my Kia Sportage’s system, which uses regular Bluetooth to pair with my iPhone and can also display contacts and missed calls.

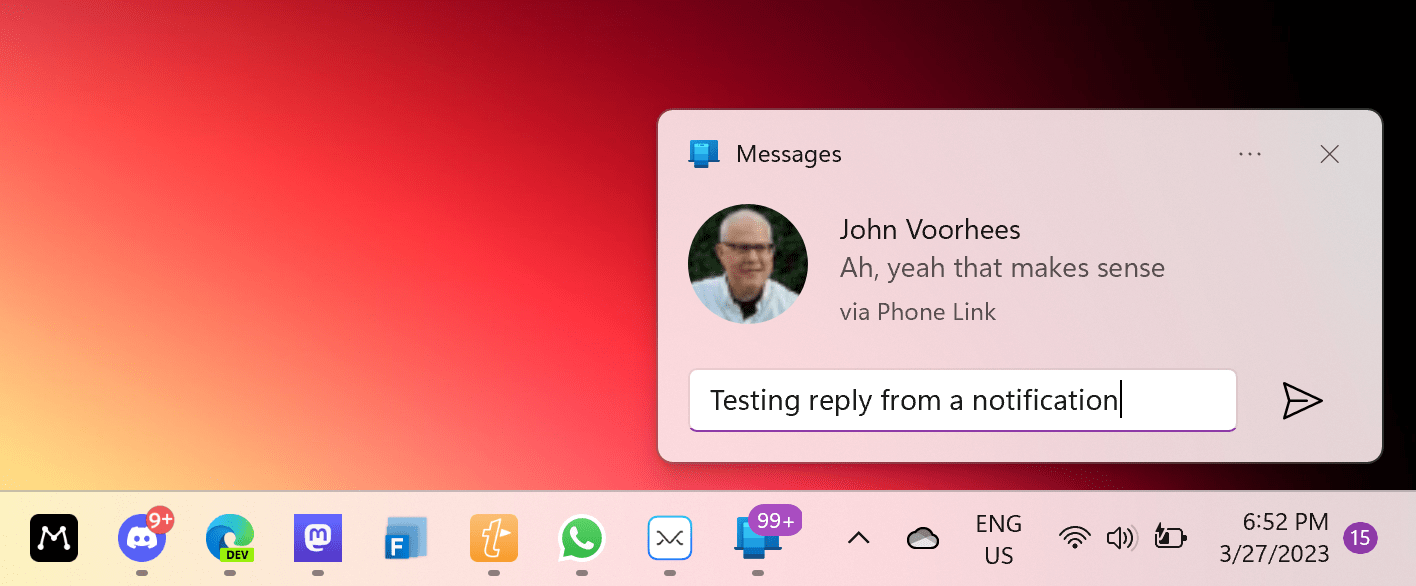

The difference between my car and Phone Link, of course, is that with Phone Link you can type text messages from a PC and they will be sent as iMessages on iOS. This bit of dark magic comes with a lot of trade-offs (check out Warren’s full story for the details on this), but it works for individual contacts. I’ve been able to start a conversation with John, reply to his messages from Windows notifications, and even send him URLs, and they were all correctly “intercepted” by iOS and sent over as iMessages. I’ve also been impressed by the ability to clear notifications from a PC and have them go away on iOS’ Lock Screen immediately.

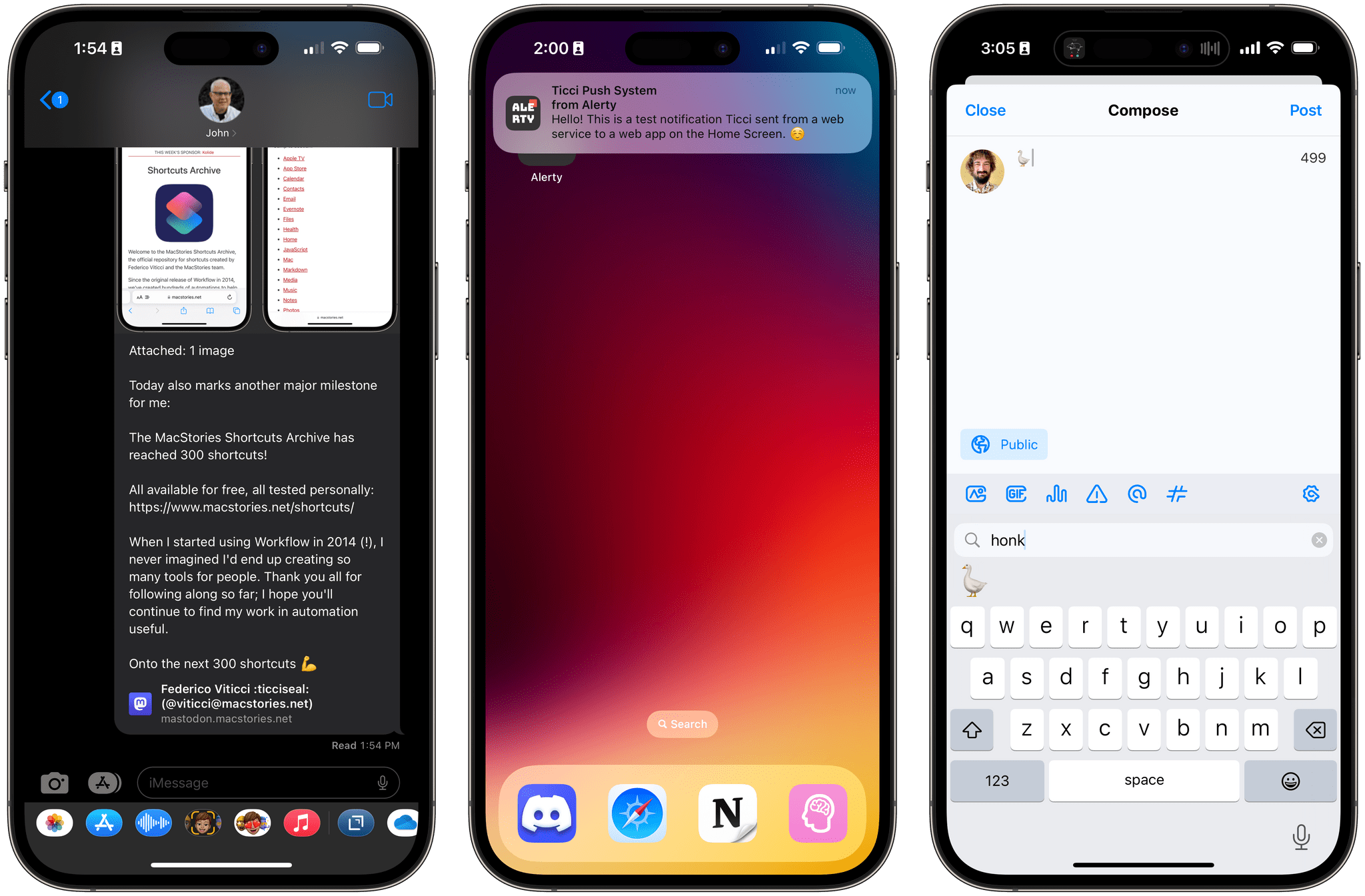

The limitations of Phone Link for iPhone users mean you’ll always have to fall back to the actual iOS device for something – whether it’s posting in an iMessage group, sending a photo, or acting on notifications – but for quick messages, glancing at notifications, and clearing them, I think this integration is more than good enough.