I enjoyed this look by M.G. Siegler at the current AI landscape. He evaluates the positions of all the big players and tries to predict who will come out on top based on what we can see today. I've been thinking about this a lot lately. The space is changing so rapidly, with weekly announcements and rumors, that it's challenging to keep up with all the latest models, app integrations, and reasoning modes. But one thing seems certain: with 400 million weekly users, ChatGPT is winning in the public eye.

I was especially captivated by this analogy, and I wish I'd thought of it myself:

> Professionals and power users will undoubtedly pay for, and get value out of, multiple models and products. But just as with the streaming wars, consumers are not going to buy all of these services. And unlike that war, where all of the players had differentiating content, again, the AI services are reaching some level of parity (for consumer use cases). So whereas you might have three or four streaming services that you pay for, you will likely just have one main AI service. Again, it's more like search in that way.

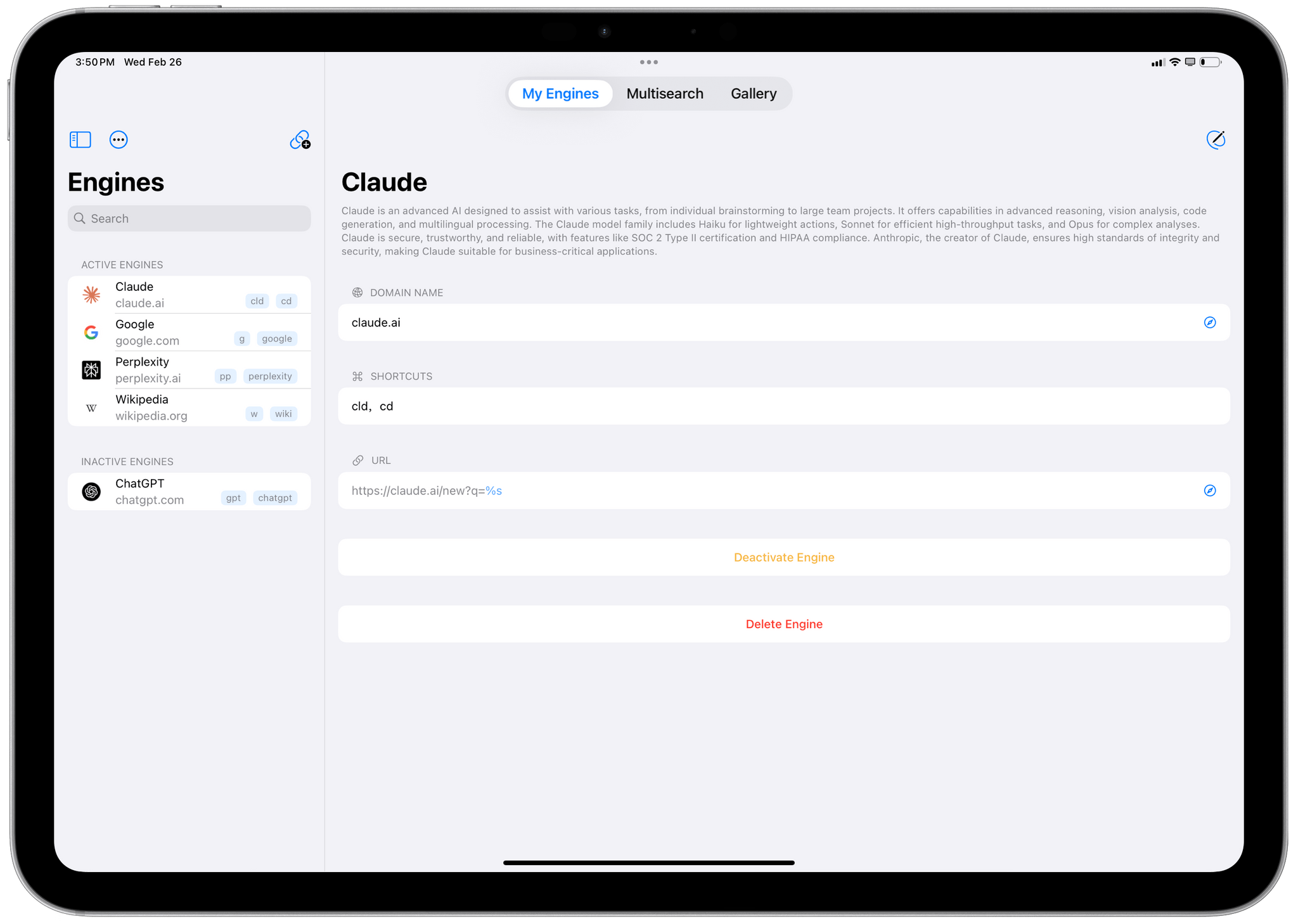

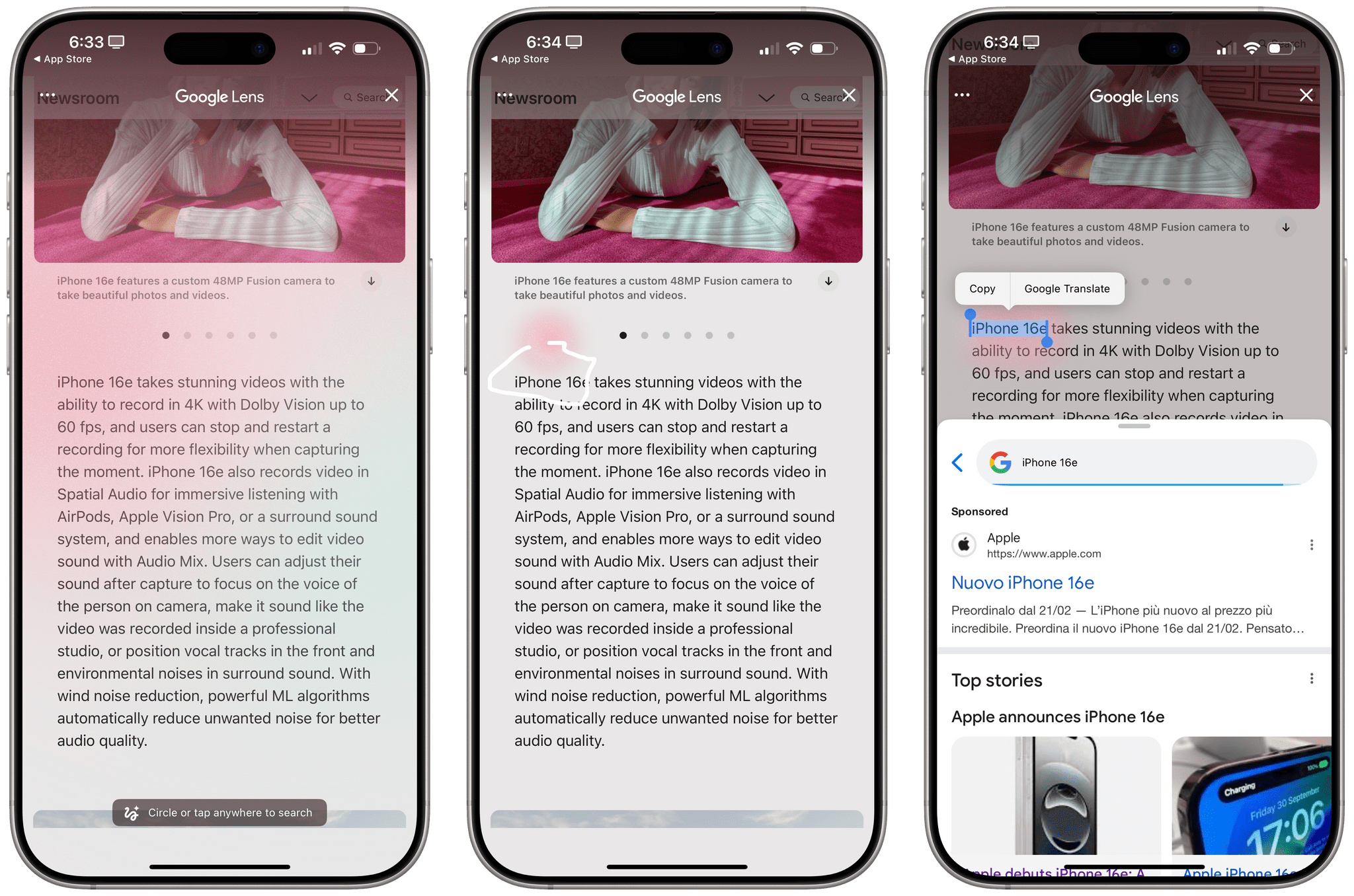

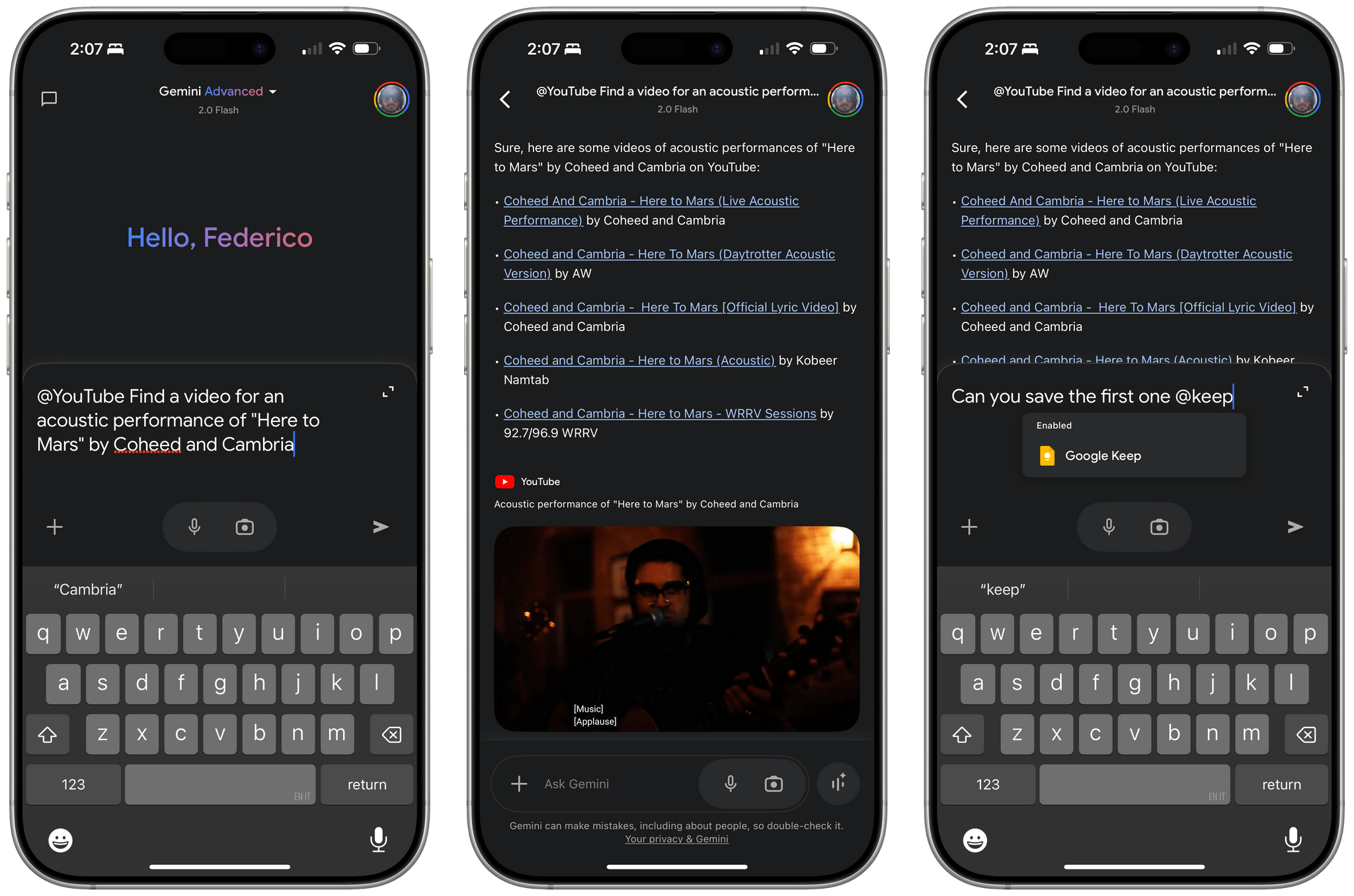

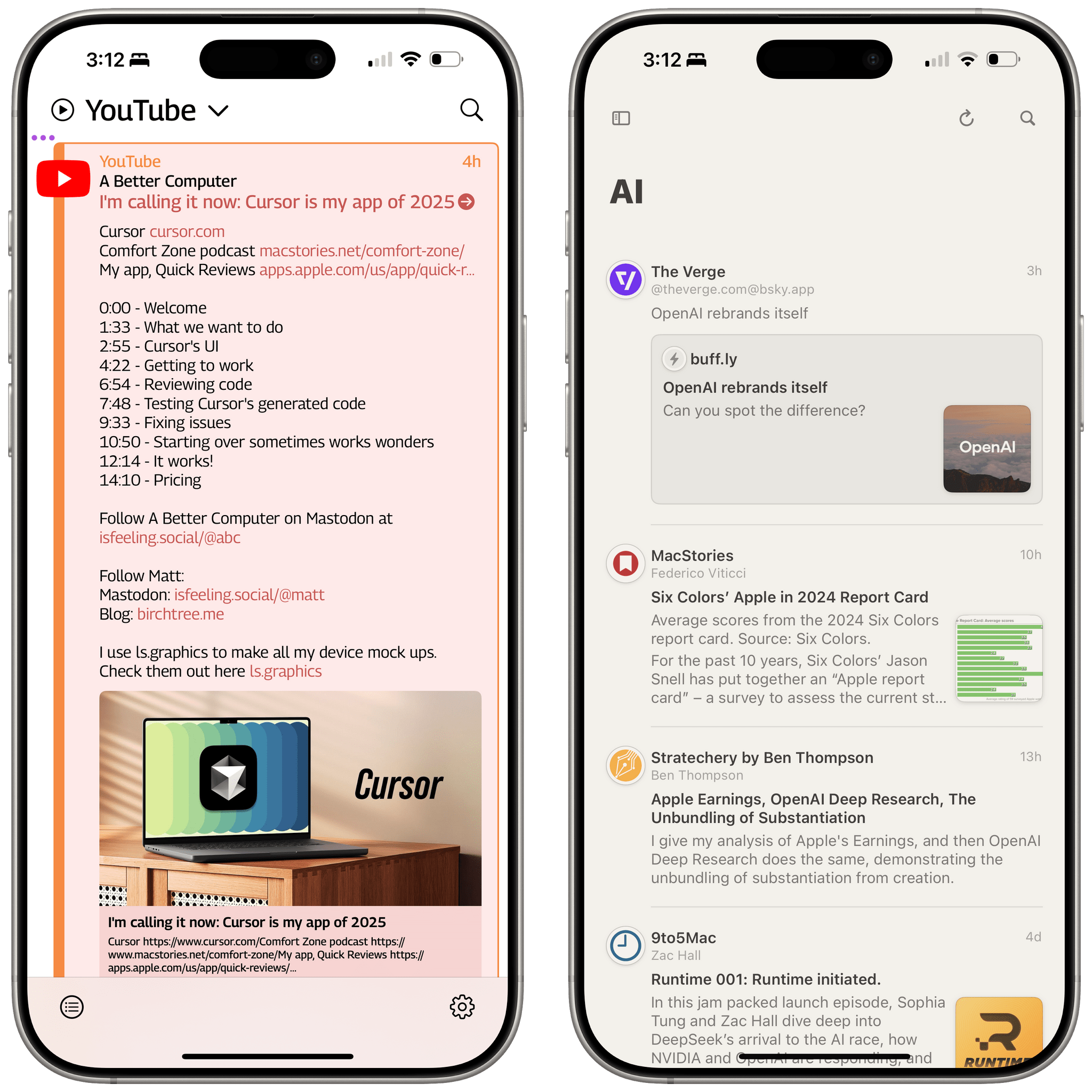

I see the parallels between different streaming services and different AI models, and I wonder if this is the sort of diversification that happens before inevitable consolidation. Right now, I find ChatGPT's Deep Research superior to Google Gemini's, but Google has a more fascinating and useful ecosystem story. Claude is better at coding, editing prose, and following complex instructions than any other model I've tested, but it feels limited by a lack of extensions and web search (for now). As a result, I find myself jumping between different LLMs for different tasks. And that's not to mention the more specific products I use on a regular basis, such as NotebookLM, Readwise Chat, and Whisper. Could it be that, just as I've always appreciated distinct native apps for specific tasks, I now also prefer dedicated AIs for different purposes?

I continue to think that, long term, it’ll once again come down to iOS versus Android, as it’s always been. But I also believe that M.G. Siegler is correct: until the dust settles (if it ever does), power users will likely use multiple AIs in lieu of one AI to rule them all. And for regular users, at least for the time being, that one AI is ChatGPT.

![](https://cdn.macstories.net/img_0925-1738676881715.webp)