Among the perks of a Club MacStories+ and Club Premier membership are special columns published periodically by me and John. In this week’s Macintosh Desktop Experience column, John explained how widgets in macOS Sonoma are the glue between apps and services that makes the Mac feel even more like part of an integrated ecosystem of platforms and devices:

The Mac’s place in users’ computing lives has changed a lot since Steve Jobs returned to Apple and reimagined the Mac as a digital hub. Those days were marked by comparatively weak mobile phones, MP3 players, camcorders, and pocket digital cameras that benefitted from being paired with the Mac and Apple’s iLife suite.

The computing landscape is markedly different now. The constellation of gadgets surrounding the Mac in Jobs’ digital hub have all been replaced by the iPhone and iPad – powerful, portable computers in their own right. That’s been a seismic shift for the Mac. Today, the Mac is in a better place than it’s been in many years thanks to Apple silicon, but it’s no longer the center of attention. Instead, it sits alongside the iPhone and iPad as capable computing peers.

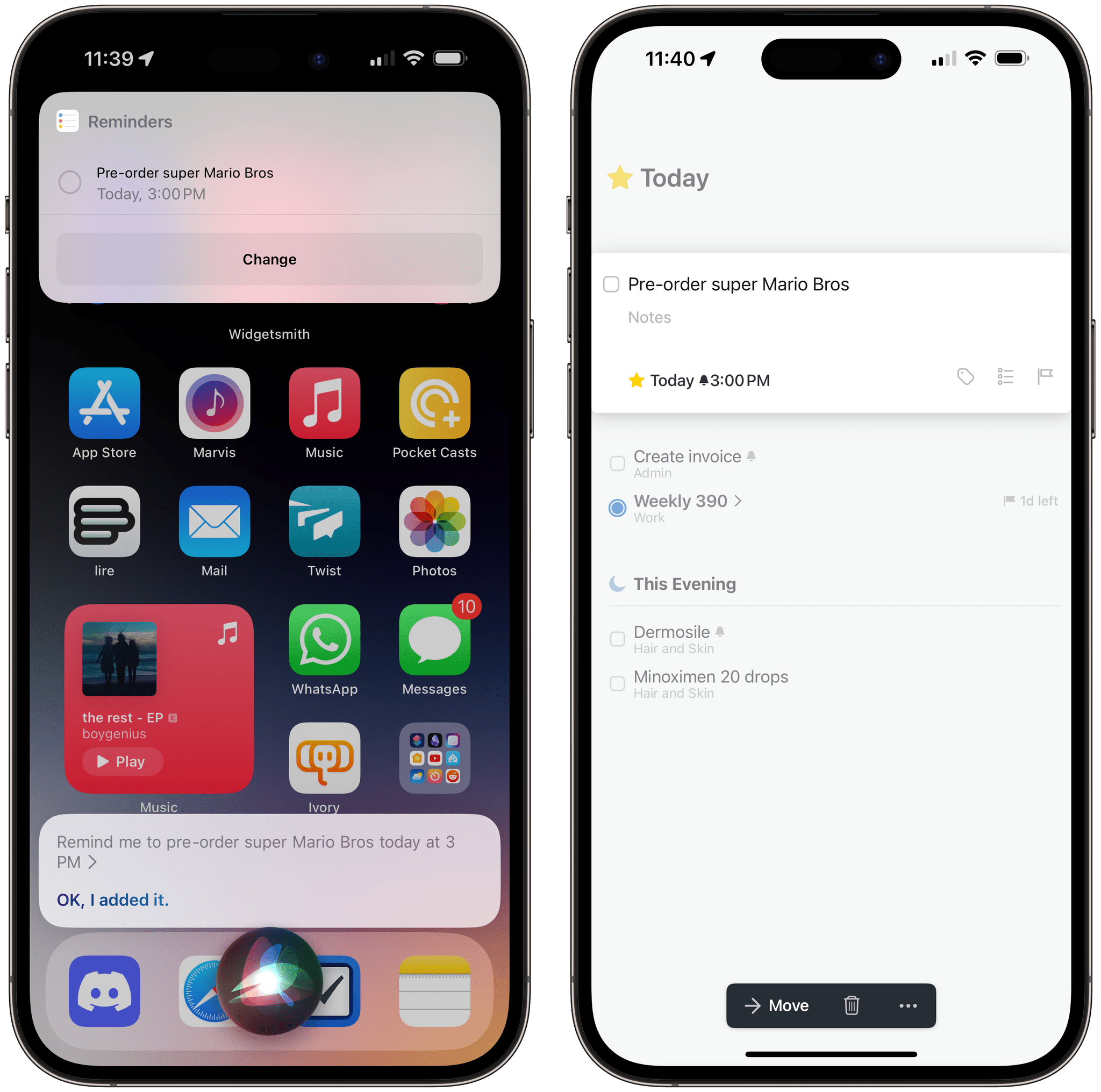

What hasn’t changed from the digital hub days is the critical role played by software. In 2001, iLife’s apps enabled the digital hub, but in 2023, the story is about widgets.

Stay until the end of the story and don’t miss the photo of John’s desk setup, which looks wild at first, but actually makes a lot of sense in the context of widgets.

Macintosh Desktop Experience is one of the many perks of a Club MacStories+ and Club Premier membership and a fantastic way to recognize the modern reality of macOS as well as get the most out of your Mac thanks to John’s app recommendations, workflows, and more.

