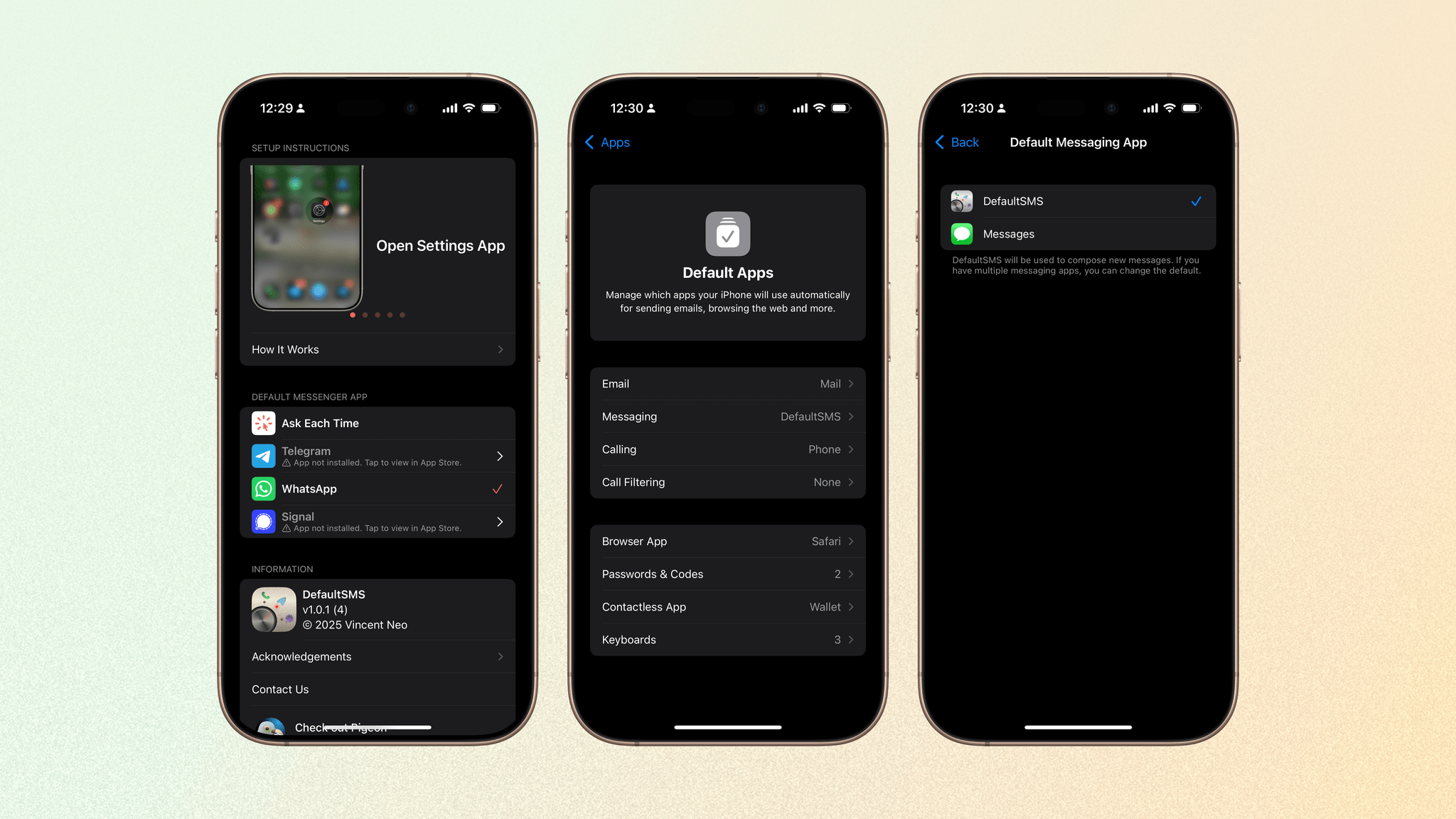

In iOS 18.2, Apple introduced the ability for users to set their default apps for messaging, calling, call filtering, passwords, contactless payments, and keyboards. Previously, it was only possible to specify default apps for mail and browsing, so this was a big step forward.

While apps like 1Password quickly took advantage of these new changes, there have been few to no takers in the calling, contactless payments, and messaging categories. Enter DefaultSMS, a new app that, as far as I can tell, seems to be the first to make use of the default messaging app setting.

DefaultSMS is not a messaging app. Instead, it uses this new setting to bounce the user into the messaging app of their choice when they tap on a phone number elsewhere within iOS. Telegram, WhatsApp, and Signal are the options currently supported in the app.

Initial setup is quick. First, you select the messaging app you would like to use within DefaultSMS. Then, you head to Settings → Apps → Default Apps → Messaging and select DefaultSMS instead of Messages.

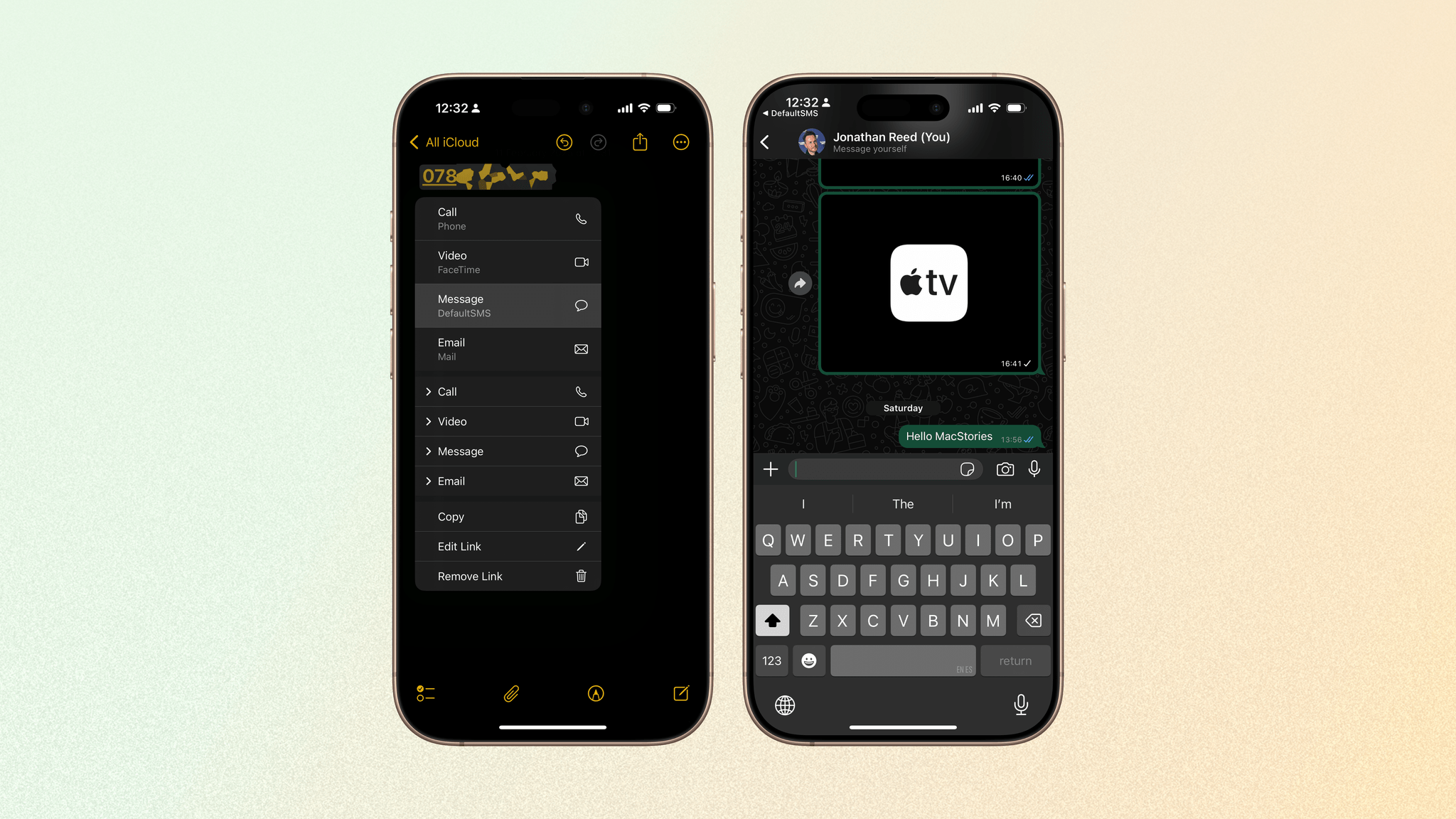

Now, whenever you tap on a phone number from a website, email, note, or other source within iOS, the system will recognize the sms:// link and open a new message to that number in your default messaging app, now specified as DefaultSMS. The app will then bounce you into your messaging app of choice to start the conversation. The developer says the process is 100% private, with DefaultSMS retaining none of this information.
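To make the hand-off more concrete, here is a minimal, language-neutral sketch of the kind of link rewriting a utility like this could perform. It is not the developer's code: the function name `redirect_sms_link` and the `LINK_TEMPLATES` table are hypothetical, and the use of each service's public web link format (`wa.me`, `t.me`, `signal.me`) is an assumption about how such a redirect might be built, not a description of how DefaultSMS actually does it.

```python
from urllib.parse import unquote

# Hypothetical table: public click-to-chat link formats for each service.
# Whether DefaultSMS uses these exact links is an assumption.
LINK_TEMPLATES = {
    "whatsapp": "https://wa.me/{digits}",
    "telegram": "https://t.me/+{digits}",
    "signal": "https://signal.me/#p/+{digits}",
}


def redirect_sms_link(sms_url: str, target_app: str) -> str:
    """Turn an sms: link into a chat link for the chosen messaging app."""
    scheme, sep, rest = sms_url.partition(":")
    if not sep or scheme.lower() != "sms":
        raise ValueError(f"not an sms link: {sms_url!r}")

    # Accept both the sms:+1555... and sms://+1555... spellings.
    if rest.startswith("//"):
        rest = rest[2:]

    # Keep only the first recipient; drop any ?body=..., extra
    # recipients after a comma, and ;parameters.
    for delimiter in ("?", ",", ";"):
        rest = rest.split(delimiter, 1)[0]

    # Decode percent-escapes, then strip everything except digits.
    # This assumes the number is already in full international format.
    digits = "".join(ch for ch in unquote(rest) if ch.isdigit())
    if not digits:
        raise ValueError(f"no phone number found in: {sms_url!r}")

    template = LINK_TEMPLATES.get(target_app.lower())
    if template is None:
        raise ValueError(f"unsupported app: {target_app!r}")
    return template.format(digits=digits)


if __name__ == "__main__":
    print(redirect_sms_link("sms:+15551234567", "whatsapp"))
    # https://wa.me/15551234567
```

Note that this sketch only works for numbers written with a country code. A local number tapped on a web page would need to be normalized first, which is one reason a real implementation is likely to be more involved.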

It’s worth pointing out a few things about the app:

- You can only message people who already use the messaging app you have selected.

- If someone sends you an SMS, it will still be delivered to the Messages app.

- Once you start a conversation, you will be messaging from the app you have chosen (such as WhatsApp), not via SMS.

So why does this app exist? I put this question to the developer, Vincent Neo, who said, “The focus of the app is more towards countries where a significant part of the population already prefers a specific platform very frequently, such that users are very likely to prefer that over other platforms (including SMS), similar to your case, where everyone you know has WhatsApp.”

Quite simply, DefaultSMS allows you to choose which app you want to use to start a conversation when you tap a phone number, rather than always reverting to Messages. The app also highlights a flaw in the phrase “default messaging app”: there are still no APIs for apps to receive SMS messages. Until those are added, we will have to rely on clever third-party utilities like DefaultSMS to get us halfway there.

DefaultSMS is available on the App Store for $0.99.
