Alongside macOS Monterey, Apple today released iOS and iPadOS 15.1 – the first major updates to the operating systems introduced last month. Don’t expect a large collection of changes from this release, though: 15.1 mostly focuses on enabling SharePlay (which was announced at WWDC, then postponed to a later release a few months ago), rolling Safari back to a reasonable design, and bringing a few tweaks for the Camera app and spatial audio. Let’s take a look.

SharePlay

The big-ticket item of iOS and iPadOS 15.1 is SharePlay, which is a way for iPhone and iPad users to have “synchronized experiences” with others when using certain apps during a FaceTime call. I tested SharePlay with FaceTime over the past week; while I continue to believe this is the kind of “pandemic feature” that would have been a lot more useful to more people during the lockdowns of 2020, it is quite a technical achievement, and it’s been nicely implemented by Apple. Its only downside, frankly, is that it’s a year too late.

But let’s start from the beginning. At a high level, SharePlay is a way for you to listen to, watch, or collaborate on something together with other people while a FaceTime call is active. This is the most important point to understand about SharePlay, and one that confused a few MacStories readers over the summer: SharePlay isn’t an asynchronous, iCloud-based collaboration feature similar to shared Notes or Reminders; it’s a FaceTime feature that takes place within a FaceTime call. Call participants can pause, play, rewind, or otherwise control what is being played over SharePlay. Apple famously demoed SharePlay by watching Apple TV+ and listening to Apple Music together, and those are the kinds of experiences you can look forward to testing right after updating to iOS 15.1.

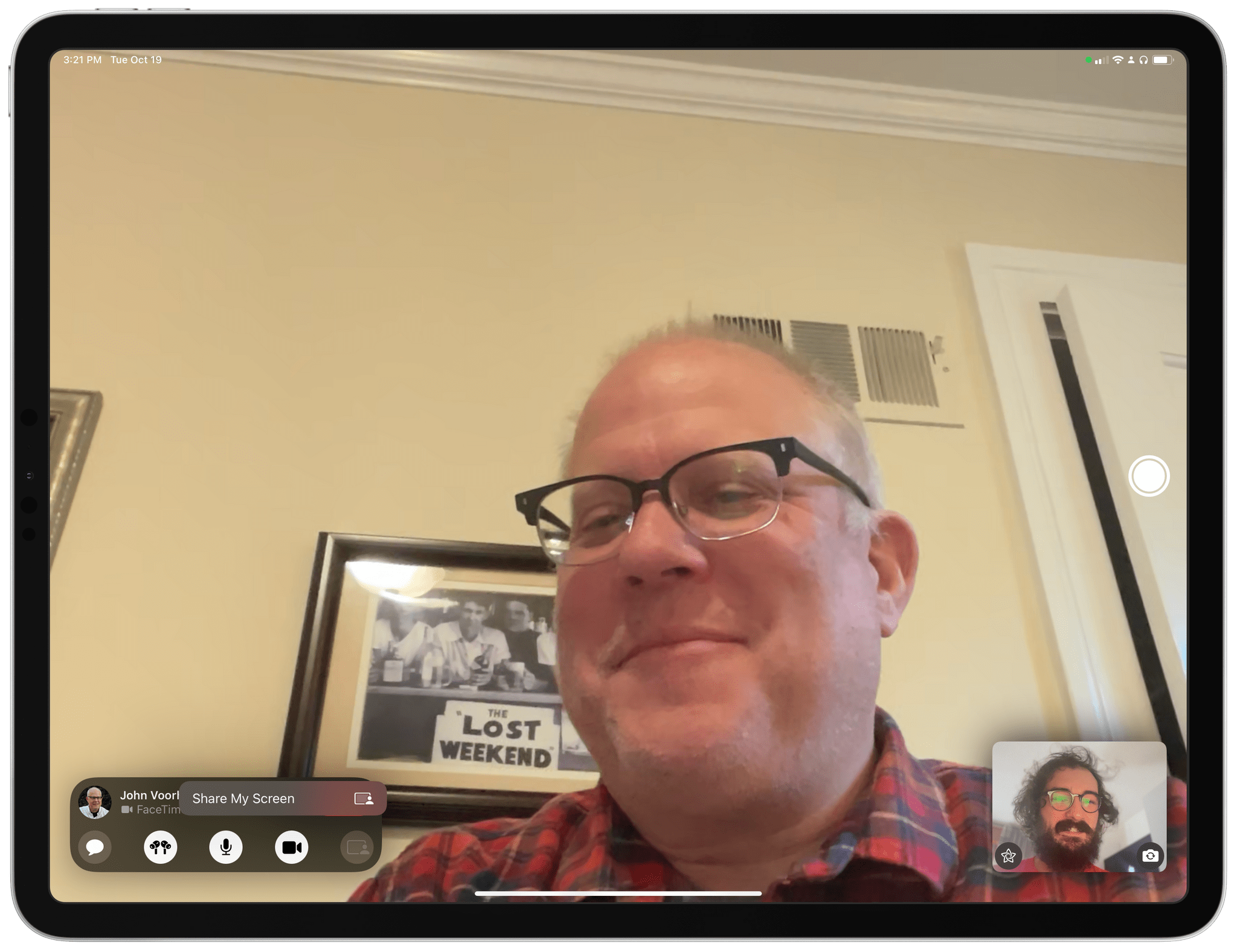

I think, however, that more than TV or Music, the sleeper hit that makes for the best SharePlay demo is a new button that has been added to the main FaceTime UI: screen sharing.

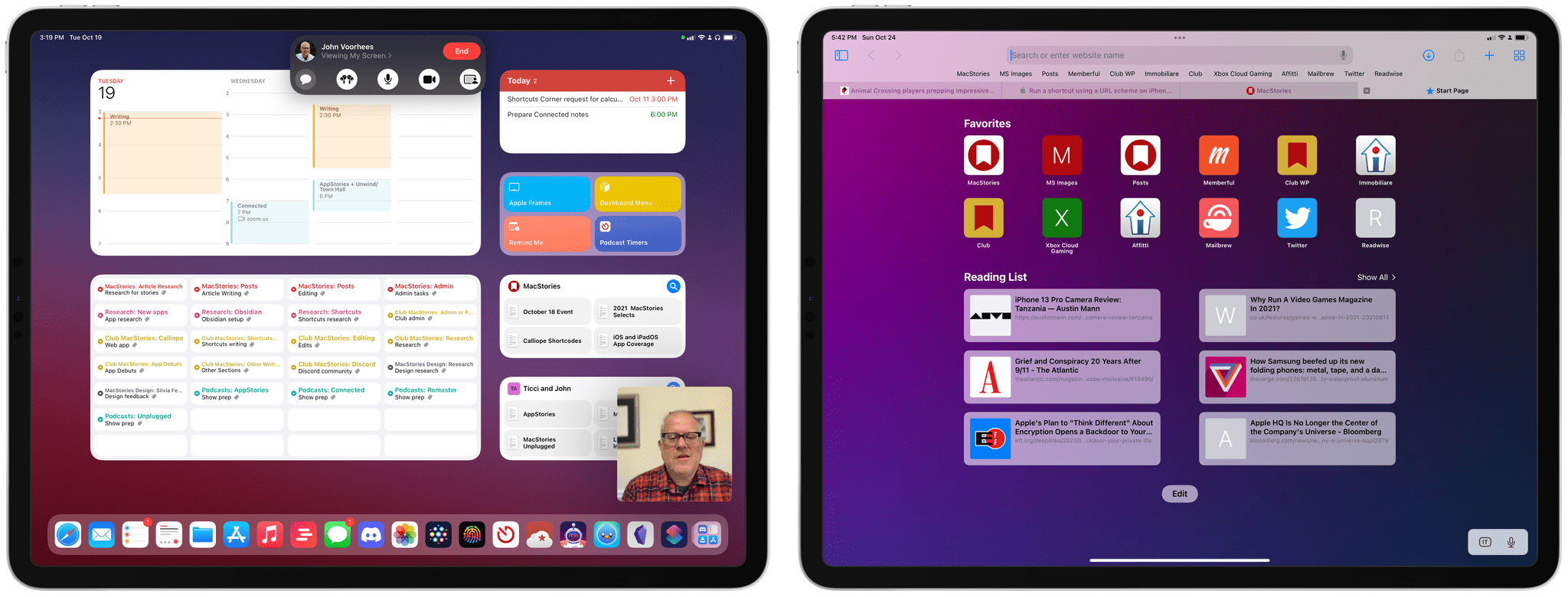

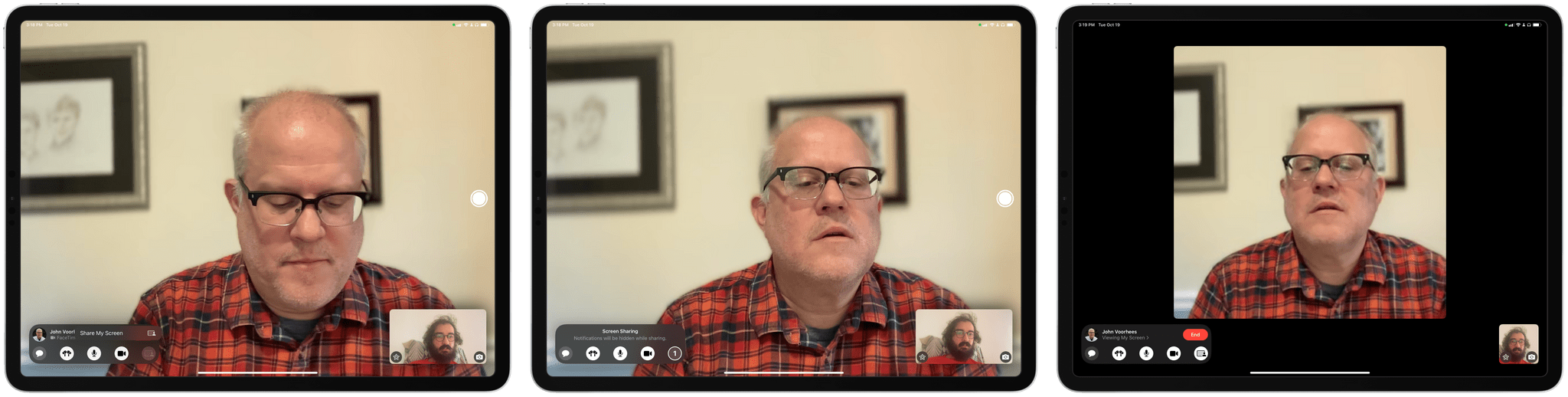

Powered by SharePlay, the new rectangle-with-a-person button in FaceTime lets you share your screen with other people on a FaceTime call. When you start sharing your screen, FaceTime will play a countdown and tell you that notifications will be hidden while your screen is being shared. You can then exit the FaceTime app and show other people whatever you want on your iPhone or iPad; they will see everything you’re doing on the device. You can tap a new purple screen sharing icon in the status bar to bring up a contextual FaceTime menu (new in iOS and iPadOS 15) that tells you who’s viewing the screen and gives you controls to stop screen sharing and tweak audio and microphone settings.

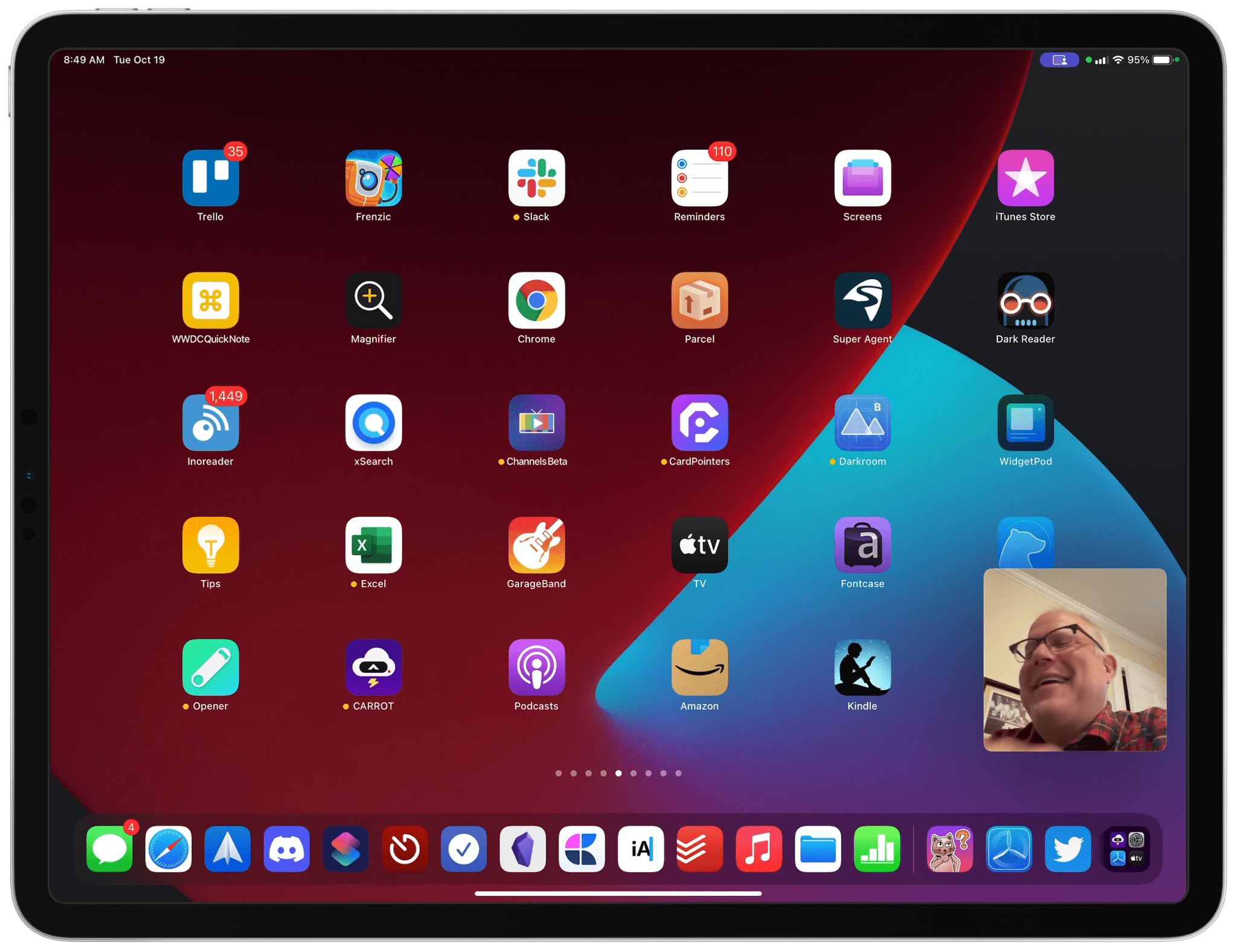

In iOS and iPadOS 15.1, you’ll find a new option to share your screen in FaceTime (pictured above, bottom left corner of the screen).

If you’re on the receiving end of a screen being shared and you’re not inside the FaceTime app, you’ll get a special notification that someone started sharing their screen; if you’re in FaceTime, the shared screen will pop up in Picture in Picture within the call, and you can expand it to full-screen. Both of these UI elements, the special notification and Picture in Picture inside FaceTime, are also used for other SharePlay interactions I’ll describe below.

When you’re watching someone else’s screen, you still see them in FaceTime’s Picture in Picture mode. Here, the number of apps and unread badges suggests only one thing: this is John’s Home Screen.

In my tests with screen sharing in FaceTime over Wi-Fi, image quality from John’s iPad was great, with minimal degradation that did not prevent me from reading small text such as text inside widgets on John’s Home Screen. When I was watching John’s screen, I could still see him in a floating window in the corner of the FaceTime UI.

I had a feeling this would be the case, but after testing it, I’m convinced that screen sharing will be the most popular and useful SharePlay feature for all Apple users. Screen sharing built into iOS and iPadOS will be incredible for tech support with family members or friends who are having issues with their devices; starting with iOS 15.1, you can just tell them to hop on FaceTime, press the rectangle icon, and you’ll be able to see their screen. Whoever thought of building this feature inside FaceTime at Apple should get an award. I love it, and I look forward to solving my mom’s iPhone problems with it.

I wish I could be as effusive about the other built-in SharePlay modes for listening to music or watching movies and TV shows together on FaceTime. They’re…fine. Both modes are technically impressive, but ultimately feel like gimmicks that were devised in a different era of the pandemic to help people spend time together, remotely. I’m sure there will be legitimate use cases for both SharePlay integrations for people who live abroad and, say, can’t see their friends and family in real life for long periods of time; I just don’t think such a potential, occasional use of SharePlay will justify continued investment by Apple going forward or yield significant third-party developer adoption.

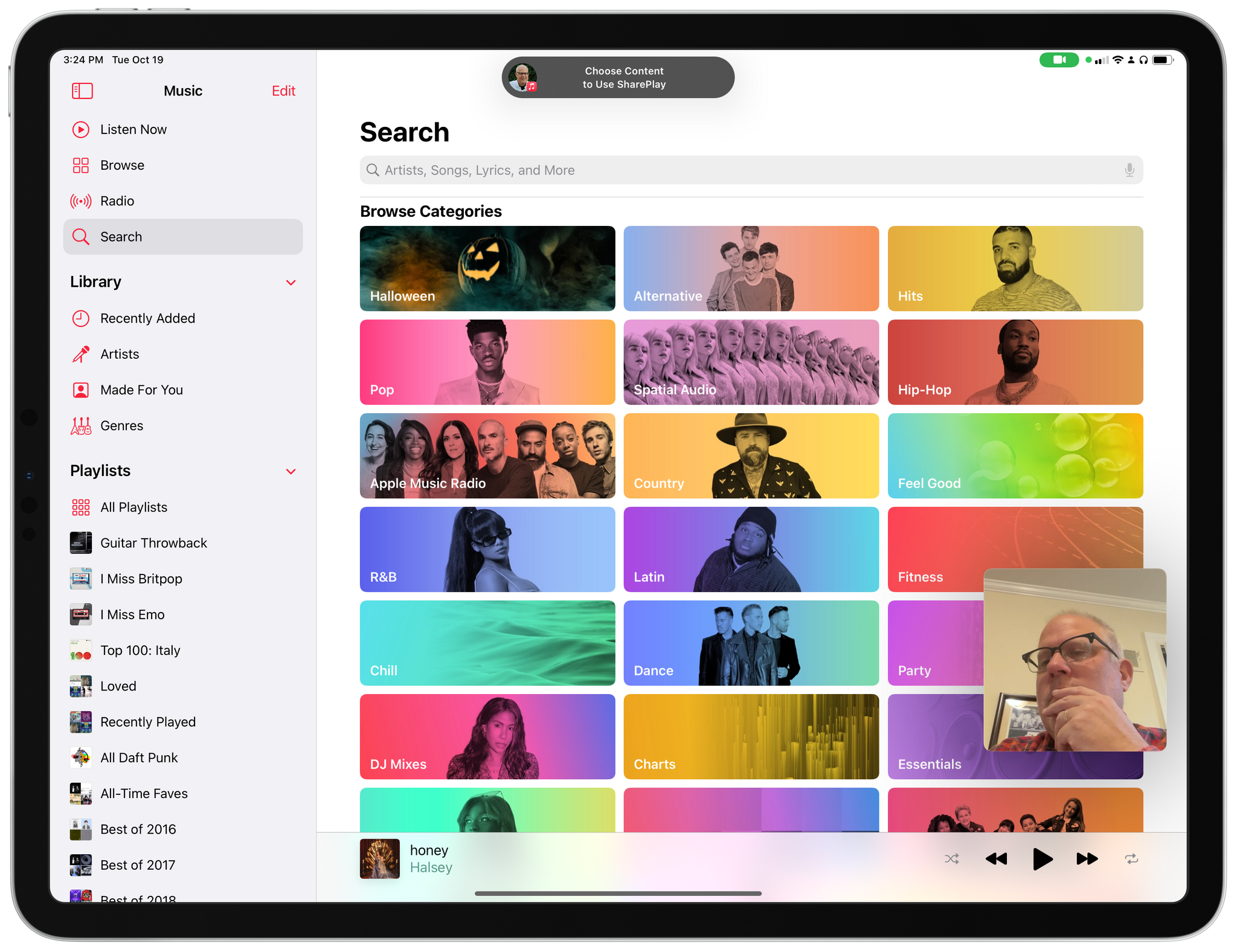

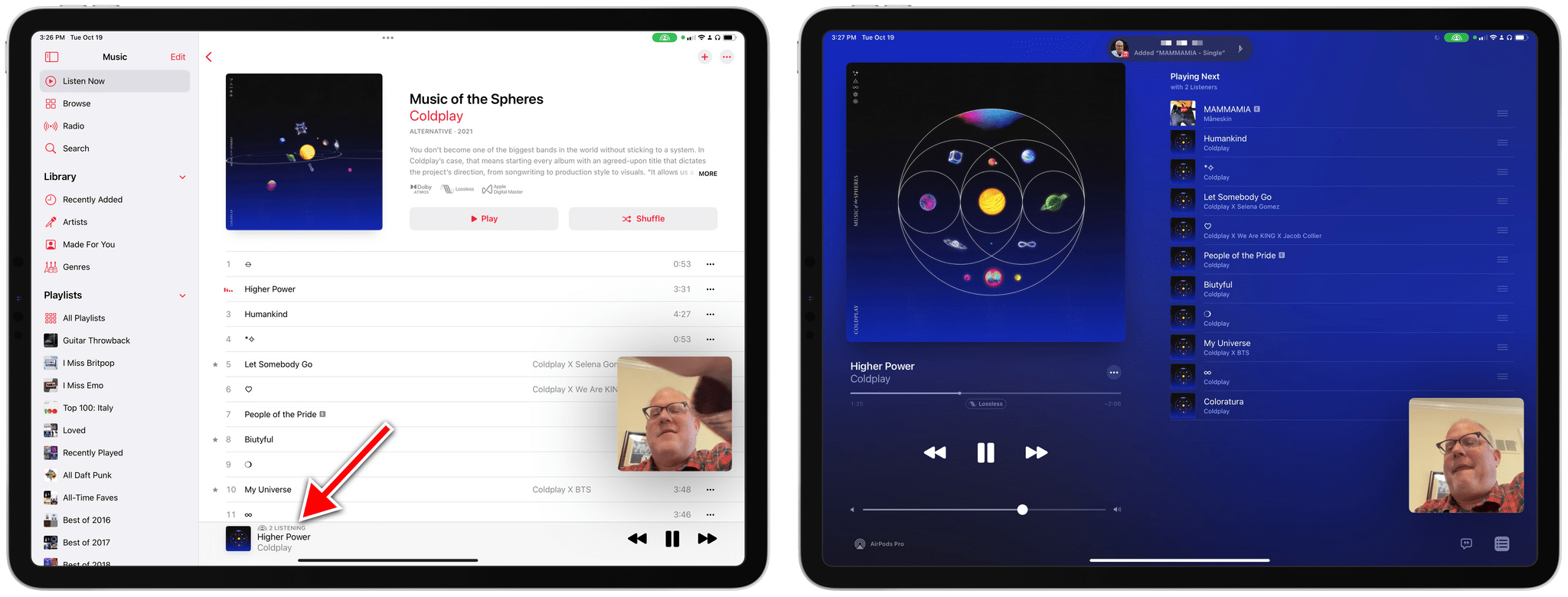

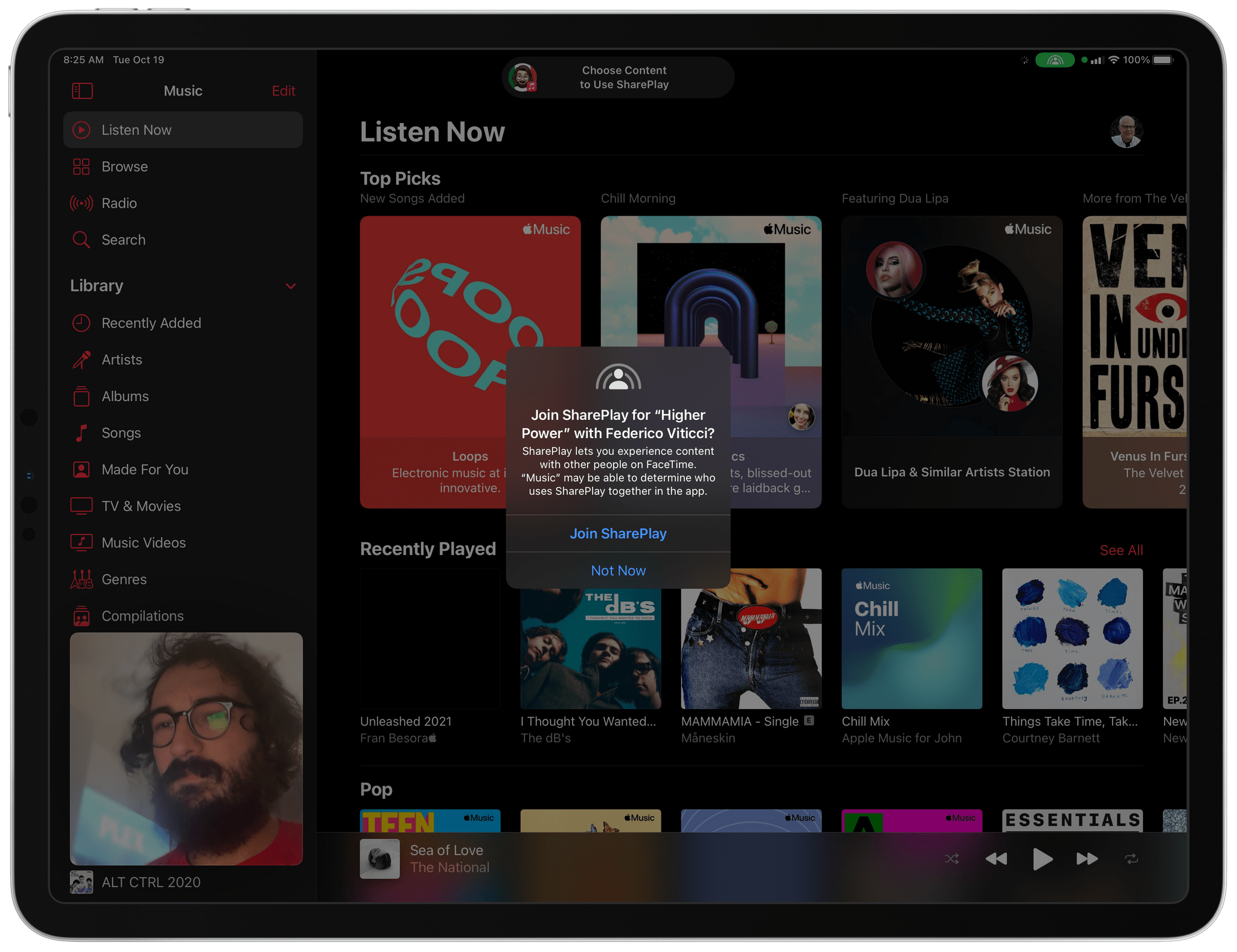

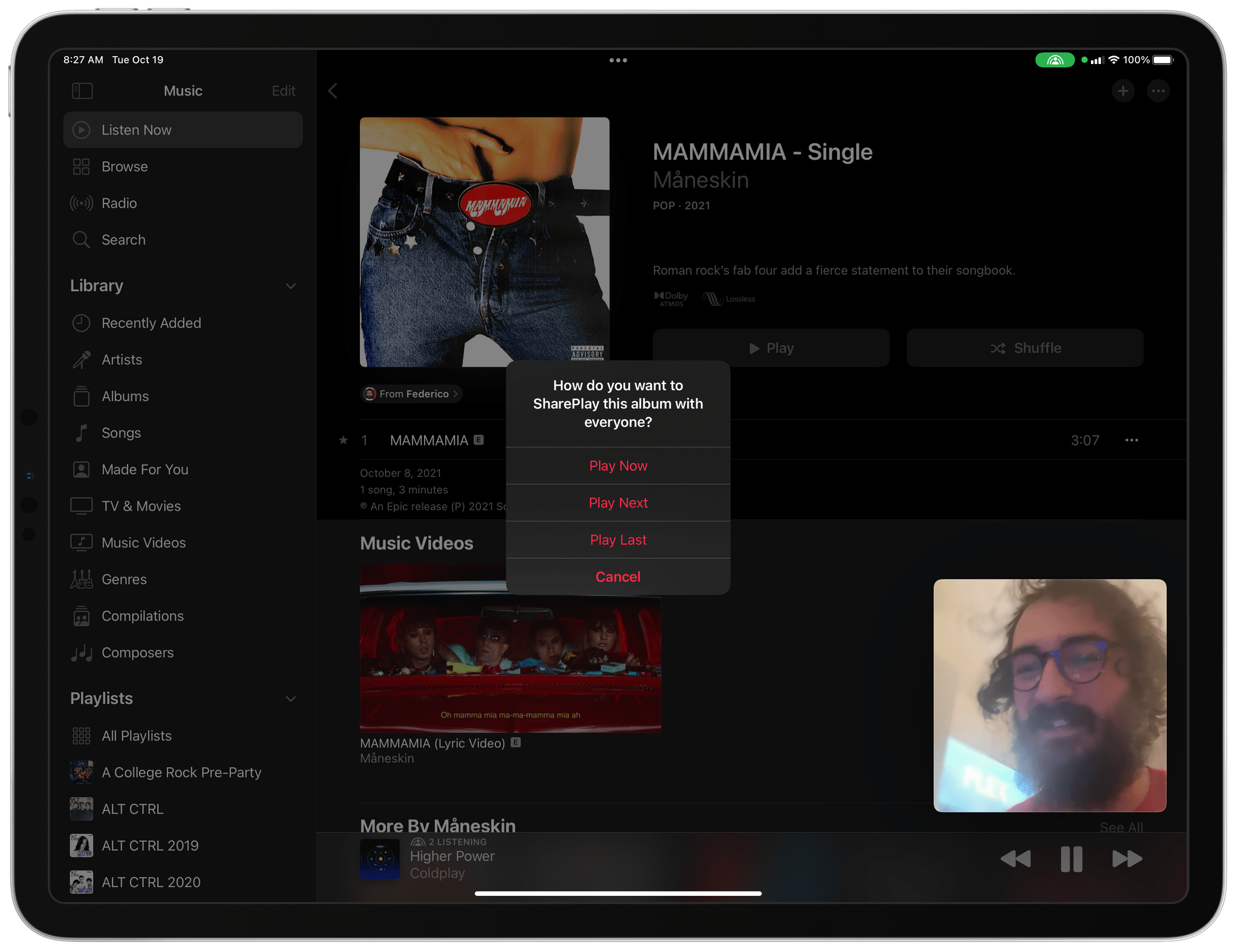

When you open the Music app while a FaceTime call is active, you have a couple of different ways to start a SharePlay activity. First, you’ll get an alert (it’s a toast-style alert that pops down from the top of the screen, similar to Apple Pencil notifications or the gesture-based copy and paste menu) that confirms you can choose content to enjoy with other people over SharePlay. This style of alert is used throughout SharePlay, including compatible third-party apps, to display activity such as paused media or sessions started or ended during the call.

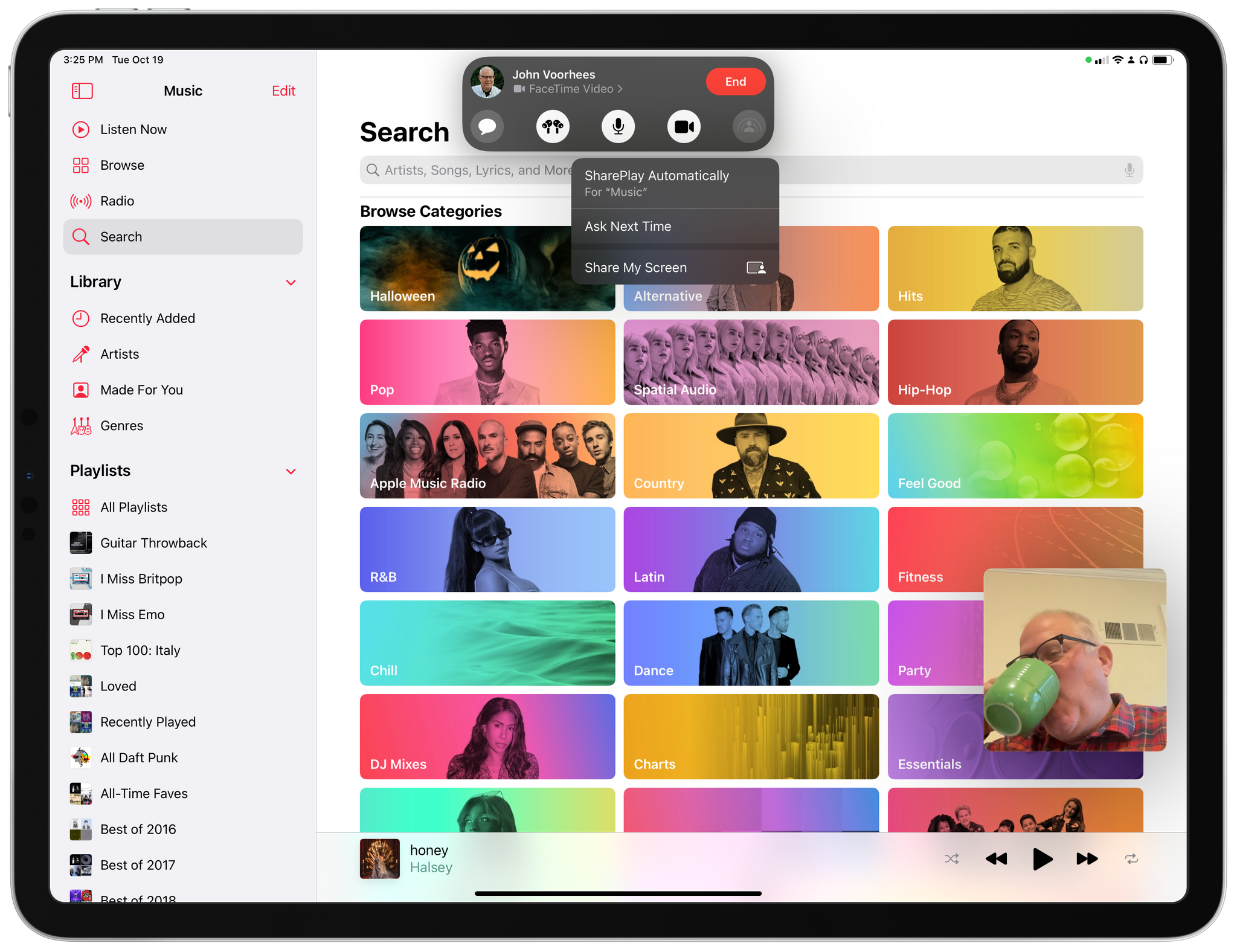

At any point, you can also tap the FaceTime icon in the status bar to invoke the system-wide FaceTime menu, which, starting with iOS and iPadOS 15.1, features a prominent SharePlay button. This button presents a sub-menu with a global option to share your screen, plus a choice between sharing content from the current app over SharePlay automatically or being asked the next time you start playing something.

The idea here, I guess, is that Apple wants to make it easy for a ‘call host’ to select the ‘Share Automatically’ option, which will continuously share whatever they pick in the current app over SharePlay. In Music, this meant I was able to click different albums in my Listen Now page, and they started playing on John’s iPad because FaceTime was sharing them automatically.

In my tests last week, I was impressed by SharePlay’s reliability and Apple’s implementation in Music. I was able to start and end “activities” (i.e. start and stop specific music albums or playlists), and John could do the same on his device. When we were listening together, the mini player at the bottom of the Music app displayed a label that said ‘2 listening’, which was a nice touch. Remarkably, our Up Next queue was also shared, so John could add songs to my Music app’s queue, and vice versa.

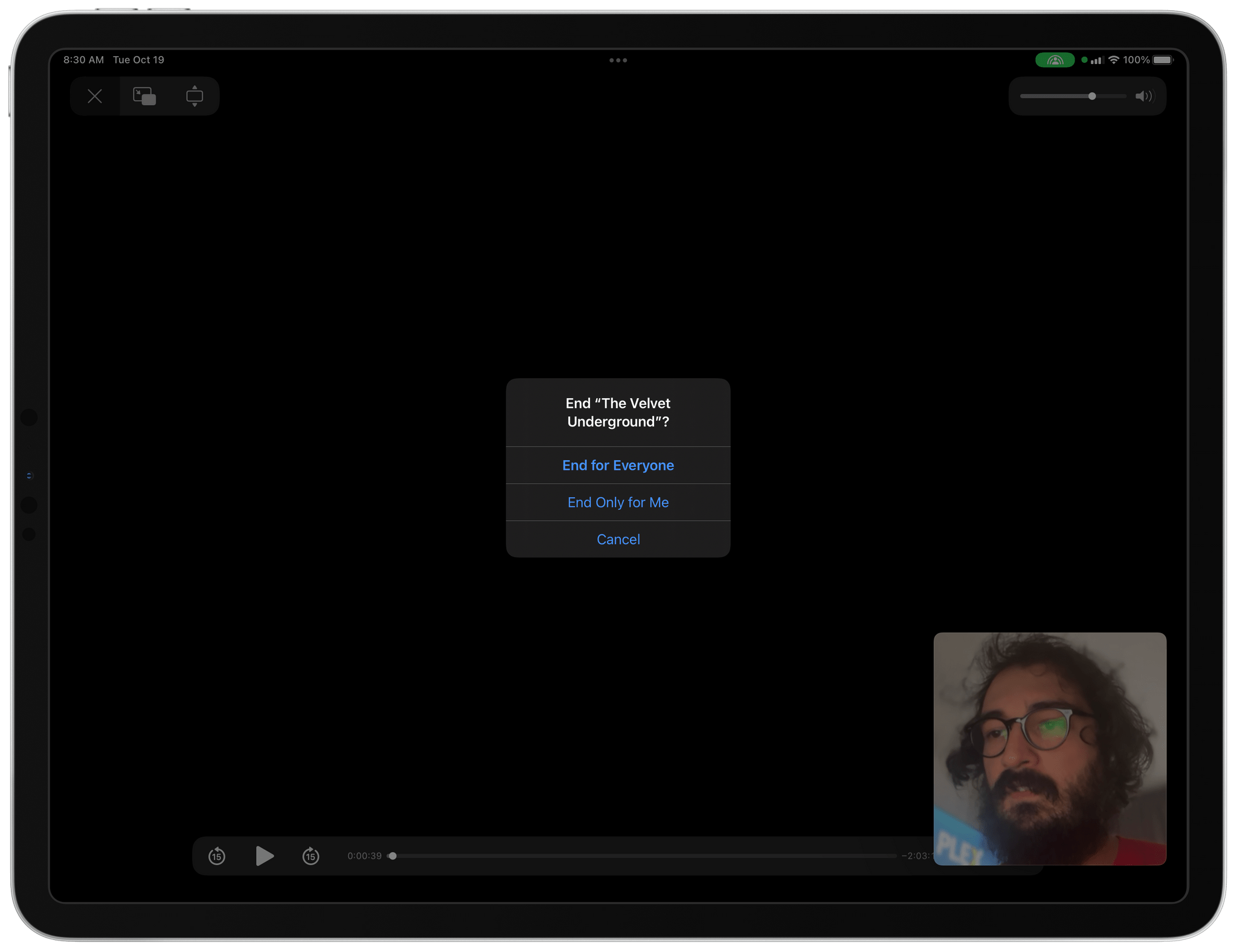

And there’s more: if you need to leave a FaceTime call but don’t want to end the current activity for everyone else, you can do so by stopping the activity and choosing ‘End Only for Me’. This will be useful for those times when you have a call with multiple participants listening to an album or watching a TV show together but you need to leave early.

Apple even considered the fact that people may still be talking while listening to music over FaceTime, so the Music app will automatically lower the volume when your friends are talking. The company calls this ‘smart volume’, and it worked well in my tests.

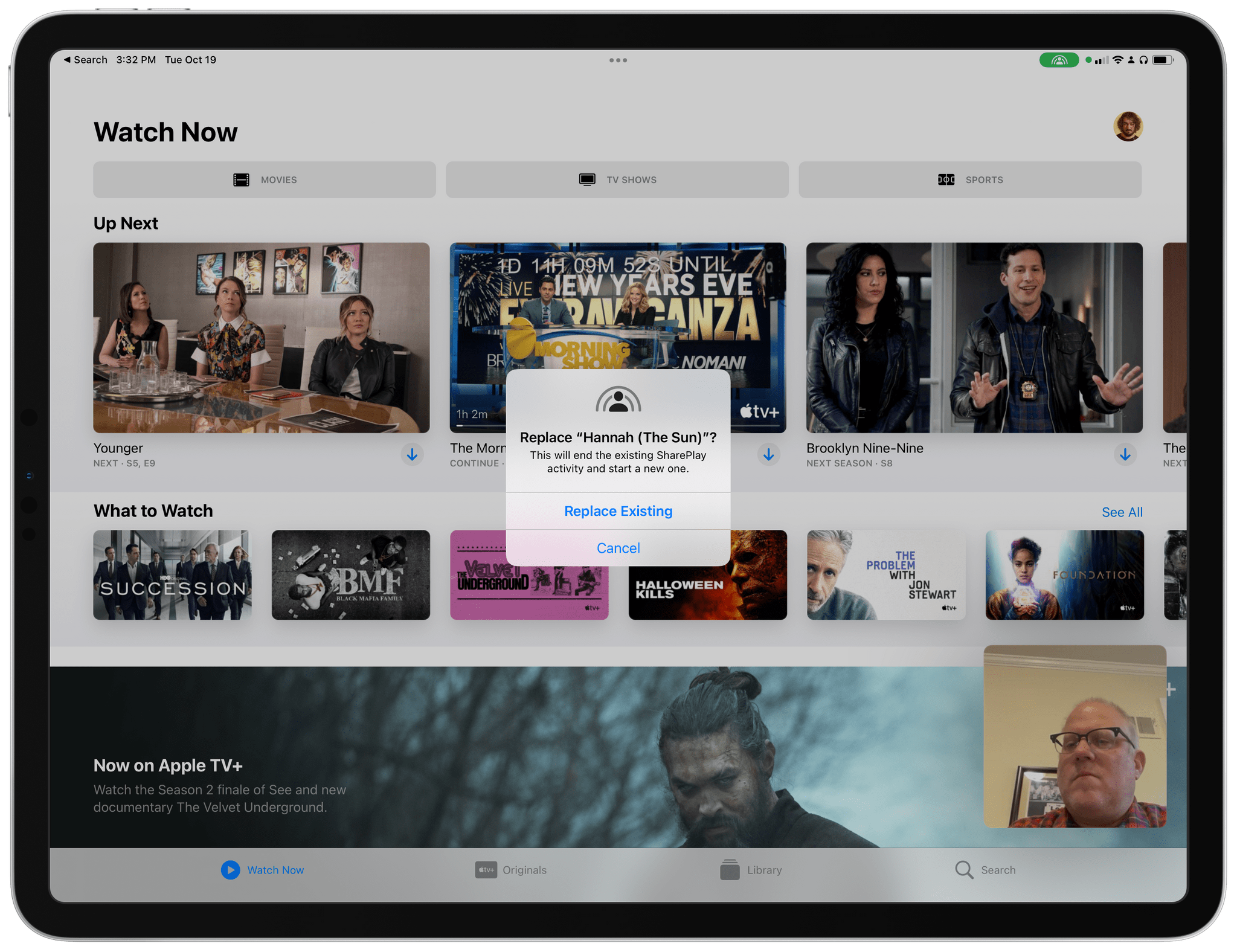

I didn’t test Fitness+¹ (another Apple app with SharePlay integration), but we did try to watch an episode of The Morning Show together via SharePlay on FaceTime. The TV app’s SharePlay integration is mostly comparable to Music’s, with a few differences. If you’re inside the FaceTime app while someone else starts a SharePlay activity in TV, the video will start playing in Picture in Picture inside FaceTime. As with the Apple Music integration, the assumption here is that you’re also an Apple TV+ subscriber (SharePlay cannot be used to share subscription content with non-subscribers over FaceTime). You can expand the video to full-screen, which will open the main TV app behind the scenes. Even in full-screen mode, you’ll still have a floating FaceTime window so you can see your friends while watching a movie or TV show together.

The TV app comes with another perk: integration with the physical Apple TV device. Instead of having both TV content and the FaceTime UI onscreen at the same time, you can start a SharePlay activity for the TV app on the iPhone or iPad, transfer the video to the Apple TV so you can watch it on a bigger screen, and continue the FaceTime call on iOS or iPadOS. If you have an Apple TV available, that feels like a better approach for conversations with multiple people.

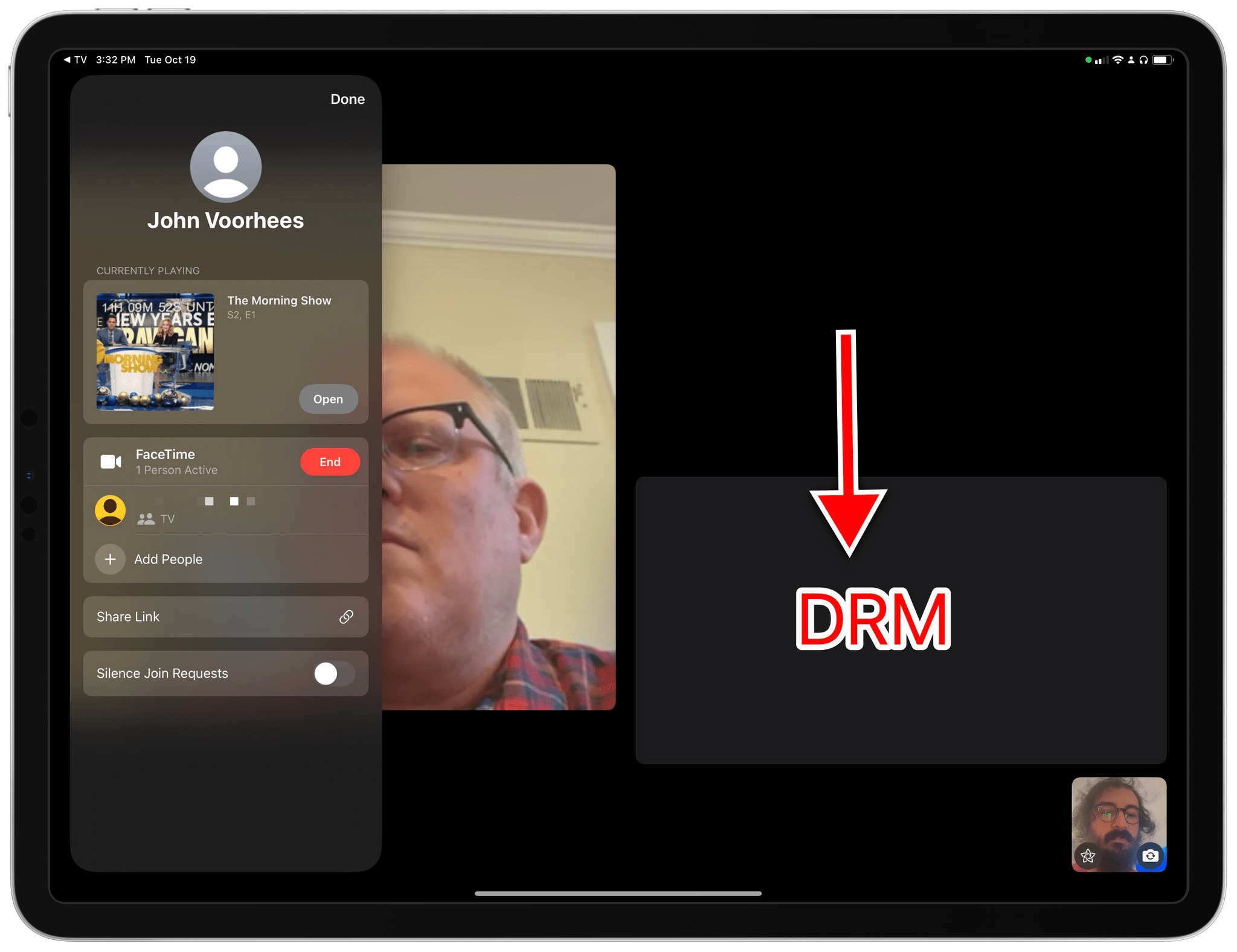

It should also be noted that, for both Music and TV, you can always keep an eye on what is currently playing with a new section of the call inspector.

The call inspector in FaceTime shows details about content being shared with SharePlay. The black rectangle is content from Apple TV+, which DRM prevents from appearing in screenshots.

Here’s where I stand on SharePlay for Music and TV content: I think the implementation is a remarkable technical achievement, with synced real-time playback, shared queues, and excellent integration of services, hardware, and software that only Apple can do. From a purely technical standpoint, SharePlay is fun, reliable, and uniquely Apple. My problem with it is that, in late 2021, with life in Italy having essentially returned to a pre-pandemic normalcy, I don’t think I’m ever going to use SharePlay for music and TV shows in practice.

Like I said above, I understand if there are people who continue to appreciate the advantages of SharePlay regardless of the pandemic and lockdowns; if that’s the case, you’re going to love it. Personally, I think SharePlay would have made a lot more sense for a lot more people last year. Now, it just kind of feels like a wasted opportunity because we can all hang out in person again. SharePlay is technically impressive, but it’s too late.

SharePlay and Third-Party Apps

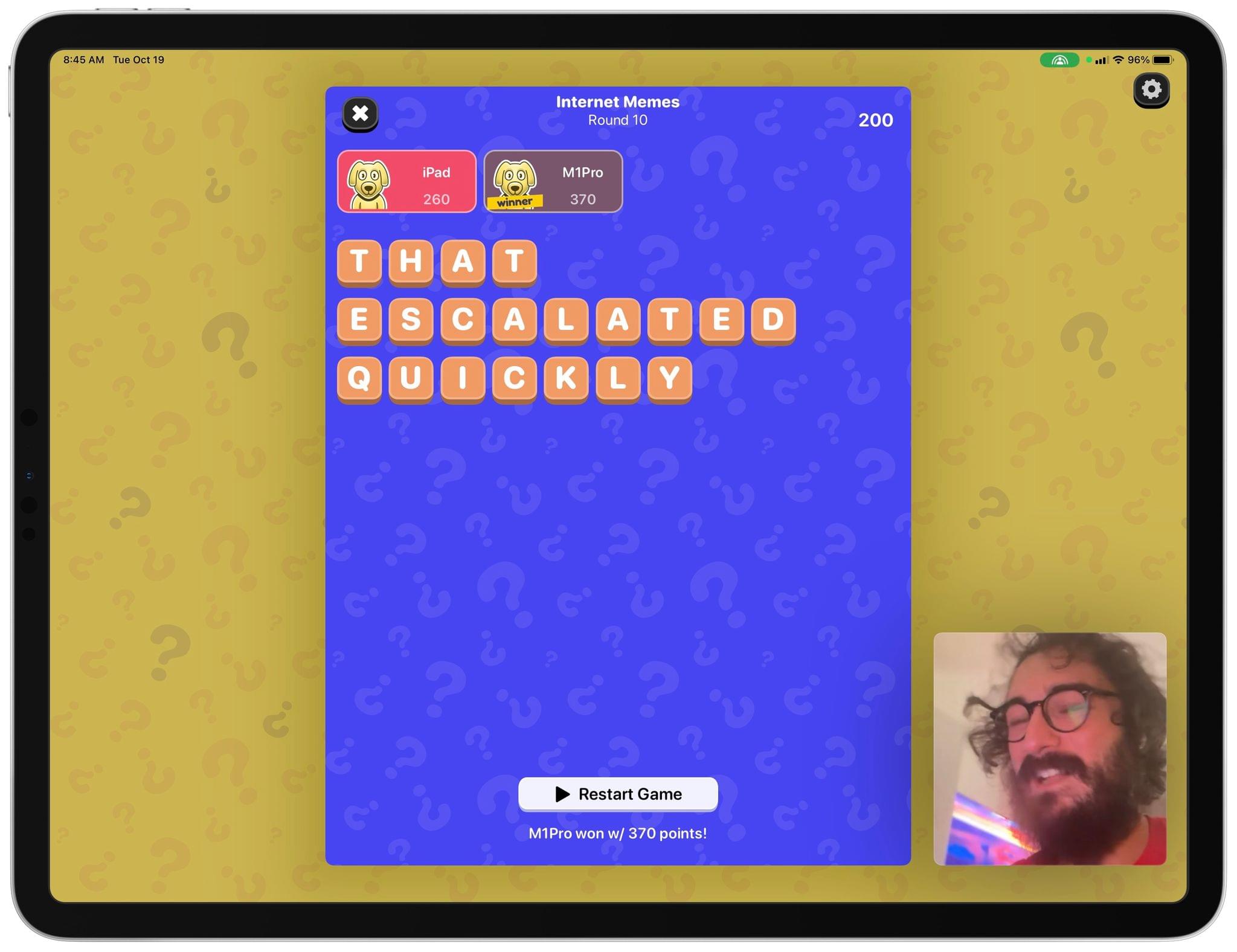

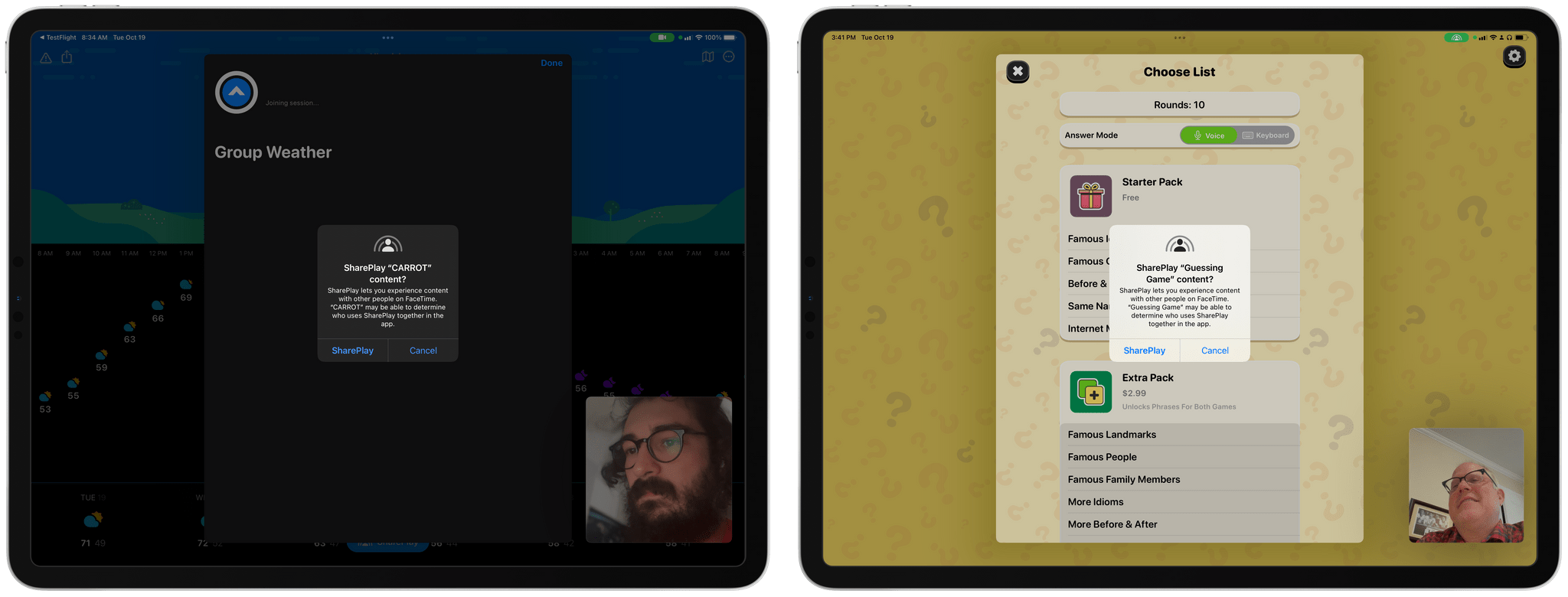

We also tested SharePlay with a variety of third-party apps that are adding support for the SharePlay framework to enable shared activities over FaceTime calls.

My sense is that SharePlay is largely a gimmick for most of these early apps: while I don’t begrudge developers for wanting to support one of the very few new APIs in iOS and iPadOS 15 (it’s an easy way to let Apple feature your app on the App Store), it’s also undeniable that SharePlay doesn’t really work for apps and utilities that were designed as offline, single-user experiences. The vast majority of third-party SharePlay-enabled apps I’ve tried gave me the same vibes as iMessage apps five years ago: cool to play with them once, only to never try them again.

While third-party developers can design custom UIs to detect when FaceTime is active and SharePlay can be started, the initial permission dialog is consistent everywhere.

At the same time, I can see how the SharePlay framework might work for very specific collaboration-focused apps or asynchronous, turn-based, multiplayer games that don’t require fast-paced gameplay. My favorite example so far is SharePlay Guessing Game, a new game by Greg Gardner that is entirely played with your friends over SharePlay on FaceTime. In the game, you have to guess complete sentences as letters slowly fill blank spaces onscreen. The twist: because the game is played on FaceTime, each person can guess the sentence by just saying it out loud, and the game will use speech recognition to automatically fill in the sentence and assign points to whoever guessed it first.

This game is incredibly fun, and it’s also the only example of SharePlay “clicking” for me in a third-party app so far. I think there’s potential for certain education apps, collaborative whiteboard utilities, and other shared experiences to tap into SharePlay with good and useful results. But I also expect to see a lot of…questionable SharePlay implementations designed to simply grab Apple’s attention in the short term, and I don’t think those are going to catch on at all.

Safari Changes

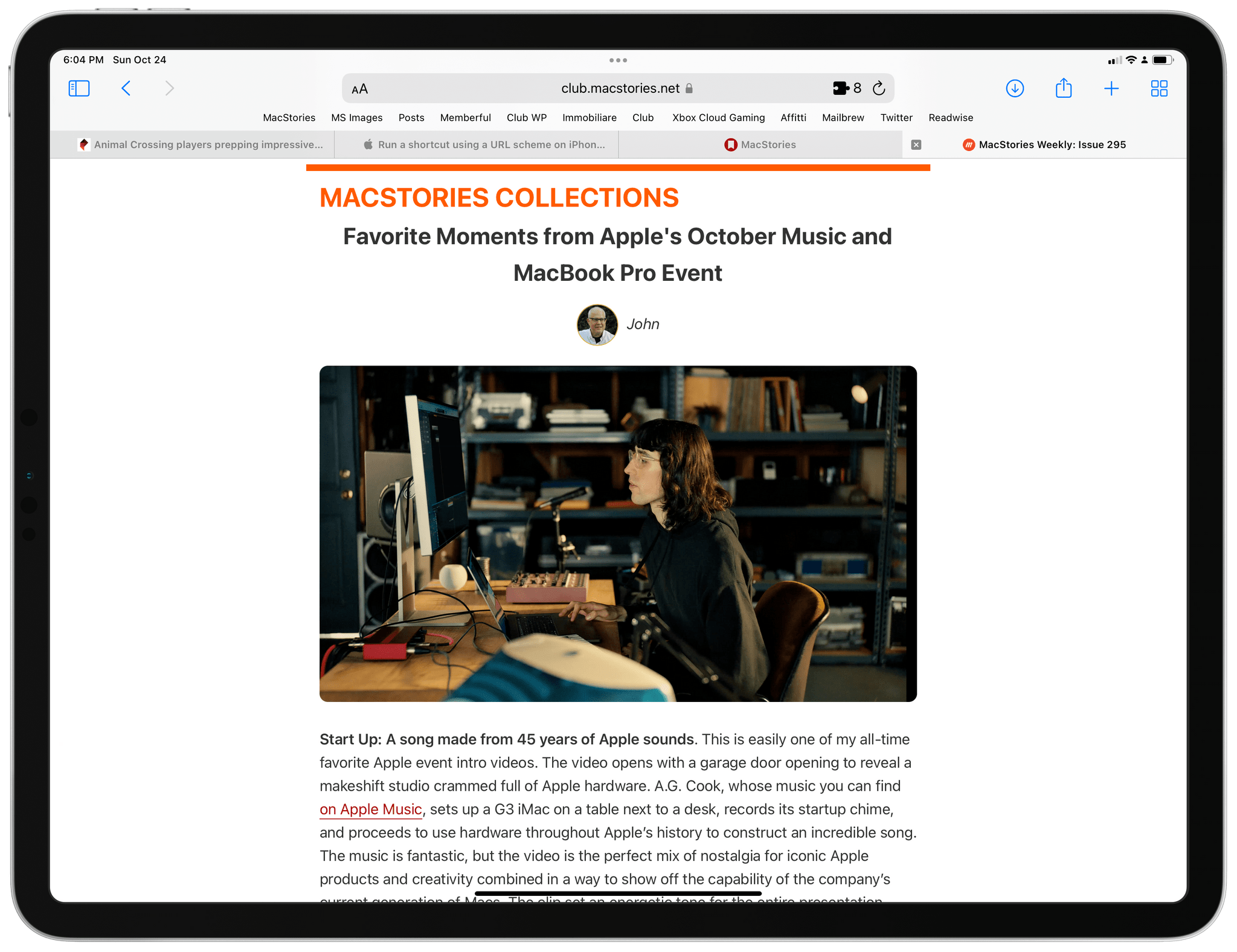

Well, that escalated quickly.

With iPadOS 15.1, Apple has undone the most controversial and, quite frankly, terrible changes that were brought to Safari’s redesign in version 15.0. What I hoped would happen when I wrote my iOS and iPadOS 15 review came true a mere month later: Safari’s tabs on iPad have reverted to their previous design, and the favorites bar has been moved back above tabs. Sadly, the useless compact mode is still around on iPad and Mac, but I wouldn’t be surprised if that gets removed as well given its lack of a reason to exist.

With the Bad Safari Design™ gone, we’re left with an excellent upgrade to Apple’s web browser that is now packed with useful features such as tab groups, a customizable start page, and extensions. As I wrote last month, the new default tab bar mode on iPhone has grown on me, and I couldn’t imagine going back to the old design with the address bar at the top now. Judging from anecdotal experience, it feels like the new iPhone design has been well received and that people find it more convenient to use for Google searches and switching tabs. There are still features missing from the iPad version (why isn’t the sidebar customizable? Has Reading List been abandoned at this point?), but, overall, I’m glad Apple listened to feedback and iterated quickly.

Last month, I wrote:

Essentially, I’d love to have the same design as Safari for iPadOS 14, but with the added functionalities I’m going to detail on the next page.

This is what we’re getting in iPadOS 15.1. If you haven’t updated because of the horrible redesign in iPadOS 15.0, it’s now safe to do so.

Everything Else

Here’s a rundown of all the other changes you can find in iOS and iPadOS 15.1:

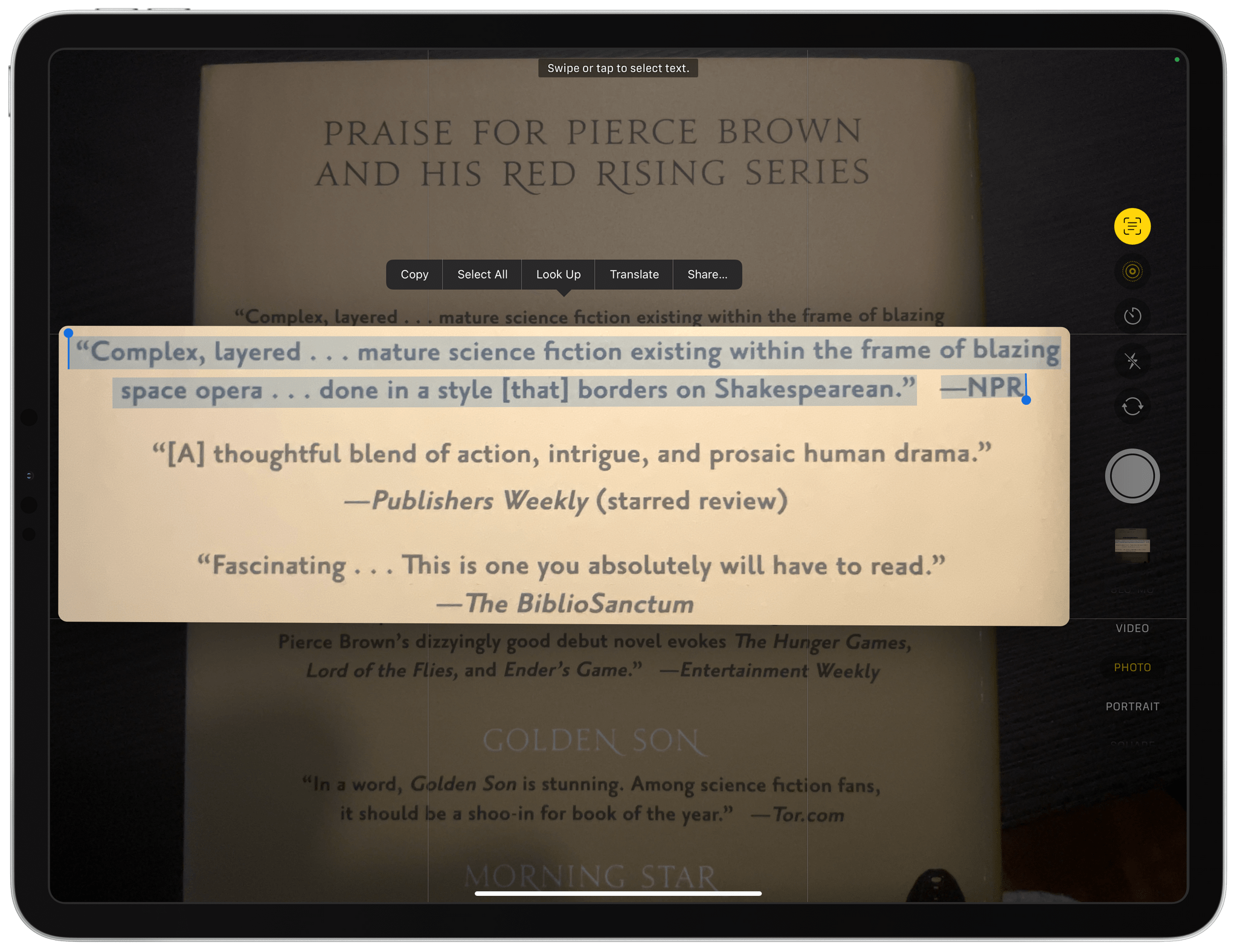

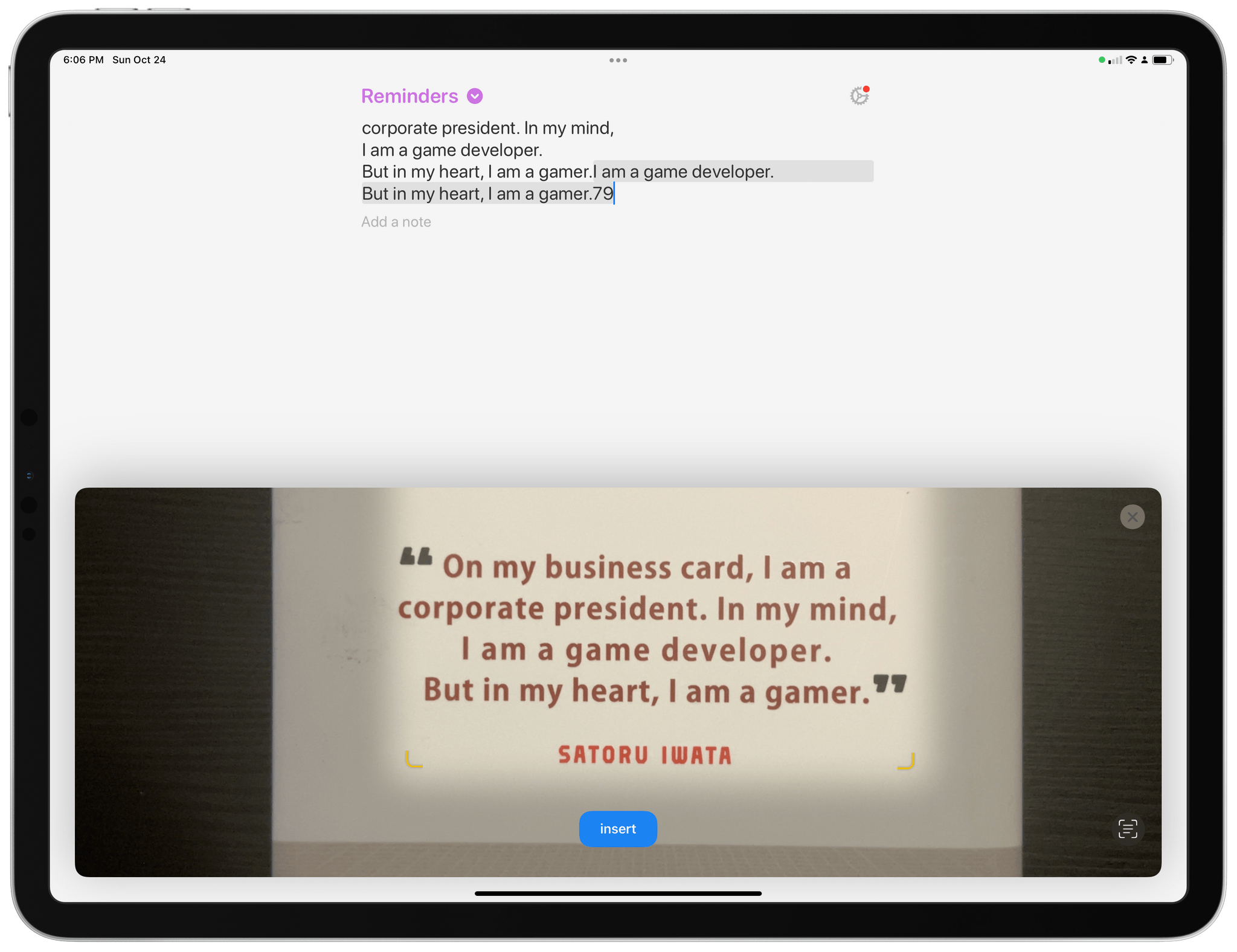

Live Text in the Camera on iPad. Surprisingly absent from iPadOS 15.0, support for Live Text in the Camera (see this section of my review) has arrived in iPadOS 15.1. While you could recognize and select text in the Photos app for iPad before, you couldn’t scan text in real-time from the Camera app; now, if you have an iPad with an A12 Bionic chip or later, you can hold up your tablet and scan text in real-time anywhere you are.

I also want to point out the amazing UI for using Live Text in text fields inside apps. Because this special Camera mode takes over the entire software keyboard, this is what you get on a 12.9” iPad Pro in landscape when you insert text using the Camera:

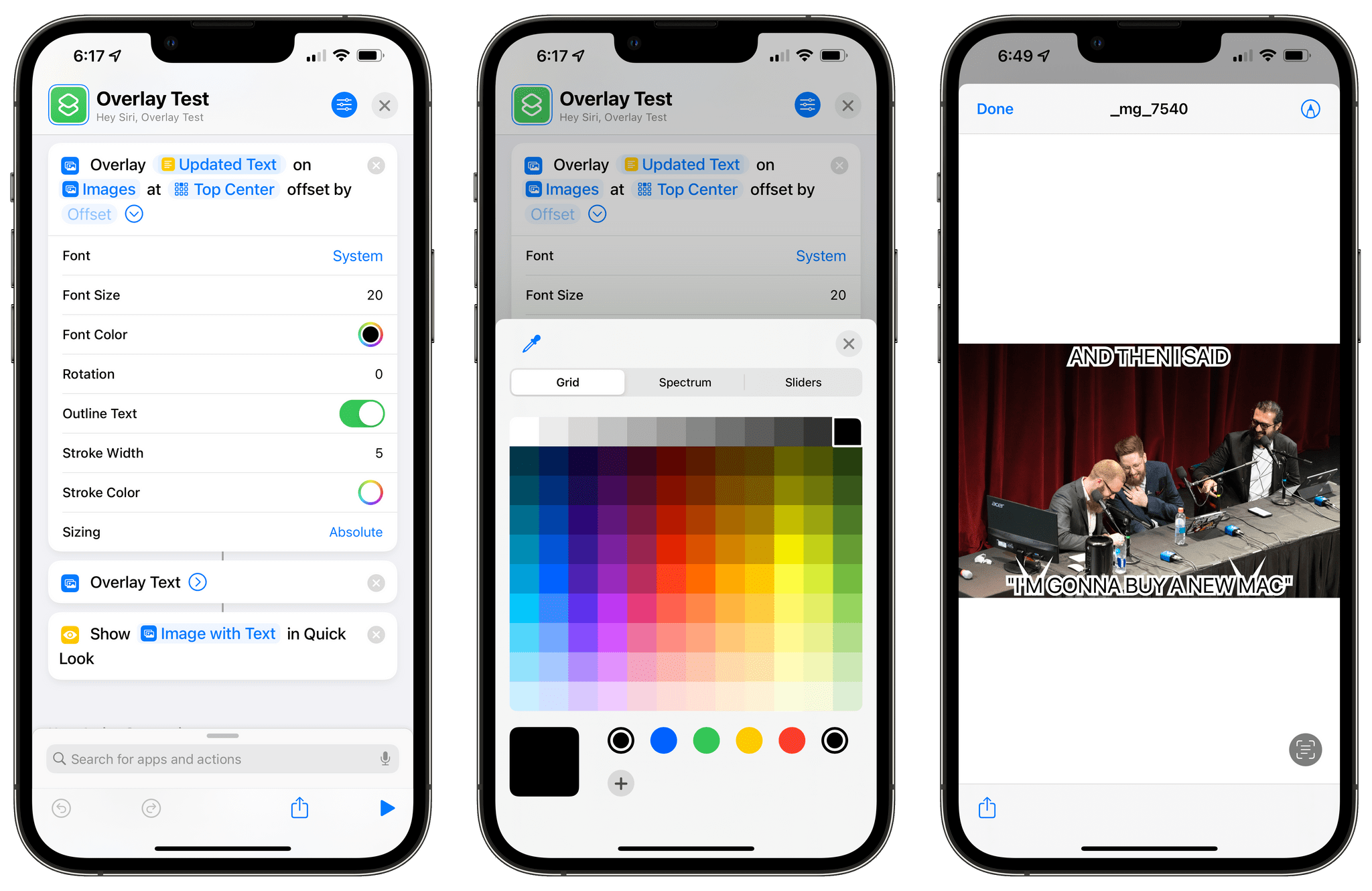

New ‘Overlay Text’ action in Shortcuts. With this new action in iOS and iPadOS 15.1, you’ll be able to overlay arbitrary text on top of an image, which is something I’ve long wanted to do in Shortcuts without relying on silly workarounds that involved turning text into an image first. Now, you can pass any text string to this action, choose where on the image it should be overlaid, and even control aspects such as its outline stroke, font (custom ones are supported via the system’s native font picker), proportional and absolute sizing, and color.

The Overlay Text image action was clearly inspired by the advanced controls available in the Overlay Image one, and I’m impressed by how flexible this action can be. I suspect that it will be used in shortcuts that create memes based on popular Internet templates, which is something I’ve been considering myself for a fun holiday project.

With its support for the native font and color pickers, the Overlay Text action is also a fascinating example of a new generation of advanced Shortcuts actions that come with a variety of embedded controls. I hope this is the start of a new trend for Apple.

Shortcuts stability. Speaking of Shortcuts, I’m happy to confirm that Apple has fixed several of the most annoying bugs that were affecting the app in the initial release of iOS and iPadOS 15.0.

This is not to say the app is perfect now: I still experience regular problems with drag and drop in the editor; the app occasionally crashes when running shortcuts on iPad; there are still a plethora of UI glitches and inconsistencies likely caused by the adoption of SwiftUI. However, at the very least, I can now click UI elements with the pointer inside the editor, the ‘Copy to Clipboard’ action seems to be working again, and permission alerts come up only once. There’s progress being made in Shortcuts, which is good, but it’s still a shame the app launched in such a precarious condition last month. The situation, unfortunately, is even worse in macOS Monterey.

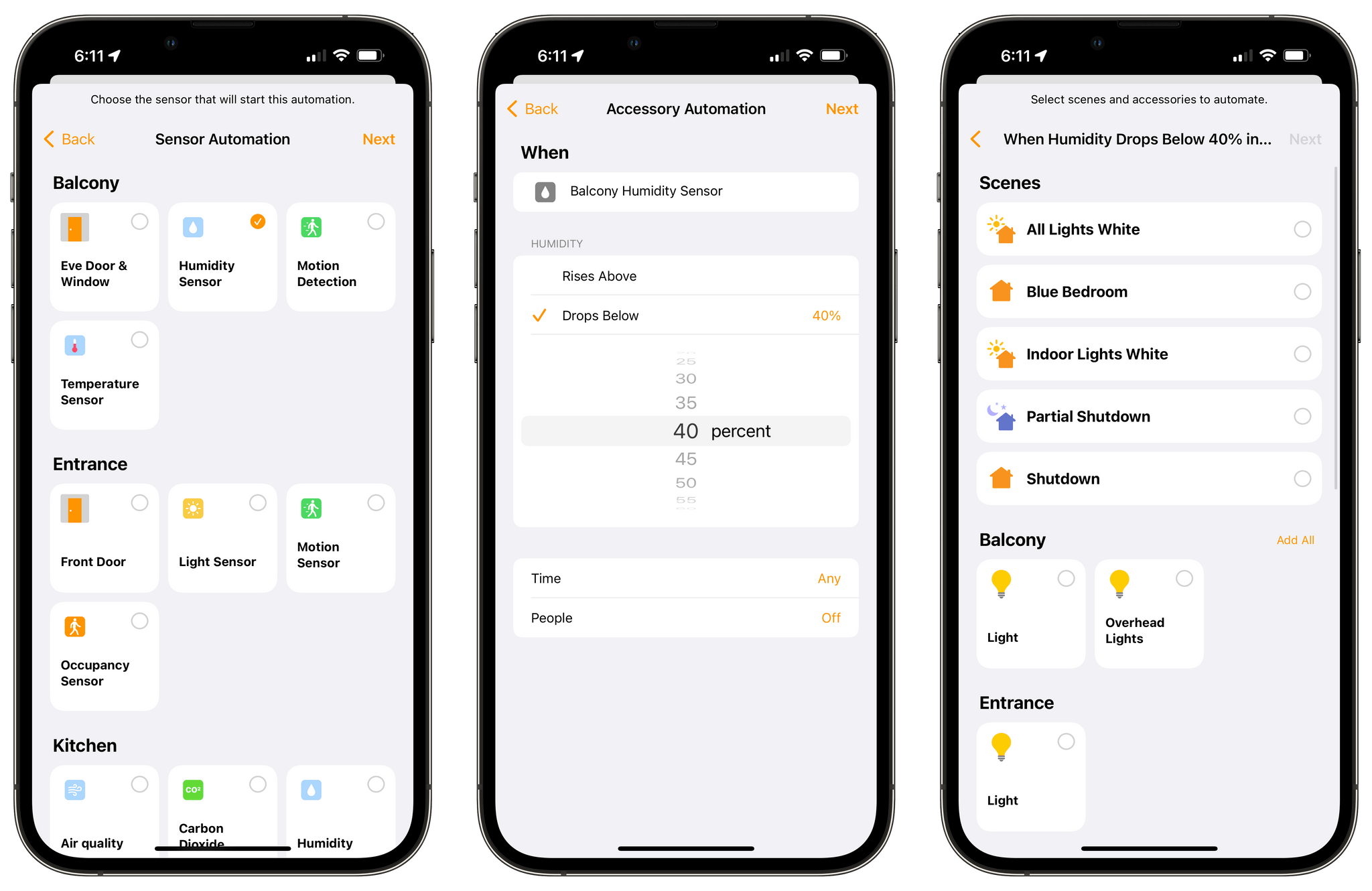

HomeKit automation triggers for sensor thresholds. Starting with iOS and iPadOS 15.1, you can finally create automation triggers in the Home app based on thresholds from humidity, air quality, and light level sensors. The “finally” is justified: I first wrote about how the HomeKit framework supported threshold-based automations in 2017. It was a strange decision on Apple’s part: the HomeKit API supported these triggers, but Apple wasn’t surfacing them in the Home app. As a result, if you wanted to create an automation for, say, humidity rising above 60%, you had to use a third-party HomeKit client, and then those automations would show up (and work correctly!) in Apple’s Home app. It didn’t make any sense.

In iOS and iPadOS 15.1, sensors will show up as options in Accessory Automations, and you’ll find new triggers for ‘Rises Above’ and ‘Drops Below’ that you can connect with other actions in the Home app. I’m very happy to see this fix, and I plan on using it myself to connect my humidity sensor to a dehumidifier powered by a HomeKit smart plug.

New options for spatial audio. If you’re intrigued by Apple Music’s support for spatial audio (for both Dolby Atmos content and Apple’s ‘spatialize stereo’ setting), but don’t want to commit to it full-time, you’ll be relieved to know that iOS 15.1 introduces new toggles to adjust spatial audio from Control Center, without visiting Settings. With spatial audio enabled, long-press the volume slider in Control Center and you’ll be presented with new toggles to turn it off or change its head-tracking mode to a ‘fixed’ one.

Previously, spatial audio for music was always set to track your head’s movements in real-time when wearing compatible headphones such as AirPods Pro and AirPods Max. I know that a lot of people (myself included) found that distracting for music, particularly when walking or working out. With the new ‘fixed’ mode, you can enjoy the benefits of spatial audio for a greater soundstage without any physical tracking involved. More importantly, I appreciate how I can now leave Dolby Atmos set to ‘Automatic’ in Settings but turn it off from Control Center for albums that have a spatial mix I don’t like.

ProRes video and Auto Macro toggle. Lastly, there are two interesting additions for photographers and videomakers in iOS 15.1 for the iPhone 13 Pro line.

First, you can now choose to record videos in Apple ProRes, which is supported at up to 4K at 30fps and 1080p at 60fps. I never plan on shooting in ProRes myself, but I know that it’s one of the most popular formats for video post-production, so I’m glad Apple added it as an option.

The company is also offering a new Auto Macro toggle in Settings ⇾ Camera to disable the automatic switching between lenses when capturing macro photos and videos. If you disable this, the Camera app will no longer switch back and forth between lenses when you get close to a subject; instead, you’ll have to switch to the ultra-wide camera manually if you want to capture a macro. I would have still preferred to see a proper ‘Macro Mode’ button in the Camera app for additional clarity, but I guess this will do for now.

iOS and iPadOS 15.1 are solid updates to last month’s release that bring back functionality that was originally announced at WWDC, improve stability, and, more importantly, fix Safari on iPad for good. They are both available today from the Software Update section of the Settings app on all devices that support iOS and iPadOS 15. If you were annoyed by the issues in Safari and Shortcuts before, I recommend upgrading to 15.1 today.

¹ John was busy finishing his macOS Monterey review last week; I thought asking him to jump on the indoor bike and do a workout with me on FaceTime was a little too much.