According to TechCrunch’s Matthew Panzarino, Apple will roll out Deep Fusion, the camera feature announced at the company’s fall iPhone event, today as part of the iOS developer beta program.

Deep Fusion is Apple’s new method of combining several image exposures at the pixel level for enhanced definition and color range beyond what is possible with traditional HDR techniques. Panzarino explains how Deep Fusion works:

The camera shoots a ‘short’ frame, at a negative EV value. Basically a slightly darker image than you’d like, and pulls sharpness from this frame. It then shoots 3 regular EV0 photos and a ‘long’ EV+ frame, registers alignment and blends those together.

This produces two 12MP photos – 24MP worth of data – which are combined into one 12MP result photo. The combination of the two is done using 4 separate neural networks which take into account the noise characteristics of Apple’s camera sensors as well as the subject matter in the image.
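To make the general idea of pixel-level blending concrete, here is a toy sketch in Python. It is not Apple’s pipeline: the function names are hypothetical, the frames are assumed to be small grayscale grids that are already aligned and exposure-matched, and a hand-written local-contrast weight stands in for the four neural networks, whose details Apple has not published.

```python
def average_frames(frames):
    """Average same-sized grayscale frames pixel by pixel.

    Stands in for merging the regular and 'long' frames into one image.
    """
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [sum(frame[y][x] for frame in frames) / n for x in range(width)]
        for y in range(height)
    ]


def local_contrast(img, y, x):
    """Absolute difference between a pixel and the mean of its 4 neighbours.

    Neighbour coordinates are clamped at the image border.
    """
    h, w = len(img), len(img[0])
    neighbours = [
        img[max(y - 1, 0)][x],
        img[min(y + 1, h - 1)][x],
        img[y][max(x - 1, 0)],
        img[y][min(x + 1, w - 1)],
    ]
    return abs(img[y][x] - sum(neighbours) / 4)


def fuse(short, merged, eps=1e-6):
    """Blend two images per pixel, favouring whichever has more local detail.

    ASSUMPTION: this contrast-based weight is a placeholder for the learned
    weighting described in the article; eps keeps flat regions from
    dividing by zero.
    """
    h, w = len(short), len(short[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            w_short = local_contrast(short, y, x) + eps
            w_merged = local_contrast(merged, y, x) + eps
            blended = (w_short * short[y][x] + w_merged * merged[y][x]) / (
                w_short + w_merged
            )
            row.append(blended)
        out.append(row)
    return out


if __name__ == "__main__":
    # Two tiny made-up 2x2 frames, purely for illustration.
    regular = [[10.0, 12.0], [11.0, 13.0]]
    long_frame = [[12.0, 14.0], [13.0, 15.0]]
    short_frame = [[9.0, 20.0], [8.0, 10.0]]

    merged = average_frames([regular, long_frame])
    print(fuse(short_frame, merged))
```

Each output pixel is a weighted average of the two inputs, so it always falls between them. A real system would also have to handle alignment, noise modeling, and color, all of which this sketch skips.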

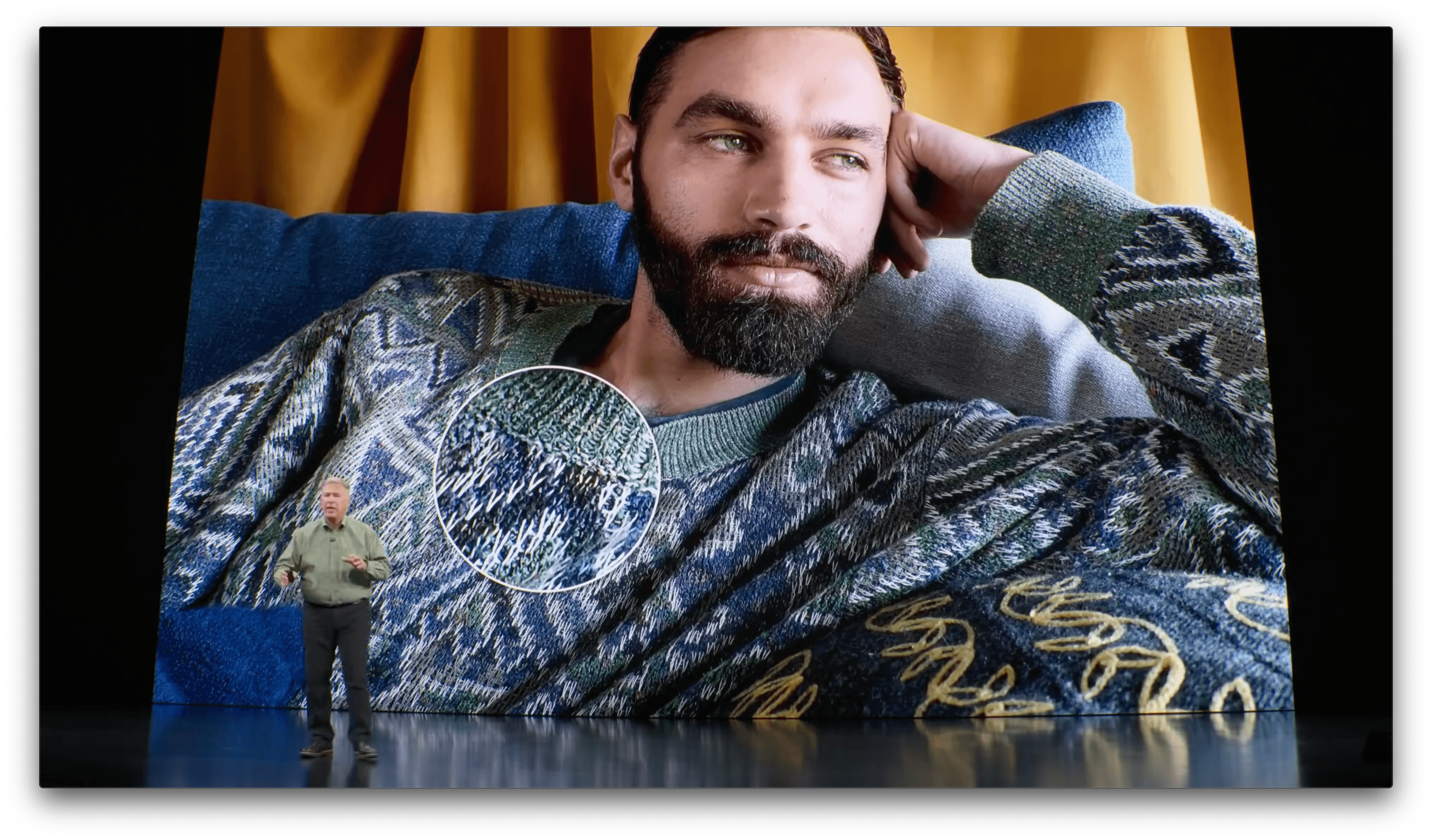

Apple told Panzarino that the technique “results in better skin transitions, better clothing detail and better crispness at the edges of moving subjects.”

There is no button or switch to turn Deep Fusion on. Like the over-crop feature that uses the ultra wide lens to allow photo reframing after the fact, Deep Fusion is engaged automatically depending on the camera lens used and the light characteristics of the shot being taken. Panzarino also notes that Deep Fusion, which is only available on iPhones that use the A13 processor, does not work when the over-crop feature is turned on.

I’ve been curious about Deep Fusion since it was announced. It’s remarkable that photography has become as much about machine learning as it is about the physics of light and lenses. Deep Fusion is also the sort of feature that can’t be demonstrated well onstage, so I’m eager to get my hands on the beta and try it myself.