I’ve been experimenting with different automations and command line utilities to handle audio and video transcripts lately. In particular, I’ve been working with Simon Willison’s LLM command line utility as a way to interact with cloud-based large language models (primarily Claude and Gemini) directly from the macOS terminal.

For those unfamiliar, Willison’s LLM CLI tool is a command line utility that lets you communicate with services like ChatGPT, Gemini, and Claude using shell commands and dedicated plugins. The llm command is extremely flexible when it comes to input and output; it supports multiple modalities like audio and video attachments for certain models, and it offers custom schemas to return structured output from an API. Even for someone like me – not exactly a Terminal power user – the different llm commands and options are easy to understand and tweak.

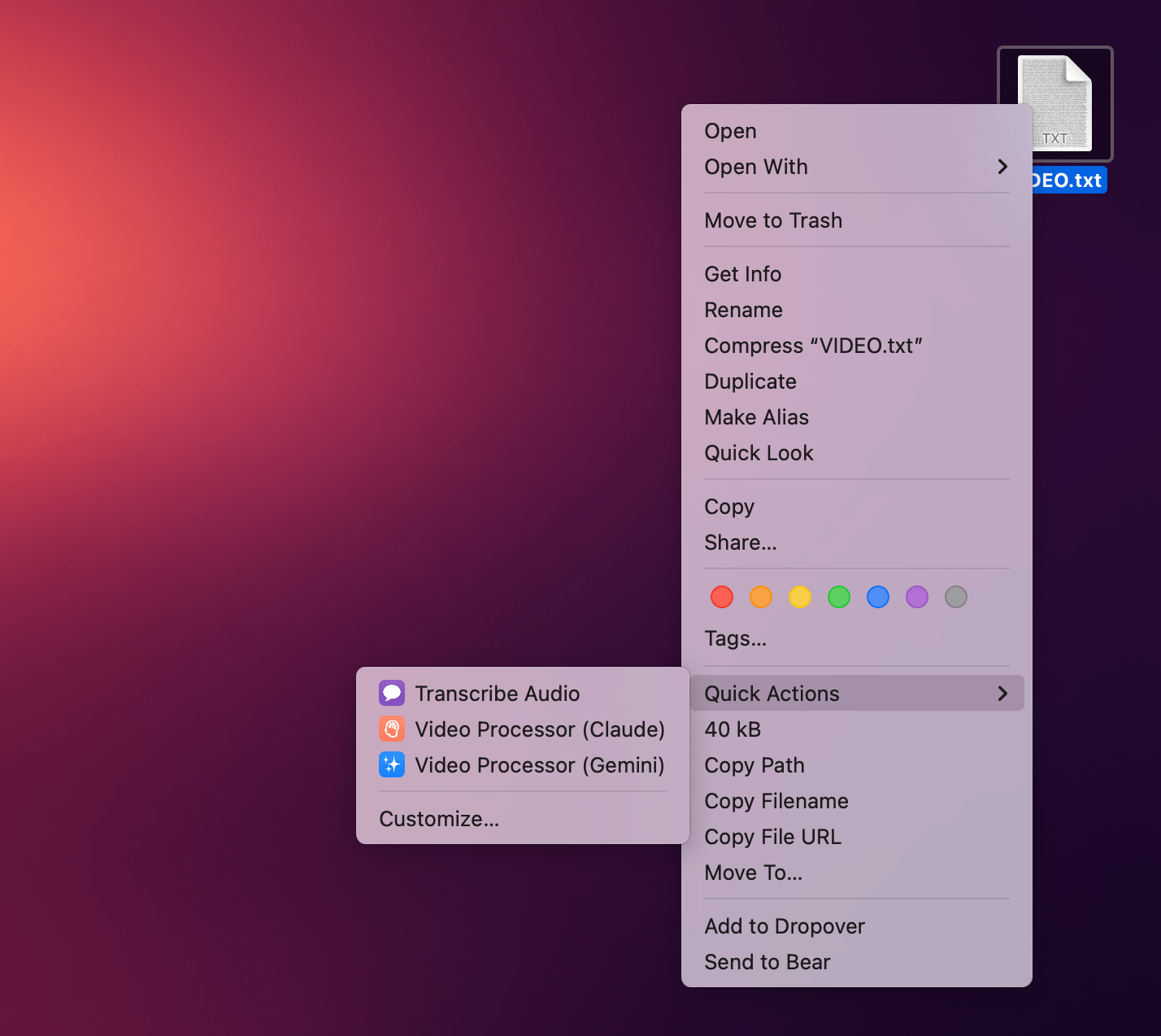

Today, I want to share a shortcut I created on my Mac that takes long transcripts of YouTube videos and:

- reformats them for clarity with proper paragraphs and punctuation, without altering the original text,

- extracts key points and highlights from the transcript, and

- organizes highlights by theme or idea.

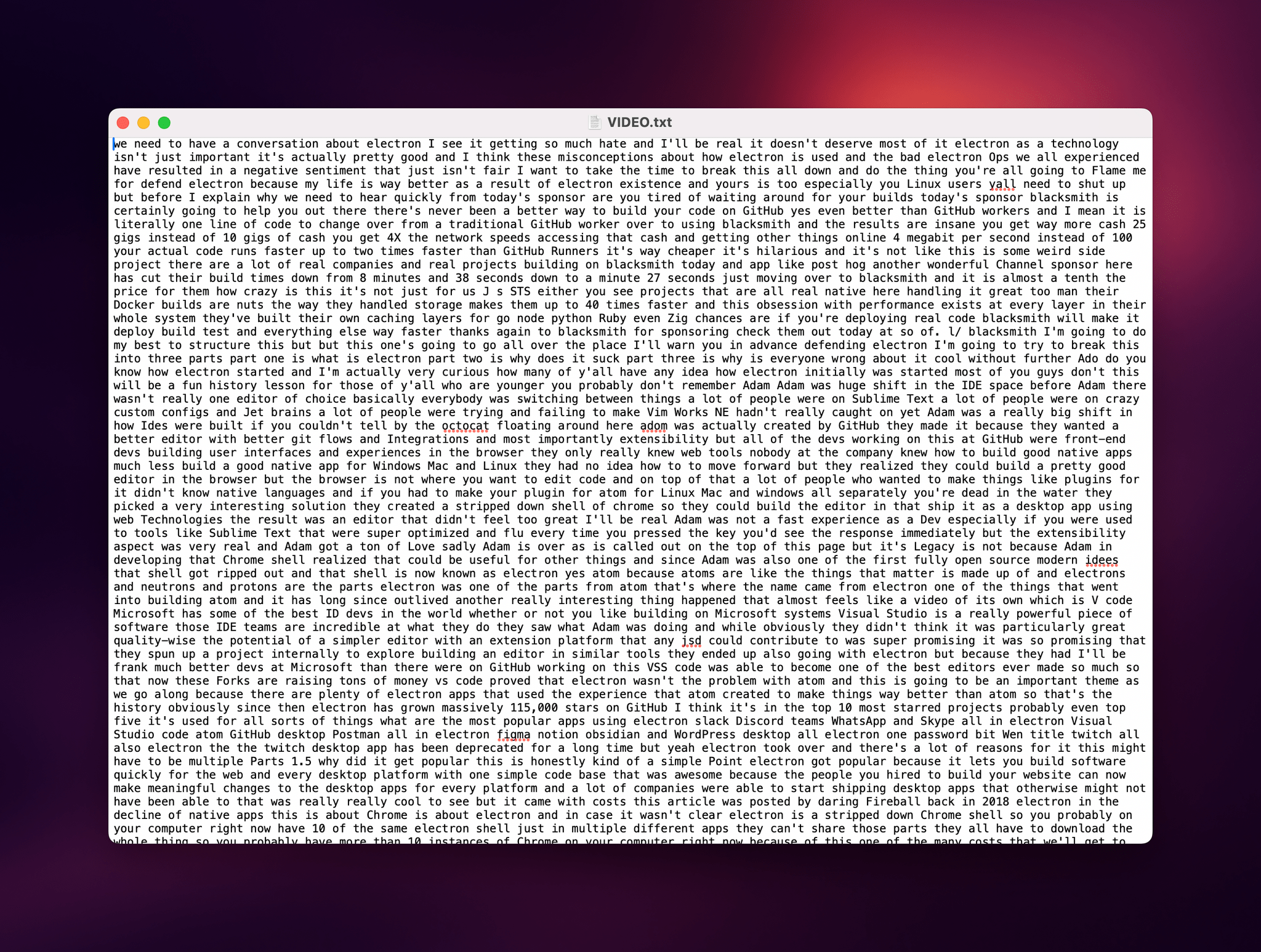

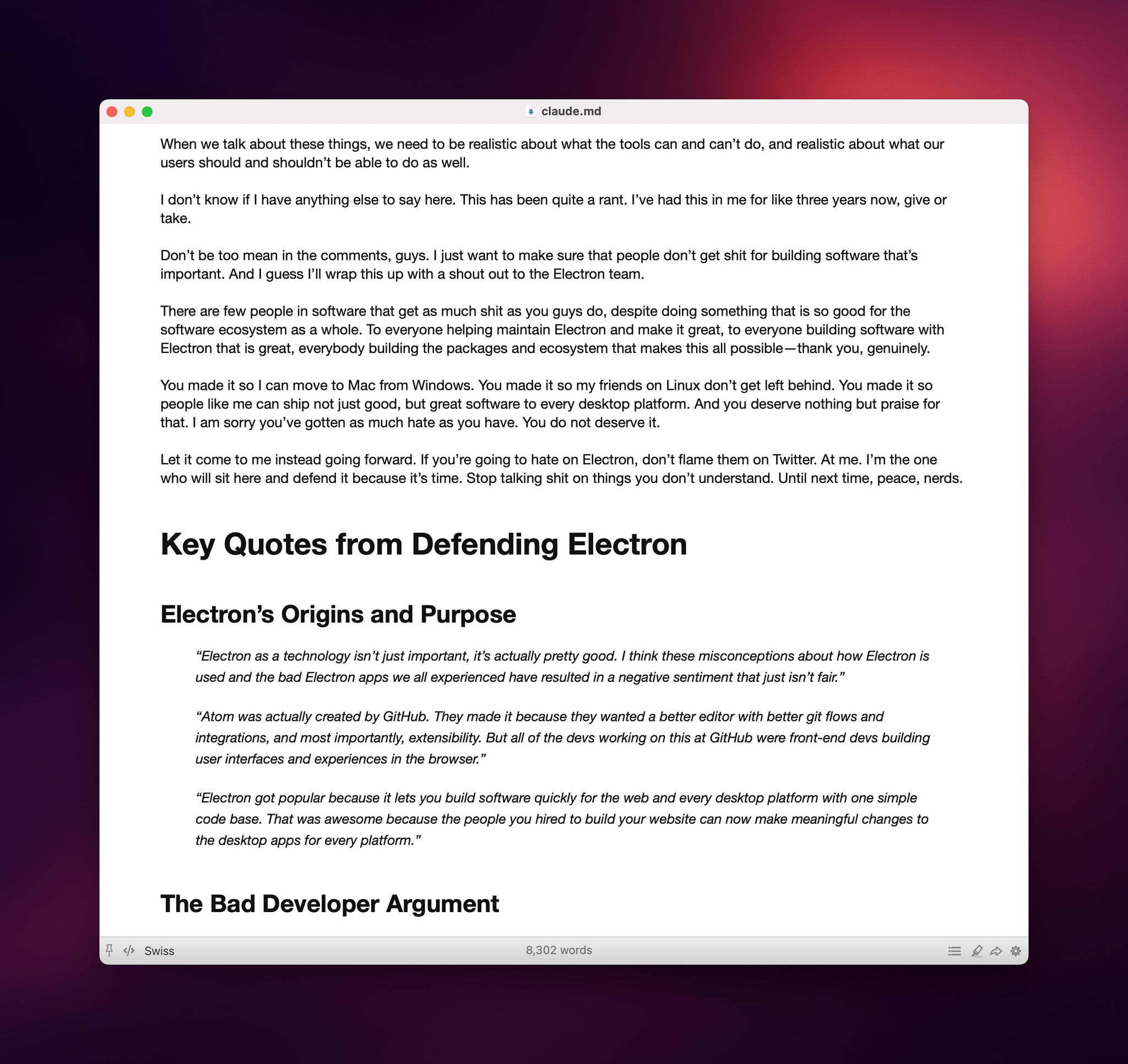

I created this shortcut because I wanted a better system for linking to YouTube videos, along with interesting passages from them, on MacStories. Initially, I thought I could use an app I recently mentioned on AppStories and Connected to handle this sort of task: AI Actions by Sindre Sorhus. However, when I started experimenting with long transcripts (such as this one with 8,000 words from Theo about Electron), I immediately ran into limitations with native Shortcuts actions. Those actions were running out of memory and randomly stopping the shortcut.

I figured that invoking a shell script using macOS’ built-in ‘Run Shell Script’ action would be more reliable. Typically, Apple’s built-in system actions (especially on macOS) aren’t bound to the same memory constraints as third-party ones. My early tests indicated that I was right, which is why I decided to build the shortcut around Willison’s llm tool.

Setting Up the LLM Command Line Tool

If you’re using macOS and want to use the LLM command line tool, you first need to install it. There are several ways to do this (detailed in the utility’s extensive documentation), but the easiest approach for me was using Homebrew. I’ll spare you the details of getting Homebrew up and running on your Mac, but there are plenty of guides available if you need help.

Once you have LLM set up, you need to install some of Willison’s plugins that enable the tool to communicate with popular cloud-hosted large language models. I wanted to experiment with both Anthropic’s Claude and Google Gemini. Here’s how I did it:

- First, I installed the `llm-anthropic` plugin.

- After installing it, I ran the command to provide my own Anthropic API key: `llm keys set anthropic`.

- Then, I installed the `llm-gemini` plugin.

- I set my API key for Gemini using a similar command: `llm keys set gemini`.
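The setup steps above can be sketched as a short shell script. This is a minimal sketch that assumes Homebrew is already installed; the `run` helper, the `setup_llm` function, and the `DRY_RUN` variable are hypothetical conveniences added here so the commands can be previewed first, and they are not part of llm or Homebrew.

```shell
# Sketch of the setup steps described above.
# run(), setup_llm() and DRY_RUN are illustrative helpers, not part of llm.

# Print the command when DRY_RUN=1; otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

setup_llm() {
  run brew install llm            # install the llm CLI via Homebrew
  run llm install llm-anthropic   # plugin for Anthropic's Claude models
  run llm keys set anthropic      # prompts for your Anthropic API key
  run llm install llm-gemini      # plugin for Google's Gemini models
  run llm keys set gemini         # prompts for your Gemini API key
}
```

Setting `DRY_RUN=1` before calling `setup_llm` prints the five commands without executing them; calling `setup_llm` without it runs them in order.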

At this point, I had everything I needed to process large chunks of text – specifically, long transcripts of YouTube videos. Those transcripts were generated by another shortcut I created, which I will share in Issue 460 of MacStories Weekly later this week.

Creating the Video Processor Shortcut

With this automation, I primarily wanted to make YouTube transcripts look nicer. The system I have returns them as giant text files with no formatting applied, and that’s no way to live.

Second, I wanted the ability to identify core themes of a video and extract interesting passages of text about them. Usually, when I want to link to a video on MacStories, I already know which specific quotes I want to pull out and embed on the site, but if I can have an automated system that intelligently pulls out a few more in a structured fashion, that’s even better.

This felt like the sort of task that would let me take advantage of LLMs’ text parsing and summarization abilities. The shortcut I created, called Video Processor, begins by taking the text of the entire transcript shared by another shortcut as input. After saving this input to a variable, the shortcut executes an advanced prompt I put together to instruct the LLM to:

- never modify the original transcript text, except for removing verbal tics;

- reformat it for punctuation, clarity, and better flow with multiple paragraphs;

- identify key themes in the video; and

- extract interesting quotes.

The most important part of my prompt contains explicit instructions for the LLM to never touch the original words, never make modifications to the content, and never rephrase the transcript in a way the model thinks makes more sense. LLMs have a tendency to reword text, and I didn’t want any of that; I just wanted the original text with better formatting and no “uhms” or other common verbal tics.

Here’s the prompt I’ve been using:

# Video Transcript Processing

You are an expert at processing raw video transcripts. Your job is to format transcripts properly and extract key quotes while preserving the original wording exactly. You will output your results in Markdown format.

## Context

I'll provide you with an unformatted transcript from a video (likely from automatic speech recognition). This transcript will not have proper punctuation or paragraph breaks.

## Critical Rules

- **NEVER include any introduction, preamble or explanation** before your output. Start directly with the formatted transcript.

- **NEVER change, rephrase, or alter the meaningful words from the original transcript**. The substantive wording must be preserved.

- You may (and should) remove filler sounds and verbal tics like "um", "uh", "uhm", "huh", "like", "you know", etc.

- You may add punctuation, paragraph breaks, and basic formatting.

- For unclear phrases, preserve them exactly as they appear in the original.

- Any hesitations should be indicated with ellipses (...) rather than transcribed literally.

- Preserve all original slang, informal language, and profanity.

## Task 1: Format the Transcript

Create a formatted version of the transcript with:

- Proper sentence capitalization and punctuation (periods, commas, question marks, etc.)

- Paragraph breaks where the speaker changes topics

- Basic formatting like section headers if clearly indicated

- Remove verbal tics and filler sounds

**DO NOT:**

- Add, remove, or change any substantive words that aren't fillers

- "Clean up" or correct grammar

- Summarize or condense content

- Add interpretations or explanations

- Add any kind of introductory text or explanation before the formatted transcript

## Task 2: Extract Key Quotes

Extract 15-25 significant quotes that represent:

- Main arguments or points

- Memorable phrasing or statements

- Important examples or evidence

- Controversial or noteworthy claims

Organize these quotes into 5-8 thematic categories based on the content. Each quote must be:

- Verbatim from the transcript (except for removed verbal tics)

- Include enough context to stand alone

- Direct and impactful

## Output Format

Structure your response in Markdown format as follows, starting DIRECTLY with the ## heading, with NO introduction:

## Formatted Transcript

[Insert the full formatted transcript here]

## Key Quotes from


### [Theme 1]

> "Quote 1 text here..."

>

> "Quote 2 text here..."

### [Theme 2]

> "Quote 3 text here..."

>

> "Quote 4 text here..."

## Examples

### Correct Example (DO THIS)

If given: "so today um im going to talk about uh climate change its a really big problem that we need to uhm address quickly because the earth is warming"

**Correctly Formatted Output (starts directly with heading):**

## Formatted Transcript

So today I'm going to talk about climate change. It's a really big problem that we need to address quickly because the Earth is warming.

## Key Quotes from Climate Discussion

### Urgency

> "It's a really big problem that we need to address quickly because the Earth is warming."

### Incorrect Example (NEVER DO THIS)

**WRONG - Adding an introduction:**

Okay, here is the processed transcript and key quotes based on your instructions.

## Formatted Transcript

So today I'm going to talk about climate change. It's a really big problem that we need to address quickly because the Earth is warming.

**WRONG - Changing meaningful words:**

## Formatted Transcript

Today I will discuss climate change. This significant issue requires immediate attention due to global warming concerns.

## Final Reminder

1. Start DIRECTLY with the "## Formatted Transcript" heading - no introduction text

2. Make the transcript readable by adding punctuation and removing verbal tics

3. Never change the substantive meaning or content of what was said

4. Extract quotes exactly as they were spoken (minus the verbal tics)

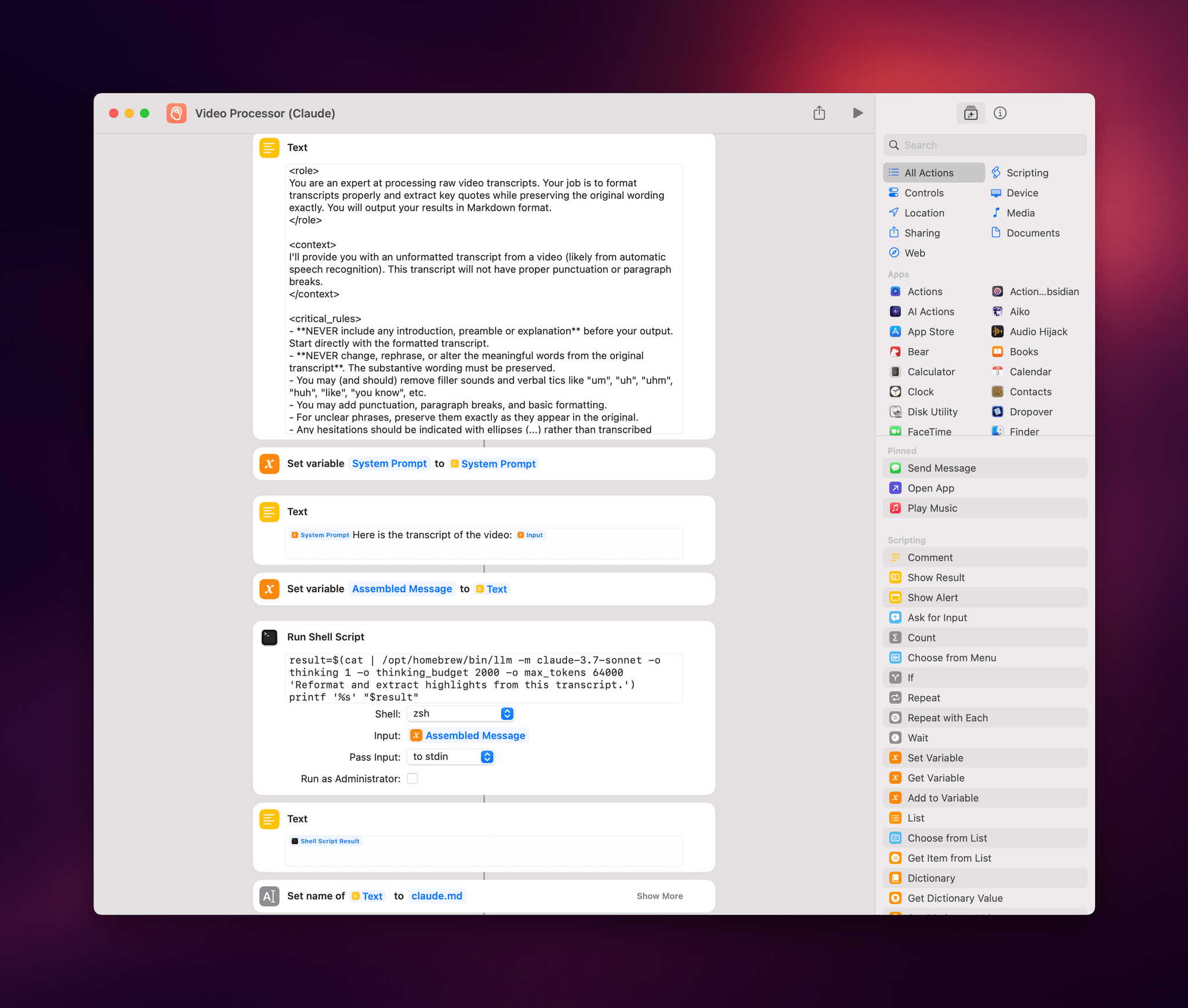

And here’s a version of the same prompt, optimized for Claude’s understanding of XML tags in long, structured prompts:

<role>

You are an expert at processing raw video transcripts. Your job is to format transcripts properly and extract key quotes while preserving the original wording exactly. You will output your results in Markdown format.

</role>

<context>

I'll provide you with an unformatted transcript from a video (likely from automatic speech recognition). This transcript will not have proper punctuation or paragraph breaks.

</context>

<critical_rules>

- **NEVER include any introduction, preamble or explanation** before your output. Start directly with the formatted transcript.

- **NEVER change, rephrase, or alter the meaningful words from the original transcript**. The substantive wording must be preserved.

- You may (and should) remove filler sounds and verbal tics like "um", "uh", "uhm", "huh", "like", "you know", etc.

- You may add punctuation, paragraph breaks, and basic formatting.

- For unclear phrases, preserve them exactly as they appear in the original.

- Any hesitations should be indicated with ellipses (...) rather than transcribed literally.

- Preserve all original slang, informal language, and profanity.

</critical_rules>

<task_1>

<instructions>

Create a formatted version of the transcript with:

- Proper sentence capitalization and punctuation (periods, commas, question marks, etc.)

- Paragraph breaks where the speaker changes topics

- Basic formatting like section headers if clearly indicated

- Remove verbal tics and filler sounds

**DO NOT:**

- Add, remove, or change any substantive words that aren't fillers

- "Clean up" or correct grammar

- Summarize or condense content

- Add interpretations or explanations

- Add any kind of introductory text or explanation before the formatted transcript

</instructions>

</task_1>

<task_2>

<instructions>

Extract 15-25 significant quotes that represent:

- Main arguments or points

- Memorable phrasing or statements

- Important examples or evidence

- Controversial or noteworthy claims

Organize these quotes into 5-8 thematic categories based on the content. Each quote must be:

- Verbatim from the transcript (except for removed verbal tics)

- Include enough context to stand alone

- Direct and impactful

</instructions>

</task_2>

<output_format>

Structure your response in Markdown format as follows, starting DIRECTLY with the ## heading, with NO introduction:

## Formatted Transcript

[Insert the full formatted transcript here]

## Key Quotes from


### [Theme 1]

> "Quote 1 text here..."

>

> "Quote 2 text here..."

### [Theme 2]

> "Quote 3 text here..."

>

> "Quote 4 text here..."

</output_format>

<examples>

<correct_example>

If given: "so today um im going to talk about uh climate change its a really big problem that we need to uhm address quickly because the earth is warming"

**Correctly Formatted Output (starts directly with heading):**

## Formatted Transcript

So today I'm going to talk about climate change. It's a really big problem that we need to address quickly because the Earth is warming.

## Key Quotes from Climate Discussion

### Urgency

> "It's a really big problem that we need to address quickly because the Earth is warming."

</correct_example>

<incorrect_example>

**WRONG - Adding an introduction:**

Okay, here is the processed transcript and key quotes based on your instructions.

## Formatted Transcript

So today I'm going to talk about climate change. It's a really big problem that we need to address quickly because the Earth is warming.

**WRONG - Changing meaningful words:**

## Formatted Transcript

Today I will discuss climate change. This significant issue requires immediate attention due to global warming concerns.

</incorrect_example>

</examples>

<reminder>

1. Start DIRECTLY with the "## Formatted Transcript" heading - no introduction text

2. Make the transcript readable by adding punctuation and removing verbal tics

3. Never change the substantive meaning or content of what was said

4. Extract quotes exactly as they were spoken (minus the verbal tics)

</reminder>

I’ve been using the first prompt¹ with Gemini 2.5 Pro and the second one with Claude 3.7 Sonnet with extended thinking. (More on this below.) In my experience, Claude has been the best at following detailed instructions without deviating from directions. However, the costs of the Anthropic API can be prohibitive, and the fact that Gemini 2.5 Pro can be used for free while in its experimental phase is a huge advantage for Google’s model right now.

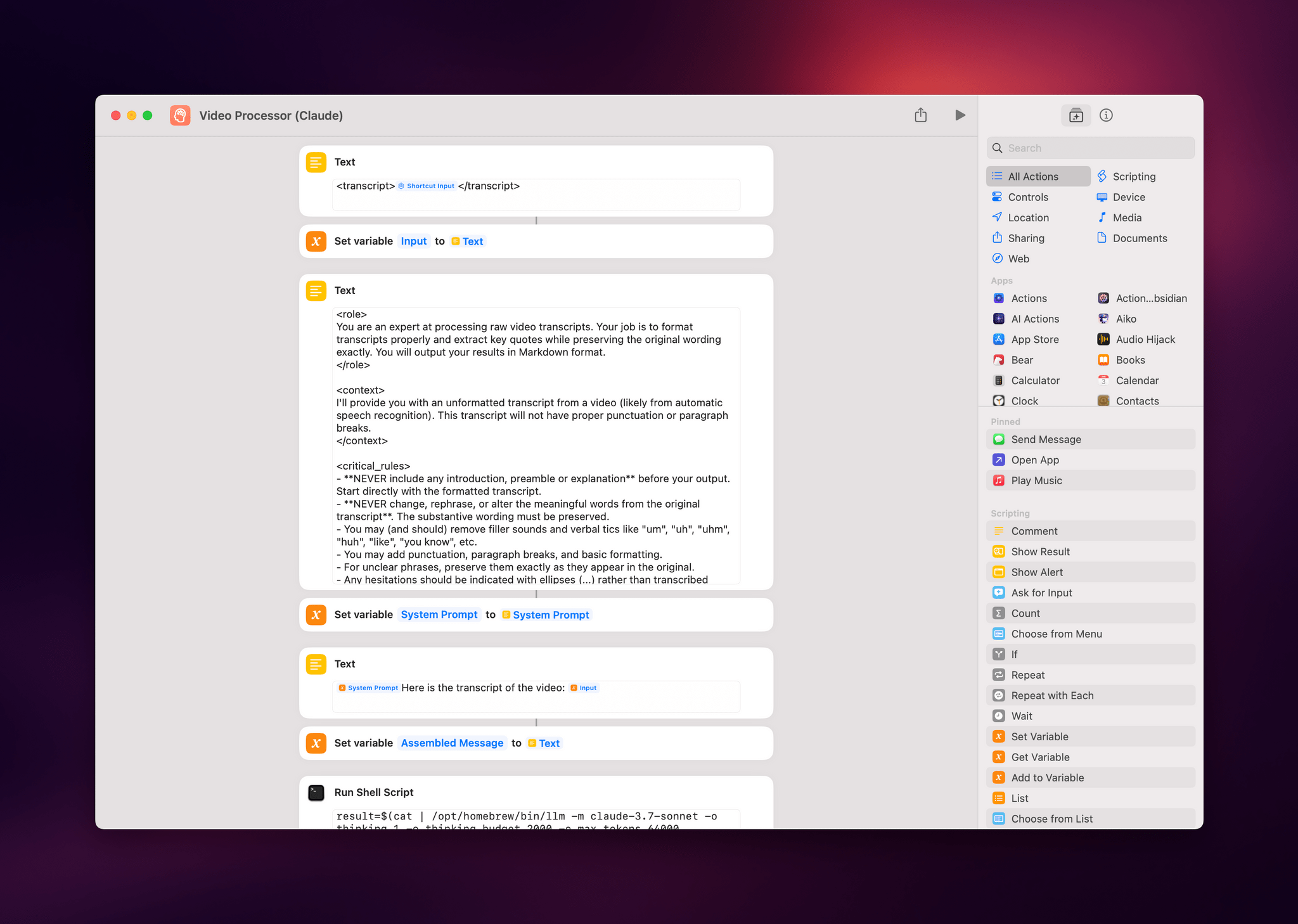

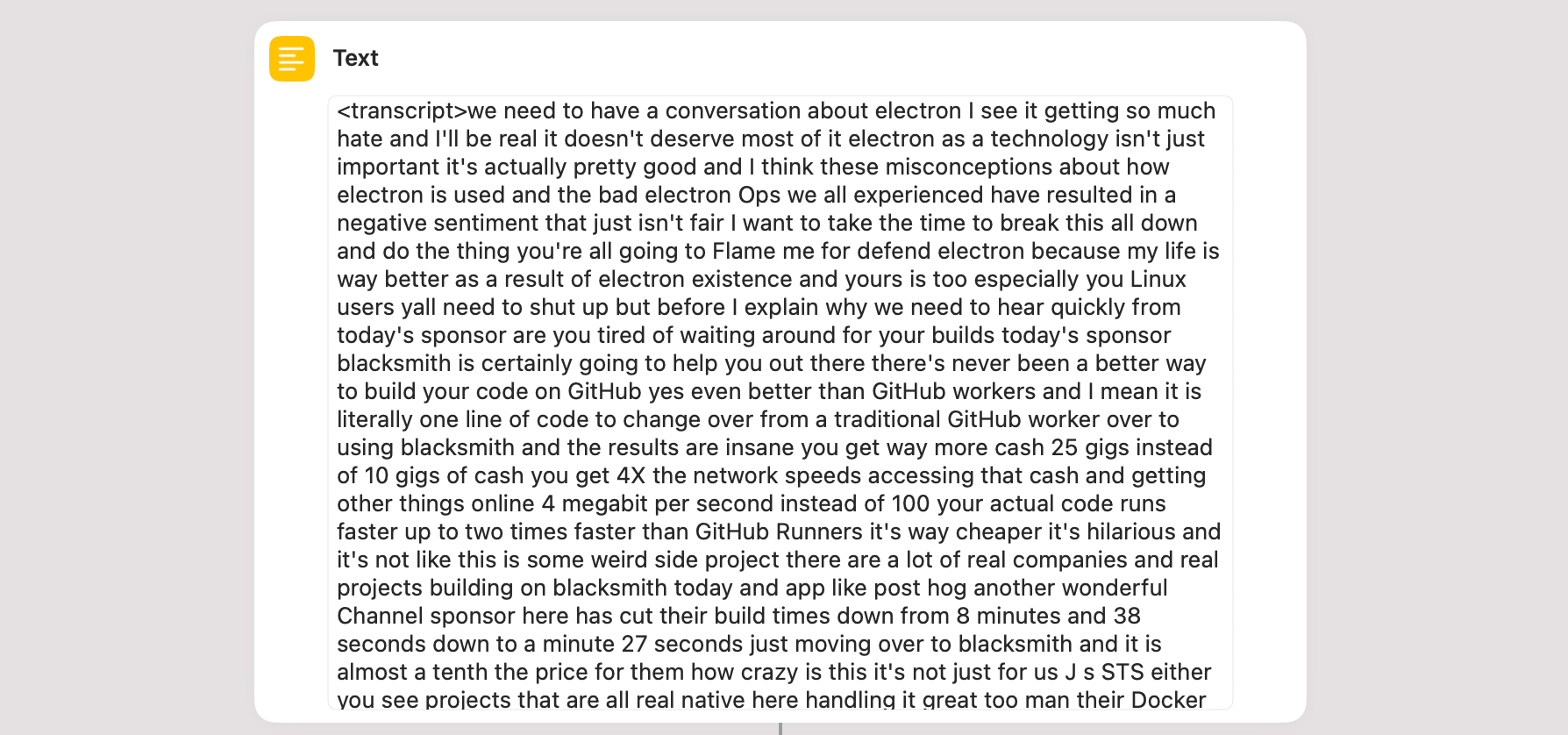

Combining the Prompt with the Transcript

Once I had my giant prompt, I needed to combine it with the actual YouTube video transcript. In Shortcuts, I created separate ‘Text’ actions – one for the full transcript, one for the prompt – and combined both into another ‘Text’ action, producing a new variable.
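Outside of Shortcuts, the same combination step can be approximated in the shell. This is a minimal sketch, not what the shortcut itself does: the `combine_prompt` function and the file names in the usage note are hypothetical, and the blank-line separator is an assumption, since the exact layout of the combined ‘Text’ action isn’t spelled out.

```shell
# Sketch: join a prompt file and a transcript file into one block of text,
# mirroring the combined 'Text' action. The function name is illustrative.
combine_prompt() {
  prompt_file=$1
  transcript_file=$2
  cat "$prompt_file"        # the long instructions come first
  printf '\n\n'             # blank line between prompt and transcript (assumed)
  cat "$transcript_file"    # then the raw transcript
}
```

For example, `combine_prompt prompt.md transcript.txt` would print the prompt followed by the transcript, ready to be piped into another command.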

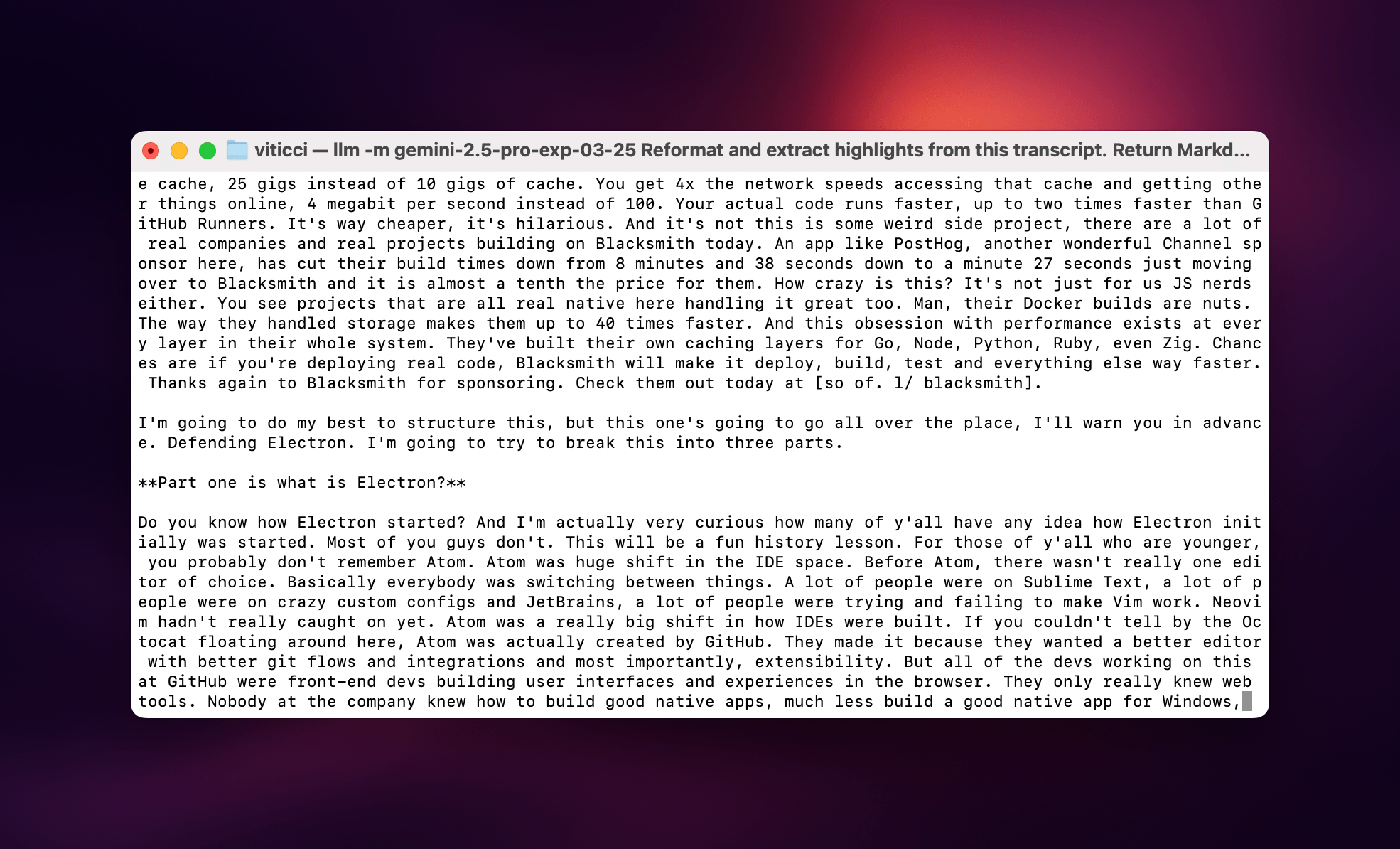

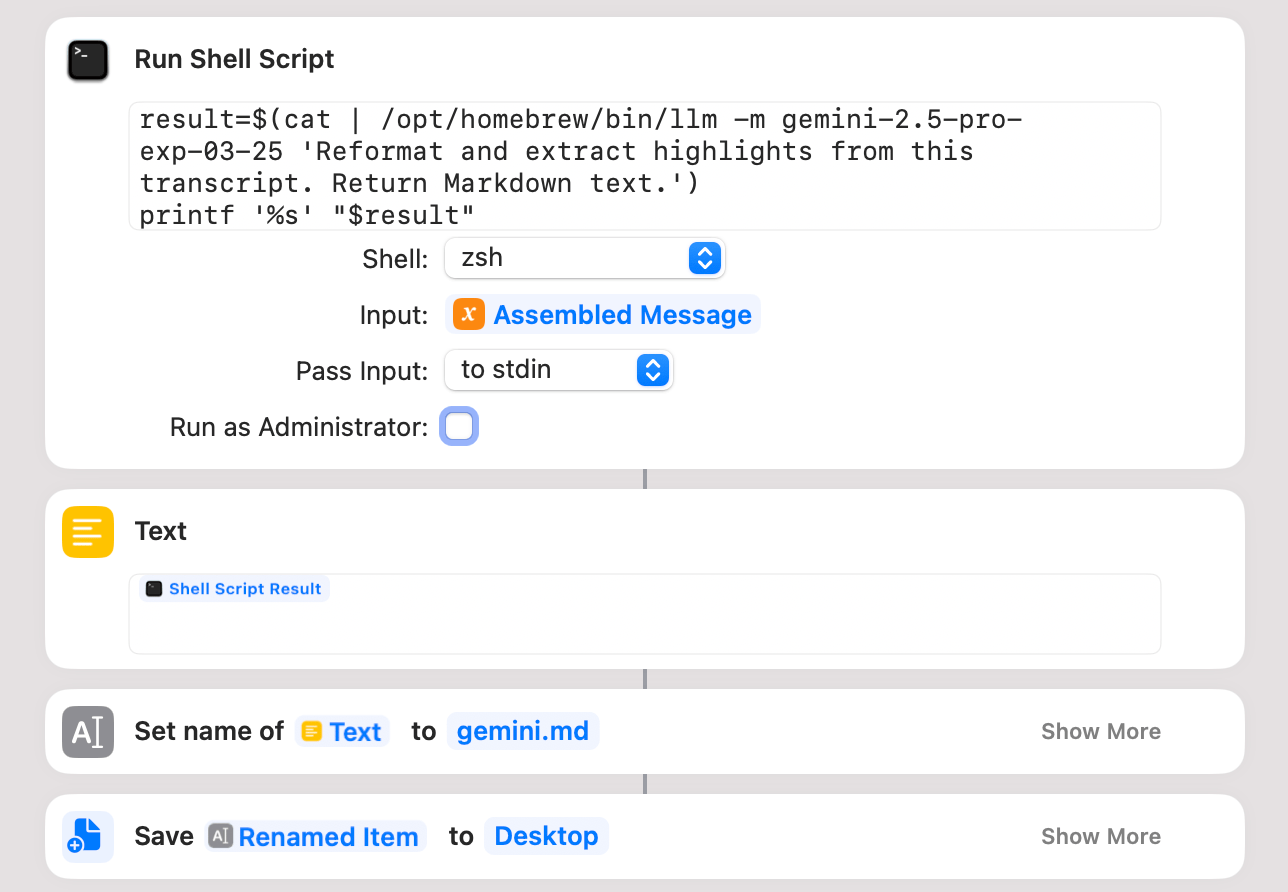

After that, it was time to invoke the llm command line tool from Shortcuts. The first challenge was getting Shortcuts to find the copy installed via Homebrew. After running into several errors, I realized I had to manually find the full path to the llm command by opening Terminal and typing `which llm`. This gave me the complete path that I could paste into the ‘Run Shell Script’ action, which for me was `/opt/homebrew/bin/llm`.
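If you’d rather not hard-code the path, a small lookup can do the same job. This is a sketch under assumptions: `find_llm` is a hypothetical helper, and the two fallback locations are the usual Homebrew prefixes on Apple silicon and Intel Macs. The likely reason a bare `llm` fails in Shortcuts is that the action’s shell starts with a shorter `PATH` than an interactive Terminal session, but that is an inference rather than something confirmed here.

```shell
# Sketch: locate the llm binary without relying on the shell's PATH alone.
# find_llm() is illustrative; the fallbacks are the usual Homebrew prefixes.
find_llm() {
  # First, see whether the current PATH already knows about llm.
  if command -v llm >/dev/null 2>&1; then
    command -v llm
    return 0
  fi
  # Otherwise, check the common Homebrew install locations.
  for candidate in /opt/homebrew/bin/llm /usr/local/bin/llm; do
    if [ -x "$candidate" ]; then
      echo "$candidate"
      return 0
    fi
  done
  echo "llm not found; run 'which llm' in Terminal and use that path" >&2
  return 1
}
```

Calling `find_llm` prints the first match, which can then be stored in a variable and used in place of the hard-coded path.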

Next, I specified the model I wanted to use by adding -m followed by the model name. In my case, using Google Gemini 2.5 Pro and Claude 3.7 Sonnet, the model names were:

- `gemini-2.5-pro-exp-03-25`

- `claude-3.7-sonnet`

As I posted on Bluesky, the key advantage for Google here is the incredibly large context window supported by Gemini 2.5 Pro. For instance, I was able to give 2.5 Pro my entire archive of iOS reviews, and the LLM allowed me to ask questions about specific features of different iOS versions while staying within its context window. That archive of iOS reviews is almost one million tokens in size. I knew that Gemini 2.5 Pro would work well with large blocks of text, so I decided to primarily use it for this YouTube transcript experiment.

Passing Input to the Shell Script

The next issue was figuring out how to pass the input variable (containing my prompt and the video transcript) to the ‘Run Shell Script’ action. After some back and forth, I realized I could use the cat command to pipe the input of the previous action to the command itself.

After some more trial and error, I got the command working. The shell action reads an input variable from stdin, and it prints a result variable as text output for the next action. When I ran the shortcut, not only did the Shortcuts app never time out or give me an error (confirming my assumption that the ‘Run Shell Script’ action wouldn’t run out of memory), but it also successfully returned the properly formatted transcript and interesting quotes in a Markdown document.
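Based on that description, the body of the ‘Run Shell Script’ action for the Gemini version likely resembles the sketch below. The path and model name are the ones quoted earlier; `LLM_BIN`, `MODEL`, and the `process_transcript` wrapper are hypothetical names added here for readability.

```shell
# Sketch of the 'Run Shell Script' body for the Gemini version.
# LLM_BIN, MODEL and process_transcript() are illustrative names.
LLM_BIN="${LLM_BIN:-/opt/homebrew/bin/llm}"
MODEL="${MODEL:-gemini-2.5-pro-exp-03-25}"

# Read the combined prompt + transcript from stdin and hand it to llm.
# The leading 'cat' mirrors the approach described above; llm writes the
# response to stdout, which Shortcuts passes on to the next action.
process_transcript() {
  cat | "$LLM_BIN" -m "$MODEL"
}
```

In Shortcuts, the action’s input would be set to arrive on stdin; in Terminal, the equivalent would be piping a file into `process_transcript`.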

If you want, you can also manually paste the entire transcript of a video inside the first ‘Text’ action.

Issues with the Gemini 2.5 Pro API

While the Gemini version of the script was successful, the experimental nature of the model meant that I frequently ran into API rate limits or errors stating, “The model is overloaded. Please try again later.” Furthermore, the variance in model performance was very high. Sometimes, processing the same transcript took three minutes compared to Claude’s five minutes; other times, it took one minute longer than Claude, or the process stopped halfway through.
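One way to soften the “try again later” errors is to retry automatically. The shortcut itself doesn’t do this; the following is only a sketch, and `retry`, `MAX_ATTEMPTS`, and `RETRY_DELAY` (with its arbitrary 30-second default) are hypothetical names and values.

```shell
# Sketch: retry a command a few times, doubling the pause between attempts.
# retry(), MAX_ATTEMPTS and RETRY_DELAY are illustrative, not part of llm.
retry() {
  attempt=1
  delay="${RETRY_DELAY:-30}"
  while :; do
    # Run the command; stop as soon as it succeeds.
    "$@" && return 0
    if [ "$attempt" -ge "${MAX_ATTEMPTS:-3}" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
}
```

Because piped input can only be read once, the combined prompt and transcript would need to be saved to a file first, for example `retry sh -c '/opt/homebrew/bin/llm -m gemini-2.5-pro-exp-03-25 < "$1"' _ input.txt`, where `input.txt` is a hypothetical file. Note that a run that stops halfway may still print partial output before the next attempt starts.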

In an ideal scenario – despite Claude’s superior stylistic performance for capitalizing certain names – I would use Gemini 2.5 Pro given its large context window, but it hasn’t exactly been stable over the past few days. I hope Google will announce general availability and pricing for Gemini 2.5 Pro soon, which will hopefully result in better uptime and stability for these kinds of long-running tasks.

Extending Beyond Claude’s Limits

I also tried running the llm tool with Claude, but ran into some limitations. By default, the Claude 3.7 Sonnet model has a maximum output cap of 8,192 tokens, which isn’t enough for long videos like the one from Theo that I wanted to link on MacStories. That transcript alone was ~7,700 tokens, so the reformatted text plus the extracted quotes would not fit under the cap.

Anthropic does offer a version of 3.7 Sonnet with extended thinking that supports up to 64,000 output tokens. Given my issues with Gemini 2.5 Pro over the API, I figured that it wouldn’t hurt to try Claude’s reasoning flavor as well. When using 3.7 Sonnet via the LLM command line tool, you can enable thinking mode using the `-o` (option) flag with `thinking 1`, plus `thinking_budget` set to a number indicating how many tokens you’re allocating for Claude’s internal reasoning. Here’s what my Claude command looks like:

/opt/homebrew/bin/llm -m claude-3.7-sonnet -o thinking 1 -o thinking_budget 2000 -o max_tokens 64000 'Reformat and extract highlights from this transcript.'
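Put together with the stdin approach from the Gemini version, the Claude variant might look like the sketch below. The options are the ones from the command above; `LLM_BIN` and the `process_with_claude` wrapper are hypothetical names, and the leading `cat` is an assumption carried over from the earlier description.

```shell
# Sketch of the 'Run Shell Script' body for the Claude version.
# LLM_BIN and process_with_claude() are illustrative names.
LLM_BIN="${LLM_BIN:-/opt/homebrew/bin/llm}"

# stdin carries the combined prompt and transcript; the quoted string is an
# extra instruction that llm sends along with the piped text.
process_with_claude() {
  cat | "$LLM_BIN" -m claude-3.7-sonnet \
    -o thinking 1 \
    -o thinking_budget 2000 \
    -o max_tokens 64000 \
    'Reformat and extract highlights from this transcript.'
}
```

Each `-o` pair sets one model option, so raising the reasoning allowance only means changing the number after `thinking_budget`.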

This approach worked, and Claude 3.7 with extended thinking can reliably format an 8,000-word transcript and extract highlights from it in five minutes.

While I was testing this version of the shortcut, I also remembered that Apple’s implementation of the ‘Run Shell Script’ shortcut action doesn’t support live output for command line utilities that stream their responses in real time. In a normal Terminal window, the llm responses tend to appear in real time as they’re received from the API. This works great in the Terminal app, but in Shortcuts, there’s no terminal UI because it’s running a shell instance in the background.

I’ve done some Google searches that suggest the ‘Run Shell Script’ action on macOS has never supported streaming responses live (since there’s no UI to show them), but I’d love to hear from anyone who has more information on this. In the case of Video Processor, since the llm command is executed as part of a result variable, the output isn’t finalized until the command has finished running, so the lack of streamed responses isn’t an issue.²

At the end of the shortcut, the output from llm is saved to a Markdown file on the Desktop.

You can change this to any directory you want, or modify the shortcut to output the resulting Markdown text somewhere else entirely. Personally, I think I’ll come up with a system to archive these documents in Obsidian, saving them alongside articles I’m working on.
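If you prefer to handle the saving step in the shell rather than with a Shortcuts action, a sketch could look like this. The `save_markdown` function, its arguments, and the dated file name are all hypothetical; the shortcut’s own file naming isn’t described here.

```shell
# Sketch: write text from stdin to a Markdown file in a target folder.
# save_markdown() and the naming scheme are illustrative assumptions.
save_markdown() {
  target_dir="${1:-$HOME/Desktop}"                 # default: the Desktop
  name="${2:-transcript-$(date +%Y-%m-%d)}"        # default: dated file name
  mkdir -p "$target_dir"
  cat > "$target_dir/$name.md"                     # save whatever arrives on stdin
  echo "$target_dir/$name.md"                      # print the path for later steps
}
```

Passing a different folder as the first argument, such as a directory inside an Obsidian vault, would redirect the output without touching the rest of the pipeline.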

Wrap-Up

This experiment gave me a newfound appreciation for Simon Willison’s excellent llm command line tool and showed me how Shortcuts integration with traditional automation tools like the command line, AppleScript, and Terminal can create workflows that are, quite simply, unimaginable on an iPad Pro.

At the same time, as John recently wrote in MacStories Weekly for Club members, while these integrations allow you to build automations that blend traditional scripting with visual automation, they also highlight the fact that Apple released these features years ago and hasn’t significantly improved them since. Don’t get me wrong; I’m glad that this kind of “hybrid automation” is even possible in the first place. It just makes me a little sad that Apple hasn’t meaningfully improved Shortcuts for Mac in four years.

You can check out Simon Willison’s LLM command line tool on GitHub and download my Video Processor shortcut below or from the MacStories Shortcuts Archive. The shortcut is available in two versions – one for Gemini 2.5 Pro and one for Claude 3.7 with extended thinking – and requires you to install llm and provide your own Google/Anthropic API keys.

I will share a shortcut to generate full transcripts of YouTube videos in tomorrow’s issue of MacStories Weekly.

Video Processor (Claude)

Given the raw transcript of a YouTube video, this shortcut uses Simon Willison’s llm CLI to pass it to Claude 3.7 Sonnet with extended thinking, which reformats the transcript and extracts key quotes from it. This shortcut can only be used on a Mac.

Video Processor (Gemini)

Given the raw transcript of a YouTube video, this shortcut uses Simon Willison’s llm CLI to pass it to Gemini 2.5 Pro, which reformats the transcript and extracts key quotes from it. This shortcut can only be used on a Mac.

1. Fun fact: I created this prompt via a standalone project in Claude that I specifically built to create better prompts using Anthropic’s syntax. It’s a bit meta – a prompt about creating better prompts – and I’ll share it in a future story.

2. I also discovered that Willison’s `llm` tool supports a `--no-stream` flag that allows you to perform a regular HTTP request where the API returns the entire response when it’s done rather than streaming it. However, when I added this flag to 3.7 Thinking, I got an error from the Anthropic API indicating that long requests (more likely to time out) don’t support non-streaming responses.