Thursday is Global Accessibility Awareness Day (GAAD), and to mark the occasion, Apple has previewed several new accessibility features coming to its OSes later this year. This preview has become an annual affair, but this year's is more packed than usual, with a wide variety of features for navigating UIs, automating tasks, interacting with Siri and CarPlay, enabling Live Captions in visionOS, and more. Apple hasn't announced when these features will debut, but if past years are any indication, most should be released in the fall as part of the annual OS release cycle.

Eye Tracking

Often, Apple's work in one area lends itself to new accessibility features in another. With Eye Tracking in iOS and iPadOS, the connection to the company's work on visionOS is clear. The feature lets users navigate UI elements on the iPhone and iPad by looking at them. The front-facing camera, combined with a machine learning model, follows the user's gaze and moves the selection as it shifts. No additional hardware is necessary.

Eye Tracking also works with Dwell, meaning that when a user pauses their gaze on an interface element, it will be clicked. The feature, which requires a one-time calibration setup process, will work with Apple’s apps, as well as third-party apps, on iPhones and iPads with an A12 Bionic chip or newer.

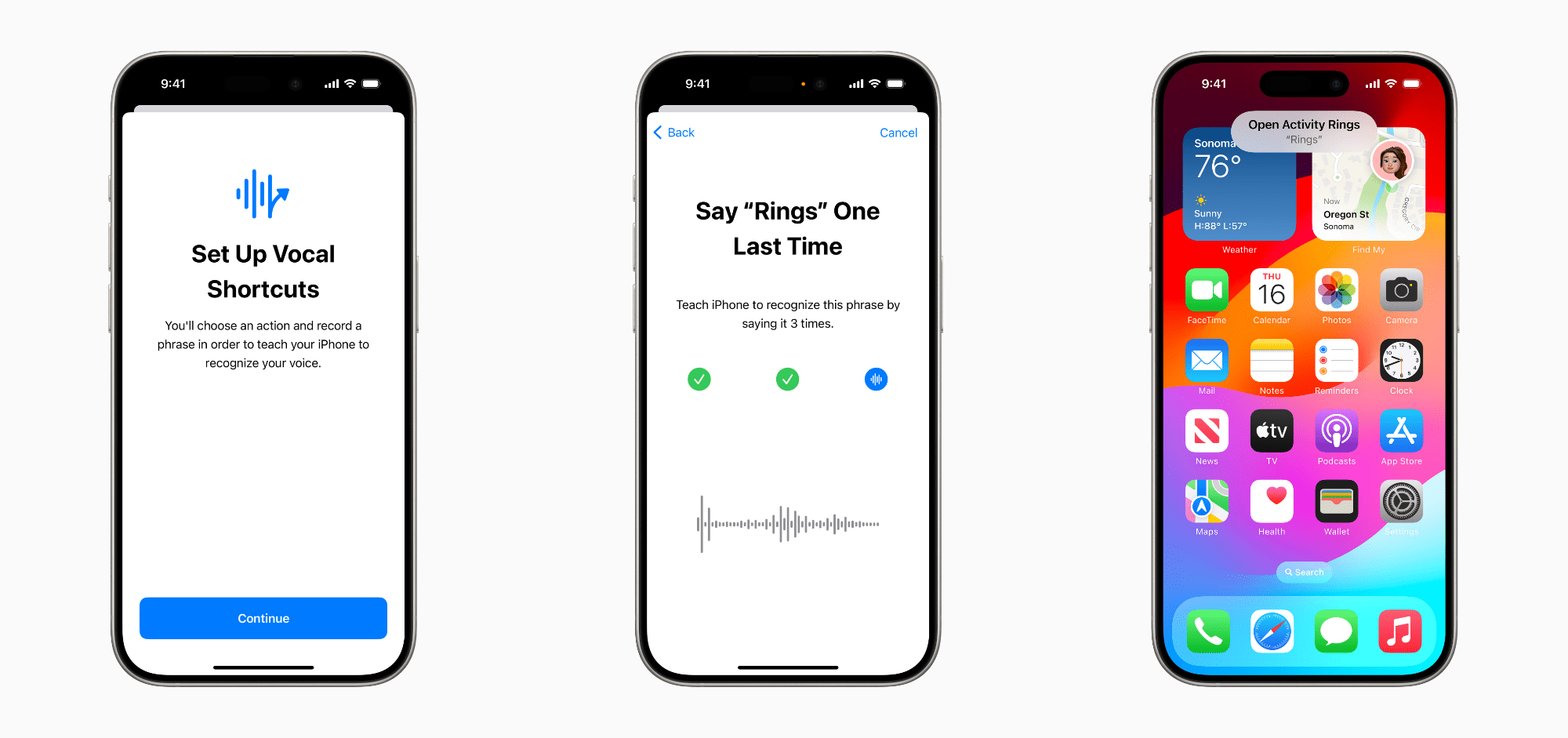

Vocal Shortcuts

Vocal Shortcuts let users define custom utterances that launch shortcuts and other tasks. The phrases are set up on-device for maximum privacy, using a process similar to Personal Voice. The feature works much like triggering a shortcut with Siri, but it doesn't require saying an assistant trigger word or phrase first.

Music Haptics

For deaf and hard-of-hearing iPhone customers, Apple has implemented Music Haptics, which uses haptic feedback to let users feel songs streamed via Apple Music without any additional devices. Apple has also created an API for third-party developers who want to incorporate Music Haptics into their streaming services or other music apps.

CarPlay and Vehicle Motion Cues

CarPlay is getting a significant accessibility update later this year with several new features. The system will gain sound recognition for things like car horns and sirens, and users will be able to control the CarPlay UI with their voice. A new color filter setting will make the interface easier to see for color-blind users, and options for bold and larger text are coming as well.

Apple is also introducing a solution for passengers who experience motion sickness when using a device in the car. Vehicle Motion Cues are a series of animated dots along the edges of your device's screen that move with the vehicle you're in. The dots shift left and right as the car turns and up and down as it accelerates and brakes. Motion sickness is commonly caused by a conflict between what you see and what you feel, and the dots give your brain visual cues that counteract that conflict. The feature can turn on automatically when your device senses that you're in a car, or it can be activated via Control Center.

Vision Pro Live Captioning

The Vision Pro is gaining Live Captions throughout visionOS. You'll be able to see captions onscreen in various contexts, including when someone is speaking to you and while you're watching immersive video. The Vision Pro will also add support for additional Made for iPhone hearing devices and cochlear hearing processors. Other accessibility options are coming to visionOS, too:

Updates for vision accessibility will include the addition of Reduce Transparency, Smart Invert, and Dim Flashing Lights for users who have low vision, or those who want to avoid bright lights and frequent flashing.

Everything Else

Apple's press release previews several other accessibility-related updates as well:

- For users who are blind or have low vision, VoiceOver will include new voices, a flexible Voice Rotor, custom volume control, and the ability to customize VoiceOver keyboard shortcuts on Mac.

- Magnifier will offer a new Reader Mode and the option to easily launch Detection Mode with the Action button.

- Braille users will get a new way to start and stay in Braille Screen Input for faster control and text editing; Japanese language availability for Braille Screen Input; support for multi-line braille with Dot Pad; and the option to choose different input and output tables.

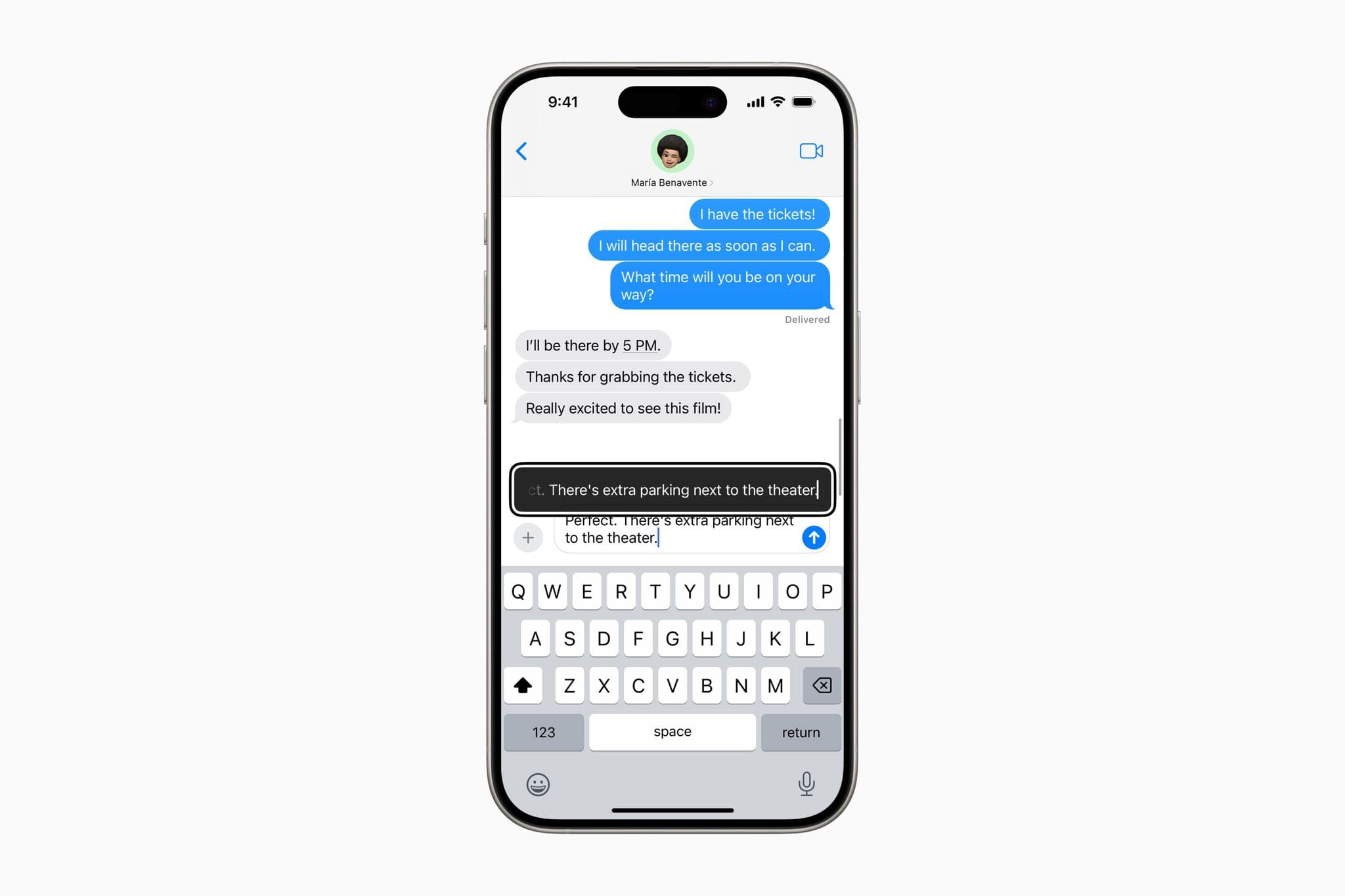

- For users with low vision, Hover Typing shows larger text, in the user's preferred font and color, when typing in a text field.

- For users at risk of losing their ability to speak, Personal Voice will be available in Mandarin Chinese. Users who have difficulty pronouncing or reading full sentences will be able to create a Personal Voice using shortened phrases.

- For users who are nonspeaking, Live Speech will include categories and simultaneous compatibility with Live Captions.

- For users with physical disabilities, Virtual Trackpad for AssistiveTouch allows users to control their device using a small region of the screen as a resizable trackpad.

- Switch Control will include the option to use the cameras in iPhone and iPad to recognize finger-tap gestures as switches.

- Voice Control will offer support for custom vocabularies and complex words.

In addition, throughout the month of May, Apple Stores are offering free classes to help customers learn about accessibility features. Apple has added a ‘Calming Sounds’ shortcut to its Shortcuts Gallery that plays soothing soundscapes. Plus, the App Store, Apple TV app, Books, Fitness+, and Apple Support are joining in with content that focuses on accessibility.

The week of Global Accessibility Awareness Day is the perfect time to preview these upcoming OS features. Doing so raises awareness of GAAD and highlights Apple's efforts to use technology to make its products usable by as many people as possible. I'm looking forward to testing everything announced today as the summer beta cycle begins.