Now You See It, Now You Don’t: A Review of the MOFT Invisible Stand

It’s not often that I come across something that slides so effortlessly into my everyday workflow as the MOFT Invisible Stand has. It doesn’t use batteries, it takes up negligible space, and it’s so light that I never question throwing it in my bag when I leave the house. The stand is barely there until I need it, which is when it really shines.

A Peek Into LookUp’s Word of the Day Art and Why It Could Never Be AI-Generated

Yesterday, Vidit Bhargava, developer of the award-winning dictionary app LookUp, wrote on his blog about the way he hand-makes each piece of artwork that accompanies the app’s Word of the Day. While revealing that he has employed this practice every day for an astonishing 10 years, Vidit talked about how each image is made from scratch as an illustration or using photography that he shoots specifically for the design:

Each Word of the Day has been illustrated with care, crafting digital illustrations, picking the right typography that conveys the right emotion.

Some words contain images, these images are painstakingly shot, edited and crafted into a Word of the Day graphic by me.

I’d noticed before that each Word of the Day image in LookUp seemed unique, but I assumed Vidit was using stock imagery and illustrations as a starting point. The revelation that he is creating these from scratch every single day was incredible and gave me a whole new level of respect for the developer.

The idea of AI-generated art (specifically art that is wholly generated from scratch by LLMs) is something that really sticks in my throat – never more so than with the recent rip-off of the beautiful, hand-drawn Studio Ghibli films by OpenAI. Conversely, Vidit’s work shows passion and originality.

To quote Vidit, “Real art takes time, effort and perseverance. The process is what makes it valuable.”

You can read the full blog post here.

Podcast Rewind: Our Favorite Utilities and the Nintendo Switch 2 Hardware Story

Enjoy the latest episodes from MacStories’ family of podcasts:

AppStories

This week, Federico and I share some of our favorite utility apps, including Amphetamine, Text Lens, Gifski, Folder Peek, Mic Drop, Keka, and Marked.

This episode is sponsored by:

- Rogue Amoeba: Makers of incredibly useful audio tools for your Mac. Use the code MS2504 through the end of April to get 20% off Rogue Amoeba’s apps.

NPC: Next Portable Console

This week, Federico, Brendon and I dive into Nintendo’s reveal of the Switch 2, analyzing the technical specifications, Mouse Control, the camera accessory, the new Pro Controller, Game Share, Nintendo’s strategy shift, and more.

This episode is sponsored by:

- Rogue Amoeba: Makers of incredibly useful audio tools for your Mac. Use the code MS2504 through the end of April to get 20% off Rogue Amoeba’s apps.

NPC XL

On a special early-release episode of NPC XL, Brendon, Federico, and I go beyond the hardware to dig into the tech behind the games Nintendo announced alongside the Switch 2 and consider game compatibility, the debut of GameCube games as part of Nintendo Switch Online, and more.

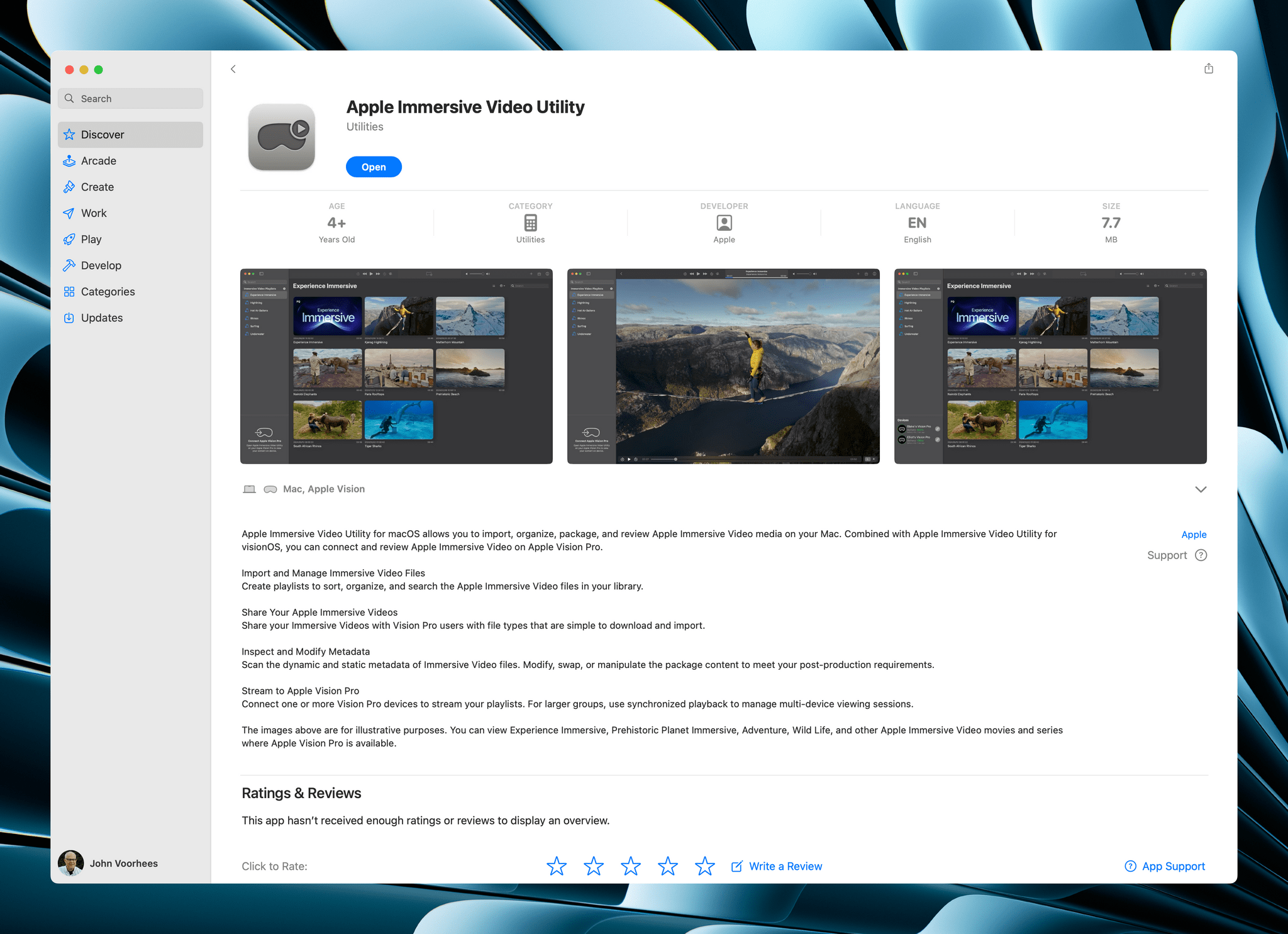

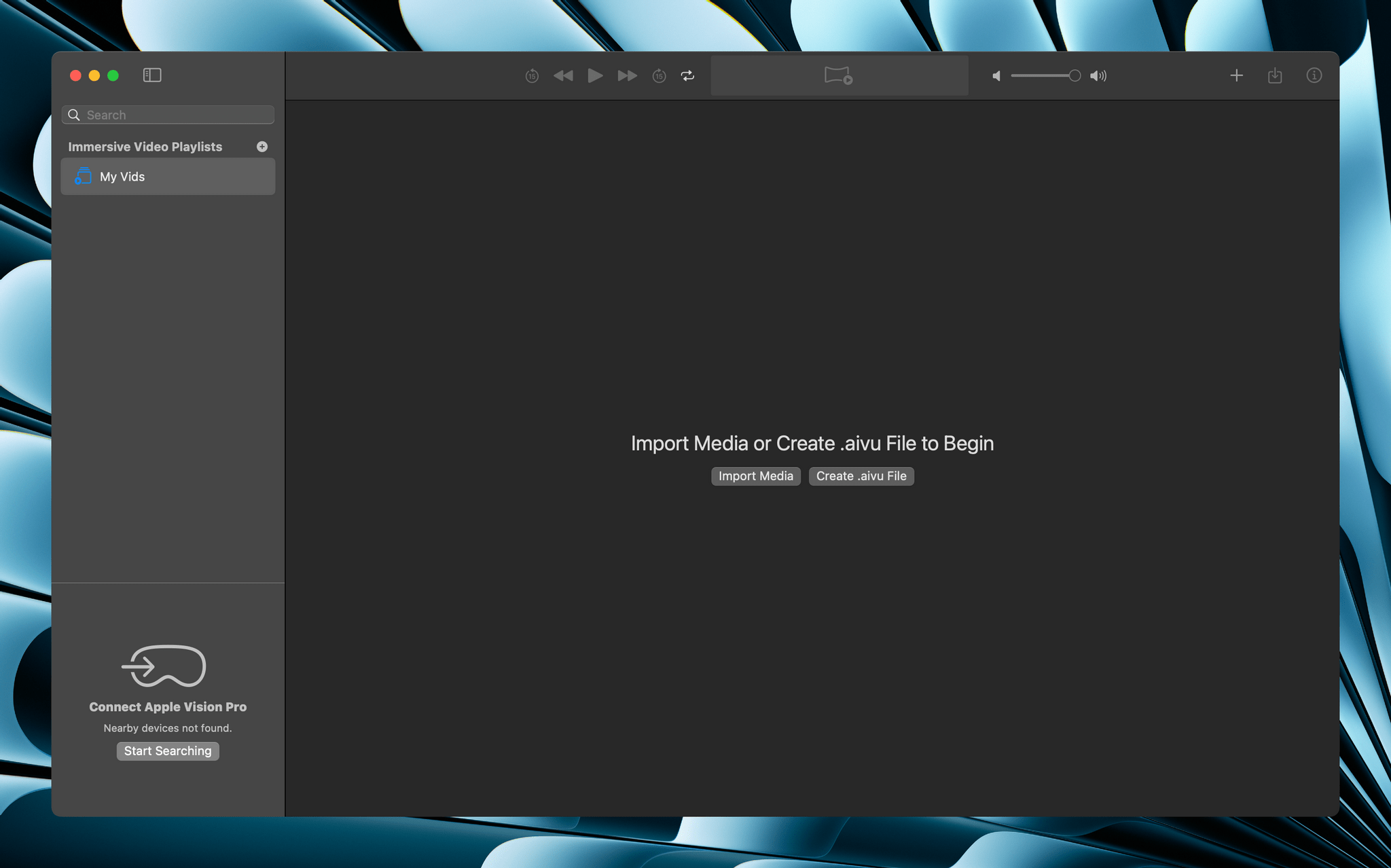

Apple Immersive Video Utility Released

Apple has released a new companion app called Apple Immersive Video Utility for Vision Pro owners that allows them to organize and manage immersive content with the help of a Mac. The utility, which is available for the Mac and Vision Pro, allows users to view, stream, and organize Apple Immersive Video into playlists. The app supports more than one Vision Pro, too, synchronizing playback of content streamed from a Mac to multiple Vision Pros. Videos can also be transferred from the Mac app to a Vision Pro for viewing on the headset.

The App Store description only touches on it, but Apple Immersive Video Utility, the company’s first new Mac app in a long time that wasn’t released as part of an OS update, appears to be designed for post-production work by video professionals. The app could also be used in group educational and training settings based on its feature set.

However, the fact that the National Association of Broadcasters (NAB) conference is taking place this week suggests that post-production video work is the app’s primary purpose. In fact, the app seems to go hand-in-hand with Blackmagic’s URSA Cine Immersive, an Apple Immersive Video camera that was also shown off at NAB this week, and DaVinci Resolve Studio 20, which supports editing of Apple Immersive Video.

To expand the library of available Apple Immersive Video, there need to be tools to create and manage the huge video files that are part of the process. It’s good to see Apple doing that along with companies like Blackmagic. I expect we’ll see more hardware and software solutions for the format as the months go by.

Rogue Amoeba: Turn Your Mac Into an Audio Powerhouse [Sponsor]

Rogue Amoeba, makers of powerful audio tools for your Mac, are back to sponsor MacStories. From professional podcasters to home users, their lineup of products can assist you with all your audio needs.

Making recordings with Audio Hijack is a cinch, and transcribing audio is even faster with version 4.5.

No need to record? Take advantage of SoundSource for amazing control of your Mac’s audio. You’ll have control of each app playing audio on your Mac right from your menu bar, so you can adjust per-app volume, apply audio effects, and even redirect playback to a different device.

And if you want to overhaul your microphone capabilities in audio or video calls, check out Loopback, which lets you create a virtual input device on your Mac. Target audio playing from apps running right on your Mac, like Rogue Amoeba’s fun soundboard app Farrago for background audio or sound effect clips, and combine it with your microphone without any loss in quality. You can even pair Loopback with Audio Hijack to add effects to your microphone.

Rogue Amoeba’s software is always available to try for free, and recent updates have brought a dramatically simpler setup process. Visit their site to download free fully functional trials. MacStories readers can save 20% on any purchase through their store with coupon code STORIES2504 at checkout. Act fast, as that deal ends April 20!

Our thanks to the team at Rogue Amoeba for sponsoring MacStories this week.

Podcast Rewind: Vibe Coding, Scrobbling, Mythic Quest, and Switch 2 Game Tech

Enjoy the latest episodes from MacStories’ family of podcasts:

Comfort Zone

Matt has built an Obsidian plugin with a fun name, Niléane is keeping the scrobbling dream alive, and everyone tries to find a great new Raycast extension.

MacStories Unwind

This week, the latest on the NVIDIA tech driving the Switch 2, a new Donkey Kong game that brings back podcast memories, plus Indiana Jones and the Great Circle and a super-slim Qi battery pack for your iPhone.

Magic Rays of Light

Sigmund and Devon highlight new Apple Original anthology series Side Quest and recap the recently wrapped season of its parent series Mythic Quest.

Is Electron Really That Bad?→

I’ve been thinking about this video by Theo Browne for the past few days, especially in the aftermath of my story about working on the iPad and realizing its best apps are actually web apps.

I think Theo did a great job contextualizing the history of Electron and how we got to this point where the majority of desktop apps are built with it. There are two sections of the video that stood out to me and I want to highlight here. First, this observation – which I strongly agree with – regarding the desktop apps we ended up having thanks to Electron and why we often consider them “buggy”:

There wouldn’t be a ChatGPT desktop app if we didn’t have something like Electron. There wouldn’t be a good Spotify player if we didn’t have something like Electron. There wouldn’t be all of these awesome things we use every day. All these apps… Notion could never have existed without Electron. VS Code and now Cursor could never have existed without Electron. Discord absolutely could never have existed without Electron.

All of these apps are able to exist and be multi-platform and ship and theoretically build greater and greater software as a result of using this technology. That has resulted in some painful side effects, like the companies growing way faster than expected because they can be adopted so easily. So they hire a bunch of engineers who don’t know what they’re doing, and the software falls apart. But if they had somehow magically found a way to do that natively, it would have happened the same exact way.

This has nothing to do with Electron causing the software to be bad and everything to do with the software being so successful that the companies hire too aggressively and then kill their own software in the process.

The second section of the video I want to call out is the part where Theo links to an old thread from the developer of BoltAI, a native SwiftUI app for Mac that went through multiple updates – and a lot of work on the developer’s part – to ensure the app wouldn’t hit 100% CPU usage when simply loading a conversation with ChatGPT. As documented in the thread from late 2023, this is a common issue for the majority of AI clients built with SwiftUI, which is often less efficient than Electron when it comes to rendering real-time chat messages. Ironic.

Theo argues:

You guys need to understand something. You are not better at rendering text than the Chromium team is. These people have spent decades making the world’s fastest method for rendering documents across platforms because the goal was to make Chrome as fast as possible regardless of what machine you’re using it on. Electron is cool because we can build on top of all of the efforts that they put in to make Electron and specifically to make Chromium as effective as it is. The results are effective.

The fact that you can swap out the native layer with SwiftUI with even just a web view, which is like Electron but worse, and the performance is this much better, is hilarious. Also notice there’s a couple more Electron apps he has open here, including Spotify, which is only using less than 3% of his CPU. Electron apps don’t have to be slow. In fact, a lot of the time, a well-written Electron app is actually going to perform better than an equivalently well-written native app because you don’t get to build rendering as effectively as Google does.

Even if you think you made up your mind about Electron years ago, I suggest watching the entire video and considering whether this crusade against more accessible, more frequently updated (and often more performant) desktop software still makes sense in 2025.

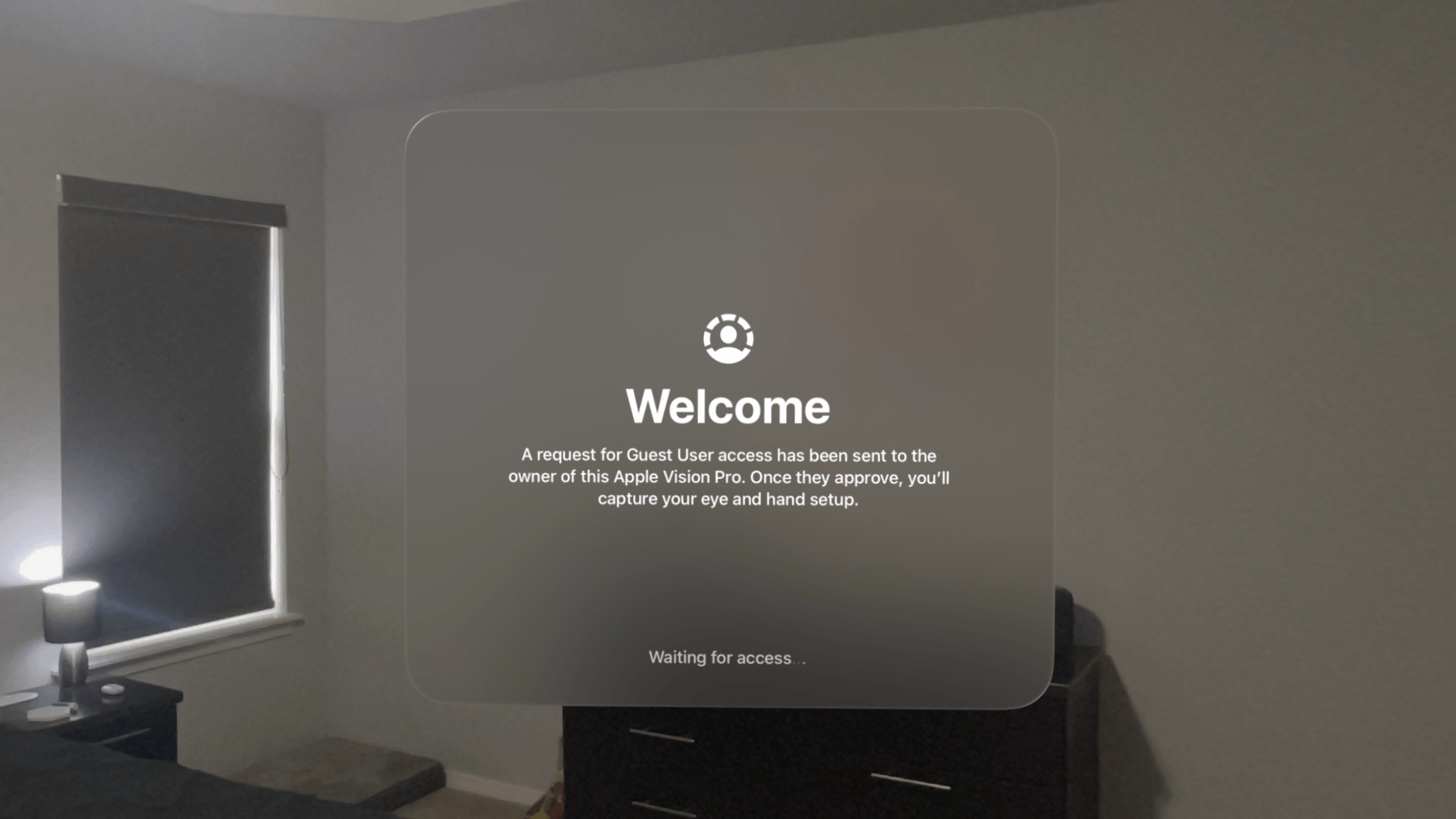

Hands-On with Guest User Mode in visionOS 2.4

The Apple Vision Pro is a device that begs to be shared with others. Sure, mirroring your view to a TV or iPhone via AirPlay is a decent way to give people a glimpse into the experience, but so much about visionOS – the windows floating in real-world spaces, immersive videos, 3D environments, spatial photos, and more – can only be truly understood by seeing them with your own eyes. That’s why Guest User mode is so vital to the platform.

Guest User was included in the very first version of visionOS, and Apple has iterated on the feature over time, most notably by adding the option to save a guest’s hand and eye data for 30 days in visionOS 2.0 to speed up repeat sessions. With this week’s release of visionOS 2.4, Guest User has received another major update, one that I think Vision Pro users will be very happy about.

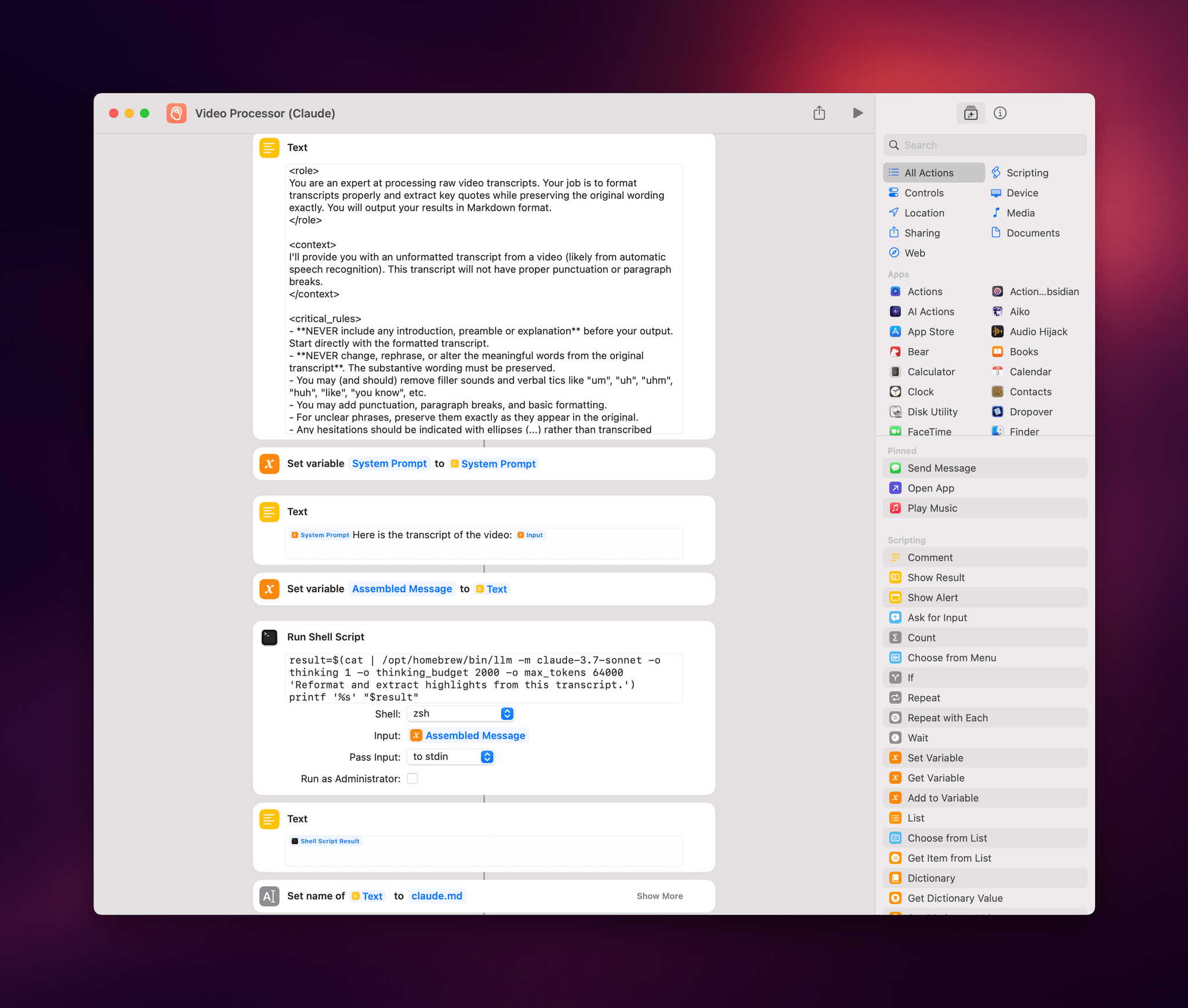

Using Simon Willison’s LLM CLI to Process YouTube Transcripts in Shortcuts with Claude and Gemini

I’ve been experimenting with different automations and command line utilities to handle audio and video transcripts lately. In particular, I’ve been working with Simon Willison’s LLM command line utility as a way to interact with cloud-based large language models (primarily Claude and Gemini) directly from the macOS terminal.

For those unfamiliar, Willison’s LLM CLI tool is a command line utility that lets you communicate with services like ChatGPT, Gemini, and Claude using shell commands and dedicated plugins. The llm command is extremely flexible when it comes to input and output; it supports multiple modalities like audio and video attachments for certain models, and it offers custom schemas to return structured output from an API. Even for someone like me – not exactly a Terminal power user – the different llm commands and options are easy to understand and tweak.
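To make the shell side of this concrete, here is a minimal Python sketch that pipes a transcript into `llm` on stdin, using the CLI’s `-m` (model), `-s` (system prompt), and optional `--schema` (structured output) options. It assumes `llm` is installed and on your PATH with the relevant model plugin and API key already configured; the model ID `gemini-2.0-flash` and the prompt text are illustrative assumptions, so run `llm models` to see which IDs your setup actually offers.

```python
import shlex
import subprocess
from typing import Optional


def build_llm_command(model: str, system_prompt: str, schema: Optional[str] = None) -> list[str]:
    """Assemble the argv for Simon Willison's `llm` CLI.

    -m picks the model and -s sets the system prompt; the transcript itself
    is piped in on stdin rather than passed as a command-line argument.
    """
    cmd = ["llm", "-m", model, "-s", system_prompt]
    if schema is not None:
        # --schema asks models that support it for structured (JSON) output.
        cmd += ["--schema", schema]
    return cmd


def run_llm(transcript: str, model: str, system_prompt: str, schema: Optional[str] = None) -> str:
    """Pipe a transcript into `llm` and return the model's reply.

    Assumes `llm`, a model plugin, and an API key are already set up.
    """
    result = subprocess.run(
        build_llm_command(model, system_prompt, schema),
        input=transcript,       # sent to llm on stdin
        capture_output=True,
        text=True,
        check=True,             # raise CalledProcessError if llm exits non-zero
    )
    return result.stdout


if __name__ == "__main__":
    # Print the equivalent one-liner, e.g. for a 'Run Shell Script' action.
    # The model ID here is an assumption; substitute one from `llm models`.
    cmd = build_llm_command(
        "gemini-2.0-flash",
        "Add paragraphs and punctuation; do not change any words.",
    )
    print("cat transcript.txt | " + shlex.join(cmd))
```

Keeping command construction separate from execution makes it easy to print and paste the exact one-liner into Shortcuts, or to swap in a Claude model ID without touching the rest of the logic.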

Today, I want to share a shortcut I created on my Mac that takes long transcripts of YouTube videos and:

- reformats them for clarity with proper paragraphs and punctuation, without altering the original text,

- extracts key points and highlights from the transcript, and

- organizes highlights by theme or idea.
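One way to picture these three steps is as a small pipeline in which each prompt runs on the output of the previous one. The Python sketch below assumes that sequential chaining (each step could just as well run on the raw transcript), and its prompts are hypothetical placeholders, not the ones the shortcut uses. The `ask` parameter is likewise a hypothetical stand-in for whatever actually sends text to a model; in practice it could wrap a `subprocess.run` call to `llm`.

```python
from typing import Callable

# Hypothetical prompts for the three steps (placeholders, not the shortcut's own).
STEPS = [
    ("reformatted", "Reformat this transcript with paragraphs and punctuation. "
                    "Do not change, add, or remove any words."),
    ("highlights", "Extract the key points and most interesting passages "
                   "from this transcript as a bulleted list."),
    ("themes", "Group these highlights under short headings, one per theme or idea."),
]

# An "ask" function takes (system_prompt, text) and returns the model's reply.
Ask = Callable[[str, str], str]


def process_transcript(transcript: str, ask: Ask) -> dict[str, str]:
    """Run the three steps in order, feeding each step's output into the next."""
    results: dict[str, str] = {}
    text = transcript
    for name, prompt in STEPS:
        text = ask(prompt, text)
        results[name] = text  # keep every intermediate result, not just the last
    return results


if __name__ == "__main__":
    # Offline stand-in model that tags its input with the prompt's first word,
    # so the flow of data through the steps can be checked without an API key.
    def fake_ask(prompt: str, text: str) -> str:
        return f"[{prompt.split()[0]}] {text}"

    for step, output in process_transcript("so um electron is fine", fake_ask).items():
        print(step, "->", output)
```

Passing the model call in as a function keeps the step logic testable offline and lets you switch between Claude and Gemini without changing the pipeline itself.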

I created this shortcut because I wanted a better system for linking to YouTube videos, along with interesting passages from them, on MacStories. Initially, I thought I could use an app I recently mentioned on AppStories and Connected to handle this sort of task: AI Actions by Sindre Sorhus. However, when I started experimenting with long transcripts (such as this one with 8,000 words from Theo about Electron), I immediately ran into limitations with native Shortcuts actions. Those actions were running out of memory and randomly stopping the shortcut.

I figured that invoking a shell script using macOS’ built-in ‘Run Shell Script’ action would be more reliable. Typically, Apple’s built-in system actions (especially on macOS) aren’t bound to the same memory constraints as third-party ones. My early tests indicated that I was right, which is why I decided to build the shortcut around Willison’s llm tool.