Busy day at Google today: the company rolled out version 2.0 of its Gemini AI assistant (previously announced in December) with a variety of new and updated models to more users. From the Google blog:

> Today, we’re making the updated Gemini 2.0 Flash generally available via the Gemini API in Google AI Studio and Vertex AI. Developers can now build production applications with 2.0 Flash.
>
> We’re also releasing an experimental version of Gemini 2.0 Pro, our best model yet for coding performance and complex prompts. It is available in Google AI Studio and Vertex AI, and in the Gemini app for Gemini Advanced users.
>
> We’re releasing a new model, Gemini 2.0 Flash-Lite, our most cost-efficient model yet, in public preview in Google AI Studio and Vertex AI.
>
> Finally, 2.0 Flash Thinking Experimental will be available to Gemini app users in the model dropdown on desktop and mobile.

Google’s reasoning model (which, like DeepSeek-R1 or OpenAI’s o1/o3 family, can display its “chain of thought” and perform multi-step thinking about a user query) is currently ranked #1 in the popular Chatbot Arena LLM leaderboard. A separate blog post from Google also details the new pricing structure for third-party developers who want to integrate with the Gemini 2.0 API and confirms some of the features coming soon to both Gemini 2.0 Flash and 2.0 Pro, such as image and audio output. Notably, the 2.0 Flash-Lite model is even cheaper for developers, and I bet we’re going to see it soon in utilities like Obsidian Web Clipper, composer fields of social media clients, and more.

As part of my ongoing evaluation of assistive AI tools, I’ve been using Gemini since its initial rollout in December, progressively replacing ChatGPT with it. Today, after the general release of 2.0 Flash, I went ahead and finally swapped ChatGPT for Gemini in my iPhone’s dock.

This will probably need to be an in-depth article at some point, but my take so far is that although ChatGPT gets more media buzz and is the more mainstream product, I think Google is doing more fascinating work with a) their proprietary AI silicon and b) turning LLMs into actual products for personal and professional use that are integrated with their ecosystem. Gemini (rightfully) got a bad rap with its initial release last year, and while it still hallucinates responses (as all LLMs still do), its 2.0 models are more than good enough for the sort of search queries I was asking ChatGPT before. Plus, we pay for Google Workspace at MacStories, and I like that Gemini is directly integrated with the services we use on a daily basis, such as Drive and Gmail.

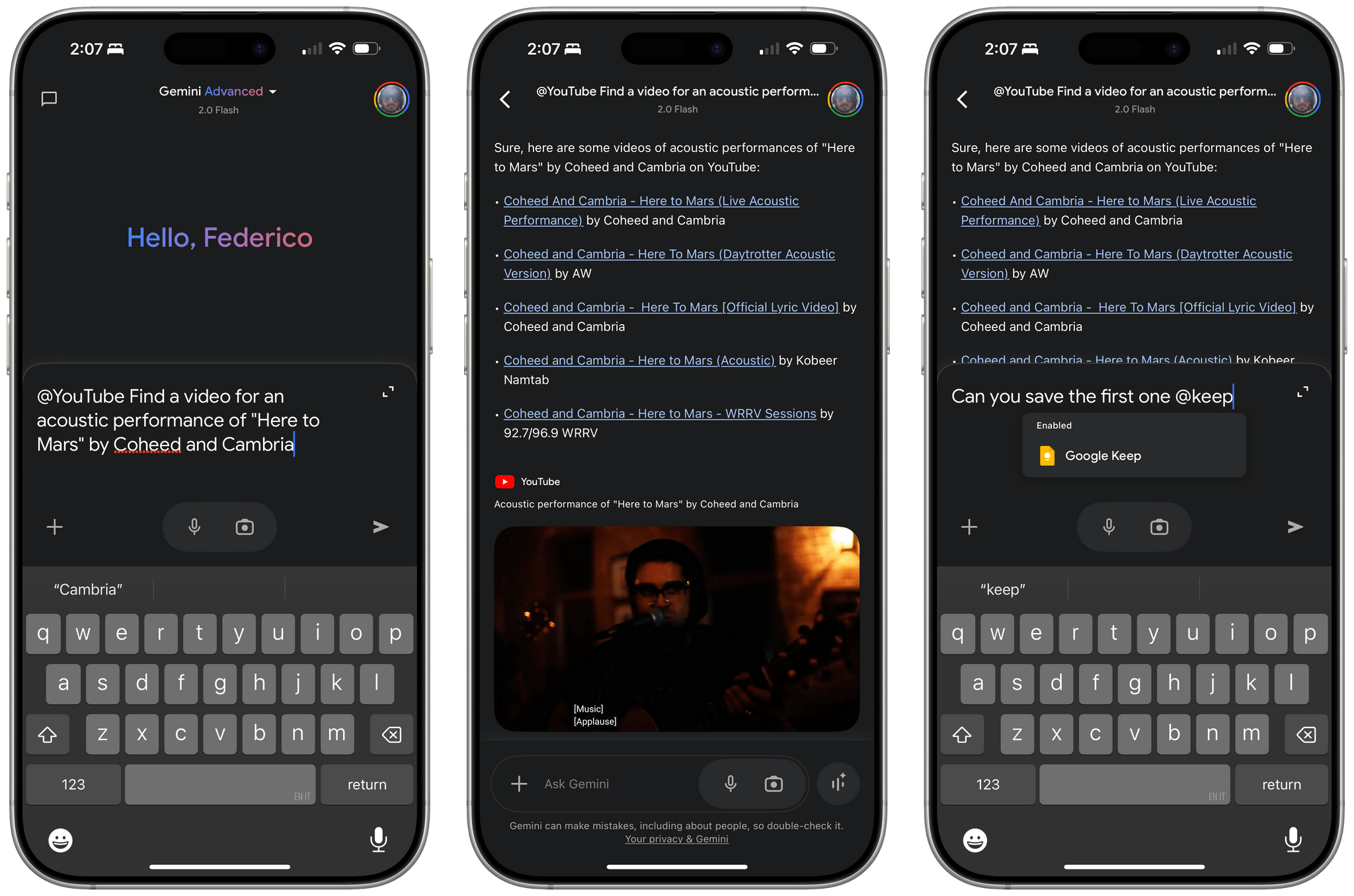

Most of all, I’m very intrigued by Gemini’s support for extensions, which turn conversations with a chatbot into actions that can be performed with other Google apps. For instance, I’ve been enjoying the ability to save research sessions to Google Keep by simply invoking the app and telling Gemini what I want to save. I’ve searched YouTube videos with it, looked up places in Google Maps, and – since I’ve been running a platform-agnostic home automation setup in my apartment that natively supports HomeKit, Alexa, and Google Home all at once – even controlled my lights with it. While custom GPTs in ChatGPT seem like abandonware now, Gemini’s app integrations are fully functional, integrated across the Google ecosystem, and expanding to third-party services as well.

Even more impressively, today Google rolled out a preview of a reasoning version of Gemini 2.0 that can integrate with YouTube, Maps, and Search. The idea here is that Gemini can think longer about your request, display its thought process, then do something with apps. So I asked:

> I want you to find the best YouTube videos with Oasis acoustic performances where Liam is the singer. Only consider performances dated 1994-1996 that took place in Europe. I am not interested in demos, lyrics videos, or other non-live performances. They have to be acoustic sets with Noel playing the guitar and Liam singing.

Sure enough, I was presented with some solid results. If Google can figure out how to integrate reasoning capabilities with advanced Gmail searches, that’s going to give services like Shortwave and Superhuman a run for their money. And that’s not to mention all the other apps in Google’s suite that could theoretically receive a similar treatment.

However, the Gemini app falls short of ChatGPT and Claude in terms of iOS/iPadOS user experience in several key areas.

The app doesn’t support widgets (which Claude has), doesn’t offer any Shortcuts actions (both Claude and ChatGPT have them), doesn’t have a native iPad app (sigh), and I can’t figure out if there’s a deep link to quickly start a new chat on iOS. The photo picker is also limited in that it only lets you attach one image at a time, and the web app doesn’t support native PWA installation on iPhone and iPad.

Clearly, there’s a long road ahead for Google to make Gemini a great experience on Apple platforms. And yet, none of these missing features have been dealbreakers for me when Gemini is so fast and I can connect my conversations to the other Google services I already use. This is precisely why I remain convinced that a “Siri LLM” (“Siri Chat” as a product name, perhaps?) with support for conversations integrated and/or deep-linked to native iOS apps may be Apple’s greatest asset…in 2026.

Ultimately, I believe that, even though ChatGPT has captured the world’s attention, it is Gemini that will be the ecosystem to beat for Apple. It always comes down to iPhone versus Android after all. Only this time, Apple is the one playing catch-up.