It’s Called the Carousel

The photos on our devices are more than files in a library. They’re tiny bits of our past. The places we went to; the people we were; those we met. Together, they’re far more powerful than memory alone. Photos allow us to ache, cherish, and remember.

Without tools to rediscover and relive memories, none of that matters. A camera that’s always with us has enabled us to take a picture for every moment, but it created a different set of issues. There’s too much overhead in finding our old selves in a sea of small thumbnails. And what purpose is there to a photo if it’s never seen again?

Apple sees this as a problem, too, and they want to fix it with iOS 10. With storage, syncing, and 3D Touch now taken care of, the new Photos focuses on a single, all-encompassing aspect of the experience:

You.

Computer Vision

Apple’s rethinking of what Photos can do starts with a layer of intelligence built into our devices. The company refers to it as “advanced computer vision”, and it spans elements such as recognition of scenes, objects, places, and faces in photos, categorization, relevancy thresholds, and search.

Second, Apple believes iOS devices are smart and powerful enough to handle this aspect of machine learning themselves. The intelligence-based features of Photos are predicated on an implementation of on-device processing that doesn’t transmit private user information to the cloud – not even Apple’s own iCloud (at least not yet).

Photos’ learning is done locally on each device by taking advantage of the GPU: after a user upgrades to iOS 10, the first backlog of photos will be analyzed overnight when a device is connected to Wi-Fi and charging; after the initial batch is done, new pictures will be processed almost instantaneously after taking them. Photos’ deep learning classification is encrypted locally, it never leaves the user’s device, and it can’t be read by Apple.

As a Google Photos user, I was more than doubtful when Apple touted the benefits of on-device intelligence with iOS 10’s Photos app. What were the chances Apple, a new player in the space, could figure out deep learning in Photos just by using the bits inside an iPhone?

You’ll be surprised by how much Apple has accomplished with Photos in iOS 10. It’s not perfect, and, occasionally, it’s not as eerily accurate as Google Photos, but Photos’ intelligence is good enough, sometimes great, and it’s going to change how we relive our memories.

Memories

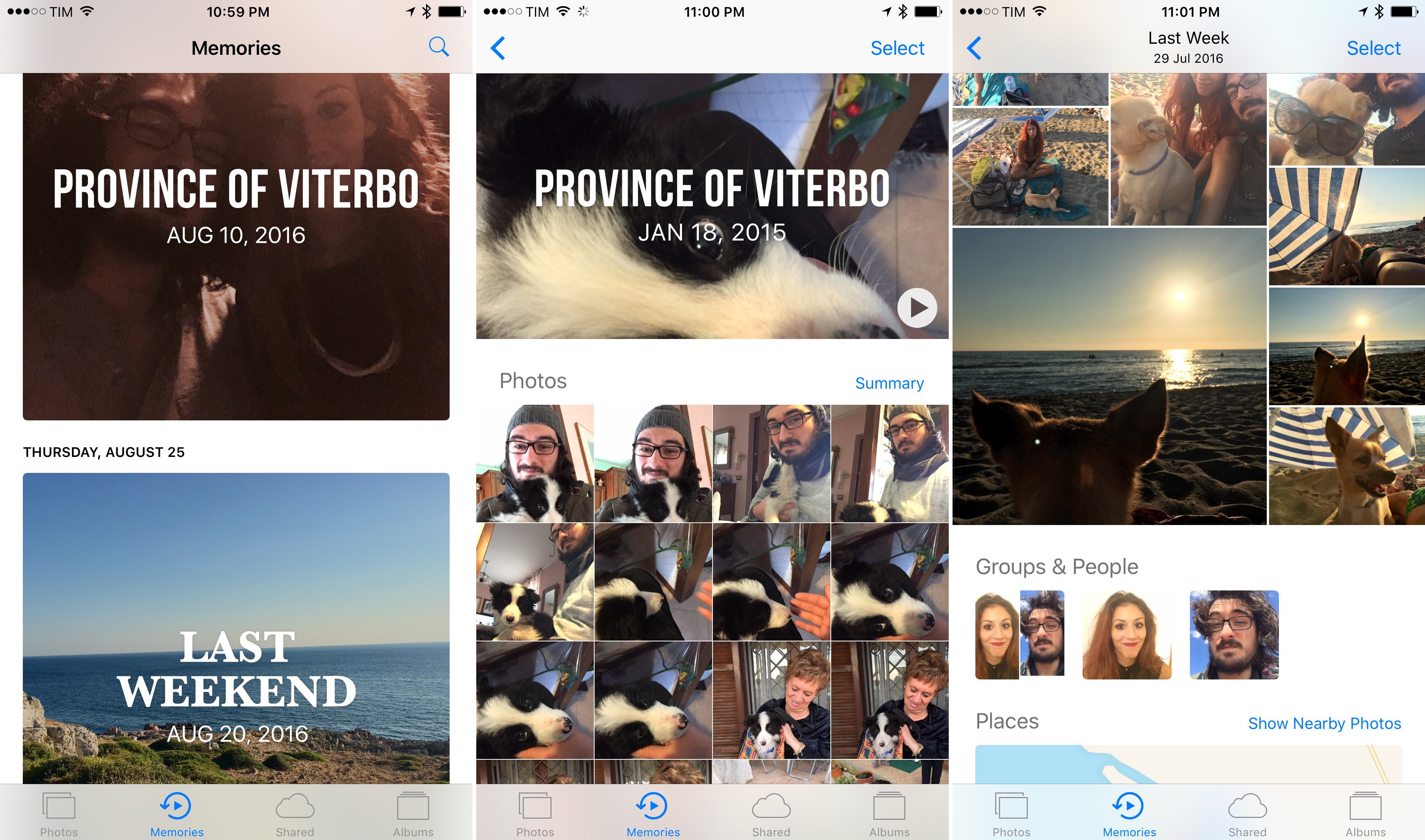

Of the three intelligence features in Photos, Memories is the one that gained a spot in the tab bar. Memories creates collections of photos automatically grouped by people, date, location, and other criteria. They’re generated almost daily depending on the size of your library, quantity of information found in photos, and progress of on-device processing.

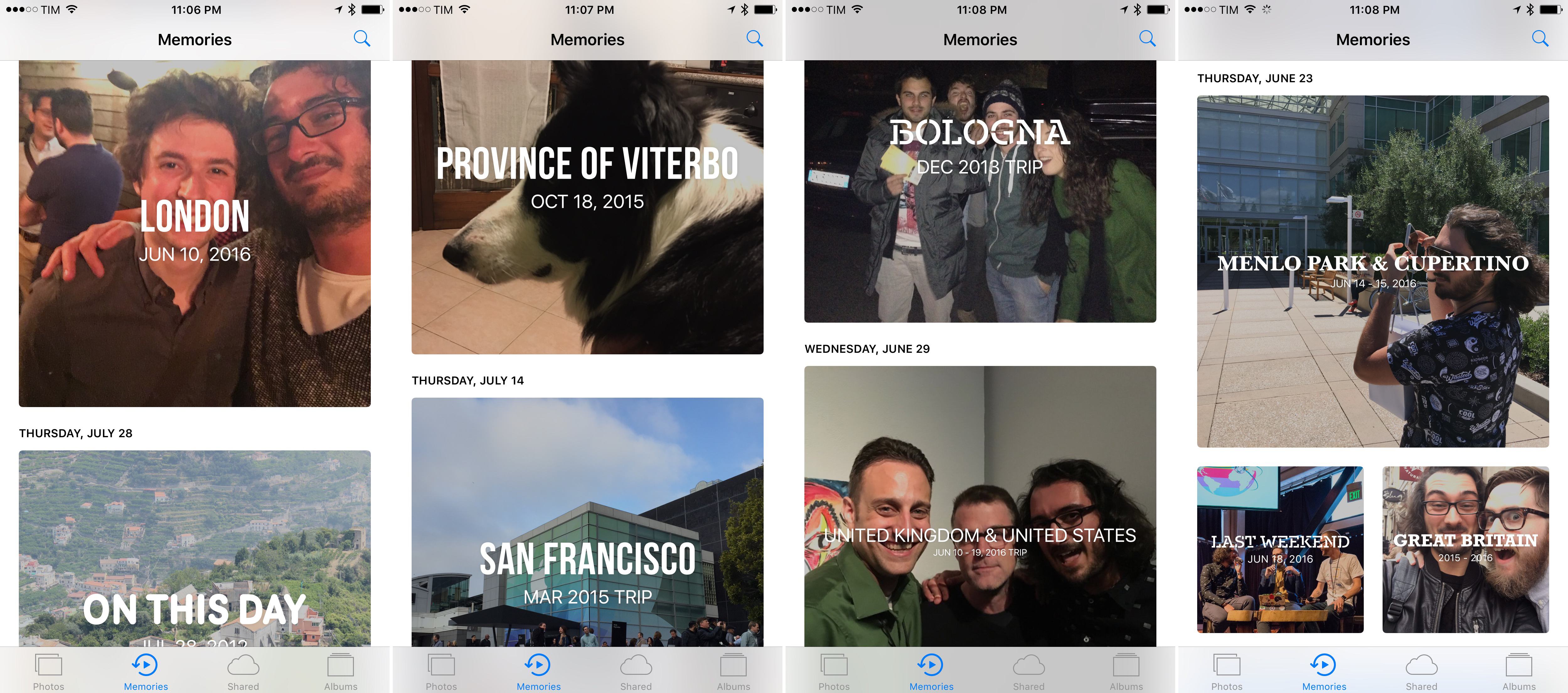

The goal of Memories is to let you rediscover moments from your past. There are some specific types of memories. For instance, you’ll find memories for a location, a person, a couple, a day, a weekend, a trip spanning multiple weeks, a place, or “Best Of” collections that highlight photos from multiple years.

In my library, I have memories for my trip to WWDC (both “Great Britain and United States” and “Myke and Me”), pictures taken “At the Beach”, and “Best of This Year”. There’s a common thread in the memories Photos generates, but they’re varied enough and iOS does a good job at bringing up relevant photos at the right time.

Behind the scenes, Memories are assembled with metadata contained in photos or recognized by on-device intelligence. Pieces of data like location, time of the day, and proximity to points of interest are taken into consideration, feeding an engine that also looks at aspects such as faces.

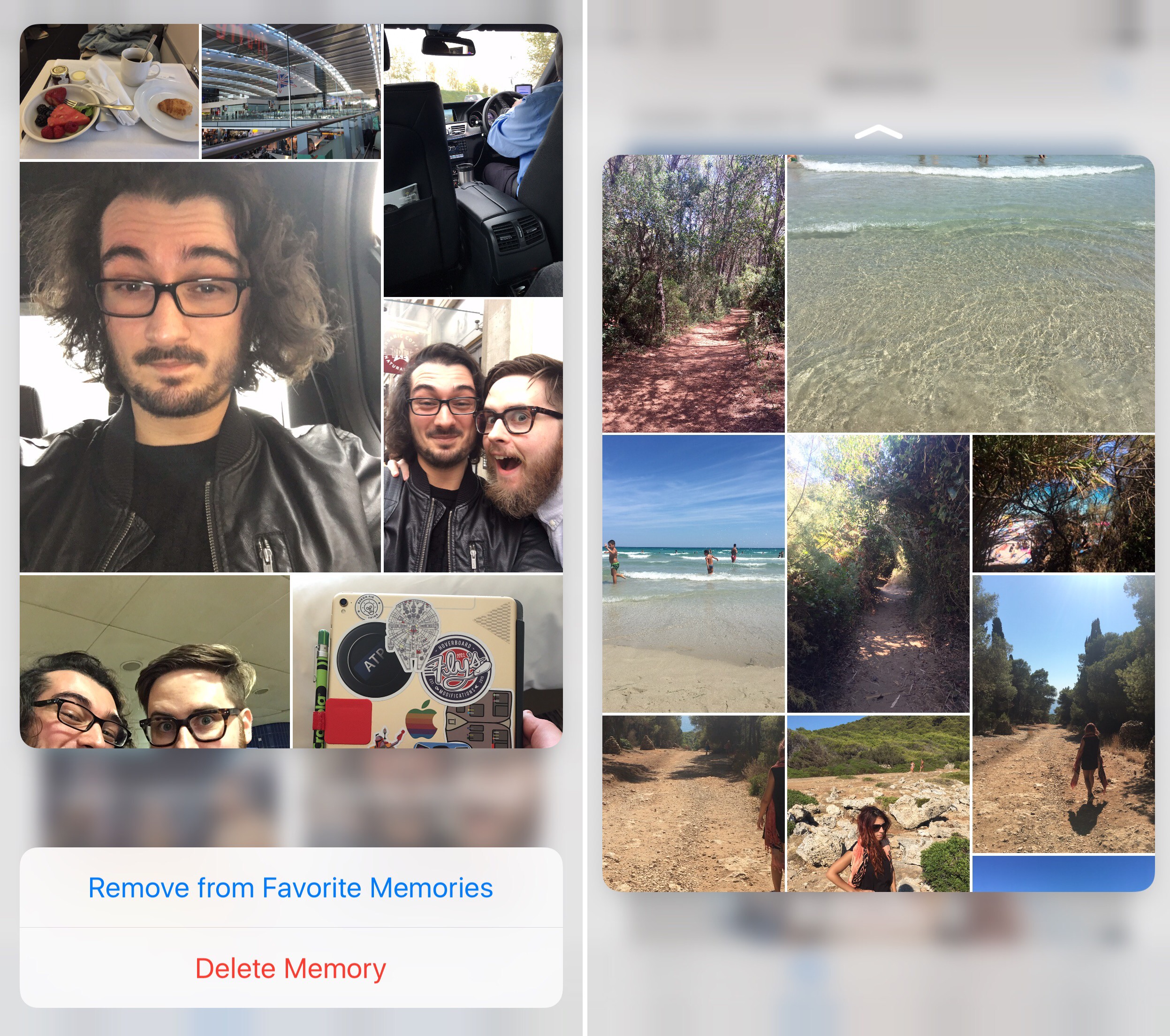

Scrolling Memories feels like flipping through pages of a scrapbook. Cover images are intelligently chosen by the app; if you press a memory’s preview, iOS brings up a collage-like peek with buttons to delete a memory or add it to your favorites.

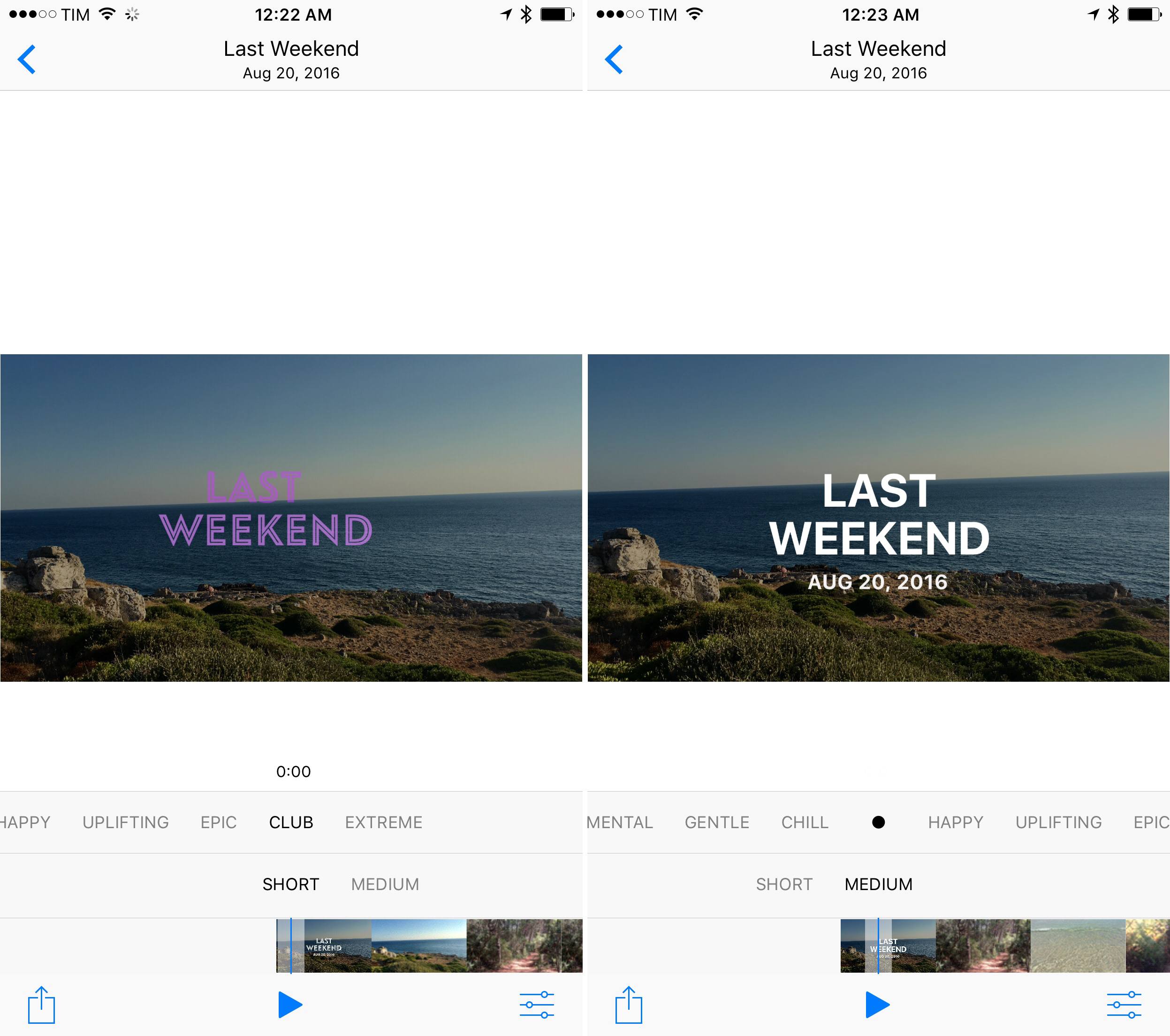

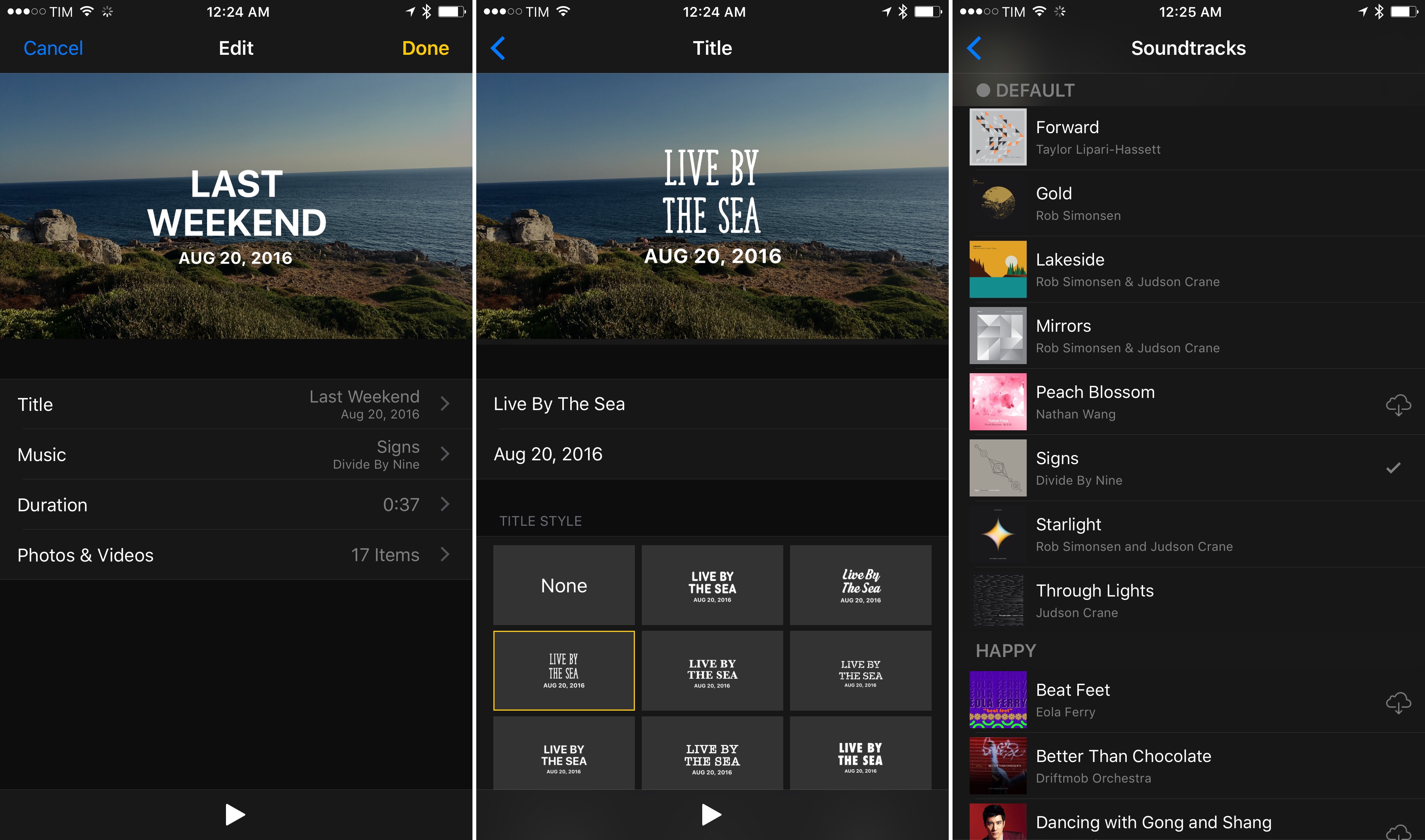

Tapping a memory transitions to a detail screen where the cover morphs into a playable video preview at the top. Besides photos, Memories generates a slideshow movie that you can save as a video in your library. Slideshows combine built-in soundtracks (over 80), pictures, videos, and Live Photos to capture an extended representation of a memory that you can share with friends or stream for the whole family on an Apple TV.

Each video comes with quick adjustment controls and deeper settings reminiscent of iMovie. In the main view, there’s a scrollable bar at the bottom to pick one of eight “moods”, ranging from dreamy and sentimental to club and extreme. Photos picks a neutral mood by default, which is a mix of uplifting and sentimental; moods affect the music used in the slideshows, as well as the cover text, selection of media, and transitions between items. You can also change the duration of a movie (short, medium, and long); doing so may require Photos to download additional assets from iCloud.

To have finer control over Memories’ movies, you can tap the editing button in the bottom right (the three sliders). Here, you can customize the title and subtitle with your own text and different styles, enter a duration in seconds, manually select photos and videos from a memory, and replace Apple’s soundtrack with your favorite music.[66]

Below the slideshow, Memories displays a grid of highlights. Both in the grid and the slideshow, Photos applies de-duplication, removing photos similar to each other.[67] Apple’s Memories algorithm tends to promote pictures that are well-lit, or where people are smiling, to a bigger size in the grid. In Memories, a photo’s 3D Touch peek menu includes a ‘Show Photos from this Day’ option to jump to a specific moment.
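Apple hasn't said how Photos decides that two pictures are "similar", so the following is only a rough sketch of the general idea, not Apple's method. It assumes each photo already has a small perceptual fingerprint (the `fingerprint` field, the 16-bit size, and the `max_distance` threshold are all hypothetical) and keeps a photo only when it differs enough from everything already kept.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Photo:
    name: str
    fingerprint: int  # hypothetical 16-bit perceptual hash, assumed precomputed


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def summarize(photos, max_distance=2):
    """Greedy de-duplication: keep a photo only if it is not a near-copy
    of a photo that has already been kept."""
    kept = []
    for photo in photos:
        if all(hamming(photo.fingerprint, k.fingerprint) > max_distance for k in kept):
            kept.append(photo)
    return kept


burst = [
    Photo("beach_1", 0b1111000011110000),
    Photo("beach_2", 0b1111000011110001),  # one bit away from beach_1
    Photo("dinner", 0b0000111100001111),
]
summary = summarize(burst)
print([p.name for p in summary])  # ['beach_1', 'dinner']
```

Returning the unfiltered list instead of `summary` would be the equivalent of a "show everything" view, where every item in the memory appears.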

As you scroll further down a memory’s contents, you’ll notice how Photos exposes some of the data it uses to build Memories with People and Places.

The memories you see in the main Memories page are highlights – the best memories recommended for you. In reality, iOS 10 keeps a larger collection of memories generated under the hood. For example, every moment (the sub-group of photos taken at specific times and locations) can be viewed as a memory. In each memory, you’ll find up to four suggestions for related memories, where the results are more hit-and-miss.
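To make the idea of a moment more concrete, here is a minimal sketch of how photos could be split into groups by time and location. It is an illustration under stated assumptions, not Apple's algorithm: the `Photo` fields, the three-hour gap, and the five-kilometer radius are all made up for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt


@dataclass
class Photo:
    taken_at: datetime
    lat: float
    lon: float


def distance_km(a: Photo, b: Photo) -> float:
    """Great-circle distance between two photos (haversine formula)."""
    dlat = radians(b.lat - a.lat)
    dlon = radians(b.lon - a.lon)
    h = sin(dlat / 2) ** 2 + cos(radians(a.lat)) * cos(radians(b.lat)) * sin(dlon / 2) ** 2
    return 2 * 6371 * asin(sqrt(h))


def group_into_moments(photos, max_gap=timedelta(hours=3), max_km=5.0):
    """Walk photos in chronological order and start a new moment whenever
    the next photo is too far away in time or space (thresholds are assumed)."""
    moments = []
    for photo in sorted(photos, key=lambda p: p.taken_at):
        if moments:
            last = moments[-1][-1]
            close_in_time = photo.taken_at - last.taken_at <= max_gap
            close_in_space = distance_km(last, photo) <= max_km
            if close_in_time and close_in_space:
                moments[-1].append(photo)
                continue
        moments.append([photo])
    return moments


library = [
    Photo(datetime(2016, 6, 1, 10, 0), 41.9028, 12.4964),    # Rome
    Photo(datetime(2016, 6, 1, 11, 0), 41.9000, 12.5000),    # Rome, an hour later
    Photo(datetime(2016, 6, 13, 9, 0), 37.7749, -122.4194),  # San Francisco
]
moments = group_into_moments(library)
print([len(m) for m in moments])  # [2, 1]
```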

In many ways, Apple’s Memories are superior to Google Assistant’s creations: they’re not as frequent and they truly feel like the best moments from your past. Where Google Photos’ Assistant throws anything at the wall to see what you might want to save, I can’t find a memory highlighted by Photos that isn’t at least somewhat relevant to me. iOS 10’s Memories feel like precious stories made for me instead of clever collages.[68]

Memories always bring back some kind of emotion. I find myself anticipating new entries in the Memories screen to see where I’ll be taken next.

People and Groups

Available for years on the desktop, Faces have come to Photos on iOS with the ability to browse and manage people matched by the app.

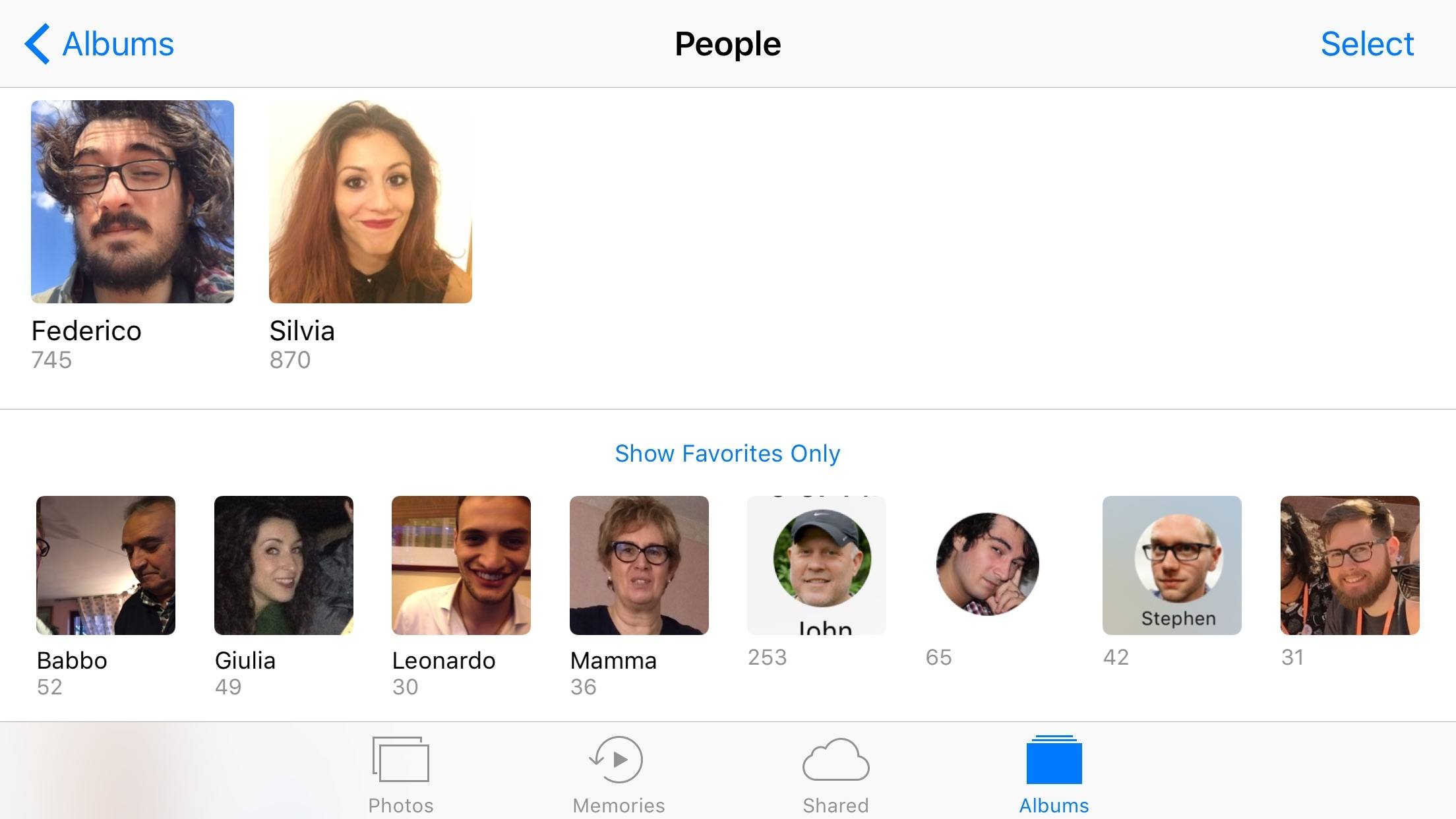

There are multiple ways to organize people recognized in your photo library. The easiest is the People view, a special album with a grid of faces that have either been matched and assigned to a person or that still need to be tagged.

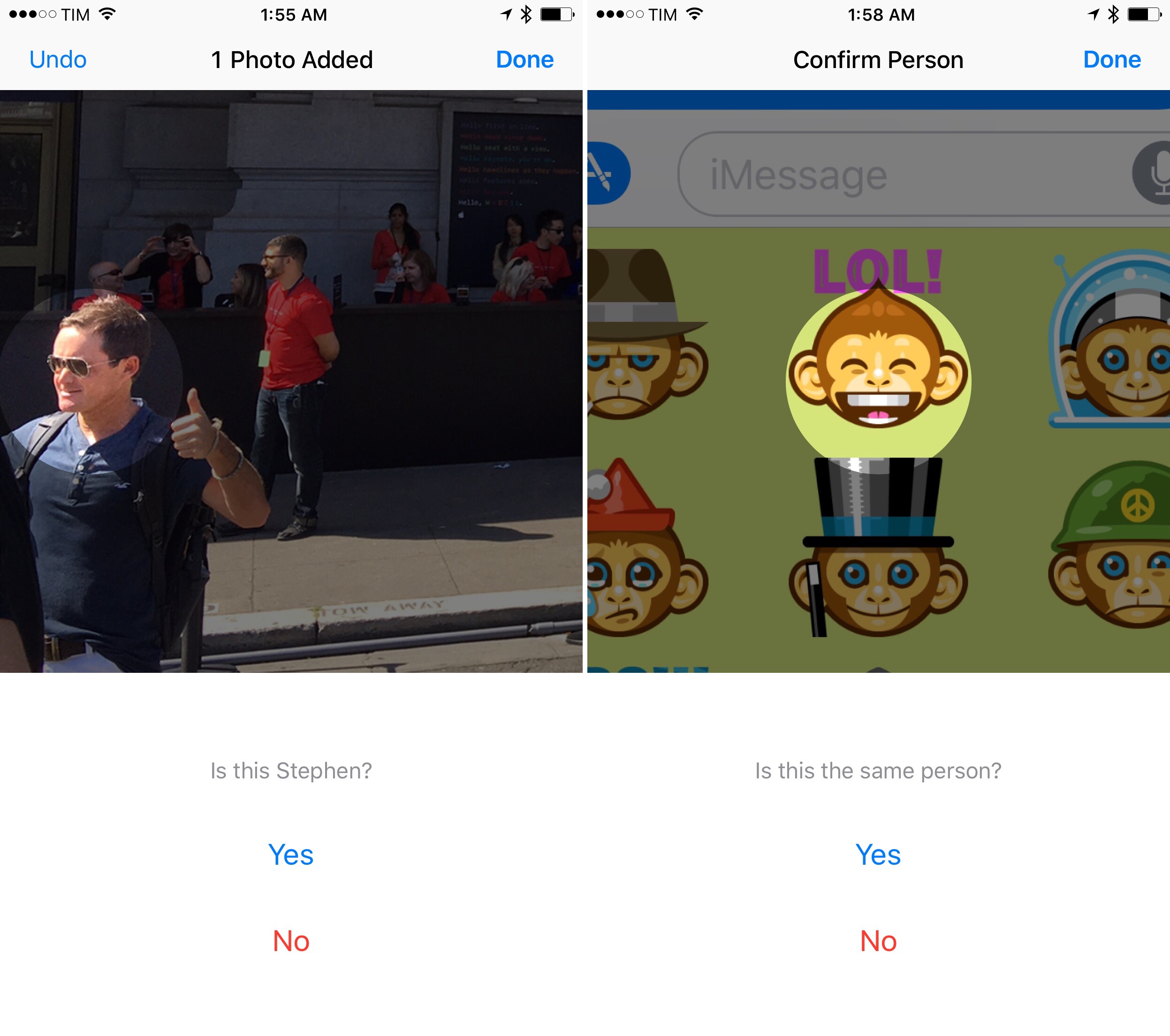

Like on macOS, the initial tagging process is manual: when you tap on an unnamed face, photos from that person have an ‘Add Name’ button in the title bar. You can choose one of your contacts to assign the photos to.

As you start building a collection of People, the album’s grid will populate with more entries. To have quicker access to the most important people – say, your kids or partner – you can drag faces towards the top and drop them in a favorites area.[69]

Marking people as favorites

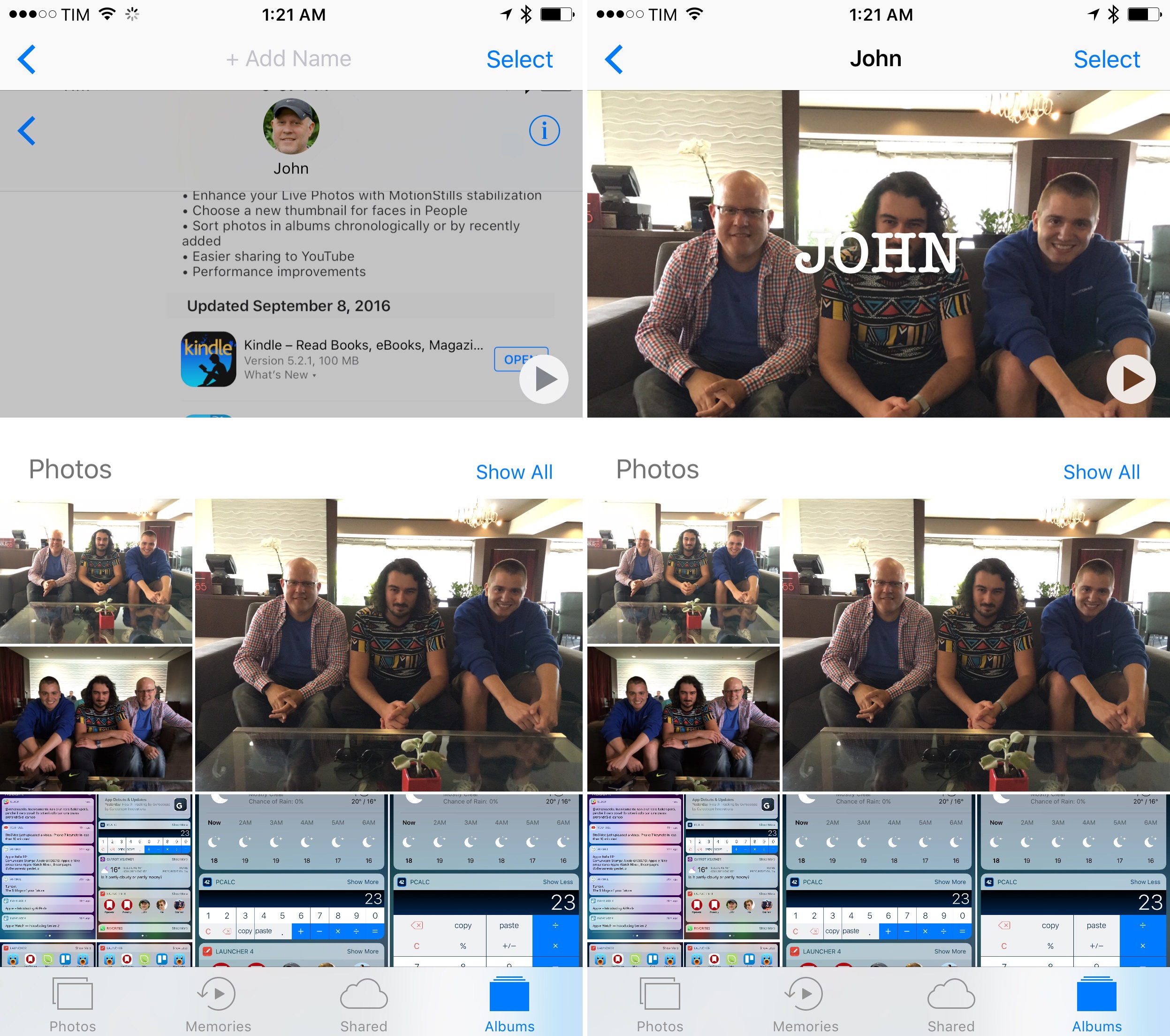

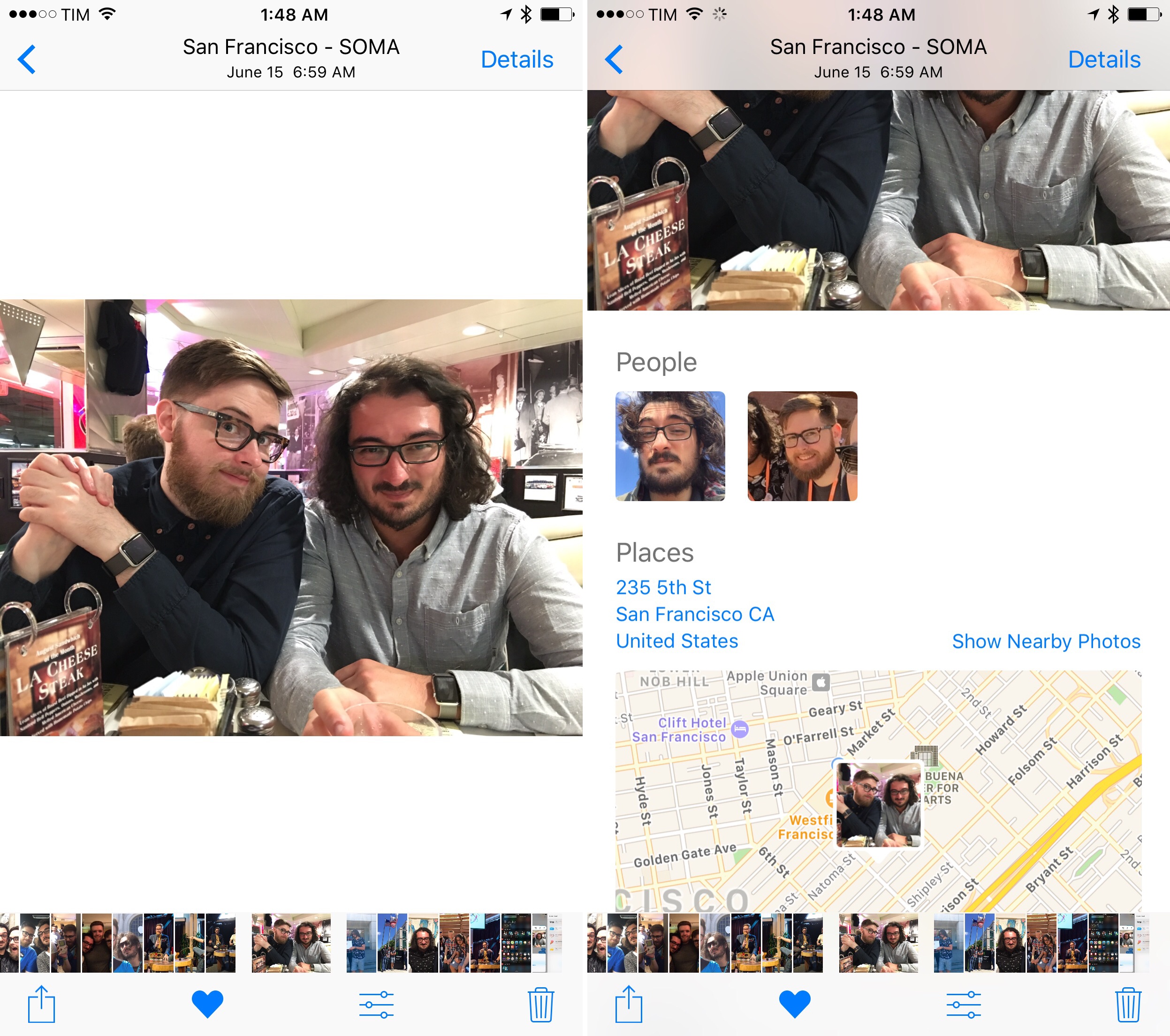

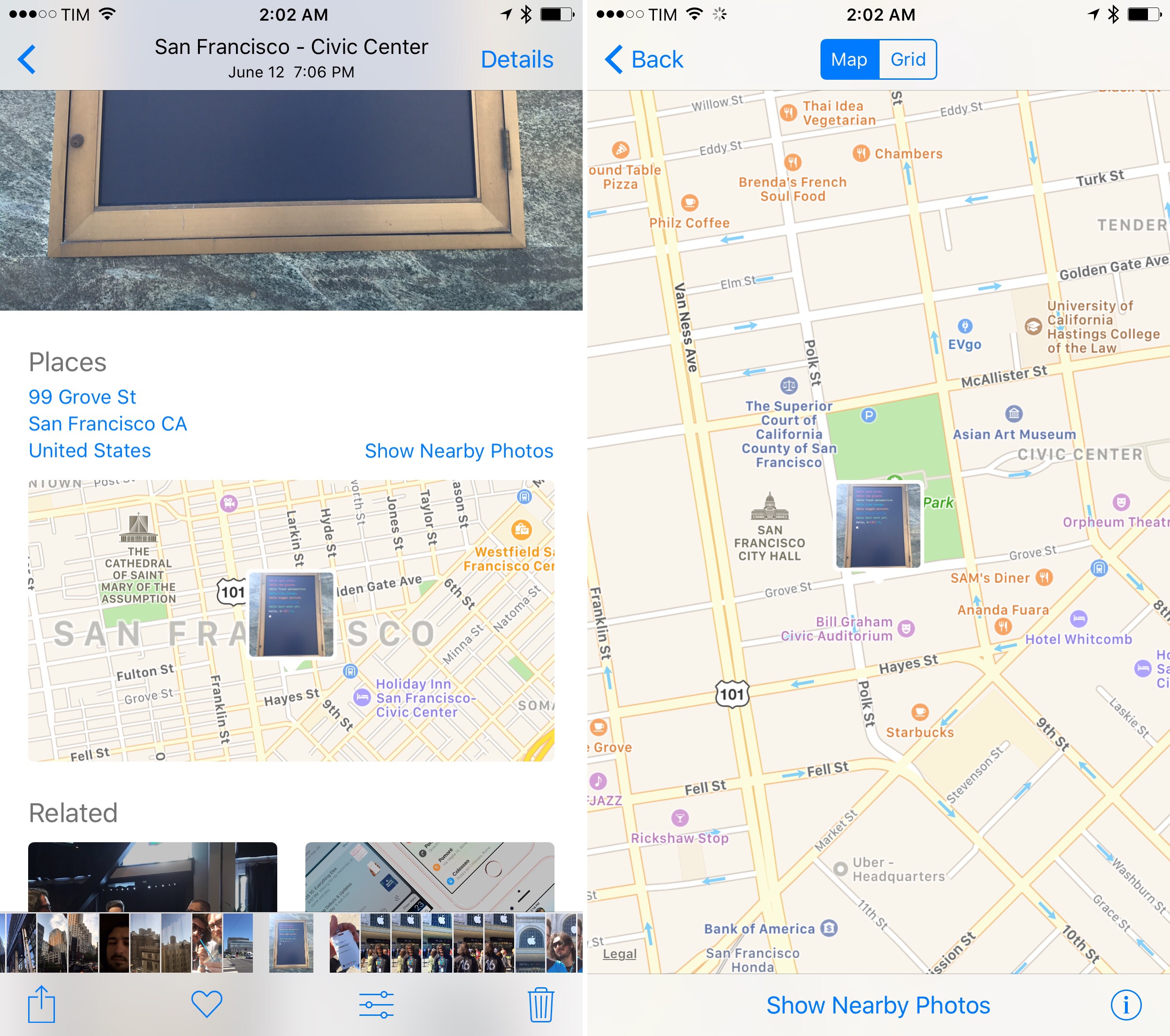

Another way to deal with faces is from a photo’s detail view. In iOS 10, you can swipe up on a photo (or tap ‘Details’ in the top right) to locate it on a map, show nearby photos, view related memories (again, mostly chosen randomly), and see which people Photos has recognized.

This is one of my favorite additions to Photos.[70] Coalescing location metadata and faces in the same screen is an effective way to remember a photo’s context.

No matter how you get to a person’s photos, there will always be a dedicated view collecting them all. If there are enough pictures, a Memories-like slideshow is available at the top. Below, you get a summary of photos in chronological order, a map of where photos were taken, more related memories, and additional people. When viewing people inside a person’s screen[71], iOS will display a sub-filter to view people and groups. Groups help you find photos of that person and yourself together.

Due to EU regulations on web photo services, I can’t use Google Photos’ face recognition in Italy, so I can’t compare the quality of Google’s feature with Photos in iOS 10. What I have noticed, though, is that local face recognition in Photos isn’t too dissimilar from the functionality that existed in iPhoto. Oftentimes, Photos gets confused by people with similar facial features such as beards; occasionally, Photos can’t understand that a photo of someone squinting belongs to a person who had already been recognized. But then other times, Photos’ face recognition is surprisingly accurate, correctly matching photos of the same person through the years with different hairstyles, beards, hair color, and more. It’s inconsistently good.

Despite some shortcomings, I’d rather have face recognition that needs to be trained every couple of weeks than not have it at all.

You can “teach” Photos about matched people in two ways: you can merge unnamed entries that match an existing person (just assign the same name to the second group of photos and you’ll be asked to merge them), or you can confirm a person’s additional photos manually. You can find the latter option at the bottom of a person’s photos.

The biggest downside of face support in iOS 10 is the lack of iCloud sync. Photos runs its face recognition locally on each device, populating the People album without syncing sets of people via iCloud Photo Library. The face-matching algorithm is the same between multiple devices, but you’ll have to recreate favorites and perform training on every device. I’ve ended up managing and browsing faces mostly on my iPhone to avoid the annoyance of inconsistent face sets between devices. I hope Apple adds face sync in a future update to iOS 10.

Confirming faces in Photos is a time-consuming, boring process that nonetheless yields a good return on investment. It’s not compulsory, but you’ll want to remember to train Photos every once in a while to help face recognition. In my first training sessions, suggestions were almost hilariously bad – to the point of suggesting that pictures of Myke Hurley and me showed the same person. After some good laughs and taps, Photos’ questions have become more pertinent, stabilizing suggestions for new photos as well.

Face recognition in iOS 10’s Photos is not a dramatic leap from previous implementations in Apple’s Mac clients, but it’s good enough, and it can be useful.

Places

Display of location metadata has never been Photos’ forte, which created a gap for third-party apps to fill. In iOS 10, Apple has brought MapKit-fueled Places views to, er, various places inside the app.

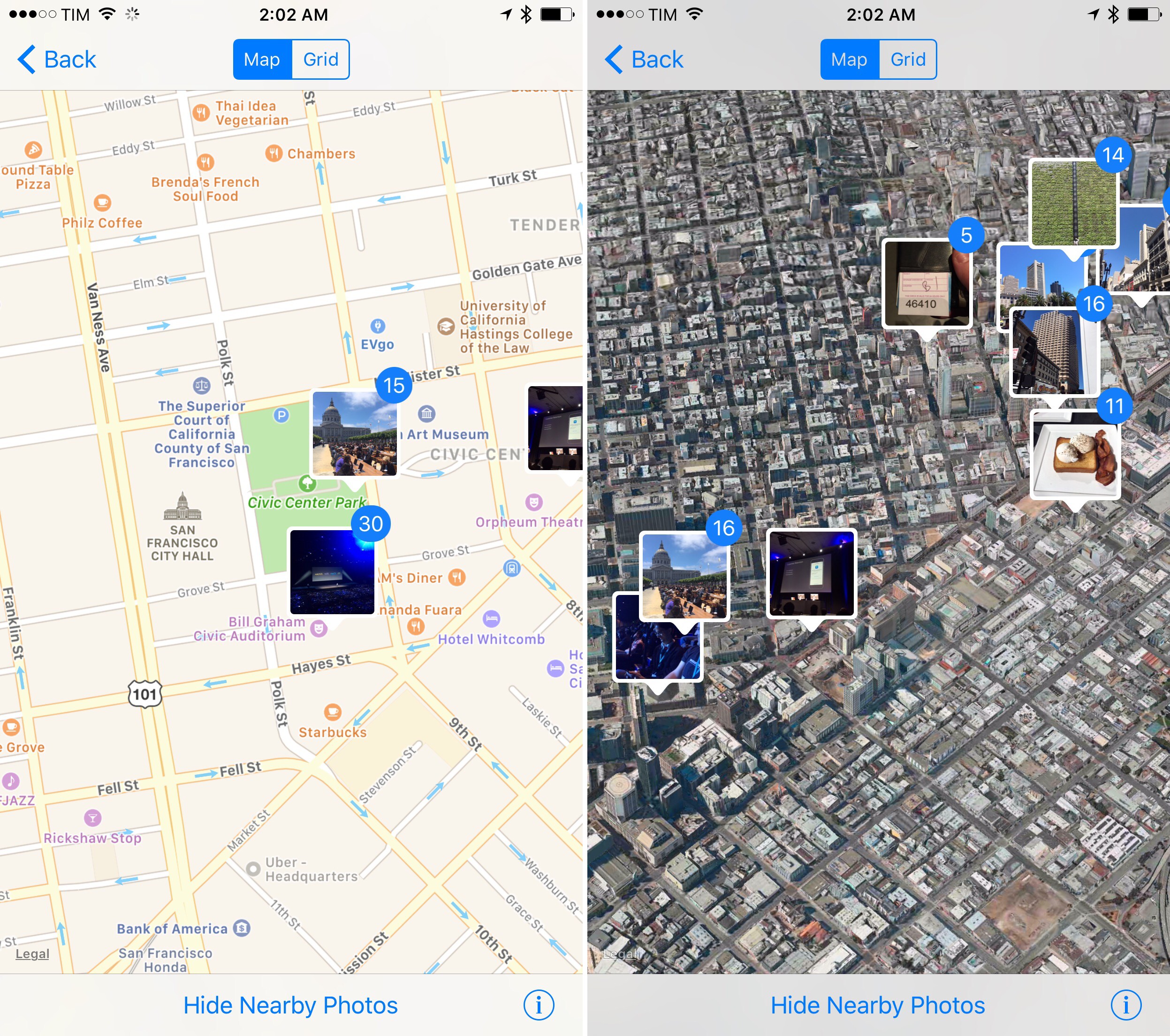

If Location Services were active when taking a picture, a photo’s detail view will have a map to show where it was taken. The map preview defaults to a single photo. You can tap it to open a bigger preview, with buttons to show photos taken nearby in addition to the current one.

When in full-screen, you can switch from the standard map style to hybrid or satellite (with and without 3D enabled). The combination of nearby photos and satellite map is great to visualize clusters of photos taken around the same location across multiple years. When you want to see the dates of all nearby photos, there’s a grid view that organizes them by moment.

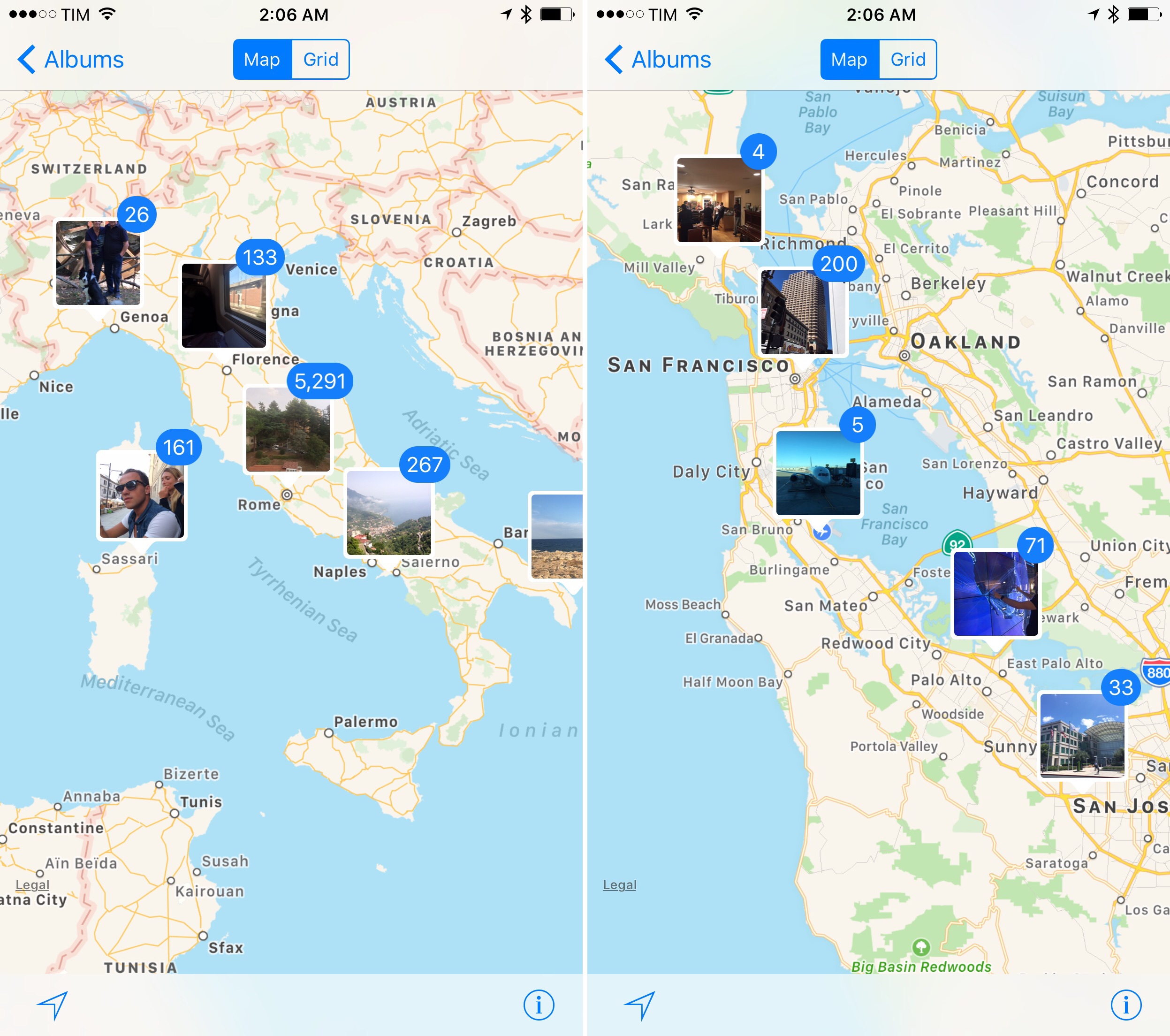

Nearby photos make 3D maps useful, too. I seldom use Flyover on iOS, but I like to zoom into a 3D map and view, for instance, photos taken around the most beautiful city in the world.

You can view all places at once from a special Places album. By default, this album loads a zoomed-out view of your country, but you can move around freely (like in Nearby) and pan to other countries and continents. It’s a nice way to visualize all your photos on a map, but it can also be used to explore old photos you’ve taken at your current location thanks to the GPS icon in the bottom left.

As someone who’s long wanted proper Maps previews inside Photos, I can’t complain. Nearby and Places are ubiquitous in Photos and they add value to the photographic memory of a picture. Apple waited until they got this feature right.

Search

Proactive suggestion of memories and faces only solves one half of Photos’ discovery. Sometimes, you have a vague recollection of the contents of a photo and want to search for it. Photos’ content search is where Apple’s artificial intelligence efforts will be measured up against Google’s admirable natural language search.

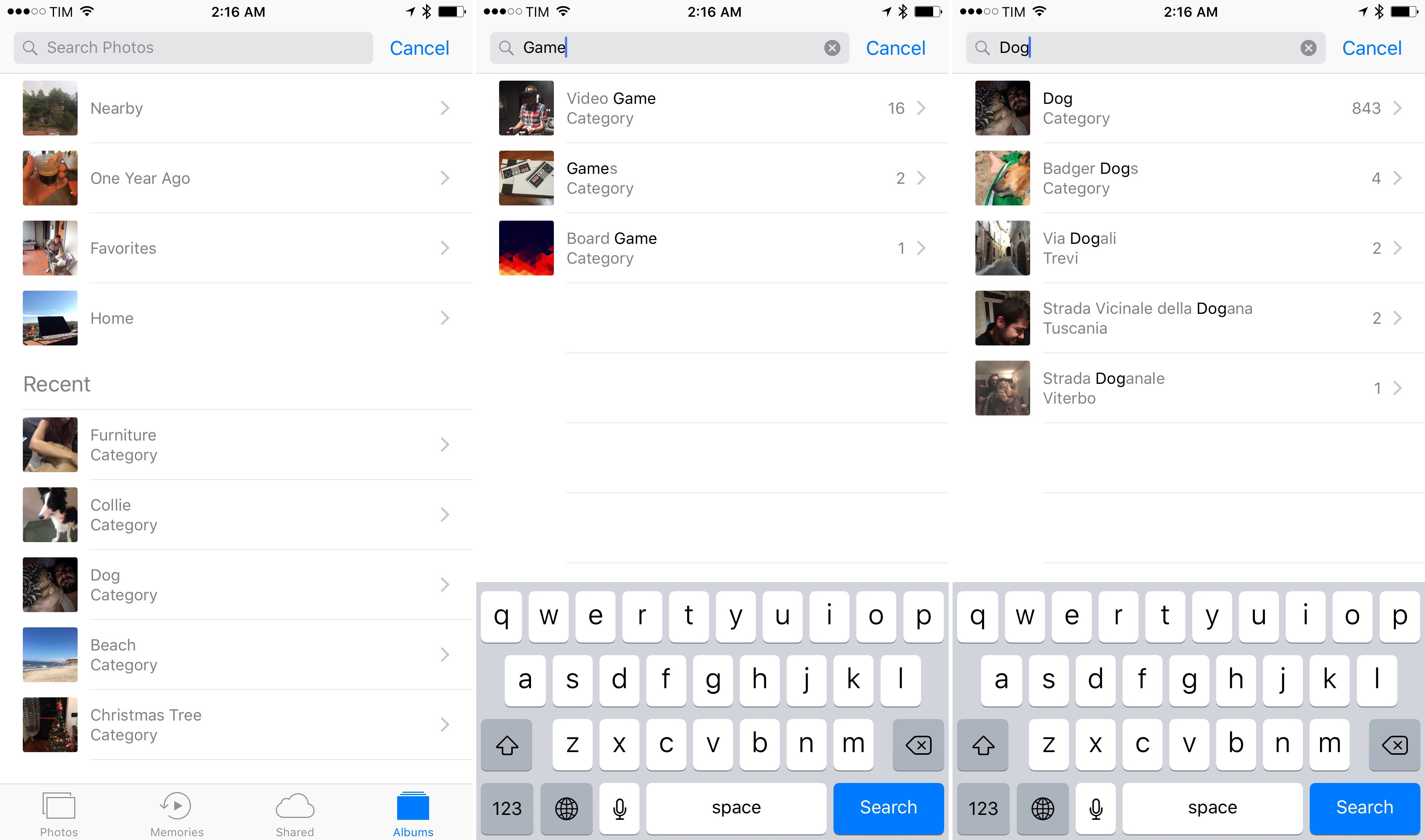

Photos in iOS 10 lets you search for things in photos. Apple is tackling photo search differently than Google, though. While Google Photos lets you type anything into the search field and see if it returns any results, iOS 10’s Photos search is based on categories. When typing a query, you have to tap on one of the built-in categories for scenes and objects supported by Apple. If there’s no category suggestion for what you’re typing, it means you can’t search for it.

The search functionality is imbued with categories added by Apple, plus memories, places, albums, dates, and people – some of which were already supported in iOS 9. Because of Apple’s on-device processing, an initial indexing will be performed after upgrading to iOS 10.[72]

The range of categories Photos is aware of varies. There are macro categories, such as “animal”, “food”, or “vehicle”, to search for families of objects; mid-range categories that include generic types like “dog”, “hat”, “fountain”, or “pizza”; and there are fewer, but more specific, categories like “beagle”, “teddy bear”, “dark glasses”, or, one of my favorites, the ever-useful “faucet”.

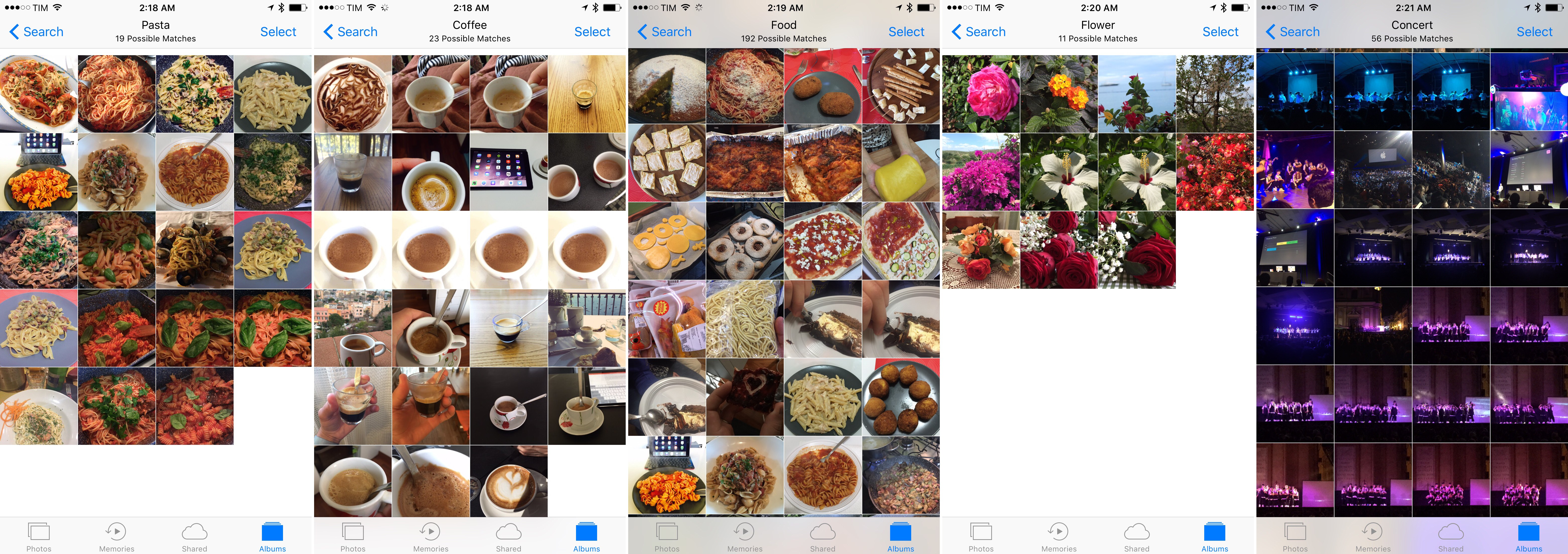

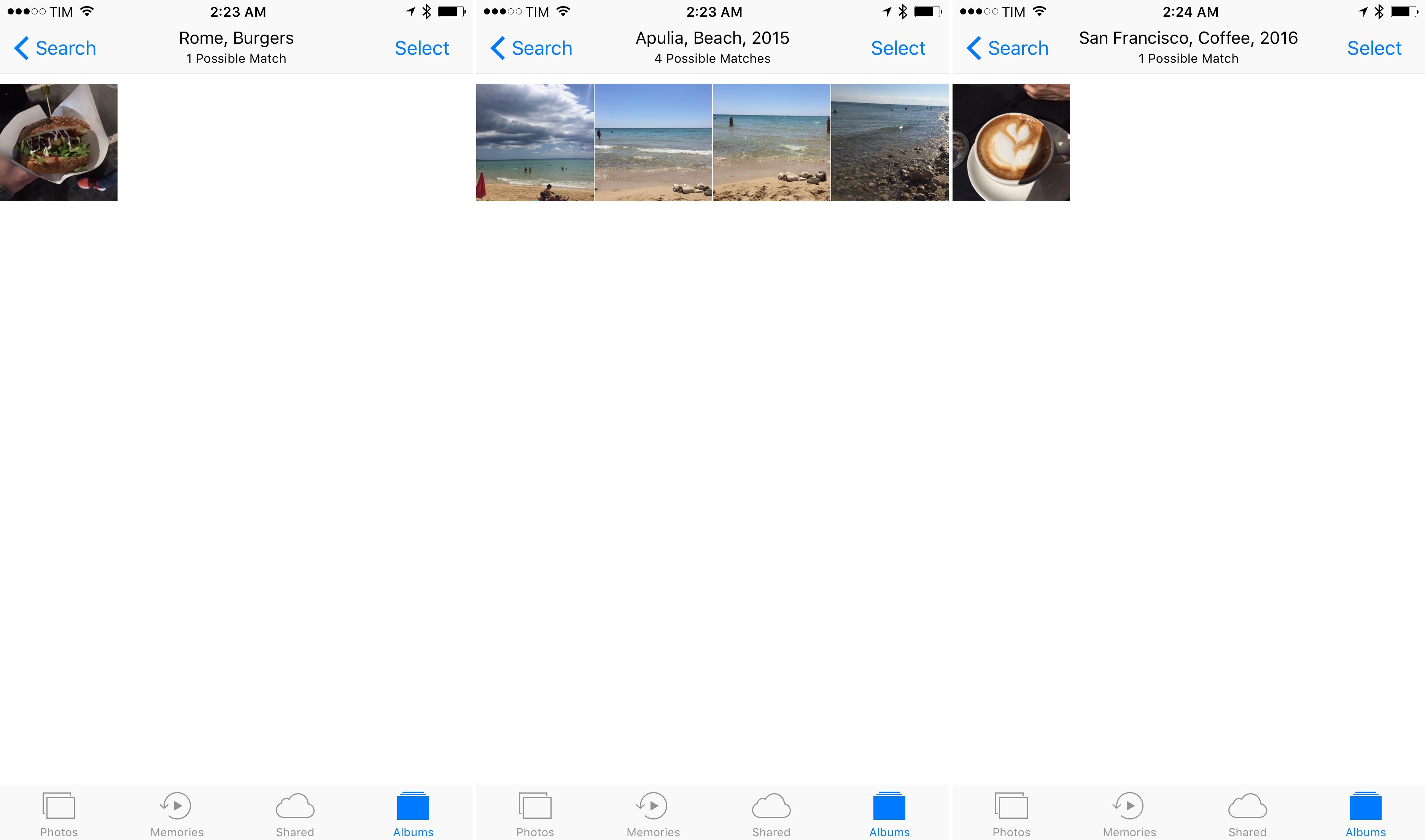

Apple’s goal was to provide users with a reasonable set of common words that represent what humans take pictures of. The technology gets all the more impressive when you start concatenating categories with each other or with other search filters. Two categories like “aircraft” and “sky” can be combined in the same search query and you’ll find the classic picture taken from inside a plane. You can also mix and match categories with places and dates: “Beach, Apulia, 2015” shows me photos of the beach taken during my vacation in Puglia last year; “Rome, food” lets me remember the many times I’ve been at restaurants here. I’ve been able to concatenate at least four search tokens in the same query; more may be possible.
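Concatenated queries like these behave as if every token narrows the result set further. The sketch below models that behavior with plain set logic; it is an assumption-laden illustration, not how Photos is implemented. The `PARENT` table, the `Photo` fields, and the sample library are invented for the example, and the idea that a specific category such as “beagle” also answers to “dog” and “animal” is my guess at how the macro, mid-range, and specific categories relate.

```python
from dataclasses import dataclass, field

# Hypothetical slice of a category hierarchy: specific -> broader.
PARENT = {"beagle": "dog", "dog": "animal", "pizza": "food", "pasta": "food"}


def expand(category):
    """Return the category plus all of its broader ancestors."""
    found = set()
    while category is not None:
        found.add(category)
        category = PARENT.get(category)
    return found


@dataclass
class Photo:
    name: str
    place: str
    year: int
    categories: set = field(default_factory=set)

    def tokens(self):
        """Everything this photo can be found by: categories, place, year."""
        tags = set()
        for category in self.categories:
            tags |= expand(category)
        return tags | {self.place.lower(), str(self.year)}


def search(library, query):
    """Return names of photos that match every comma-separated token."""
    wanted = {t.strip().lower() for t in query.split(",") if t.strip()}
    return [p.name for p in library if wanted <= p.tokens()]


library = [
    Photo("IMG_1", "Apulia", 2015, {"beach"}),
    Photo("IMG_2", "Rome", 2016, {"beagle"}),
    Photo("IMG_3", "Rome", 2014, {"pasta"}),
    Photo("IMG_4", "Rome", 2016, {"pizza"}),
]
print(search(library, "Beach, Apulia, 2015"))  # ['IMG_1']
print(search(library, "Rome, dog, 2016"))      # ['IMG_2']
print(search(library, "Rome, food"))           # ['IMG_3', 'IMG_4']
```

Because each token is an additional constraint, a token with no matching category, place, or date simply empties the result list, which mirrors the experience of not being able to search for something Photos has no category for.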

All this may not be shocking for tech-inclined folks who have used Google Photos. But there are millions of iOS users who haven’t signed up for Google’s service and have never tried AI-powered photo search before. To have a similar feature in a built-in app, developed in a privacy-conscious way, with a large set of categories to choose from – that’s a terrific change for every iOS user.

Apple isn’t storing photos’ content metadata in the cloud to analyze them at scale – your photos are private and indexing/processing are performed on-device, like Memories (even if you have iCloud Photo Library with Optimize Storage turned on). It’s an over-simplification, but, for the sake of the argument, this means that iOS 10 ships with a “master algorithm” that contains knowledge of its own and indexes photos locally without sending any content-related information to the cloud. Essentially, Apple had to create its computer vision from scratch and teach it what a “beach” looks like.

In everyday usage, Photos’ scene search is remarkable when it works – and a little disappointing when it doesn’t.

When a query matches a category and results are accurate, content-aware search is amazing. You can type “beach” and Photos will show you pictures of beaches because it knows what a beach is. You can search for pictures of pasta and suddenly feel hungry. Want to remember how cute your dog was as a puppy? There’s a category for that.

I’ve tested search in Photos for the past three months, and I’ve often been able to find the photo I was looking for thanks to query concatenation and mid-range descriptions, such as “pasta, 2014” or “Rome, dog, 2016”. Most of the time, what Apple has achieved is genuinely impressive.

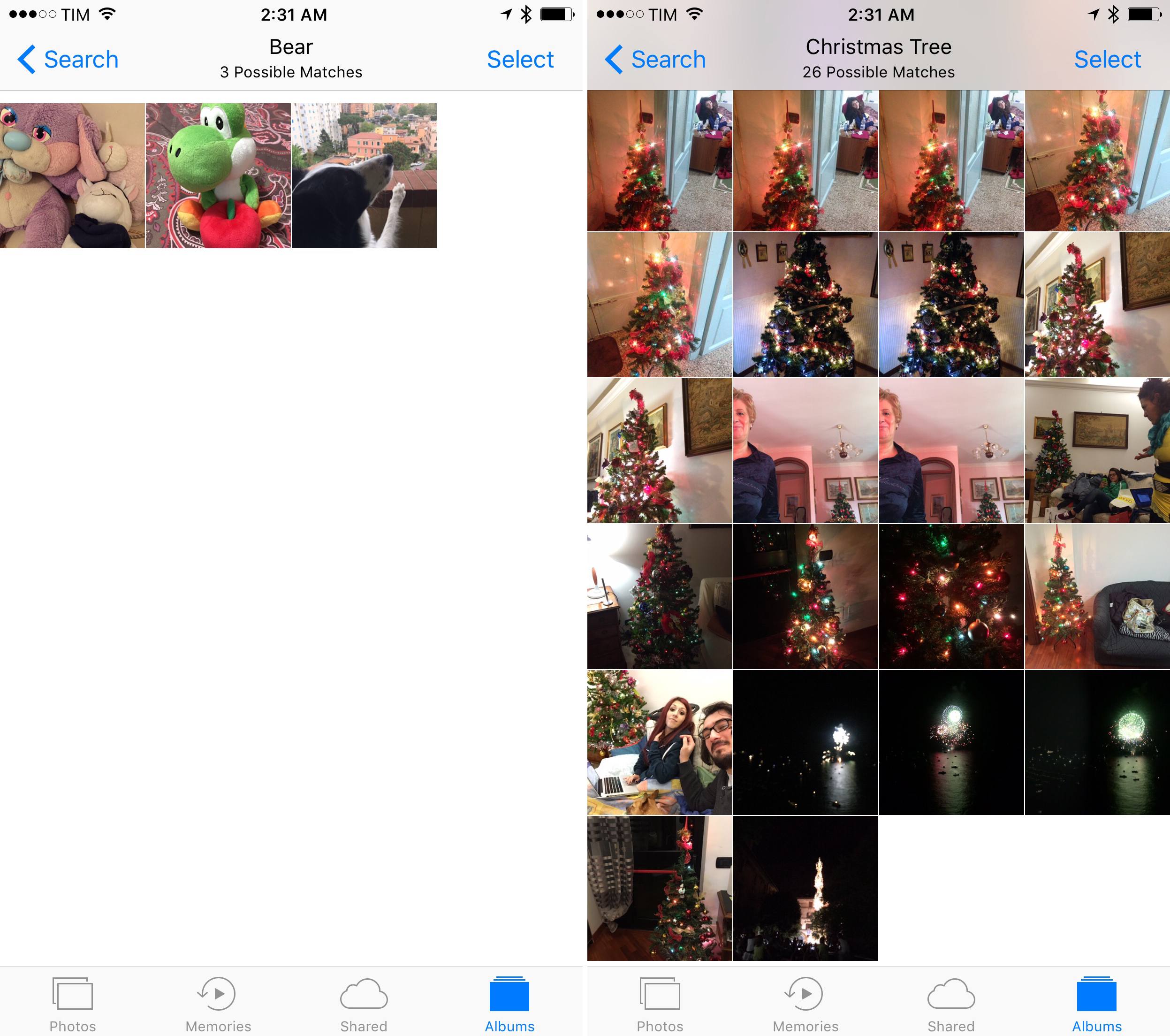

On a few occasions, Photos’ categories didn’t contain results I was expecting to be in there, or they matched a photo that belonged to a different category (such as my parents’ border collie, recognized as a “bear”, or fireworks tagged as “Christmas tree”).

Understandably, Apple’s first take on scene search with computer vision isn’t perfect. These issues could be remedied if there were a way to fix false positives and train recognition on unmatched photos, but no such option is provided in iOS 10. The decision to omit manual intervention prevents users from helping Photos’ recognition, and it makes me wonder how long we’ll have to wait for improvements to the algorithm.

Compared to Google Photos’ search, Apple’s version in iOS 10 is already robust. It’s a good first step, especially considering that Apple is new to this field and they’re not compromising on user privacy.

An Intelligent Future

What’s most surprising about the new Photos is how, with one iOS update, Apple has gone from zero intelligence built into the app to a useful, capable alternative to Google Photos – all while taking a deeply different approach to image analysis.

Admittedly, iOS 10’s Photos is inspired by what Google has been doing with Google Photos since its launch in May 2015. 200 million monthly active users can’t be wrong: Google Photos has singlehandedly changed consumer photo management thanks to automated discovery tools and scene search. Any platform owner would pay attention to a third party asking users to delete photos from their devices to archive them in a different cloud.

Apple has a chance to replicate the success of Google Photos at a much larger scale, directly in an app that many more millions of users open every day. It isn’t just a matter of taking a page from Google for the sake of feature parity: photos are, arguably, the most precious data for iPhone users. Bringing easier discovery of memories, new search tools, and emotion into photo management yields loyalty and, ultimately, lock-in.

This isn’t a fight Apple is willing to give up. In their first round, Apple has shown that they can inject intelligence into Photos without sacrificing our privacy. Let’s see where they go from here.

[66] From what I've been able to try, any Apple Music track downloaded for offline listening should be supported in Memories.

[67] You can turn this off in the grid view to show every item assigned to the memory by toggling Show All/Summary.

[68] I do wish Apple's Photos could proactively generate animations and collages like Google does, but it's nothing that can't be added in the future.

[69] You can also 3D Touch a face and swipe on the peek to favorite/unfavorite or hide it from the People album.

[70] If you'd rather not use the Photos app to browse faces, you can ask Siri to "show me photos of [person]", which will open search results in Photos. These are the same results you'd get by typing in Photos' search field and choosing a person-type result.

[71] We need to go deeper.

[72] According to Apple, Memories, Related, People, and Scene search are not supported on 32-bit devices – older iPhones and iPads that don't meet the hardware requirements for image indexing.