Everything Else

With the breadth and depth of iOS, it’s impossible to list every single change or new feature. Whether it’s a setting, a briefly documented API, or a subtle visual update, there are plenty of details and tidbits in iOS 10.

Differential Privacy

Differential privacy is a branch of cryptography and mathematics, so I want to leave a proper discussion of Apple’s application of it to folks who are better equipped to talk about it (Apple is supposed to publish a paper on the subject in the near future). See this great explanation by Matthew Green and ‘The Algorithmic Foundations of Differential Privacy’ (PDF link) by Cynthia Dwork and Aaron Roth.

Here’s my attempt to offer a layman’s interpretation of differential privacy: it’s a way to collect user data at scale without personally identifying any individual. Differential privacy, used in conjunction with machine learning, can help software spot patterns and trends while also ensuring privacy with a system that goes beyond anonymization of users. The collected data can’t be mathematically reversed to single out a user. iOS 10 uses differential privacy in specific ways; ideally, the goal is to apply this technique to more data-based features to make iOS smarter.

From Apple’s explanation of differential privacy:

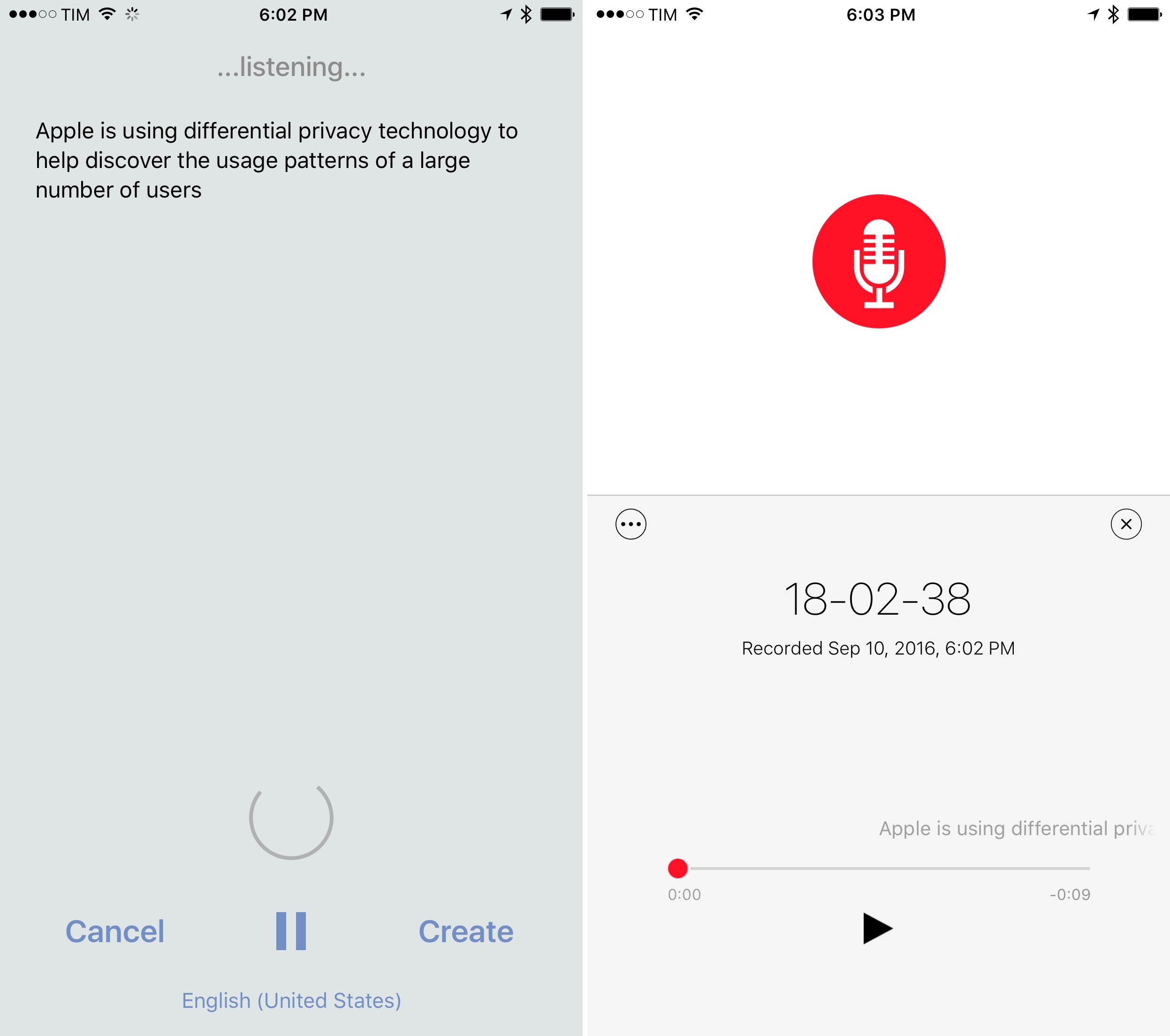

Starting with iOS 10, Apple is using Differential Privacy technology to help discover the usage patterns of a large number of users without compromising individual privacy. To obscure an individual’s identity, Differential Privacy adds mathematical noise to a small sample of the individual’s usage pattern. As more people share the same pattern, general patterns begin to emerge, which can inform and enhance the user experience. In iOS 10, this technology will help improve QuickType and emoji suggestions, Spotlight deep link suggestions and Lookup Hints in Notes.
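To make the idea of "mathematical noise" a little more concrete, here is a minimal sketch of randomized response, a classic textbook technique from the differential privacy literature. It is not Apple's implementation (which hasn't been published); the function names, the 50% truth probability, and the simulated population are all assumptions made for illustration. Each simulated user reports a noisy yes/no answer, so no single report can be trusted, yet the overall rate can still be estimated from many reports.

```python
import random


def randomize(truth: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the real answer with probability p_truth, else a fair coin flip."""
    if rng.random() < p_truth:
        return truth
    return rng.random() < 0.5


def estimate_rate(reports: list, p_truth: float) -> float:
    """Undo the noise in aggregate.

    Expected observed rate = p_truth * true_rate + (1 - p_truth) * 0.5,
    so we solve that equation for true_rate.
    """
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) / 2) / p_truth


def simulate(n_users: int, true_rate: float, p_truth: float, seed: int) -> float:
    """Hypothetical population: each user has a private yes/no usage pattern."""
    rng = random.Random(seed)
    truths = [rng.random() < true_rate for _ in range(n_users)]
    reports = [randomize(t, p_truth, rng) for t in truths]
    return estimate_rate(reports, p_truth)


if __name__ == "__main__":
    estimate = simulate(n_users=100_000, true_rate=0.30, p_truth=0.5, seed=42)
    print(f"Estimated share of users with the pattern: {estimate:.3f}")
```

With enough users, the estimate lands close to the real share even though any individual report may be a coin flip, which is the general trade-off the quote above describes.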

If Apple’s approach works, iOS will be able to offer more intelligent suggestions at scale without storing identifiable information for individual users. Differential privacy has the potential to give Apple a unique edge on services and data collection. Let’s wait and see how it’ll play out.

Speech recognition

iOS has offered transcription of spoken commands with a dictation button in the keyboard since the iPhone 4S and iOS 5. According to Apple, a third of all dictation requests come from apps, with over 65,000 apps using dictation services per day for the 50 languages and dialects iOS supports.

iOS 10 introduces a new API for continuous speech recognition that enables developers to build apps that can recognize human speech and transcribe it to text. The speech recognition API has been designed for those times when apps don’t want to present a keyboard to start dictation, giving developers more control.

Speech recognition uses the same underlying technology as Siri and dictation. Unlike dictation in the keyboard, though, speech recognition also works for recorded audio files stored locally in addition to live audio. After feeding audio to the API, developers are given rich transcriptions that include alternative interpretations, confidence levels, and timing details. None of this is exposed to the microphone button in the keyboard, and it can be implemented natively in an app’s UI.

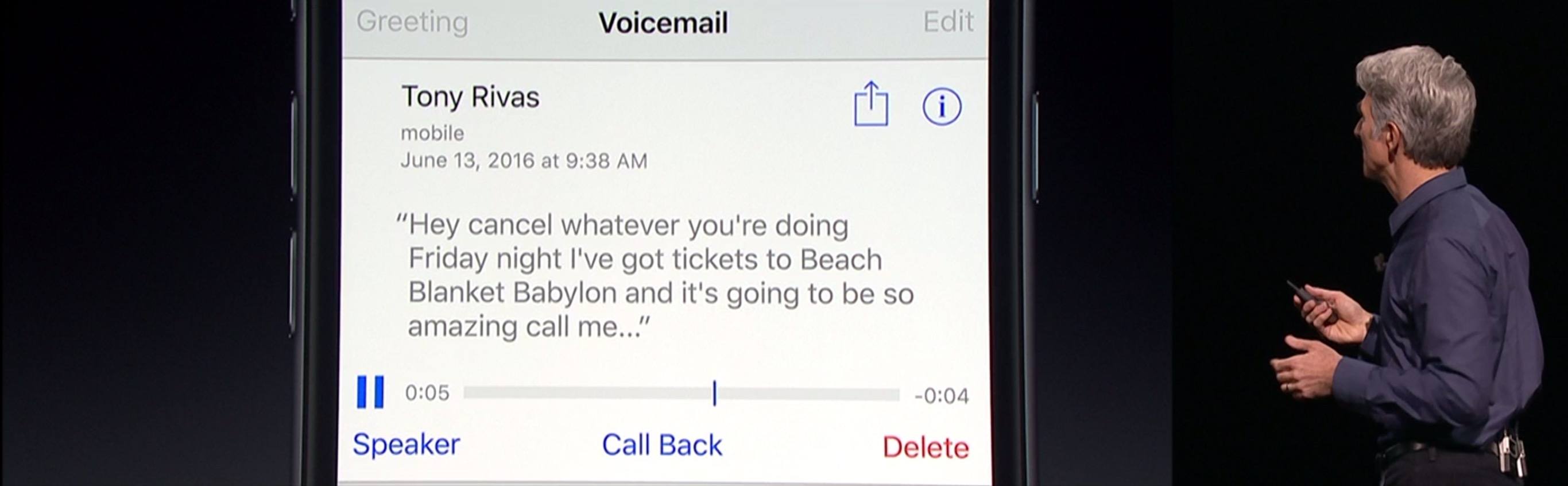

There are some limitations to keep in mind. Speech recognition is free, but not unlimited. There’s a limit of 1 minute for audio recordings (roughly the same as dictation) with per-device and per-day recognition limits that may result in throttling. Also, speech recognition usually requires an Internet connection, although on newer devices (including the iPhone 6s) it is supported offline, too. User permission will always be required to enable speech recognition and allow apps to transcribe audio. Apple itself is likely using the API in its new voicemail transcription feature available in the Phone app.

I was able to test speech recognition with new versions of Drafts and Just Press Record for iOS 10. In Drafts, my iPhone 6s supported offline speech recognition and transcription was nearly instantaneous – words appeared on screen a fraction of a second after I spoke them. Greg Pierce has built a custom UI for audio transcription inside the app; other developers will be able to design their own and implement the API as they see fit. In Just Press Record, transcripts aren’t displayed in real-time as you speak – they’re generated after an audio file has been saved, and they are embedded in the audio player UI.

I’m looking forward to podcast clients that will let me share an automatically generated quote from an episode I’m listening to.

Do Not Disturb gets smarter

Do Not Disturb has a setting to always allow phone calls from everyone while every other notification is being muted.

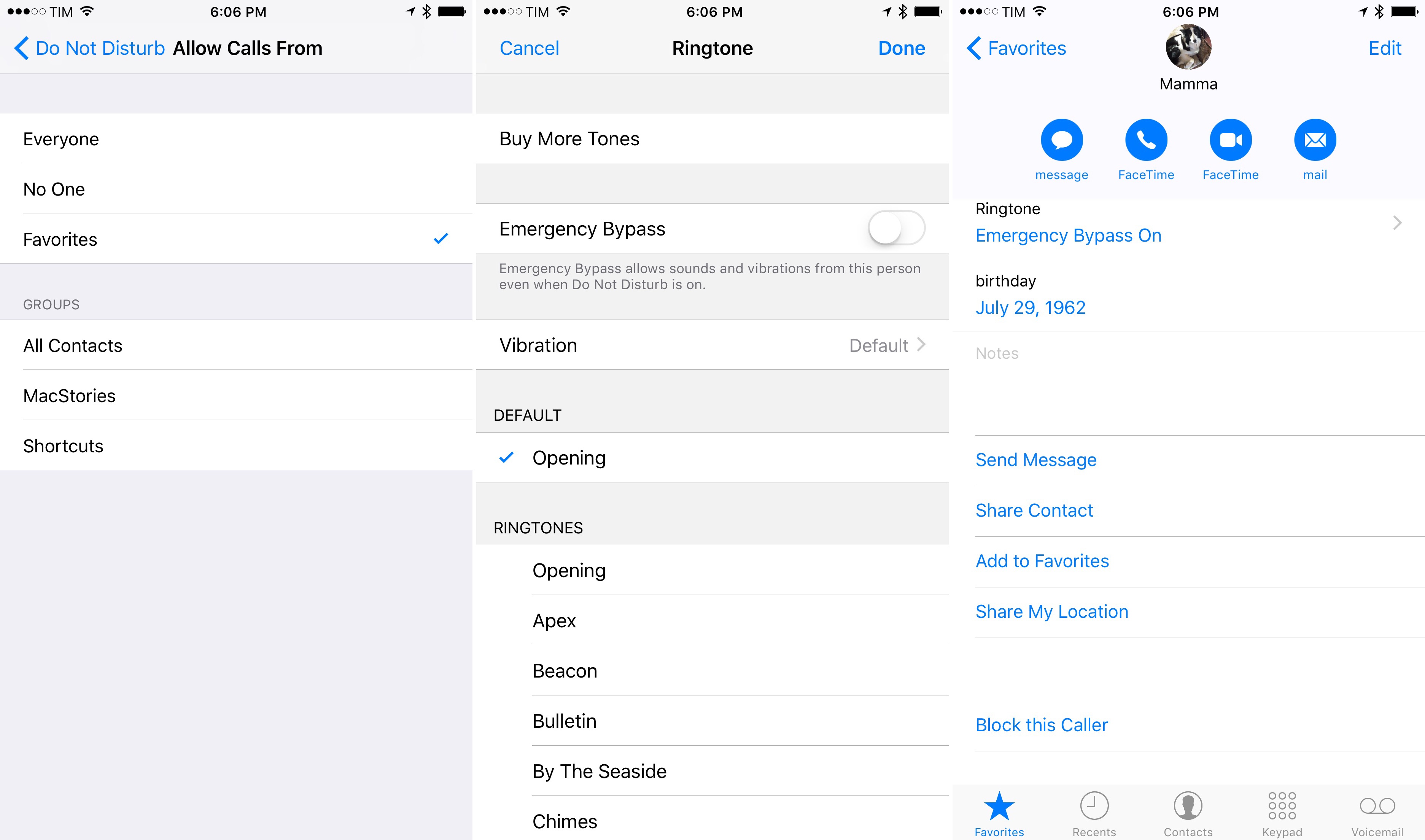

An Emergency Bypass toggle has been added to a contact’s editing screen for Ringtone and Text Tone. When enabled, it’ll allow sounds and vibrations from that person even when Do Not Disturb is on. If you enable Emergency Bypass, it’ll be listed as a blue button in the contact card to quickly edit it again.

Tap and hold links for share sheet

Apple is taking a page from Airmail (as I hoped) to let you tap & hold a link and share it with extensions – a much-needed time saver.

Parked car

I couldn’t test this because I don’t have a car with a Bluetooth system (yet), but iOS 10 adds a proactive Maps feature that saves the location of your car as soon as it’s parked. iOS sends you a notification after you disconnect from your car’s Bluetooth, dropping a special pin in Maps to remind you where you parked. The feature also works with CarPlay systems.

Spotlight search continuation

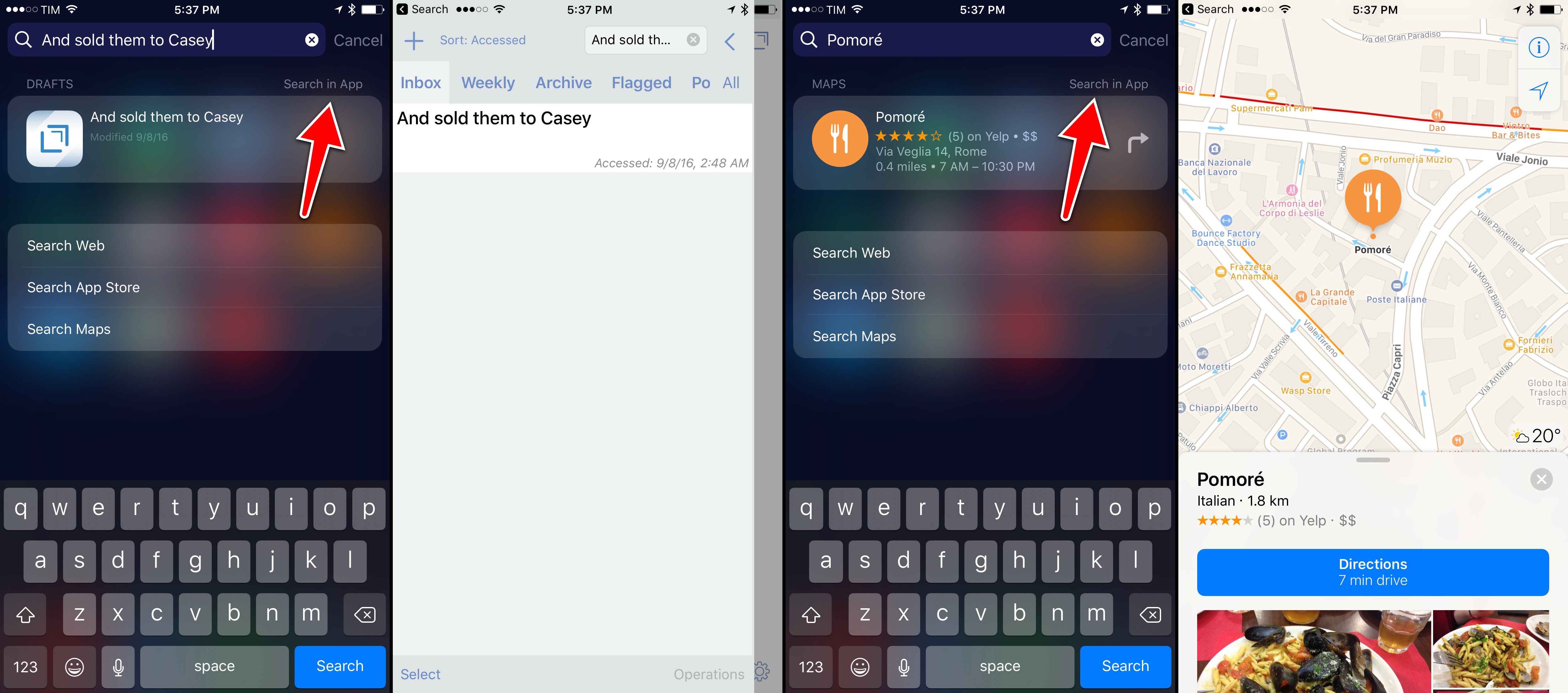

Searches for app content that began in Spotlight can now continue inside an app with the tap of a button.

Drafts uses the new Spotlight search continuation API to let users continue looking for content on the app’s own search page. Maps has also implemented search continuation to load places in the app.

Better clipboard detection

iOS 10 brings a more efficient way for apps to query the system pasteboard. Instead of reading clipboard data, developers can now check whether specific data types are stored in the pasteboard without actually reading them.

For example, a text editor can ask iOS 10 if the clipboard contains text before offering to import a text clipping; if it doesn’t, the app can stop the task without reading the pasteboard at all. This API should help make clipboard data detection more accurate for a lot of apps, and it’s more respectful of a user’s privacy.

Print to PDF anywhere

A hidden feature of iOS 9 was the ability to 3D Touch on the print preview screen to pop into the PDF version of a document and export it. iOS 10 makes this available to every device (with and without 3D Touch) by pinching on the print preview to open Quick Look.

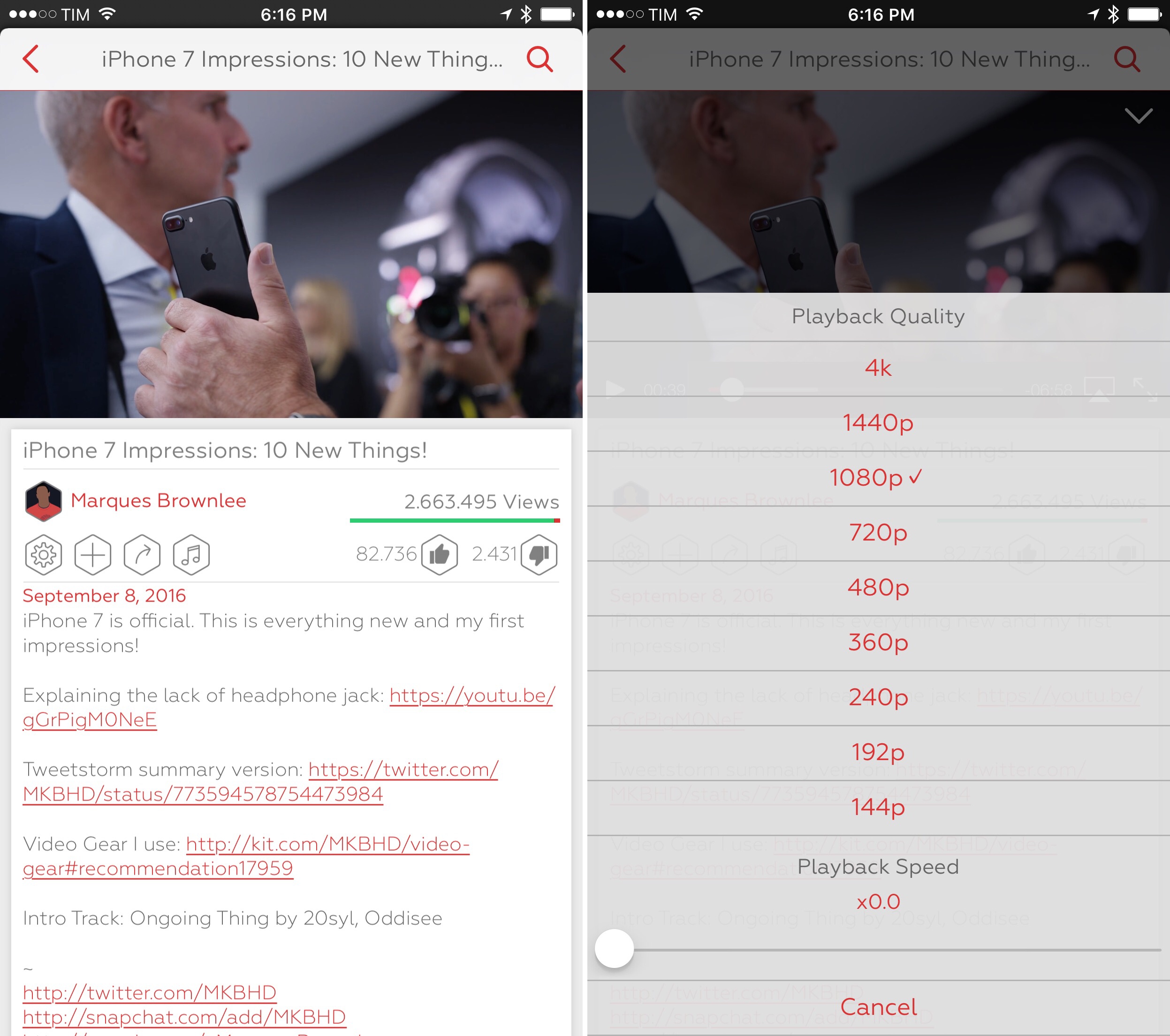

Videos cellular playback quality settings

If you use Apple’s Videos app to stream movies and TV shows, you can now choose from Good and Best Available settings. I wish this also affected playback quality of YouTube embeds in Safari.

HLS and fragmented MP4 files

Apple’s HTTP Live Streaming framework (HLS) has added support for fragmented MP4 files. In practical terms, this means more flexibility for developers of video player apps that want to stream movie files encoded in MPEG-4.
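As a rough illustration of what this looks like on the streaming side, the sketch below builds a small HLS media playlist that points at fragmented MP4 segments. The `EXT-X-MAP` tag tells the player where to find the initialization section shared by the fragments. The helper name and the file names (`init.mp4`, `segment0.m4s`, and so on) are hypothetical, and real playlists are normally produced by a packaging tool rather than written by hand.

```python
import math


def build_fmp4_playlist(init_uri: str, segment_uris: list, segment_duration: float) -> str:
    """Return the text of a simple on-demand HLS playlist for fMP4 segments."""
    lines = [
        "#EXTM3U",
        # EXT-X-MAP in a regular media playlist needs protocol version 6 or later.
        "#EXT-X-VERSION:7",
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_duration)}",
        "#EXT-X-PLAYLIST-TYPE:VOD",
        # Initialization section (MP4 header boxes) shared by every fragment.
        f'#EXT-X-MAP:URI="{init_uri}"',
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_duration:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical file names, for illustration only.
    print(build_fmp4_playlist("init.mp4", ["segment0.m4s", "segment1.m4s"], 6.0))
```

The playlist keeps the familiar HLS structure; only the segment format and the extra initialization entry differ from a playlist built around MPEG-2 transport stream segments.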

I tested a version of ProTube – the most powerful third-party YouTube client – with HLS optimizations for iOS 10. The upcoming update to ProTube will introduce streaming of videos up to 4K resolution (including 1440p) and 60fps playback thanks to changes in the HLS API.

If your favorite video apps use HLS and deal with MP4 files, expect to see some nice changes in iOS 10.

Touch ID for Apple ID settings

Settings > iTunes & App Store > View Apple ID no longer requires you to type a password. You can view and manage your account with Touch ID authentication. This one deserves a finally.

No more App Store password prompts after rebooting

In a similar vein, the App Store will no longer ask you for a password to download a new app after rebooting your device. You can just use Touch ID instead.

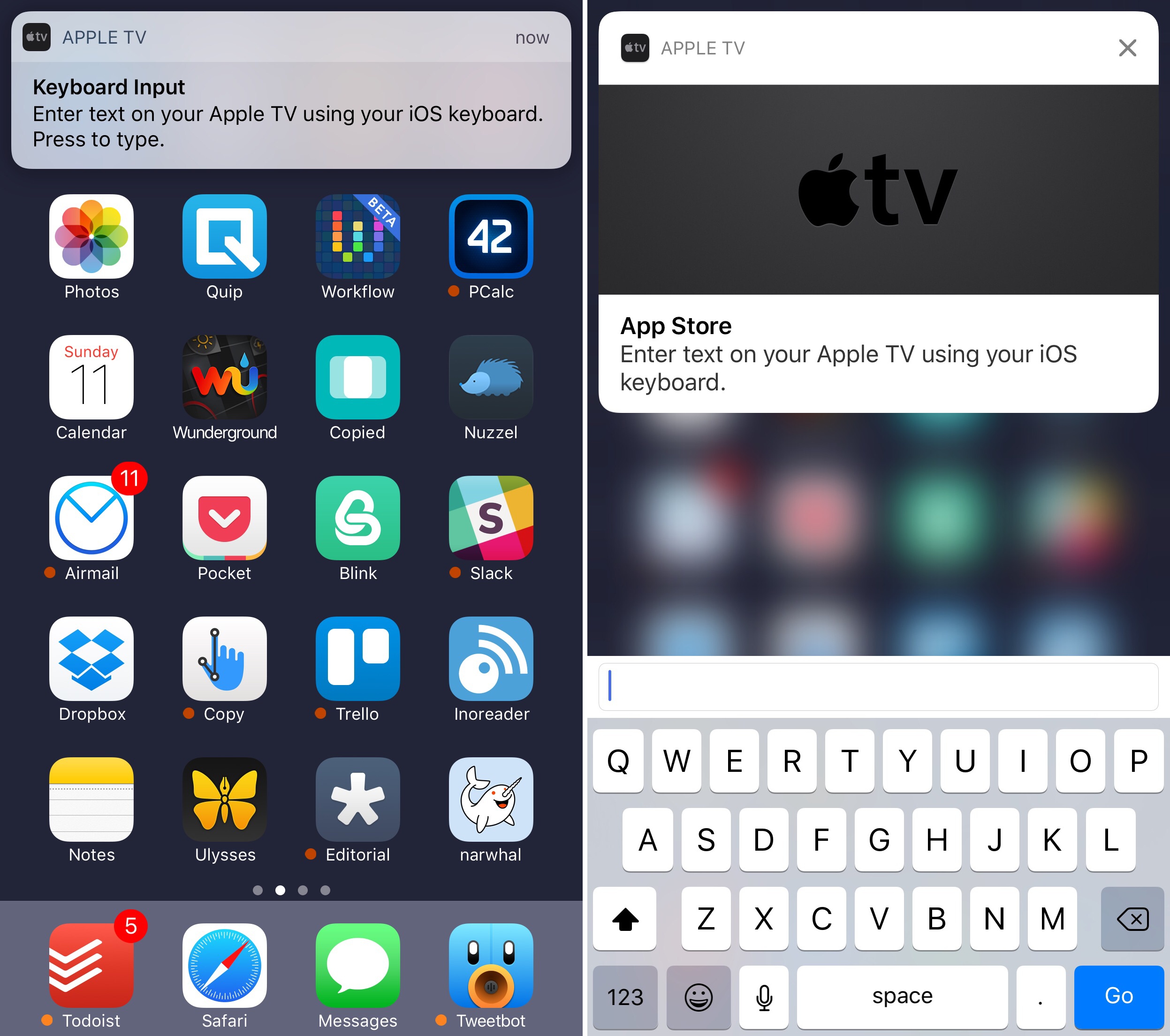

Continuity Keyboard for Apple TV

If your iPhone is paired with an Apple TV, you’ll get a notification whenever the Apple TV brings up a text field (such as search on the tvOS App Store).

You can press (or swipe down) the notification on iOS to start typing in the quick reply box and send text directly to tvOS. A clever and effective way to reduce tvOS keyboard-induced stress.

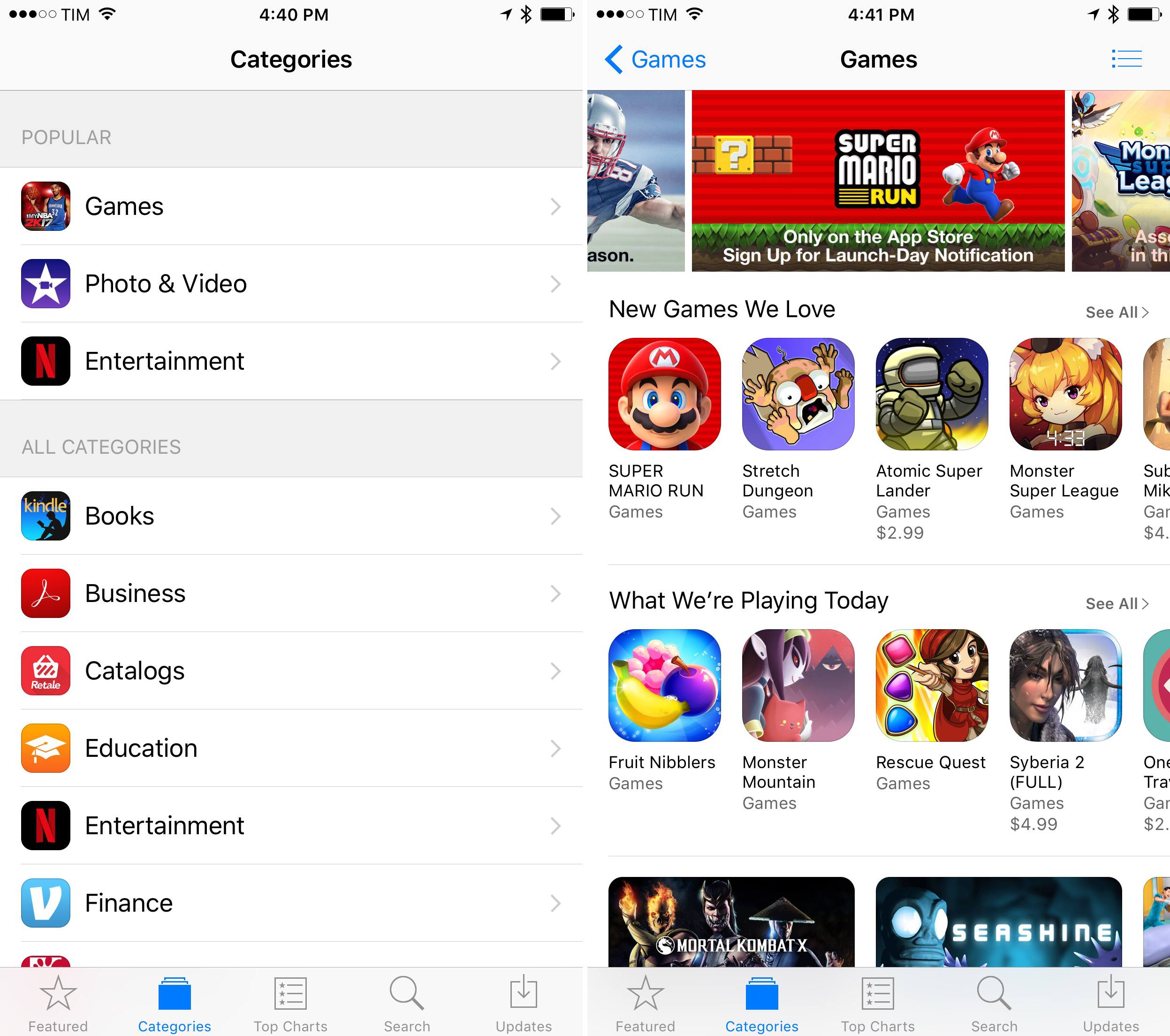

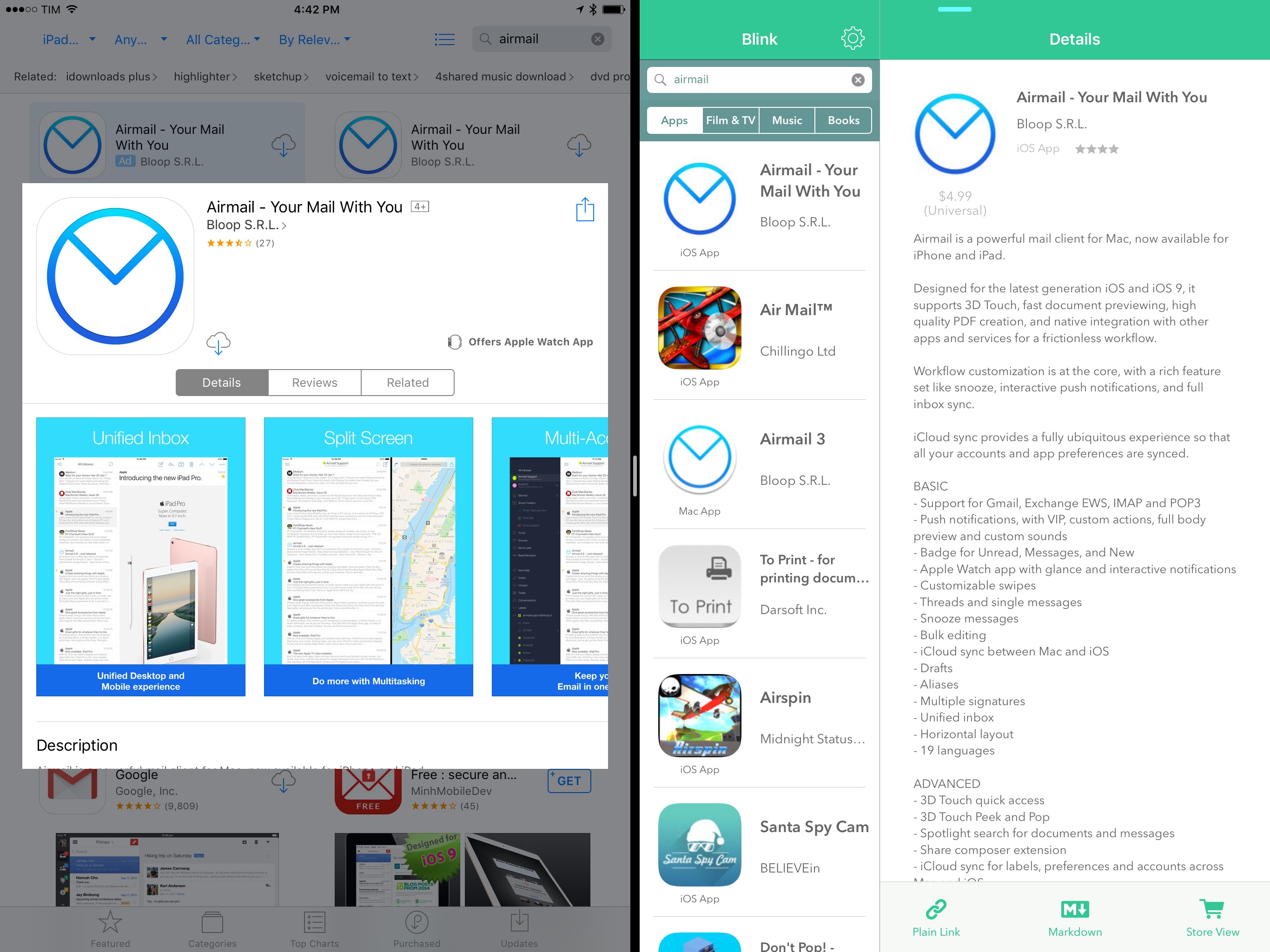

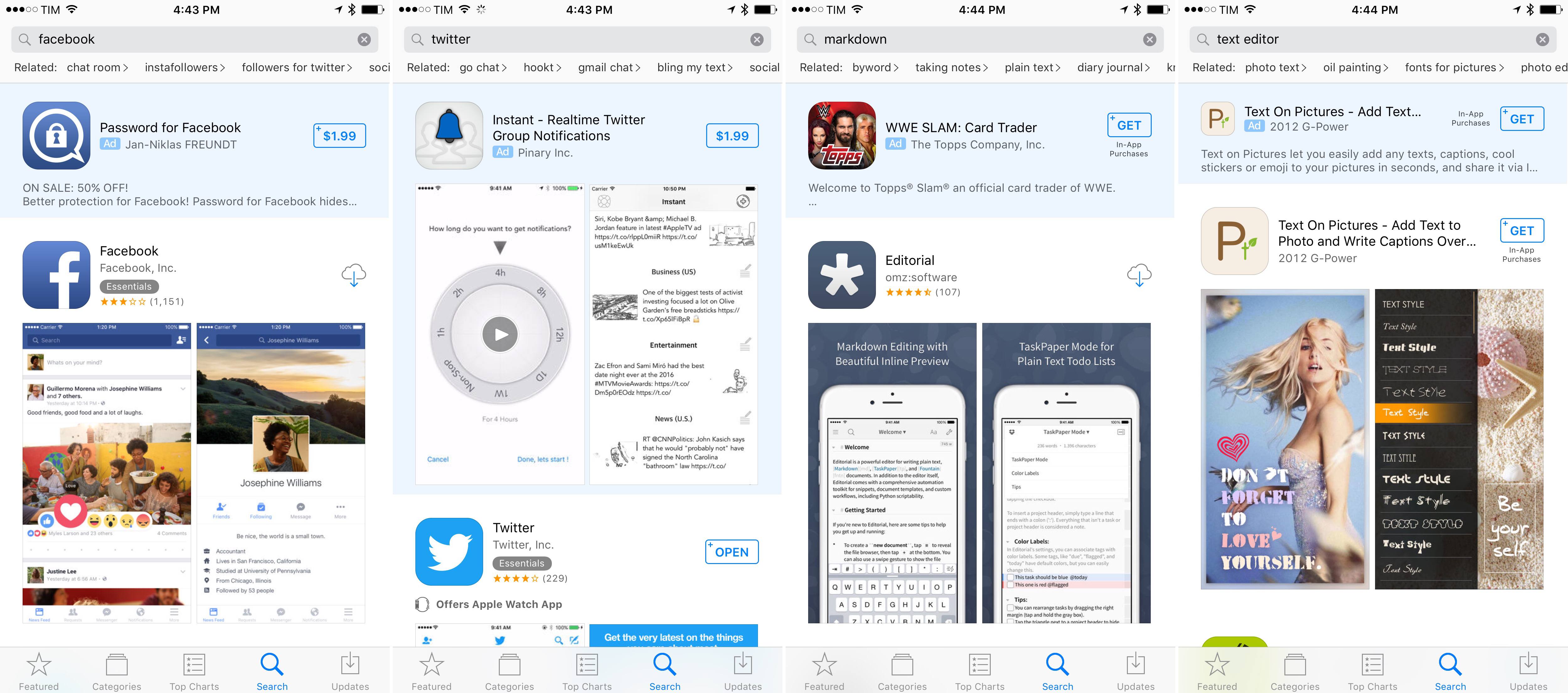

App Store categories, iPad, and search ads

The App Store’s Explore section, launched with iOS 8 and mostly untouched since, has been discontinued in iOS 10. Categories are back in the tab bar, with the most popular ones (you can count on Games always being there) available as shortcuts at the top.

Apple had to sacrifice the Nearby view, which surfaced apps popular around you, but categories (with curated sections for each one of them) seem like the most popular choice after years of experiments.

On the iPad, the App Store now supports Split View so you can browse and search apps while working in another app.

This has saved me a few minutes every week when preparing the App Debuts section for MacStories Weekly.

Apple is also launching paid search ads on the App Store. Developers will be able to bid for certain keywords and buy paid placements in search results. Ads are highlighted with a subtle blue background and an ‘Ad’ label, and they’re listed before the first actual search result – like on Google search.

It’s too early to tell how beneficial App Store ads will be for smaller studios and indie developers that can’t afford to be big spenders in search ad bids. Apple argues that the system is aimed at helping app discovery for large companies and small development shops alike, but I have some reservations.

As a user, I would have liked to see Apple focus on more practical improvements to App Store search, but maybe the company is right and all kinds of developers will benefit from search ads. We’ll follow up on this.

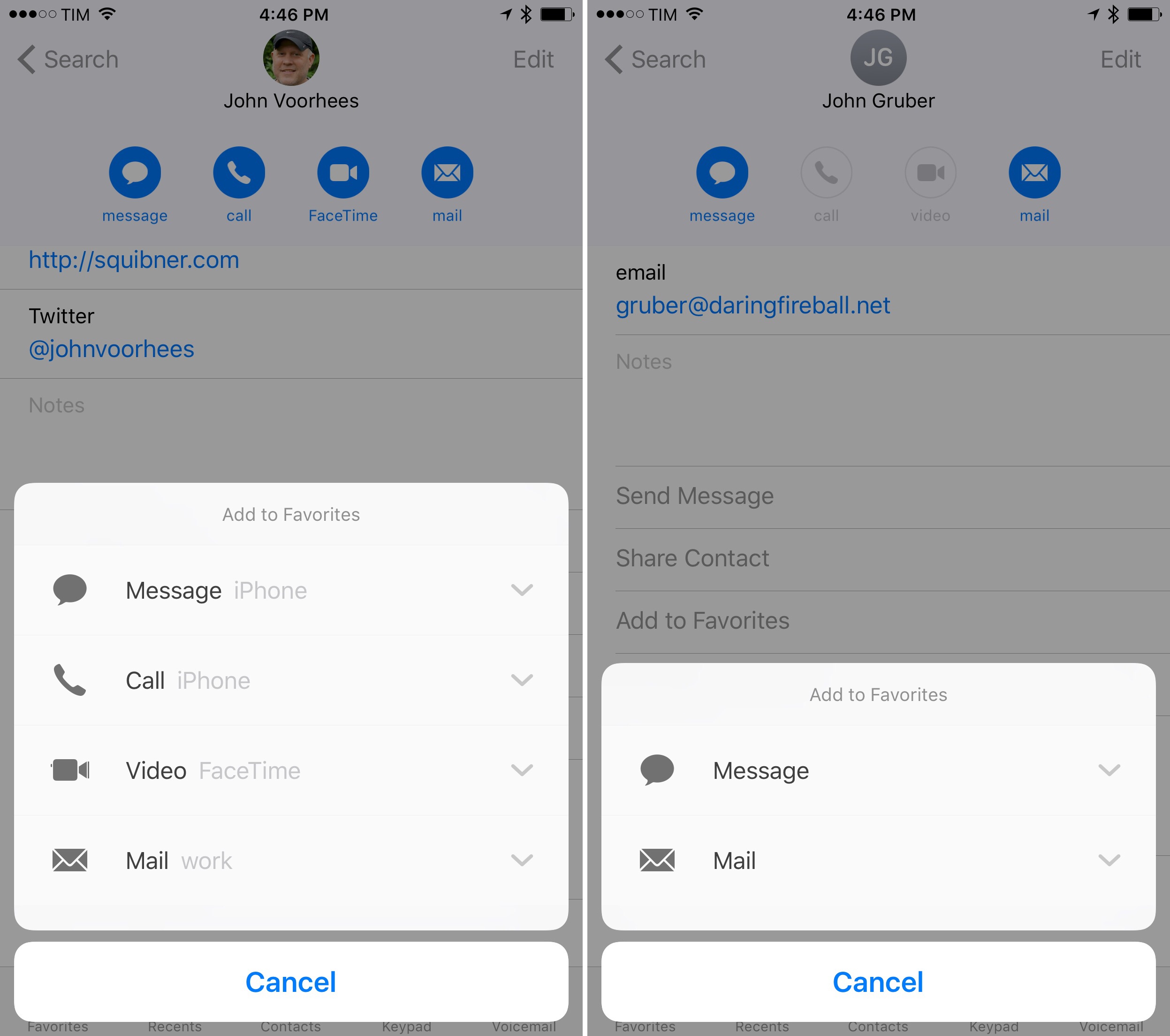

New ‘Add to Favorites’ UI

Similar to the 3D Touch menu for a contact card, the view for adding a contact to your favorites has been redesigned with icons and expandable menus.

More responsive collection views

Expect to see nice performance improvements in apps that use UICollectionView. iOS 10 introduces a new cell lifecycle that pre-fetches cells before displaying them to the user, holding onto them a little longer (pre-fetching is opt-out and automatically disabled when the user scrolls very fast). In daily usage, you should notice that some apps feel more responsive and don’t drop frames while scrolling anymore.

Security recommendations for Wi-Fi networks, connection status

If you connect to a public Wi-Fi network (such as a restaurant hotspot), iOS 10 will show you recommendations to stay secure and keep your wireless traffic safe. There’s also better detection of poor connectivity with an orange “No Internet Connection” message in the Wi-Fi settings.

Accessibility: Magnifier and Color Filters

There are dozens of Accessibility features added to iOS every year. I want to highlight three of them.

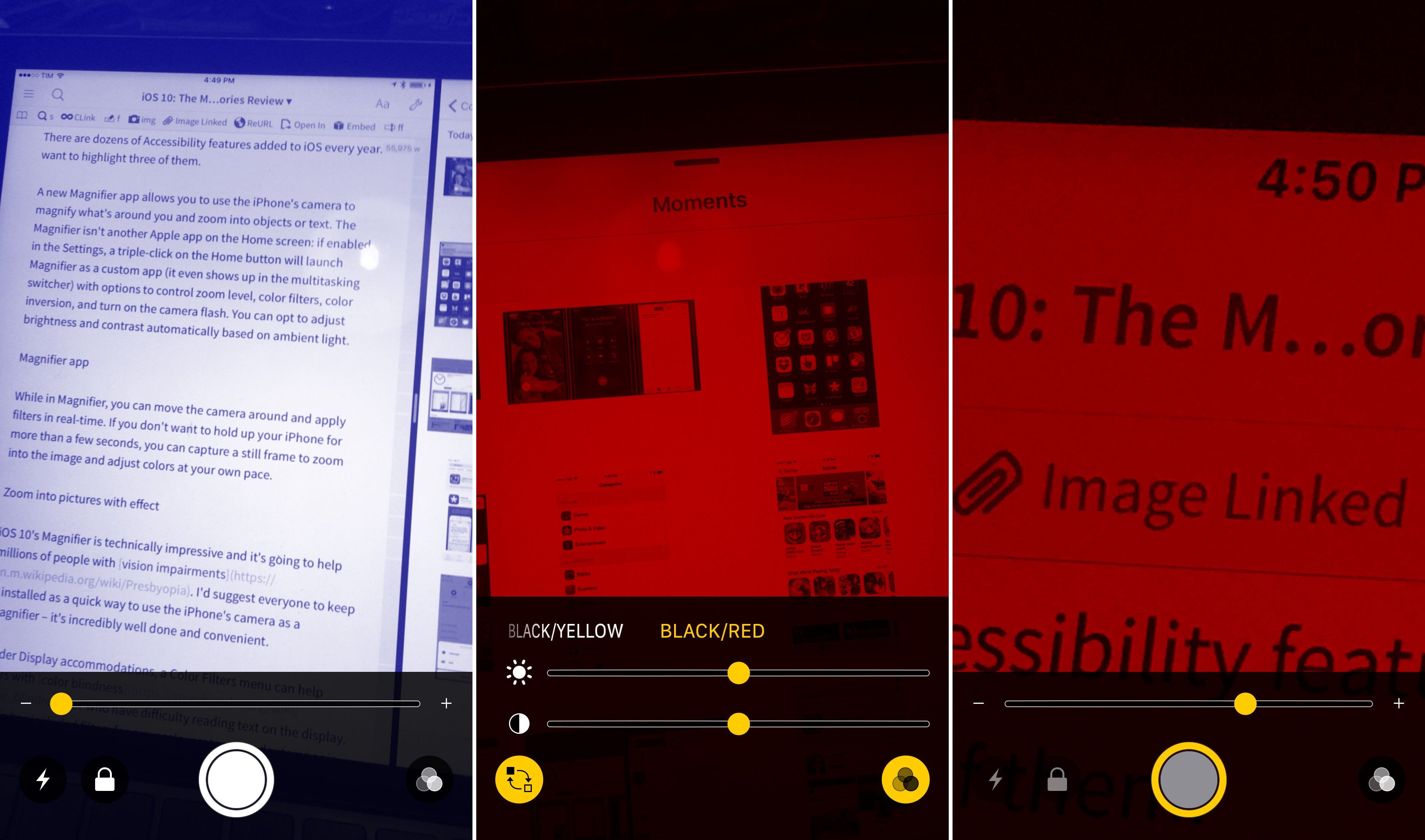

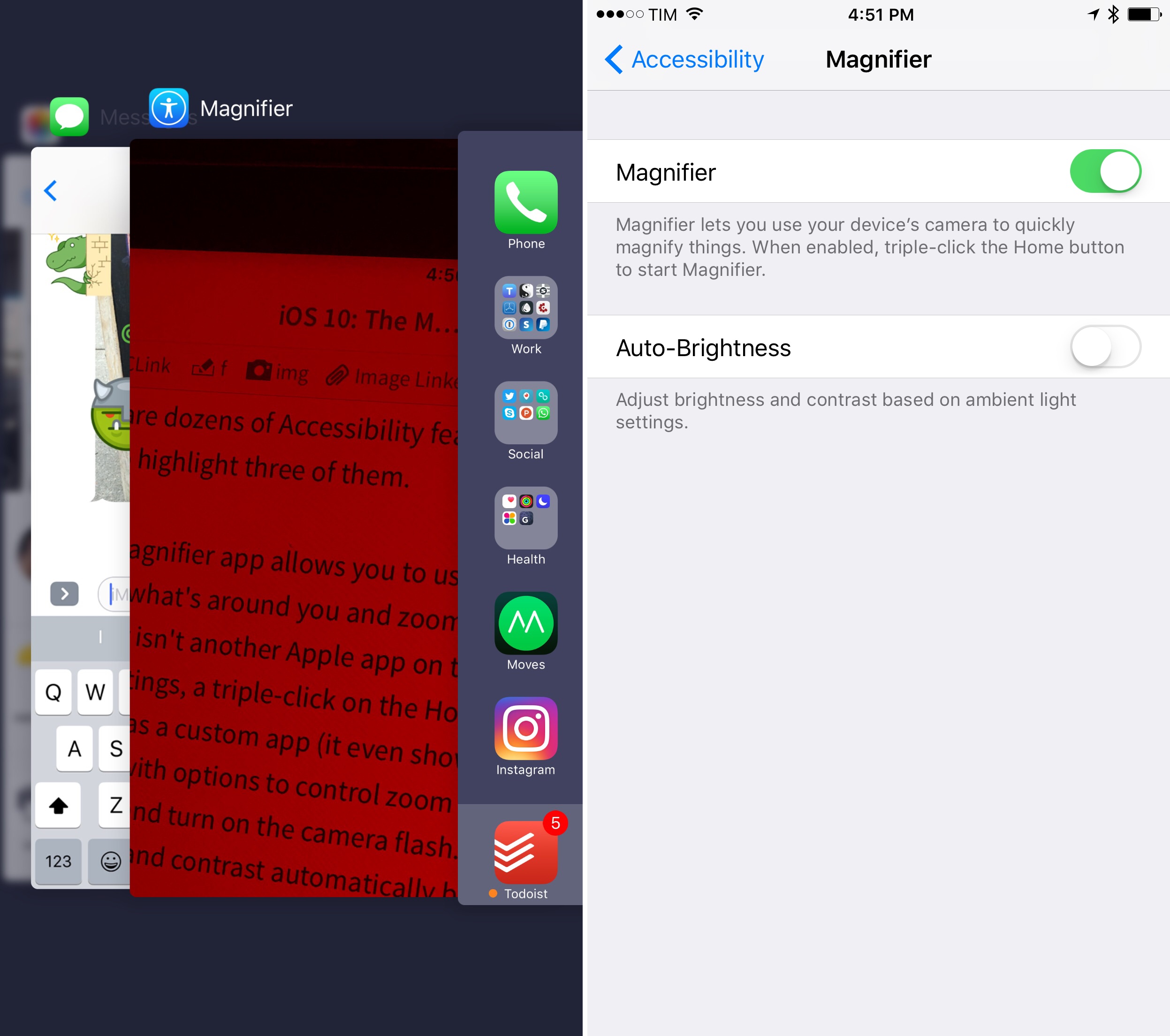

A new Magnifier app allows you to use the iPhone’s camera to magnify what’s around you and zoom into objects or text. The Magnifier isn’t another Apple app on the Home screen: if enabled in Settings, a triple-click on the Home button will launch Magnifier as a custom app (it even shows up in the multitasking switcher) with options to control zoom level, color filters, and color inversion, and to turn on the camera flash. You can opt to adjust brightness and contrast automatically based on ambient light.

While in Magnifier, you can move the camera around and apply filters in real-time. If you don’t want to hold up your iPhone for more than a few seconds, you can capture a still frame to zoom into the image and adjust colors.

iOS 10’s Magnifier is technically impressive and it’s going to help millions of people with vision impairments. I’d suggest that everyone keep it enabled as a quick way to use the iPhone’s camera as a magnifier – it’s incredibly well done and convenient.

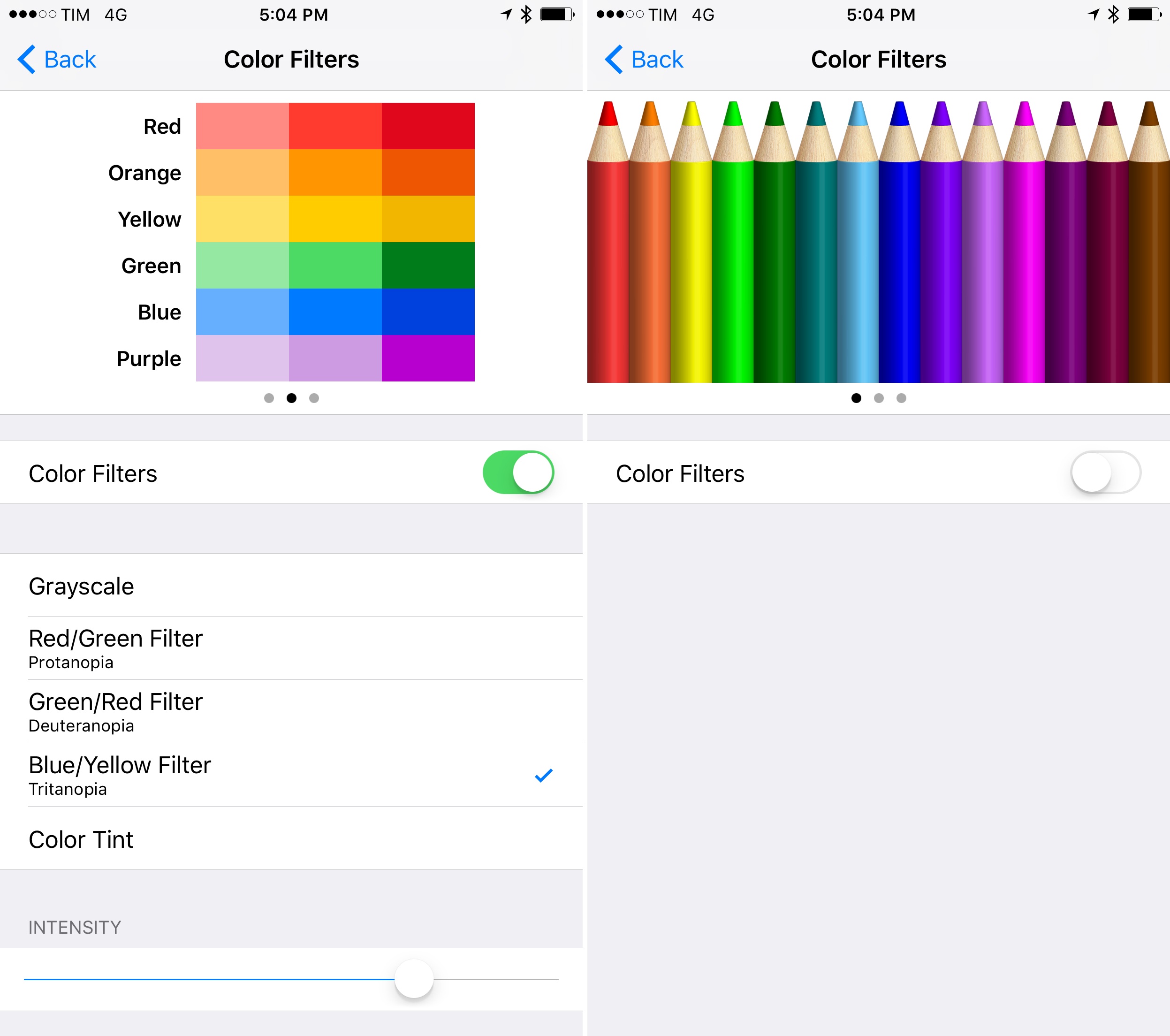

Under Display Accommodations, a Color Filters menu can help users who have color blindness or difficulty reading text on the display. Apple has included filters for grayscale, protanopia, deuteranopia, tritanopia, and color tint. It’s also a good reminder for developers that not all users see an app’s interface the same way.

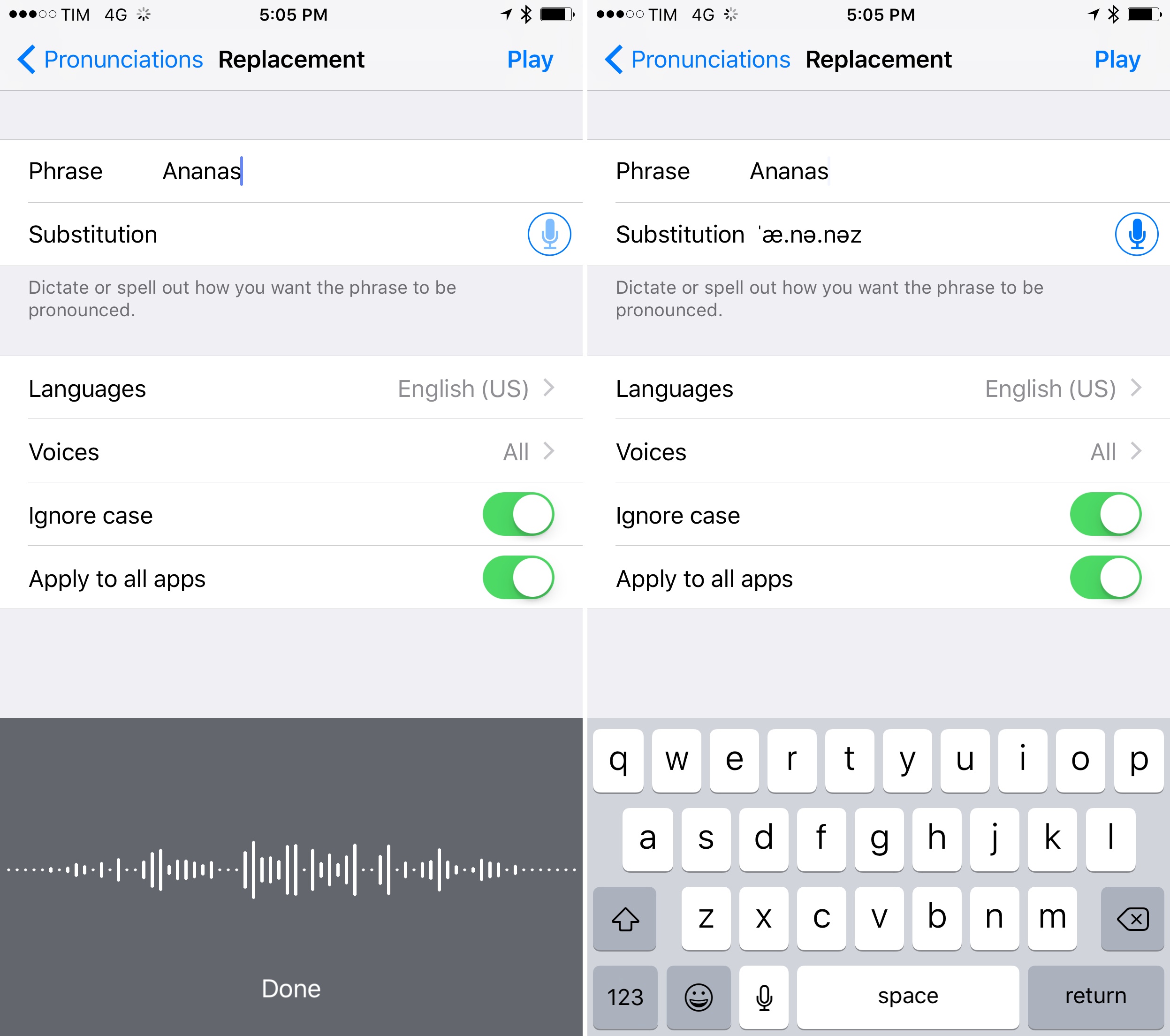

Finally, you can now define custom pronunciations to be used when iOS reads text aloud. Available in Settings > Accessibility > Speech > Pronunciations, you’ll be able to type a phrase and dictate or spell how you want it to be pronounced by the system voice.

Dictating a pronunciation is remarkable: iOS recognizes your voice and automatically inserts the pronunciation in the phonetic alphabet. You can then choose to apply a custom pronunciation to selected languages, ignore case, and pick which apps need to support it.