Siri

The theme of Siri in iOS 11 is that it’s gradually expanding to fulfill more roles on the system. Certain functionalities are being labeled as ‘Siri’ even if they aren’t part of the main voice interface [68], painting the assistant as a layer of intelligence spread across apps rather than a voice-only feature.

On the other hand, Siri’s primary function has also received some attention in iOS 11, although not to the extent we imagined in terms of new third-party integrations. With Apple focused on shipping its Siri-powered HomePod speaker later this year, a wider rollout of new SiriKit domains will likely have to wait until WWDC 2018. But that doesn’t mean there are no improvements to discuss for Siri in iOS 11.

Voice Features

Let’s start from the voice of Siri itself, which has been entirely rebuilt for iOS 11 to sound more natural and, well, human.

While Apple touted improvements to Siri’s voice in past versions of iOS, the new one in iOS 11 is a dramatic upgrade. The new Siri correctly pauses after a comma or period, constantly changing intonation and pitch so it doesn’t sound robotic or flat. Just listen for yourself to Siri’s evolution from iOS 9 to iOS 11, which was made possible by machine learning. Obviously, we’re still not at the point where we can’t discern Siri from an actual person, but judging from the increase in voice quality in recent years, it doesn’t seem crazy to think we’ll get there soon enough. If you’re updating multiple devices to iOS 11, update them separately and compare Siri’s voice between iOS 10 and 11: you’ll be surprised by how much it’s evolved.

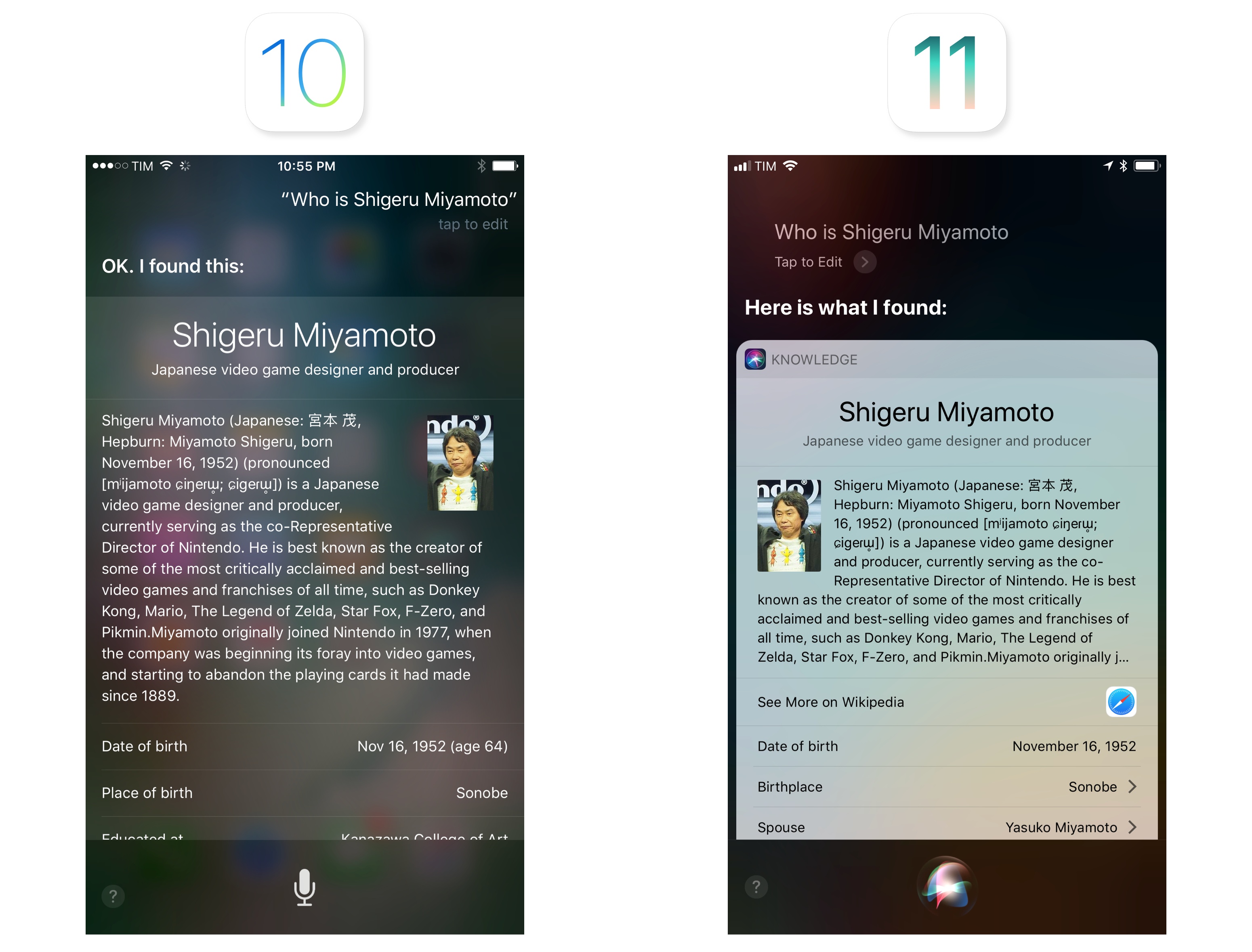

Siri also has an updated user interface, with brighter snippets for inline responses and richer graphics for a variety of results.

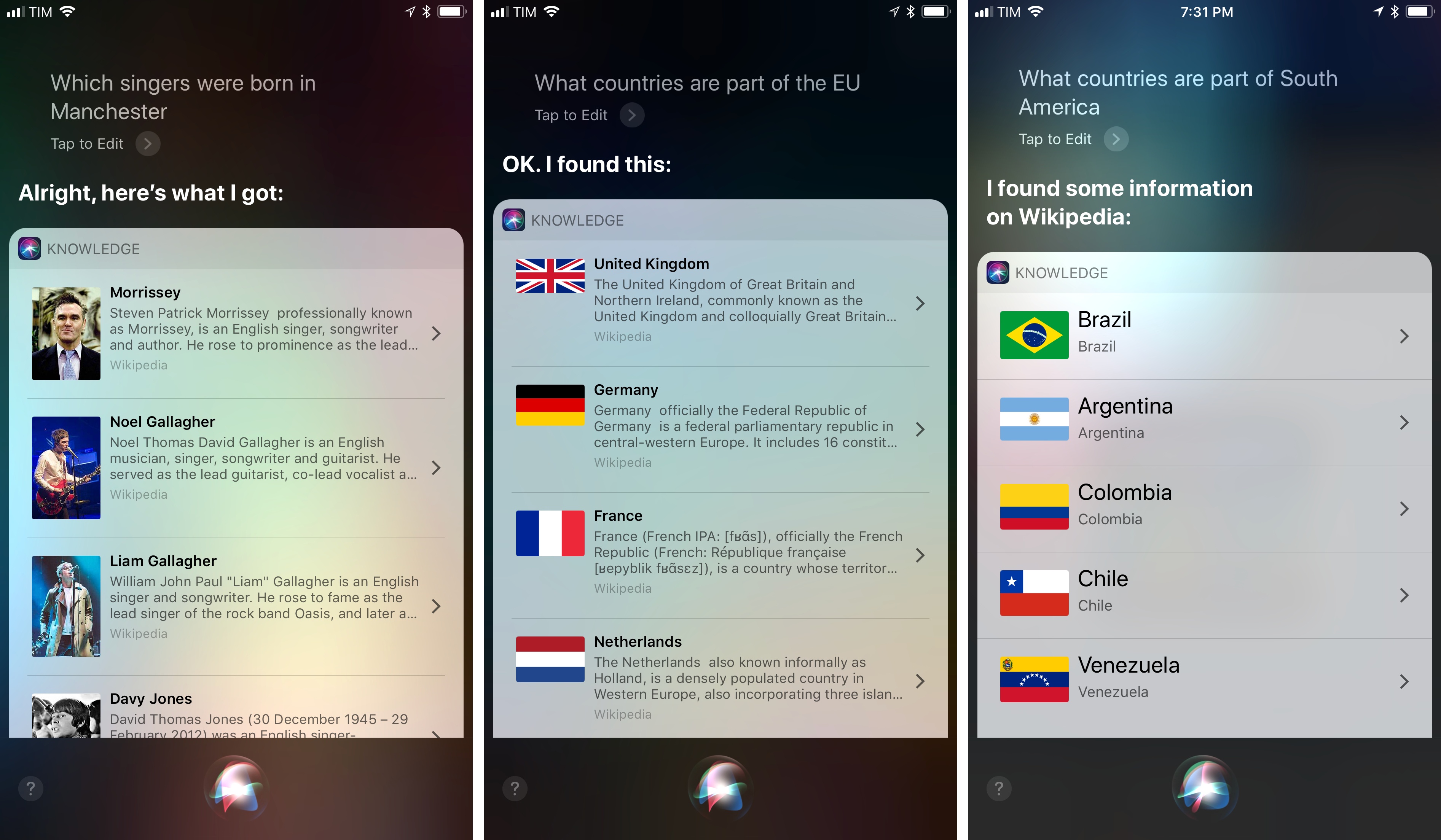

In iOS 11, Apple enhanced the integration of Siri’s knowledge with Wikipedia, giving its assistant the ability to display a list of results in addition to summaries of individual pages. You can test this by asking questions such as “Which singers were born in Manchester?” [69] or “What countries are part of the EU?”.

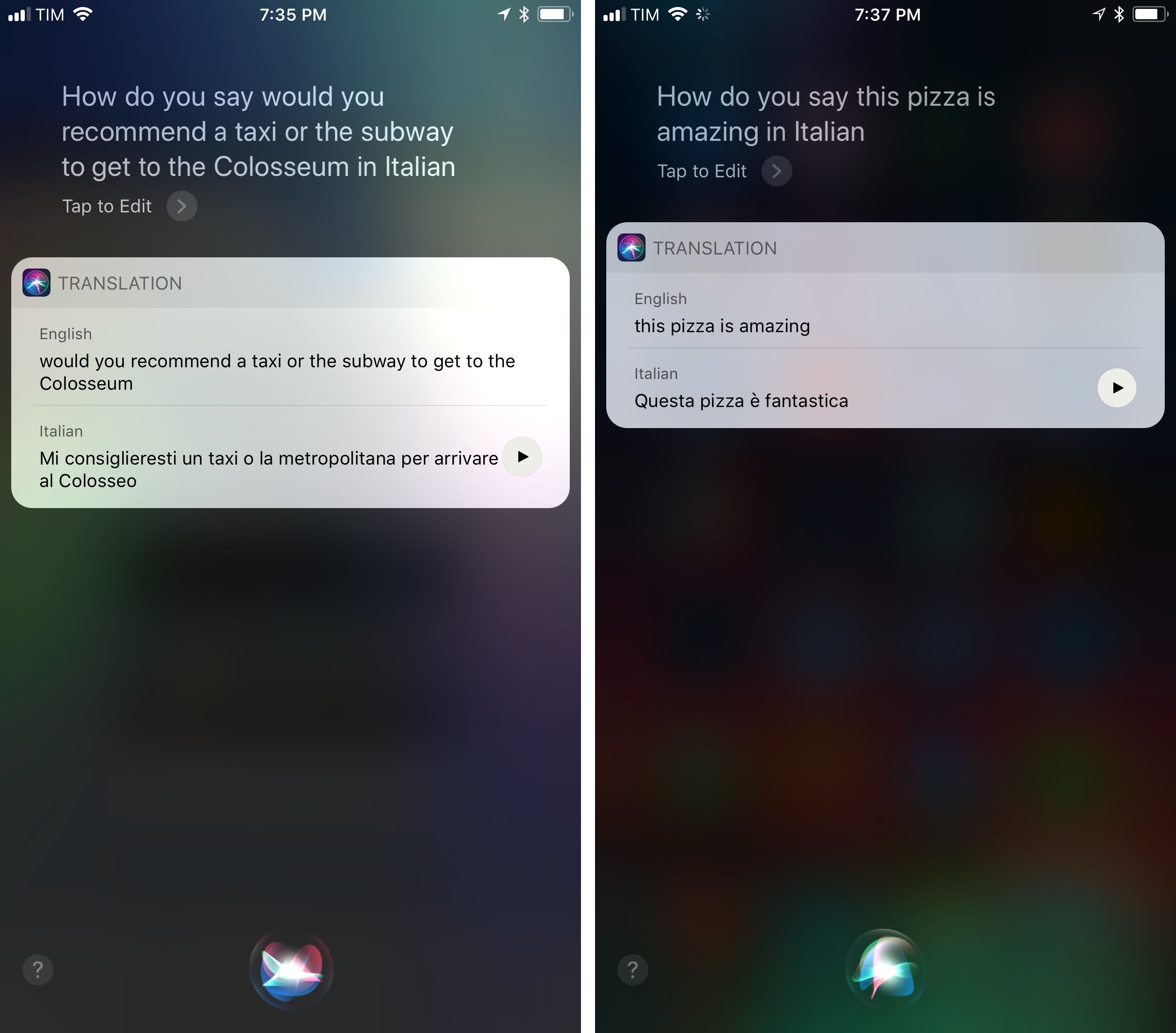

Translation is the perfect showcase for Siri’s stronger spoken language skills, as it’ll let you appreciate the changes to international voices too.

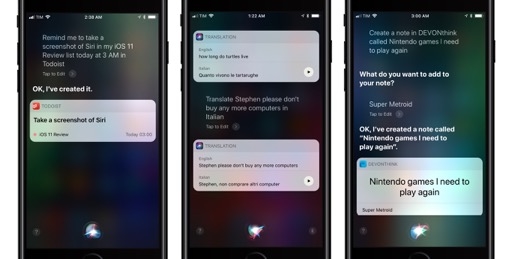

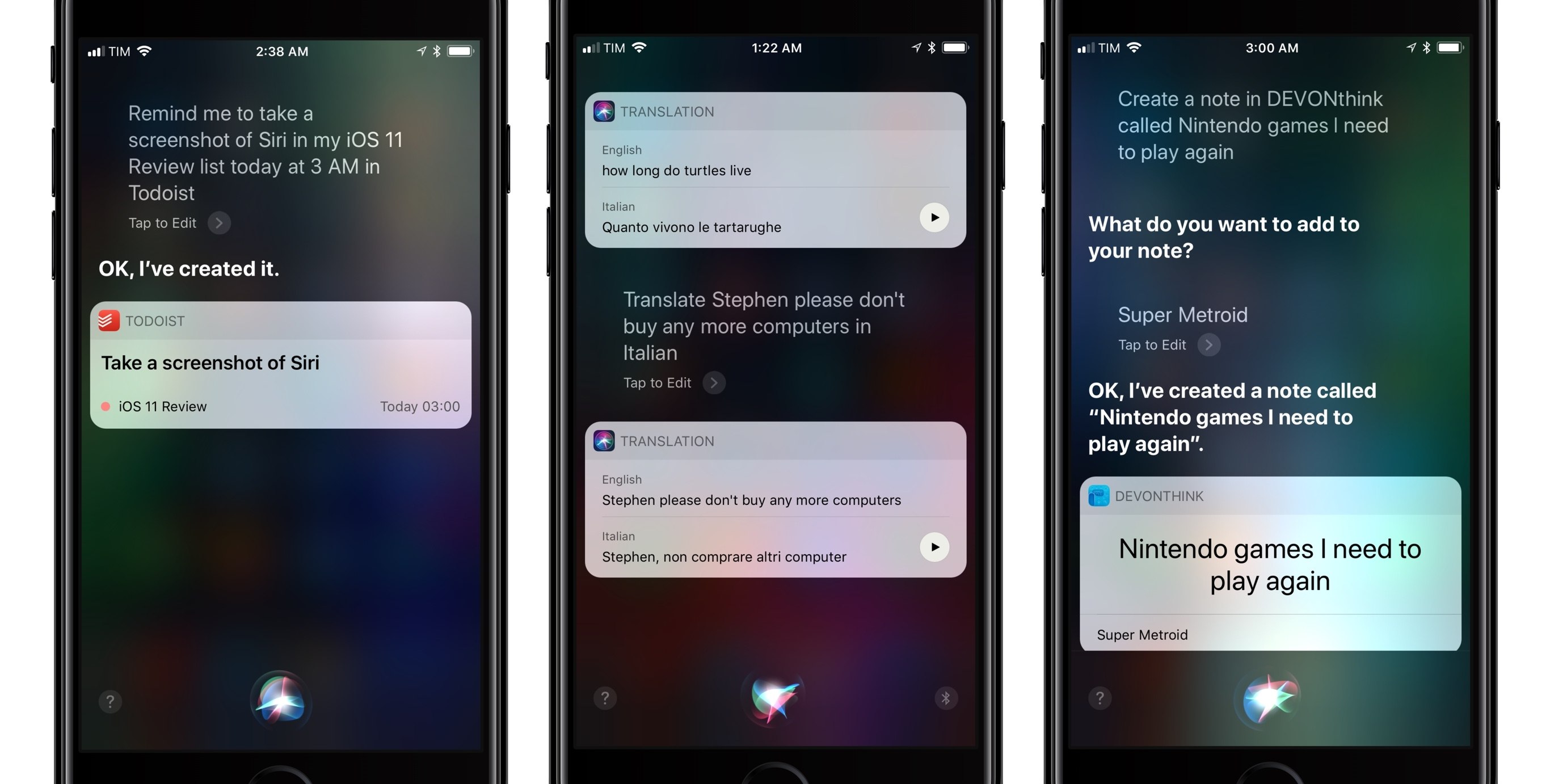

In iOS 11, Siri can translate sentences between six languages: English, French, German, Italian, Mandarin, and Spanish. To use translation, simply say something along the lines of “Translate Good evening in Italian” or “How do you say Pizza without pineapple in Italian”, and Siri will speak the translated sentence back to you as well as display it onscreen. There’s also a play button in the translated snippet so you can play the sentence back for someone else. As a feature best used in tourist-type situations, it’s good to have both visual and phonetic options when asking other people questions.

As a multilingual iPhone user, I’ve been able to test the quality of Siri translations in Italian and English, and I’ve been pleasantly surprised with the work Apple has done. Siri can handle simple requests and more elaborate ones most of the time, providing accurate translations with a better Italian voice than in iOS 10. There are occasional stumbles, but I’ve yet to find any translation service that passes the Turing test.

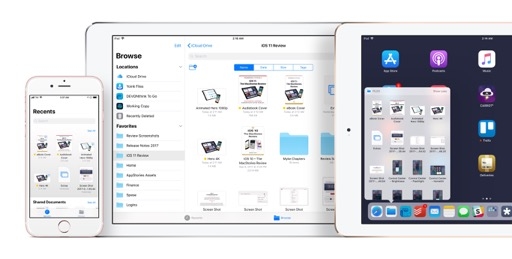

Siri translation is good enough, often great, and it’s amazing that we now have this feature for free on our devices without having to install an app or pay a subscription fee. In the future, I’d like to see Apple add more languages and, if possible, some degree of offline translation capabilities.

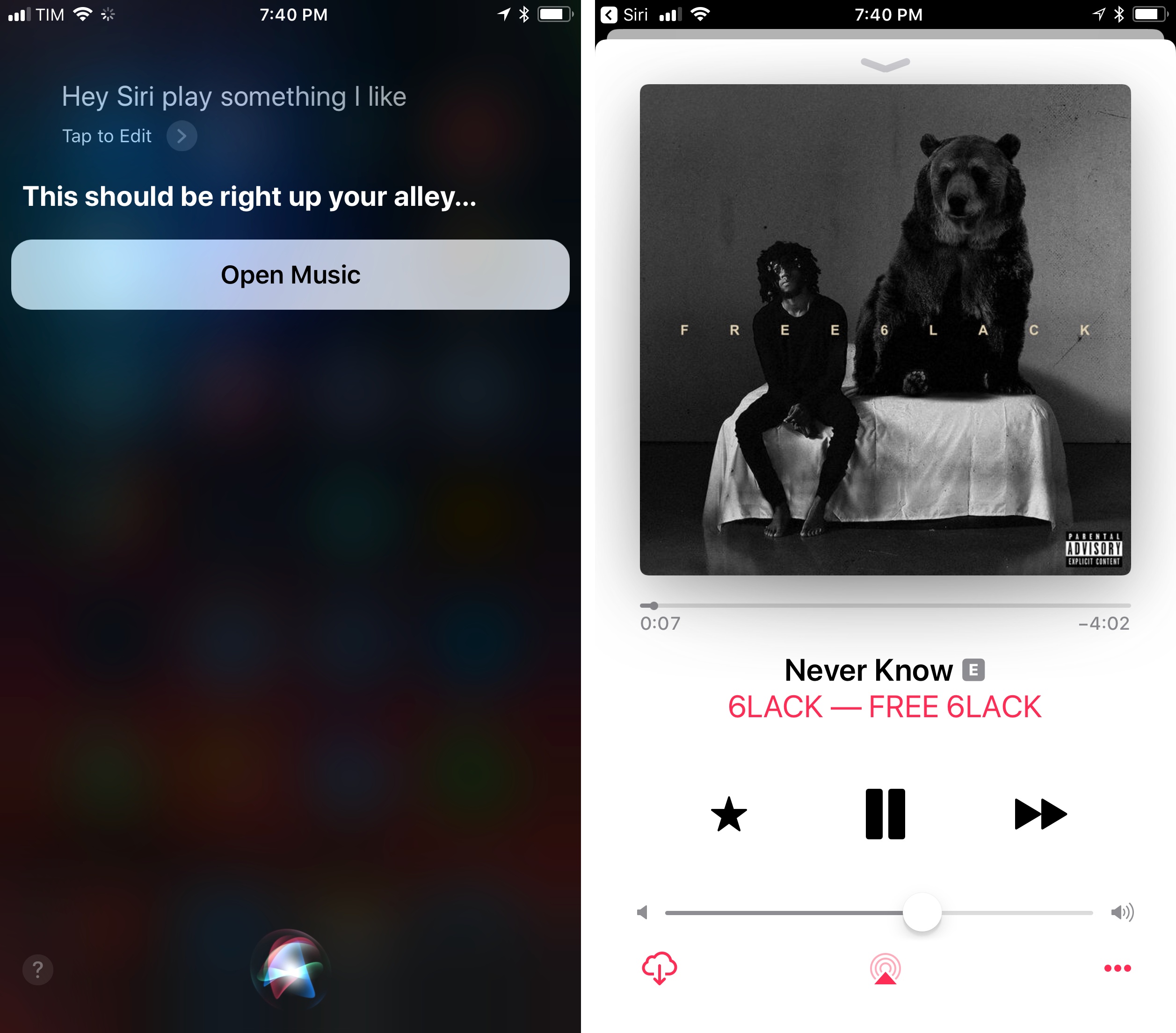

In preparation for the HomePod, Siri has also learned a new music skill: generating a mix of songs you may like based on your listening history from Apple Music. Ask Siri to “play something I like”, and, like a virtual DJ, it will play a personalized station of songs that match your taste and listening habits.

As you listen, you can tell Siri which songs you like, so you’ll get more similar ones down the queue. This feature has worked well in my tests and I look forward to asking Siri to play music on specific speakers in my house.

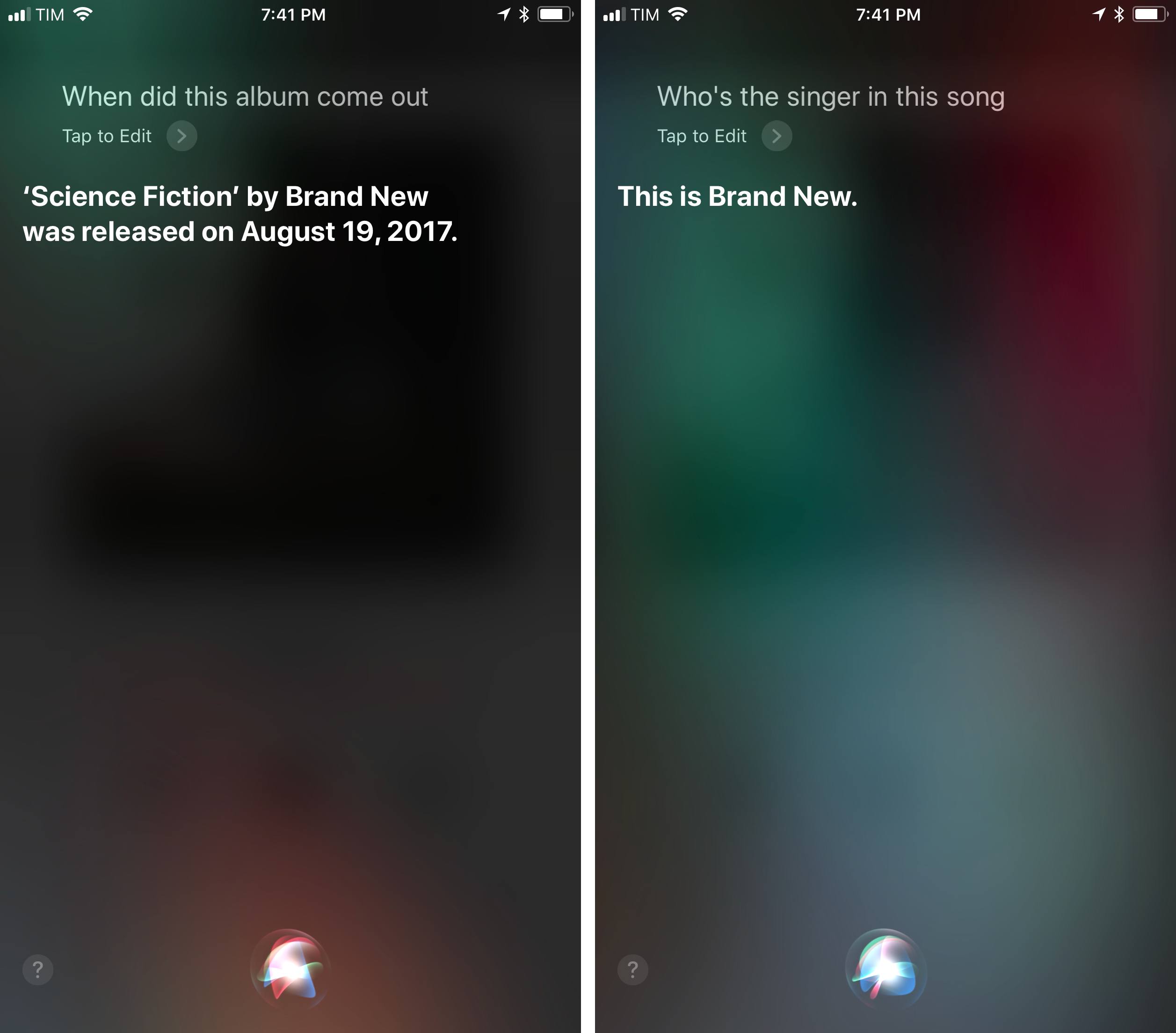

According to Apple, iOS 11’s Siri has also gained some of the musicologist capabilities originally advertised for the HomePod. In theory, these include answers to questions pertaining to the currently playing song such as “Who’s the drummer in this song?” or “When did this album come out?”.

In practice, I’ve only been able to receive correct answers for album release dates, suggesting that Siri’s support for music trivia will be fully rolled out in the coming months.

New Intelligence

Apple improved Siri’s intelligence on two fronts in iOS 11: data learned by Siri is now synced across your devices via iCloud, and the assistant should be more proactive in suggesting shortcuts or topics based on your current activity.

As Siri learns from your habits in iOS 11, it can apply that learning across all your devices signed into iCloud. This should result in a more consistent Siri experience as all your devices can now share the same pool of Siri knowledge. Unsurprisingly, Apple built this feature with end-to-end encryption, so your information stays private to your iCloud account.

The other intelligent functionalities advertised by Apple for iOS 11 have been hit or miss in my experience. For instance, Apple claims that Siri should be able to make article suggestions in Apple News for topics you browsed in Safari, but I’ve never seen such recommendations in the app. Similarly, Siri should be capable of proactively suggesting calendar events after confirming a reservation, appointment, or flight in Safari, but the feature didn’t work on my devices.

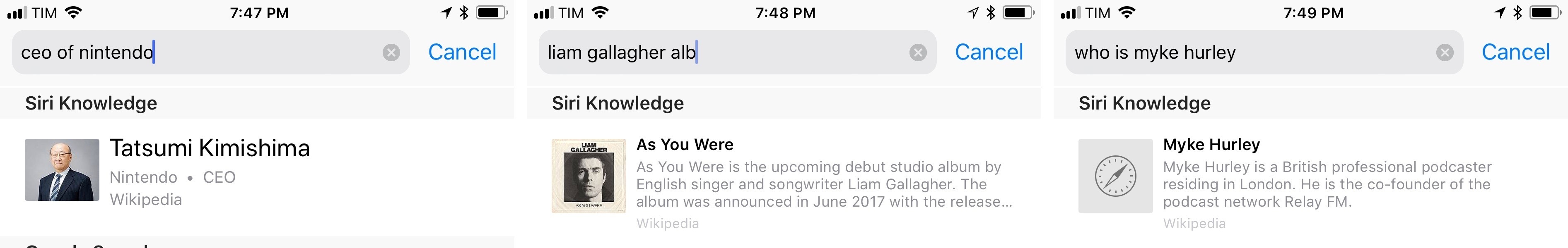

On the other hand, I’ve noticed that the Safari address bar can now present more Siri Knowledge results while you’re still entering a search query. In my tests, typing ‘Liam Gallagher album’ displayed a snippet extracted by Siri from Wikipedia that correctly listed ‘As You Were’; the same technique worked for queries like ‘Capital of Spain’, ‘CEO of Nintendo’, or ‘Who is Myke Hurley’. The increase in Siri Knowledge snippets is an effective way to obtain results for your query without opening a search engine page.

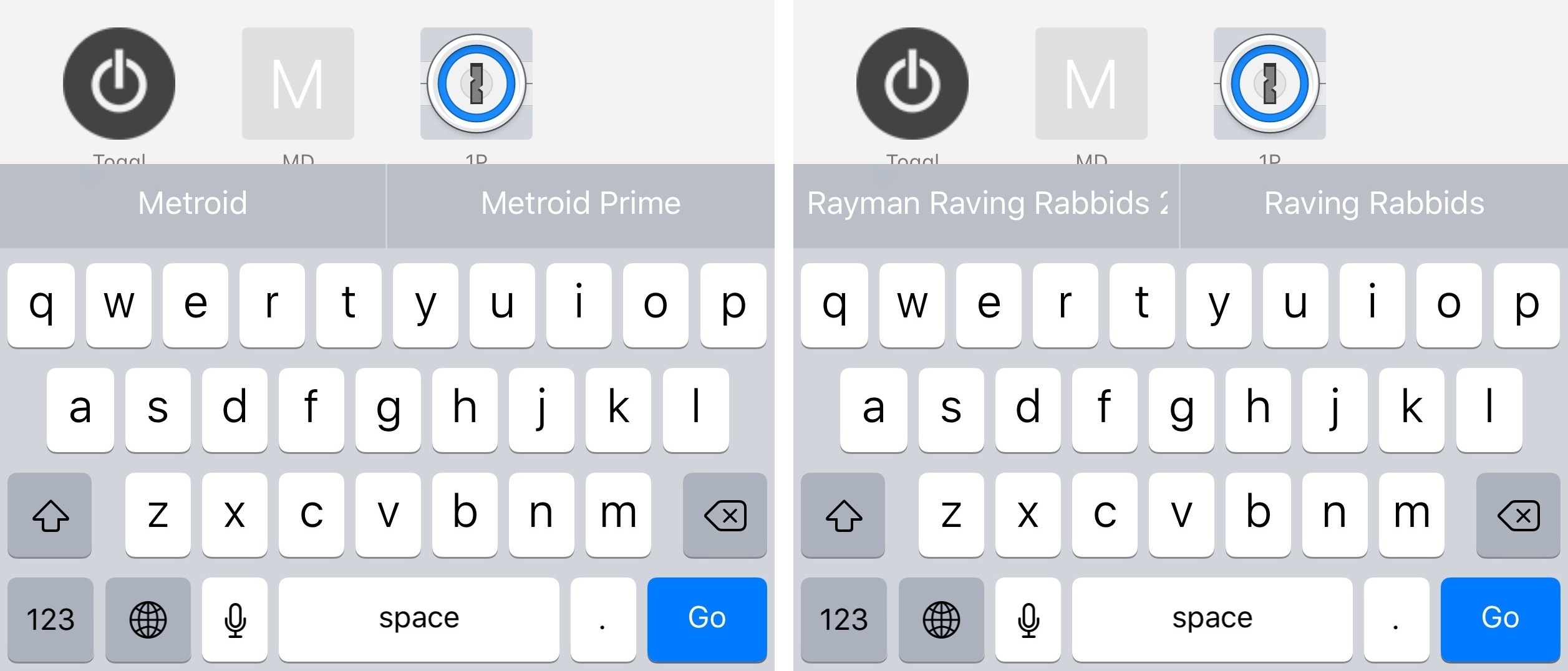

Siri can also inject topic suggestions in the QuickType keyboard when you’re reading an article in Safari. After a few seconds spent analyzing the current webpage, Siri will display shortcuts for potential search queries as soon as you tap the address bar to navigate somewhere else.

The proactivity of these suggestions is welcome, even though they tend to be somewhat generic. As I was reading an article about Metroid: Samus Returns, for example, Siri suggested ‘Metroid’ and ‘Metroid Prime’ in the keyboard – the names of the main videogame franchise. In an interview with Liam Gallagher, Siri recommended ‘Wonderwall (song)’ and ‘Oasis (band)’ – popular search queries associated with Mr. Gallagher. I would have liked Siri to suggest more timely queries – such as recent reviews for Nintendo’s latest Metroid game, or news about Gallagher’s upcoming album and tour – but the feature appears to be limited to general knowledge from Wikipedia.

Finally, topics learned by Siri should appear as contextual suggestions in other system apps such as Messages. This didn’t always work consistently in my tests, but when it did, it was remarkable.

When I typed the sentence ‘Samus Aran is the star of Metroid’ in an iMessage thread, Siri suggested ‘Aran’ after I typed ‘Samus’ and ‘Metroid’ after I entered the letters ‘Met’. Considering how much this feature improved over the summer, I expect it to become much smarter over the coming months.

Type to Siri

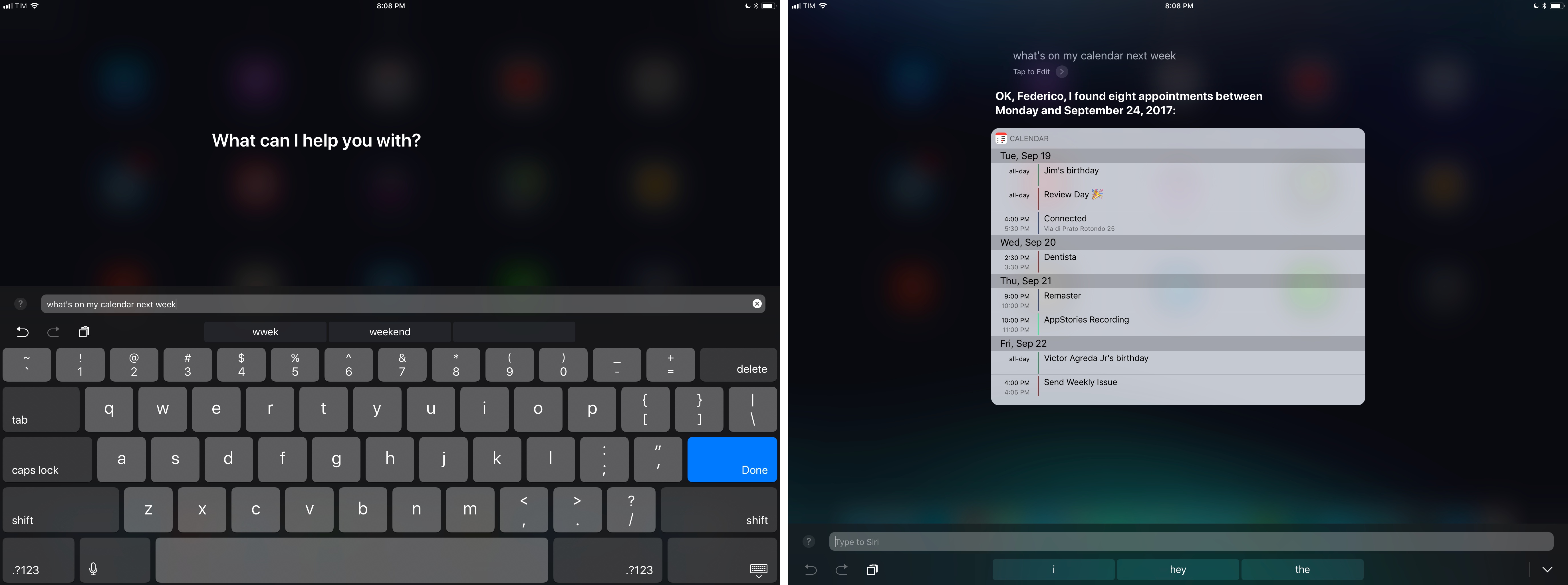

For years now, I’ve wanted Apple to add a textual mode to Siri so that we could ask questions without having to talk in public or quiet places. In iOS 11, the company has added a text-only Siri mode, but the feature comes with some limitations.

Under Settings ⇾ General ⇾ Accessibility ⇾ Siri, you’ll find a new Type to Siri option. Once enabled, this will turn off voice interactions when you invoke Siri via the Home button, showing the system keyboard instead; hands-free Hey Siri becomes the only way to summon the assistant in voice mode with Type to Siri activated.

Type to Siri works well: just as you’d expect, it’s nice to type a command when you can’t talk and receive a rich snippet with a silent, visual response in a matter of seconds.

Type to Siri is ideal on the iPad, especially if you connect an external keyboard and set up text replacements.

The problem is that Type to Siri and normal voice mode are mutually exclusive unless you use Hey Siri. If you prefer to invoke Siri with the press of a button and turn on Type to Siri, you won’t be able to switch back and forth between voice and keyboard input from the same screen. If you keep Hey Siri disabled on your device and activate Type to Siri, you’ll have no way to speak to the assistant in iOS 11.

I think Apple’s Siri team has been led by a misguided assumption. I hold my iPhone and open Siri using the Home button both when I’m home and when I’m out and about. Hey Siri is often unreliable in situations where I can speak to Siri; thus, I’d still like to keep voice interactions with Siri tied to pressing a button, but I’d also want a quick keyboard escape hatch for those times when I can’t speak to the assistant at all. Type to Siri should be a feature of the regular Siri experience, not a separate mode.

A simple keyboard button in the regular Siri UI would have sufficed: Siri would come up listening by default, but I could tap the keyboard option to start typing. The process would be slower for typists, but at least it wouldn’t exclude one type of activation. [70] Instead of choosing one method over the other, a different Type to Siri design could give us the best of both worlds – typing and voice.

Type to Siri shows that texting with a virtual assistant can be useful in certain contexts – not to mention its accessibility benefits. I’ve decided to keep it disabled for now [71], and I hope Apple will reconsider it as an option for Siri’s standard voice interface.

SiriKit Improvements

iOS 11 brings neat but minor changes to the SiriKit domains launched in iOS 10. Apps that integrate with the payment domain can now support intents to transfer money between multiple user accounts, as well as search for account information. The latter will be interesting to see adopted by apps, as users can ask to bring up details for all accounts or only a specific one, show features like reward points and miles, and check their current balance.

There are two new SiriKit domains in iOS 11, one of which, I believe, is easily going to introduce millions of new users to SiriKit for third-party apps.

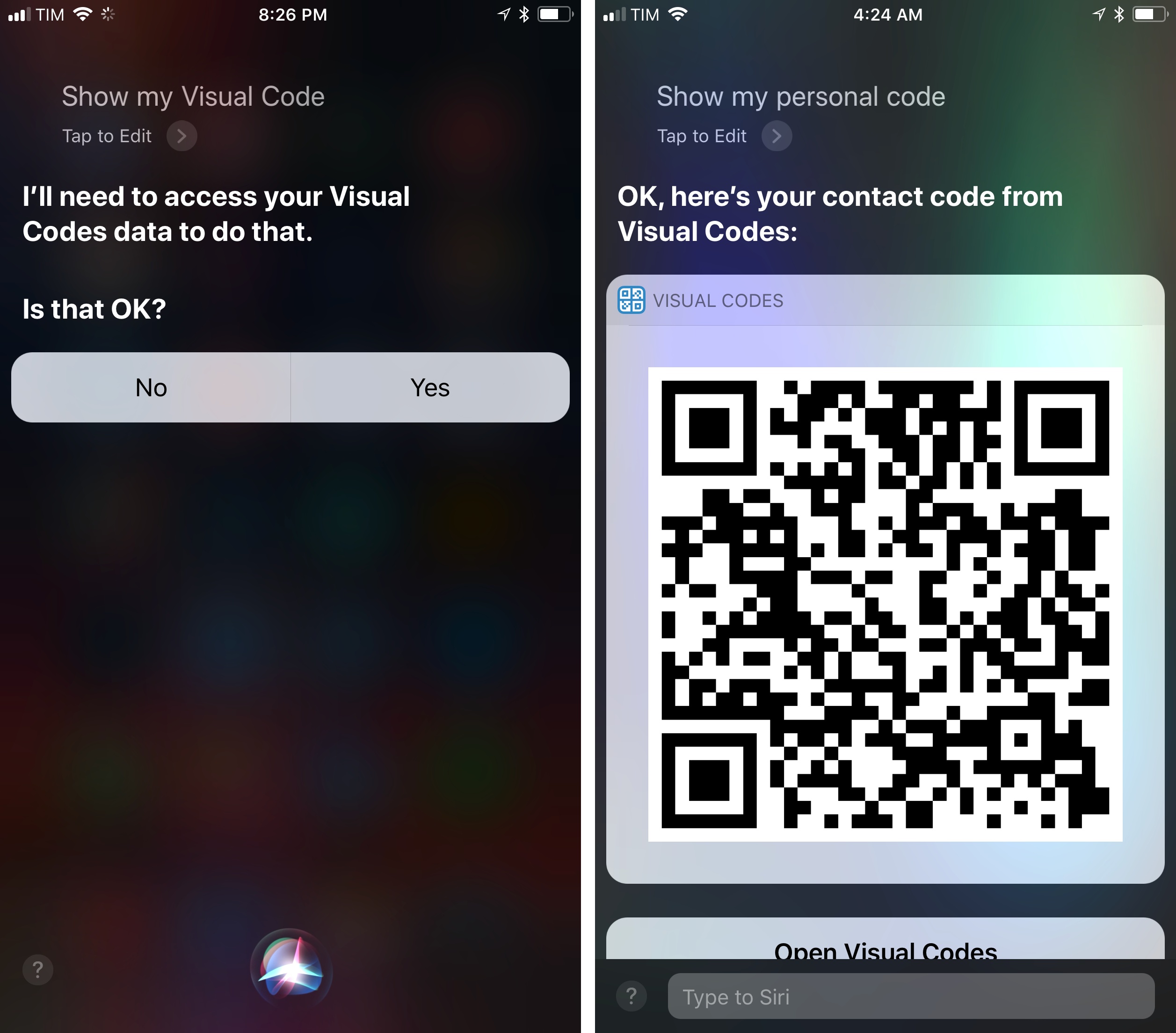

The first domain is Visual Codes. Apps that can generate QR-like codes to make payments, request them, or exchange contact information can now expose codes to Siri, which will display them to the user. This will be handy for social-type apps that tend to offer QR codes to share your profile. If Facebook Messenger adopts it, for instance, you’ll be able to say “Hey Siri, show my Messenger code” to retrieve your profile code and send it to someone else.

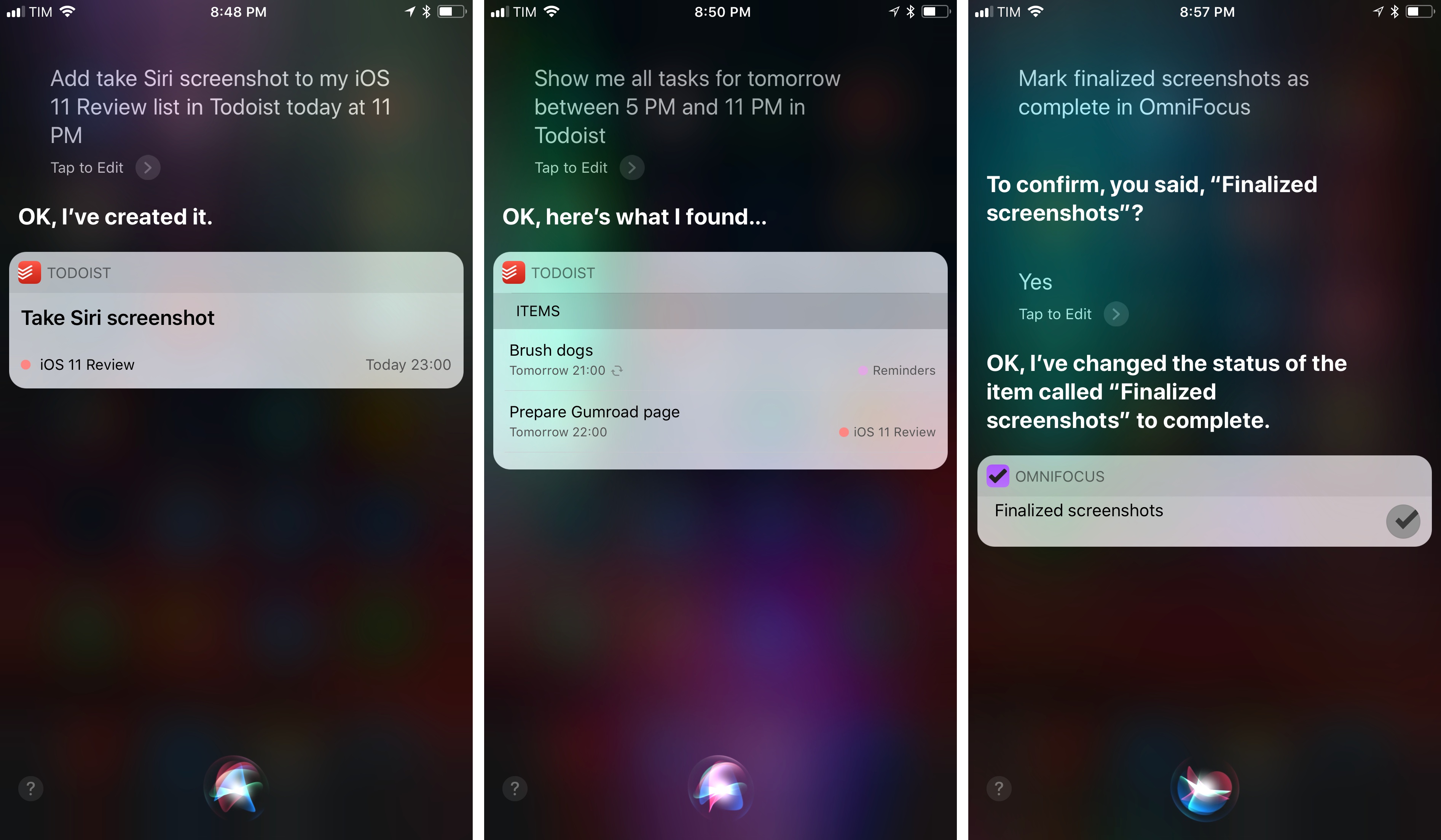

Then there’s Lists and Notes – one of the domains we’ve all been waiting for. With these intents, Siri in iOS 11 can create tasks and notes in third-party task managers and note-taking apps. The two intents are grouped in the same domain because they’re similar, but there are some slight differences to consider.

With lists, you can create, edit, manage, and search items in a to-do list. A variety of attributes can be associated with a to-do item in a list, including completion and time/location-based triggers for reminders. You can create a new list with multiple tasks inside it with one command, and you can also search for specific tasks and mark them as done from Siri.

If you’ve ever added Reminders via Siri, imagine the same kind of convenience, but available for task managers like OmniFocus, Things, or Todoist. As with Apple’s app, developers can support fixed due dates as well as repeating ones, which should provide enough options for everyone to start adding tasks via voice.

I was impressed by SiriKit in my tests with Todoist, which fully integrates with Apple’s assistant for task creation and retrieval in iOS 11. By adding “in Todoist” at the end of the same voice commands I’d use for Apple’s Reminders, I can create new tasks in a Todoist project and assign them a due date, or even ask Siri to “show me tasks for today between 1 PM and 6 PM in Todoist” to get an overview of my day.

The need to specify an app’s name at the end of the request is an annoying limitation (which stems from iOS’ lack of default apps for system features), but I got used to it quickly. The ability to use Todoist in Siri has vastly increased my usage of the assistant on a daily basis.

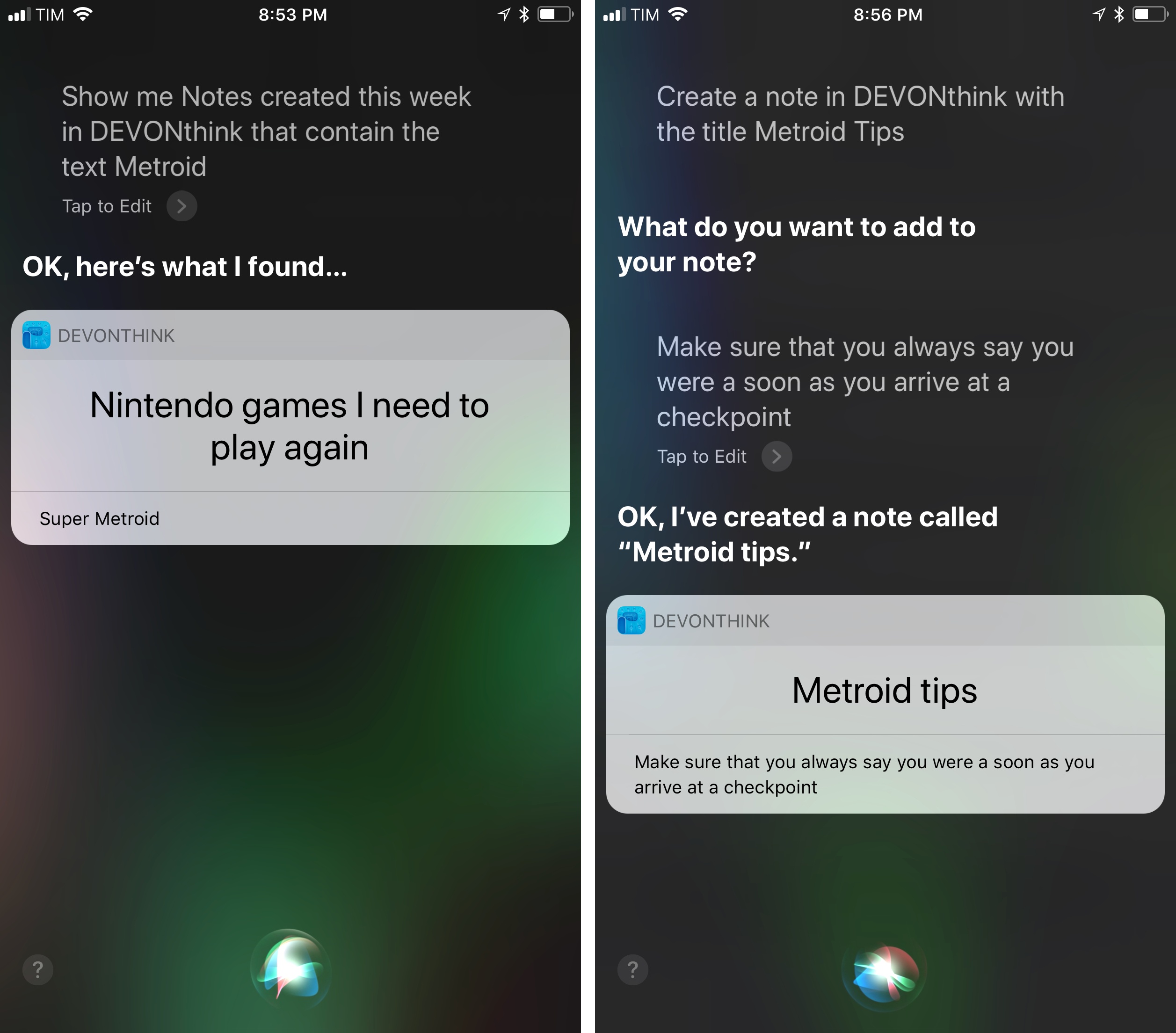

When you’re dealing with a Siri-enabled note-taking app, you can create notes that have a title and body text; optionally, they can be stored in a folder. Even better though, you can append text to the bottom of an existing note; this is perfect for my needs as I prefer to add new information and thoughts at the bottom of my documents.

Furthermore, you can search for all notes from an app and Siri will bring up a custom UI with results displayed inline. Just like you can search for specific types of tasks (“show me completed tasks”), you can filter notes by date and other properties, such as “notes created this week that contain the text Nintendo”.

After testing several task managers and note-taking apps with SiriKit extensions in iOS 11, I believe these intents are going to turn into my most used Siri commands. Particularly for adding tasks, SiriKit’s new intents allow me to stop relying on the Amazon Echo’s flaky Todoist skill.

It’ll also be fascinating to see how developers of other types of apps will implement Siri’s new domains. Last year’s messaging domain ended up being used by email clients and apps that wouldn’t normally be considered “messaging” – a nice side effect of SiriKit’s open format and versatility. Lists and notes are features that most productivity apps have to support in some form; I’m looking forward to some surprises in this area.

SiriKit may not be advancing at the same breakneck pace as Alexa skills, but whenever Apple rolls out a new domain, it’s generally well thought-out and it supports both custom interfaces and voice responses in dozens of languages. Siri has fewer domains than Amazon’s Alexa, but the ones it has are more polished.

This, I think, represents Apple’s overall strategy with Siri: it’s built judiciously and iterated upon carefully; it may be behind competitors in raw numbers, but it works at a much bigger scale across multiple countries, with a deeper language model.

Siri still isn’t perfect, but it’s consistently getting better on an annual basis. With a new voice, translations, third-party task manager support, and a speaker on the horizon, this might be the year we’re going to use Siri a whole lot more.

- [68] Just look at watchOS 4 and its new Siri watch face.

- [69] “Are you mad fer it?”

- [70] Yes, I could use dictation in the keyboard to “speak” to Siri even if typing mode is enabled. However, keyboard dictation still requires tapping a button in the keyboard, whereas I would want the opposite: voice by default, keyboard button to type.

- [71] On my iPhone. I have enabled Type to Siri on my iPad, which tends to be connected to a keyboard that lets me type requests quickly (although I wish iOS had a system-wide keyboard shortcut to open Siri on the iPad). Here’s a pro tip: enable Type to Siri on your iPad and set up some text replacements for common questions you ask Siri, such as “turn on the living room lights”. The shortcut will expand after it’s typed, and you’ll have an easy solution to the lack of presets for requests you frequently type.