Search and Deep Linking

iOS 9 introduces a supercharged Spotlight that, under the umbrella of Search, aims to lay a new foundation for finding app content and connecting apps.

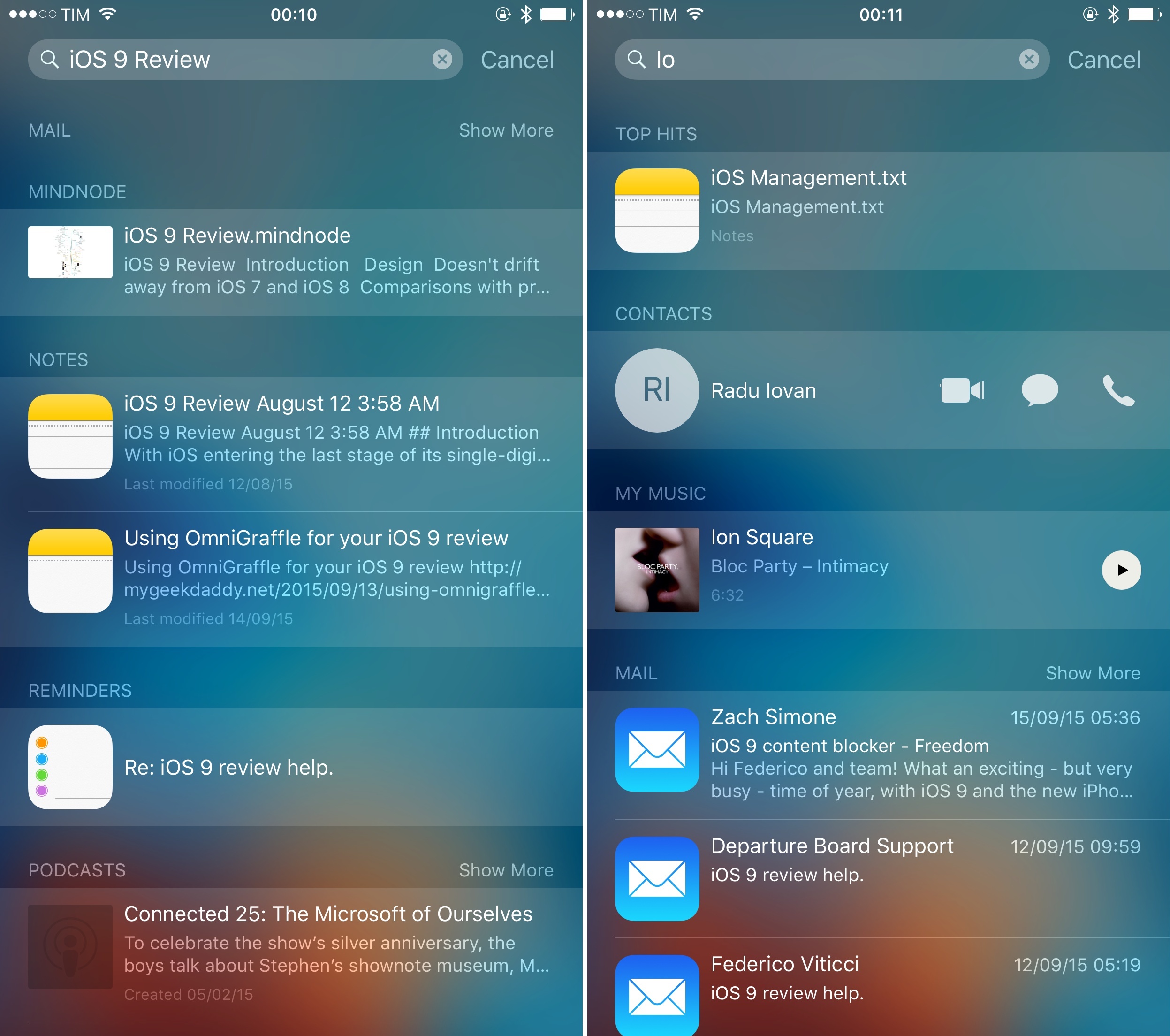

iOS Search is accessed by swiping down on any Home screen (like the existing Spotlight) or as a standalone page to the left of the first Home screen, in a return to form that does more than just search. When swiping down from the Home screen, the cursor is immediately placed in the search box, ready to type; if opened from the dedicated page, you’ll have to tap the search box or swipe down to bring up the keyboard – an important difference geared toward showcasing other features available in this screen. As far as searching is concerned, both modes lead to the same results.

On the surface, iOS 9 Search augments the existing Spotlight by extending its capabilities beyond launching apps and searching for data from selected partners. With iOS 9, you’ll be able to look for content from installed apps, such as documents from iCloud Drive, events from a calendar app, or direct messages from a Twitter client. This can be done by typing any query that may be relevant to the content’s title and description; results display rich previews in iOS 9, optionally with buttons to interact with content directly from search.

iOS Search is more than a fancier Spotlight. Changes in this release include new APIs for local apps and the open web, highlighting Apple’s interest in web search as an aid to apps. Some aspects of it aren’t clear yet – and Apple has been tweaking quite a few things over the summer – but the nature of the change is deep and intriguing.

A key distinction to note in Apple’s implementation of Search is that there are two different indexes powering results that appear in Spotlight and Safari: a local, on-device index of private user content and data that is never shared with anyone or synced between devices; and a server-side, cloud index that is under Apple’s control and fed by the company’s Applebot web crawler.

Local Search: CoreSpotlight and User Activities

In iOS 9.0, the focus is mostly on the local index, which will power the majority of queries on user devices.

iOS 9 can build an index of content, app features, and activities that users may want to get back to with a search query. It’s built on two APIs: CoreSpotlight, an index of user content built like a database that can be periodically updated in the background; and our friend NSUserActivity, this time employed to index user activities in an app as points of interest.

From a user’s perspective, it doesn’t matter how an app indexes content – using the new search feature to find it always works the same way. Search results from apps in iOS 9 are displayed with the name of the app they’re coming from, a title, description, an optional thumbnail image, and buttons for calling and getting directions if those results include phone numbers or addresses. Visually, there is no difference between results powered by CoreSpotlight and those based on user activities: both display rich previews in iOS 9 and can be tapped to open directly in an app.

On a technical level, the difference between CoreSpotlight and NSUserActivity for developers is that while activities are intended to be added to the on-device index as the user views content in an app, CoreSpotlight entries can be updated and deleted in the background even if the user isn’t doing anything in the app at the moment. For this reason, a todo app that uses sync between devices may want to adopt CoreSpotlight to maintain an index of up-to-date tasks: while entries in the index can’t be synced, developers can update CoreSpotlight in the background, therefore having a way to check for changes in an app – in this example, modified tasks – and update available results accordingly.

Apple is also giving developers tools to set expiration dates for entries in the CoreSpotlight index (so they can be purged after some time to prevent the archive from growing too large). Developers can also rely on iOS’ existing background refresh APIs and combine them with CoreSpotlight background changes to keep the local index fresh and relevant.
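To make the background-update and expiration behavior more concrete, here is a minimal Python sketch of a keyed index whose entries can be updated, deleted, and purged after an expiration date. It is a toy model under my own assumptions, not CoreSpotlight’s actual API: the `Entry` and `LocalIndex` names, their fields, and the substring matching are all hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List, Optional


@dataclass
class Entry:
    """One searchable item; every field name here is hypothetical."""
    identifier: str
    title: str
    description: str
    expires_at: Optional[datetime] = None  # None means "never expires"


class LocalIndex:
    """Toy stand-in for an on-device index; not CoreSpotlight's real API."""

    def __init__(self) -> None:
        self._entries: Dict[str, Entry] = {}

    def upsert(self, entry: Entry) -> None:
        # Adding and updating are the same operation: the identifier is the key.
        self._entries[entry.identifier] = entry

    def delete(self, identifier: str) -> None:
        # Deleting an unknown identifier is a no-op.
        self._entries.pop(identifier, None)

    def purge_expired(self, now: datetime) -> int:
        # Drop entries whose expiration date has passed; return how many were removed.
        expired = [key for key, entry in self._entries.items()
                   if entry.expires_at is not None and entry.expires_at <= now]
        for key in expired:
            del self._entries[key]
        return len(expired)

    def search(self, query: str) -> List[Entry]:
        # Case-insensitive substring match on title and description.
        needle = query.lower()
        return [entry for entry in self._entries.values()
                if needle in entry.title.lower() or needle in entry.description.lower()]


# Simulated background pass for a todo app: a task changed on another device,
# and one stale entry is past its expiration date.
now = datetime(2015, 9, 16)
index = LocalIndex()
index.upsert(Entry("task-1", "Buy milk", "Groceries", now + timedelta(days=30)))
index.upsert(Entry("task-2", "Old reminder", "Stale", now - timedelta(days=1)))
index.upsert(Entry("task-1", "Buy oat milk", "Groceries", now + timedelta(days=30)))
index.purge_expired(now)
```

After this pass, a search for “milk” only returns the updated task, and the stale entry is gone even though the user never opened the app.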

Apple’s own apps make use of both CoreSpotlight and NSUserActivity to build an on-device index of user content. Mail, Notes, Podcasts, Messages, Health, and others allow you to look for things you’ve either created, edited, organized, or seen before, such as individual notes and messages, but also the Heart Rate pane of the Health app or an episode available in Podcasts.

I’ve also been able to try apps with support for local indexing on iOS 9. Drafts 4.5 enables you to search for text from any draft stored in the app. Clean Shaven Apps has added CoreSpotlight indexing support to Dispatch, allowing you to look for messages already stored in the inbox and a rolling archive of the latest messages from another mailbox. On iOS 9, iThoughts lets you search for any node in a mind map and jump directly to it from search, bypassing the need to find a file in the app, open it, and find the section you’re looking for.

WhereTo’s iOS 9 update was perhaps the most impressive search-related update I tested: the app lets you search for categories of businesses nearby (such as restaurants, coffee shops, supermarkets, etc.) as points of interest, but you can also get more detailed results for places you’ve marked as favorites, with a button to open directions in Maps from search with one tap.

Apple has given developers a fairly flexible system to index their app content, which, with a proper combination of multiple APIs, should allow users to find what they expect to see in their apps.

However, this isn’t all that Apple is doing to make iOS Search richer and more app-aware. Apple is building a server-side index of crawled web content that has a connection to apps – and that’s where their plans get more confusing.

Apple’s Server-Side Index

In building iOS 9 Search, Apple realized that apps often have associated websites where content is either mirrored or shared. For the past several months, Apple has been crawling websites they deemed important to index their content with a crawler called Applebot; now, they’re ready to let every website expose its information to Applebot via web markup. The goal is the same: to provide iOS Search with rich results – in this case culled from a much larger source.

The server-side index is a database in the cloud of public content indexed on the web. Unlike a traditional search engine like Google, though, Apple’s primary motivation to keep a cloud index is to find web content that has an app counterpart, so users can easily view it in a native app.

Think of all the services that have native apps for content that is also available on the web: from music services to online publications and websites like Apple’s online store or Foursquare, many of the apps we use every day are based on content that comes from the web and is experienced in an iOS app. From this perspective, the question Apple is trying to answer is simple: what about content that can be viewed in an app but that hasn’t been experienced yet by the user? What about a great burger joint near me listed in Foursquare that I still haven’t seen in the Foursquare app, or an article about Italian pasta that I haven’t read in my favorite site’s app yet?

Instead of having to search Google or use each app’s search feature, Apple is hoping that the iOS Search page can become a universal starting point for finding popular content that can also be opened in native apps.

Because the web is a big place, to understand the relationship between websites and apps Apple has started from an unexpected but obvious place: iTunes Connect. When they submit an app to the App Store, developers can provide URLs for marketing and support websites of an app; Apple can match those websites with the app in their index and crawl them with Applebot for content that could enrich Search. These pieces of content – such as listings from Airbnb or places in Foursquare – will then be available in the Search page and Safari (the browser’s search feature can only search this type of content, as it doesn’t support local app search) and will open in a native app or on the indexed webpage if the app isn’t installed.
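The fallback described above (open the native app when it’s installed, otherwise the indexed webpage) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `resolve_destination` function, the host-to-app mapping, and the `com.example.listings` identifier are hypothetical, and Apple hasn’t documented how the matching is actually implemented.

```python
from typing import Dict, Set, Tuple
from urllib.parse import urlparse


def resolve_destination(result_url: str,
                        site_to_app: Dict[str, str],
                        installed_apps: Set[str]) -> Tuple[str, str]:
    """Decide where a web result should open.

    site_to_app maps a website host (as a developer might declare it when
    submitting an app) to a hypothetical app identifier.
    """
    host = urlparse(result_url).hostname or ""
    app_id = site_to_app.get(host)
    if app_id is not None and app_id in installed_apps:
        # The site is associated with an app and that app is on the device.
        return ("app", app_id)
    # No associated app, or the app isn't installed: fall back to the webpage.
    return ("web", result_url)


# Hypothetical association between a website and its app.
site_to_app = {"www.example.com": "com.example.listings"}
url = "https://www.example.com/listings/42"

with_app = resolve_destination(url, site_to_app, {"com.example.listings"})
without_app = resolve_destination(url, site_to_app, set())
```

Here `with_app` points to the app, while `without_app` falls back to the original URL, which is the behavior a user would see as opening Safari.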

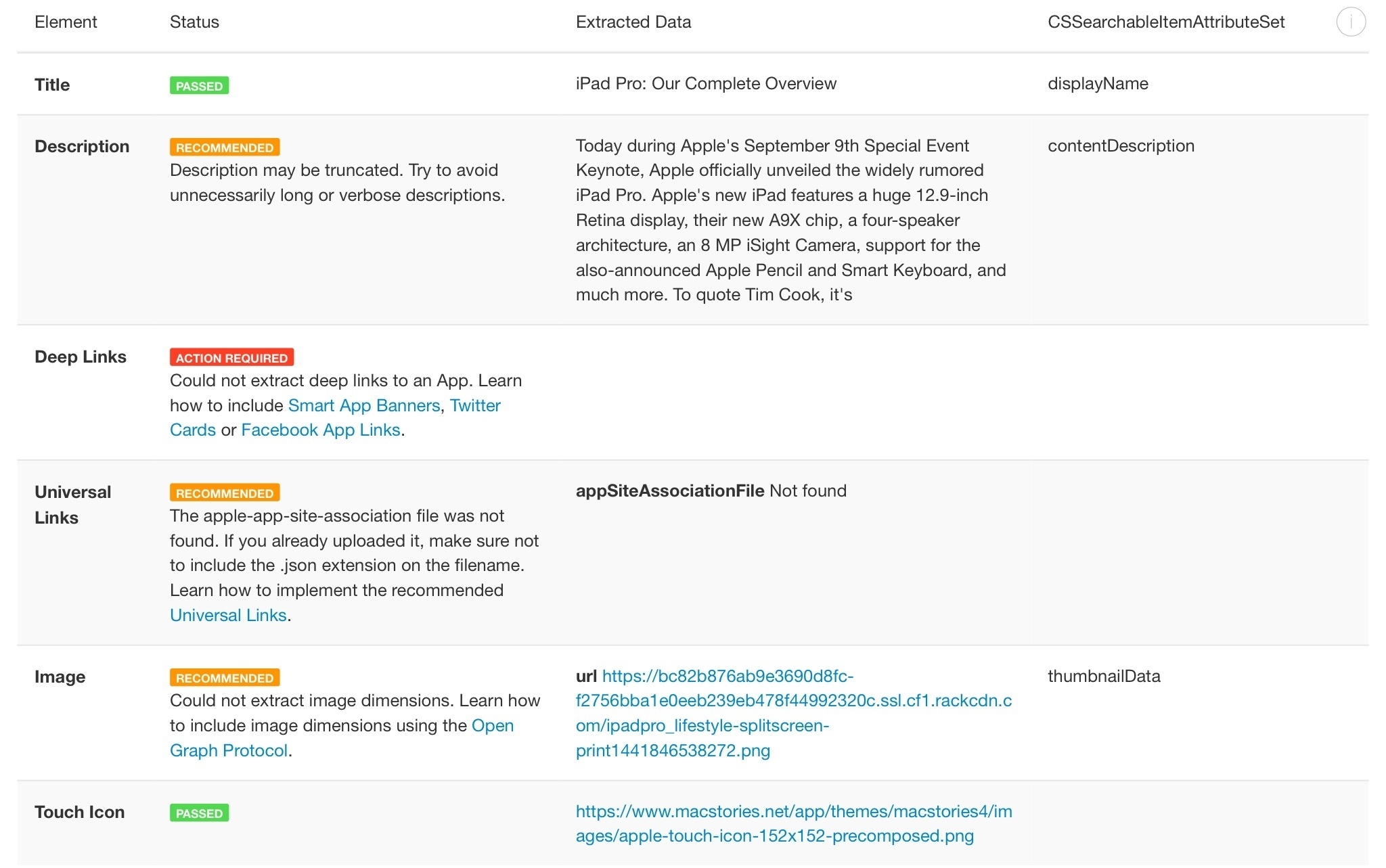

To teach Applebot how to crawl webpages for iOS Search and give results some structure, Apple has rolled out support for various web markup technologies. Developers who own websites with content related to an app will be able to use Smart App Banners, App Links, and Twitter Cards to describe deep links to an app; the schema.org and Open Graph standards are used to provide metadata for additional result information.

Apple calls these “rich results”. With schema.org, for instance, Applebot is able to recognize tagged prices, ratings, and currencies for individual listings on a webpage, while the Open Graph image tag can be used as an image thumbnail in search results. The goal is to make web-based results as rich in presentation as their native counterparts. When you see rich descriptions and previews for links shared on Twitter, Facebook, or Slack, Open Graph and schema.org are usually behind them. Apple wants the same to be true for iOS search results. They’ve even put together a search API testing tool for developers to see how Applebot crawls their webpages.
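As a rough idea of what this markup looks like to a crawler, the Python sketch below reads Open Graph tags and a Smart App Banner tag from a sample page using only the standard library. It is not how Applebot works internally, which Apple hasn’t published; the `MarkupReader` class, the sample page, and the app ID in it are made up for illustration, and schema.org parsing is left out.

```python
from html.parser import HTMLParser
from typing import Dict


class MarkupReader(HTMLParser):
    """Collects Open Graph <meta> tags and the Smart App Banner <meta> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.open_graph: Dict[str, str] = {}
        self.app_banner: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        content = attributes.get("content", "")
        if attributes.get("property", "").startswith("og:"):
            # e.g. og:title, og:image
            self.open_graph[attributes["property"]] = content
        elif attributes.get("name") == "apple-itunes-app":
            # Content is a comma-separated list such as
            # "app-id=..., app-argument=...". This naive split assumes
            # the values themselves contain no commas.
            for part in content.split(","):
                key, _, value = part.strip().partition("=")
                if key:
                    self.app_banner[key] = value


# Sample page with made-up values.
page = """
<html><head>
<meta property="og:title" content="Sample Listing">
<meta property="og:image" content="https://www.example.com/thumb.png">
<meta name="apple-itunes-app" content="app-id=123456789, app-argument=https://www.example.com/listings/42">
</head><body></body></html>
"""

reader = MarkupReader()
reader.feed(page)
```

After feeding the page, `reader.open_graph` holds the title and thumbnail a result could display, and `reader.app_banner` holds the app ID and the deep link passed as `app-argument`.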

In practice, it’s been nearly impossible for me to test the server-side index this summer. In my tests from June to early September, I was never able to consistently find results from popular online services (Airbnb, Foursquare, eBay, or Apple’s own online store) that were relevant to my query or capable of enriching the native experience of an app on my device. In the majority of my tests, web results I managed to see in Search were either too generic, not relevant anymore, or simply not performing as advertised.

For example, when typing “Restaurants Rome Foursquare” without having the Foursquare app installed on my device, I got nothing in iOS Search. My assumption was that popular Foursquare results would be available as Applebot-crawled options in Search, but that wasn’t the case. Same for Airbnb, except that I occasionally managed to see listings for apartments fetched from the web, but they weren’t relevant to me (one time I typed “Airbnb Rome Prati”, and I somehow ended up with an apartment in France. The result was nicely displayed in Search though, with a directions button for Maps).

I’ve started seeing some web results show up in iOS Search over the past couple of days under a ‘Suggested Website’ section. Starting Monday (September 14th), I began receiving results from the Apple online store, IMDb, and even MacStories. I searched for content such as “House of Cards”, “iPad Air 2”, and “MacStories iPad”, and web-based results appeared in Search with titles, descriptions, and thumbnails. In all cases, tapping a result either took me to Safari or to the website’s native app (such as the IMDb app for iOS 9 I had installed). Results in Search were relevant to my query, and they populated the list in a second when typing.

The issues I’ve had with web results in iOS 9 Search this summer and the late appearance of “suggested websites” earlier this week lead me to believe that server-side results are still rolling out.

The most notable sign is that Apple’s own demonstration of web results in search, the Apple online store, isn’t working as advertised in the company’s technical documentation. The web results I saw didn’t appear under a website’s name in Search, but they were categorized under a general ‘Suggested Website’ section. In Apple’s example, Beats headphones should appear under an ‘Apple Store’ source as seen from the web, but they don’t. My interpretation is that proper server-side results with rich previews are running behind schedule, and they’ll be available soon.

A change in how NSUserActivity was meant to enhance web results adds further credence to this theory. As I explored in my story from June, Apple announced the ability for developers to tag user activities in their apps as public to indicate public content that was engaged with by many users. According to Apple, they were going to build a crowdsourced database of public user activities, which could help Applebot better recognize popular webpages.

Here’s how Apple updated its documentation in August:

Activities marked as eligibleForPublicIndexing are kept on the private on-device index in iOS 9.0, however, they may be eligible for crowd-sourcing to Apple’s server-side index in a future release.

Developers are still able to tag user activities as public. What is Apple doing with those entries, exactly? Another document explains:

Identifying an activity as public confers an advantage when you also add web markup to the content on your related website. Specifically, when users engage with your app’s public activities in search results, it indicates to Apple that public information on your website is popular, which can help increase your ranking and potentially lead to expanded indexing of your website’s content.

If this sounds confusing, you’re not alone. To me, this points to one simple explanation: Apple has bigger plans for web results and the server-side index with a tighter integration between native apps (public activities) and webpages (Applebot), but something pushed them back to another release. The result today is an inconsistent mix of webpages populating Search, which, as far as web results alone are concerned, is far from offering the speed, precision, and dependability of Google or DuckDuckGo in a web browser.

There’s lots of potential for a search engine that uses web results linked to native apps without any middlemen. If it works as promised, iOS Search could become the easiest way to find any popular content from the web and open it in a native app, which in turn could have huge consequences on app discoverability and traffic to traditional search engines – more than website suggestions in Safari have already done.

However, this isn’t what iOS Search is today, and it’s not fair to judge the feature based on the merit of its future potential. The server-side index is clearly not ready yet.