Files

Strap in, because this is a big one, too: there are two new features worth noting in Files this year.

The first one is “band selection” with the pointer on iPad, which I’ve already covered in Other iPadOS Changes.

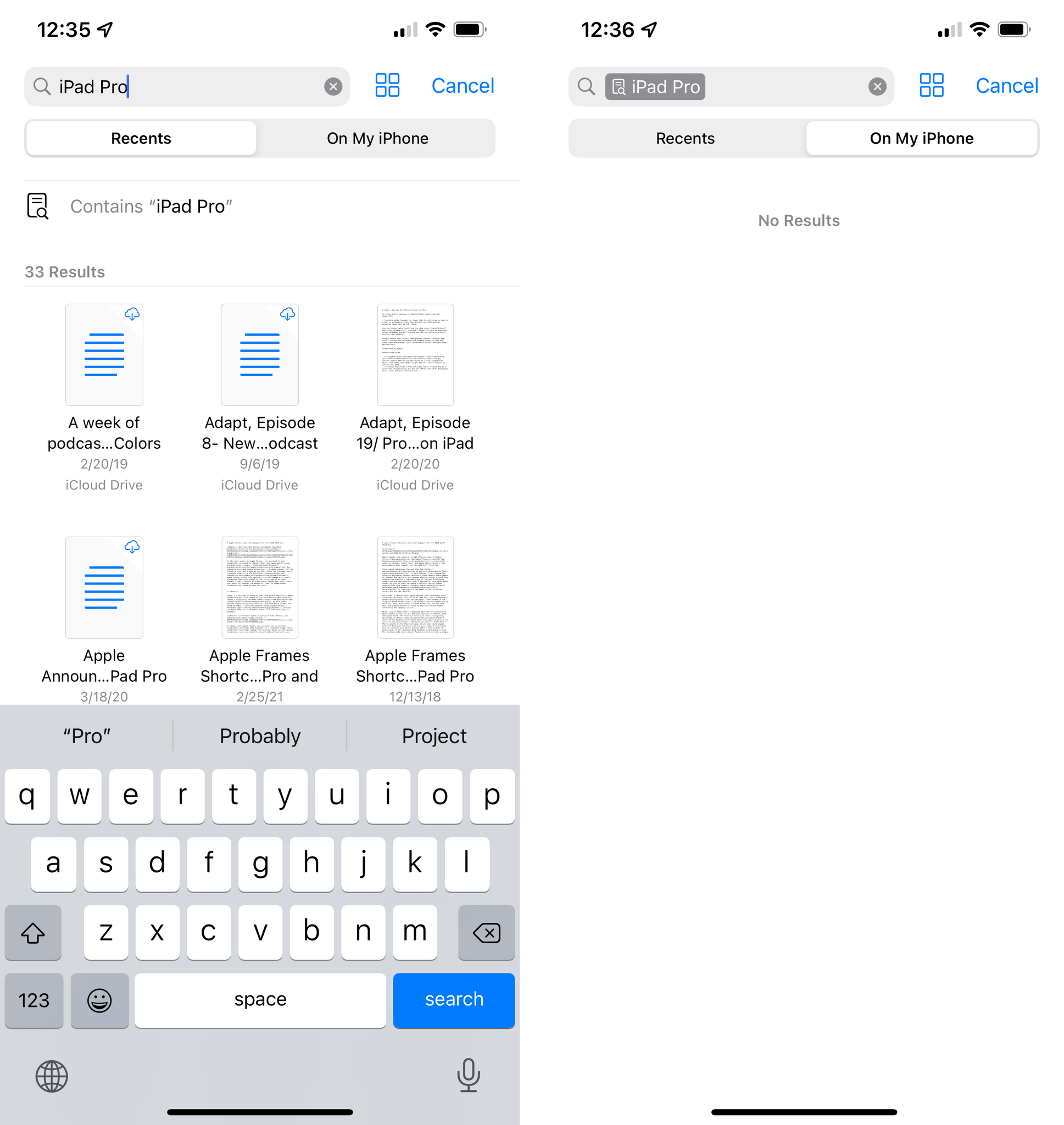

Then, in theory, you should be able to search for document contents by typing a keyword in the search field and selecting the ‘Contains…’ filter. I say “in theory” because, as of the latest version of iOS and iPadOS 15, I see this filter in Files search, but it’s not working for me.

Sadly, this is it for Files. Apple didn’t add support for Smart Folders, despite their presence in Notes and Reminders; they didn’t add support for shortcuts as Quick Actions, despite their availability in the Finder for macOS Monterey; they didn’t add support for versions, despite the fact they’ve existed for years on the Mac.

There’s always iOS 16, I suppose.

Podcasts

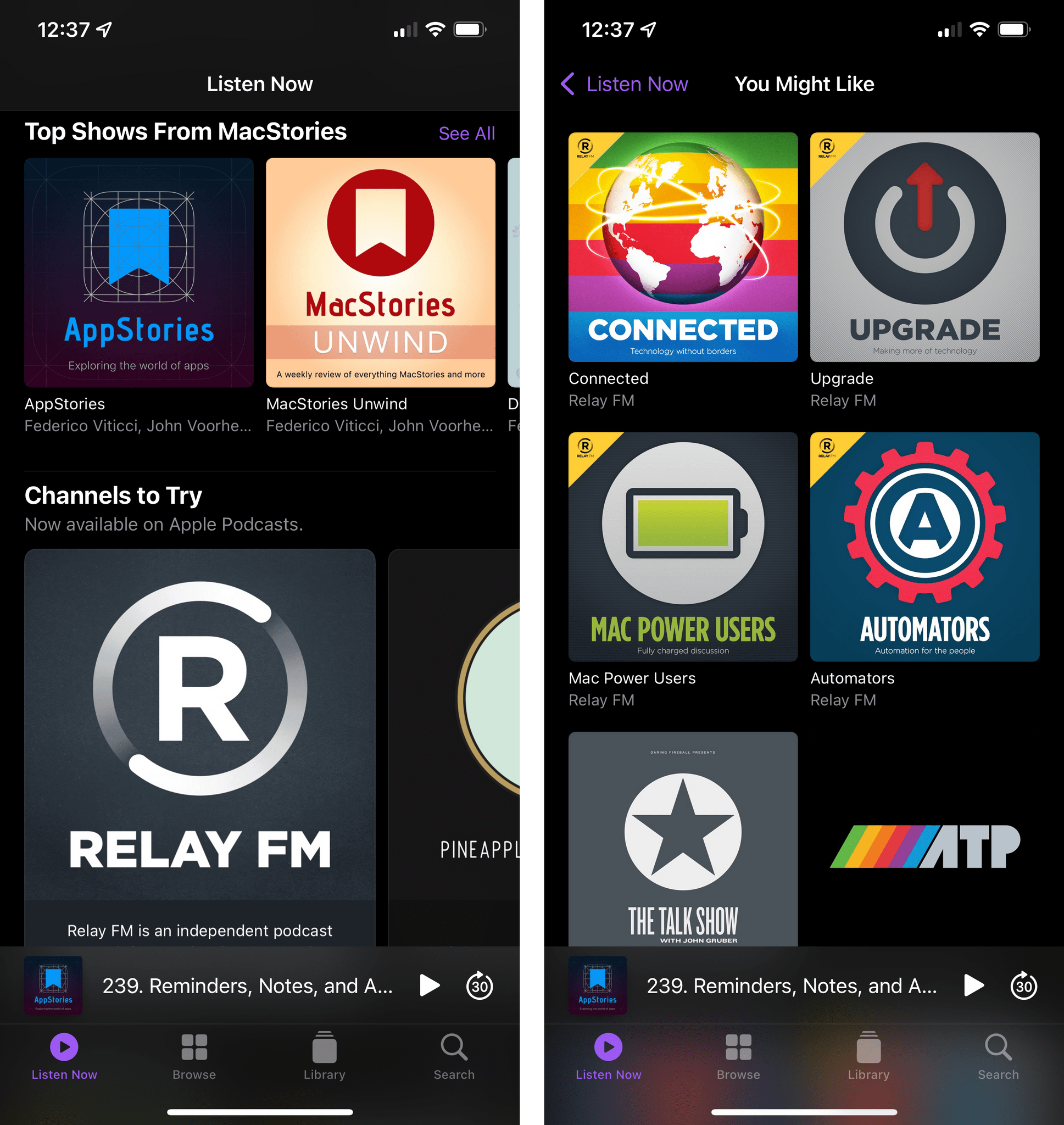

Very little has changed in Apple’s heavily criticized new version of the Podcasts app that launched earlier this year alongside Apple Podcasts Subscriptions. In fact, the “changes” in iOS 15 seemingly revolve around an updated recommendation engine that should provide you with more personalized suggestions for podcasts and channels.

Podcasts featured show recommendations before, so I’m not sure what, exactly, should make this updated version more accurate than the one in iOS 14. In my experience with the app, I got a handful of podcast recommendations based on my listening activity that were pretty accurate but not spot-on, plus a bunch of channel recommendations that just felt like a way to showcase random creators who have channels and subscriptions. If I were Apple, I’d focus on simplifying library and queue management for users as well as bringing audio effects from Voice Memos to Podcasts.

Passwords

Okay, I know what you’re thinking: we’re all surprised by this, but the Passwords feature of Settings on iOS and iPadOS isn’t technically an app. But that’s the thing: as I’ve been writing for the past few years, it really should become a standalone Passwords app given its feature set and importance to the Apple ecosystem. Passwords’ potential remains largely untapped as a simple section of the Settings app. So, for this reason, I’ve decided I’m going to start covering the Passwords feature in the Apps chapter of my review until my wish comes true.

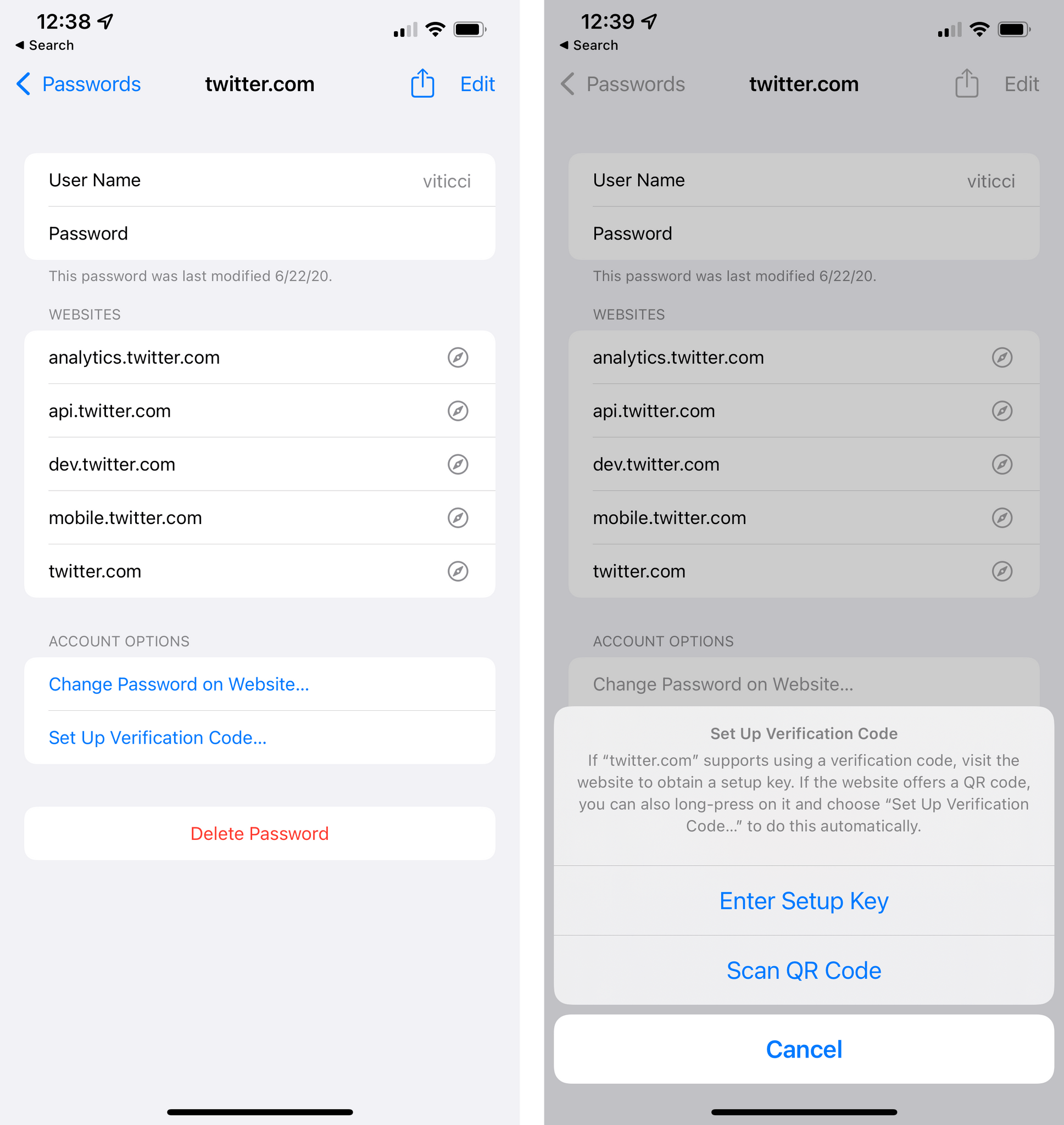

This year, Passwords has received support for what was, arguably, its last big missing feature compared to third-party password managers: support for two-factor verification codes. Setting up codes in Passwords couldn’t be easier: under a new ‘Account Options’ section for a login you’ve saved, you’ll find a new ‘Set Up Verification Code’ button. Tap it, and you’ll be presented with the ability to either manually enter a setup key or scan a QR code.

If you’ve ever set up two-factor authentication codes for your logins in 1Password, you know how this works. Apple also says that if a website offers a QR code, you should be able to long-press it in Safari and start the verification code setup from there, but that didn’t work for me when I added two-factor authentication to my Twitter account. Once a code is set up, you’ll find it in Passwords, and it’ll auto-fill in Safari and compatible apps via the QuickType bar, just like usernames and passwords.
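If you're curious what that setup key is actually used for, here is a minimal sketch in Python. It assumes the site uses the standard time-based one-time password scheme (RFC 6238 with HMAC-SHA1, six digits, a 30-second window), which is what most authenticator apps implement. The `totp` function and its parameter names are hypothetical and purely illustrative; this is not Apple's code, just the general technique.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(setup_key: str, at: Optional[float] = None,
         digits: int = 6, period: int = 30) -> str:
    """Derive a time-based one-time code (RFC 6238, HMAC-SHA1) from a Base32 setup key."""
    # Setup keys are usually shown as Base32, sometimes lowercase and split by spaces.
    cleaned = setup_key.replace(" ", "").upper()
    cleaned += "=" * (-len(cleaned) % 8)  # restore padding if the site stripped it
    secret = base64.b32decode(cleaned)

    # The moving factor is the number of `period`-second steps since the Unix epoch.
    now = time.time() if at is None else at
    counter = struct.pack(">Q", int(now // period))

    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks 4 bytes.
    digest = hmac.new(secret, counter, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF

    # Keep the last `digits` digits, left-padded with zeros.
    return str(value % 10 ** digits).zfill(digits)
```

Calling `totp("GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ")` returns the six-digit code for the current 30-second window. That key is the Base32 form of the test secret published in RFC 6238, not a real account secret. Because the code depends only on the shared key and the clock, any app holding the same setup key produces the same digits.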

I’m glad Apple added native support for verification codes to Passwords since more people will be able to use it as their only password manager now. I don’t plan on moving away from 1Password anytime soon because I use it with my family and team, but I continue to be impressed by the system integration of Passwords, and I hope it finally graduates to a full app in the near future. The time feels right for Passwords to leave its Settings nest and try something bolder.

Find My

There are two features worth noting in the Find My app for iOS 15.

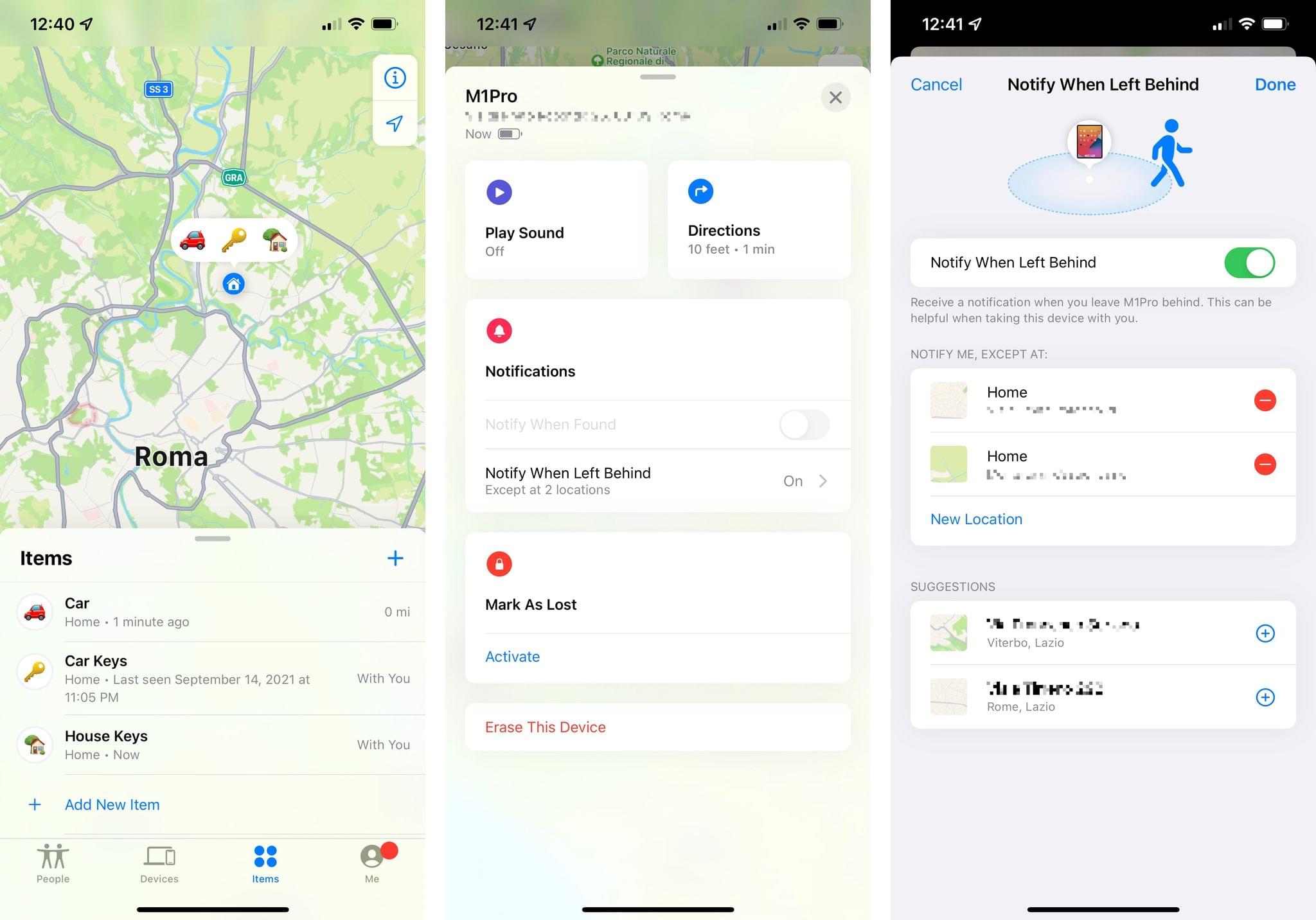

First, Apple added separation alerts for when you’re leaving a specific device or item behind. Literally labeled ‘Notify When Left Behind’, this option is available for devices running the latest OS update or firmware, and you can turn it on to be notified if you’re leaving a particular location without taking a device or Find My-compatible item with you. For instance, you may want to rely on these alerts if you’re leaving your iPhone or keys behind; as soon as you exit a geofence around your current location, you’ll get a notification that you left an item behind.

In a nice touch, you can exclude locations from being considered by Find My. Maybe you don’t want to be notified if you leave your laptop at home or at the office; in that case, you can manually enter an address or choose from a list of suggested locations (based on your history) and exclude it.

The second addition to Find My this year is the ability to leave an iPhone in a “findable” state even after it’s powered off. You can read more about this, and optionally disable it, in the shutdown screen before powering off an iPhone.

My understanding of this feature is that Apple primarily added it for all those times when your iPhone’s battery is about to give out. Before the iPhone shuts down, iOS 15 will leave just a tiny bit of battery available to keep the device in an ultra low-power state that will make it findable on the Find My network for days, not hours, as some people initially suspected. This should also come in handy if an iPhone gets stolen and quickly powered off to take it offline; with iOS 15, unless the option is manually disengaged, the iPhone will continue to show up on the Find My network by default.

Camera

In addition to Focus and Notifications, the other new iOS 15 feature that will likely push millions of people to upgrade is found in the Camera app, and it’s called Live Text.

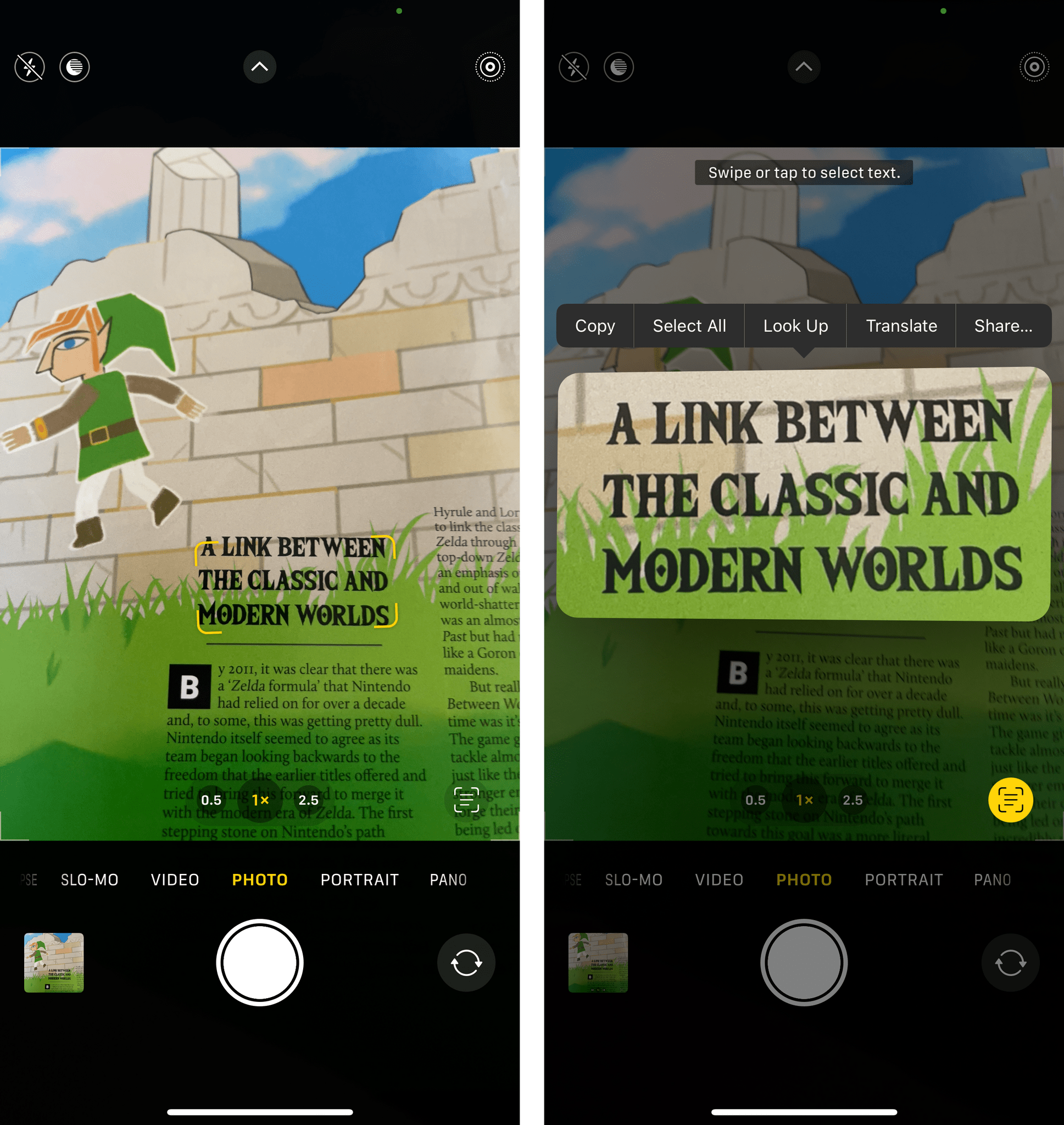

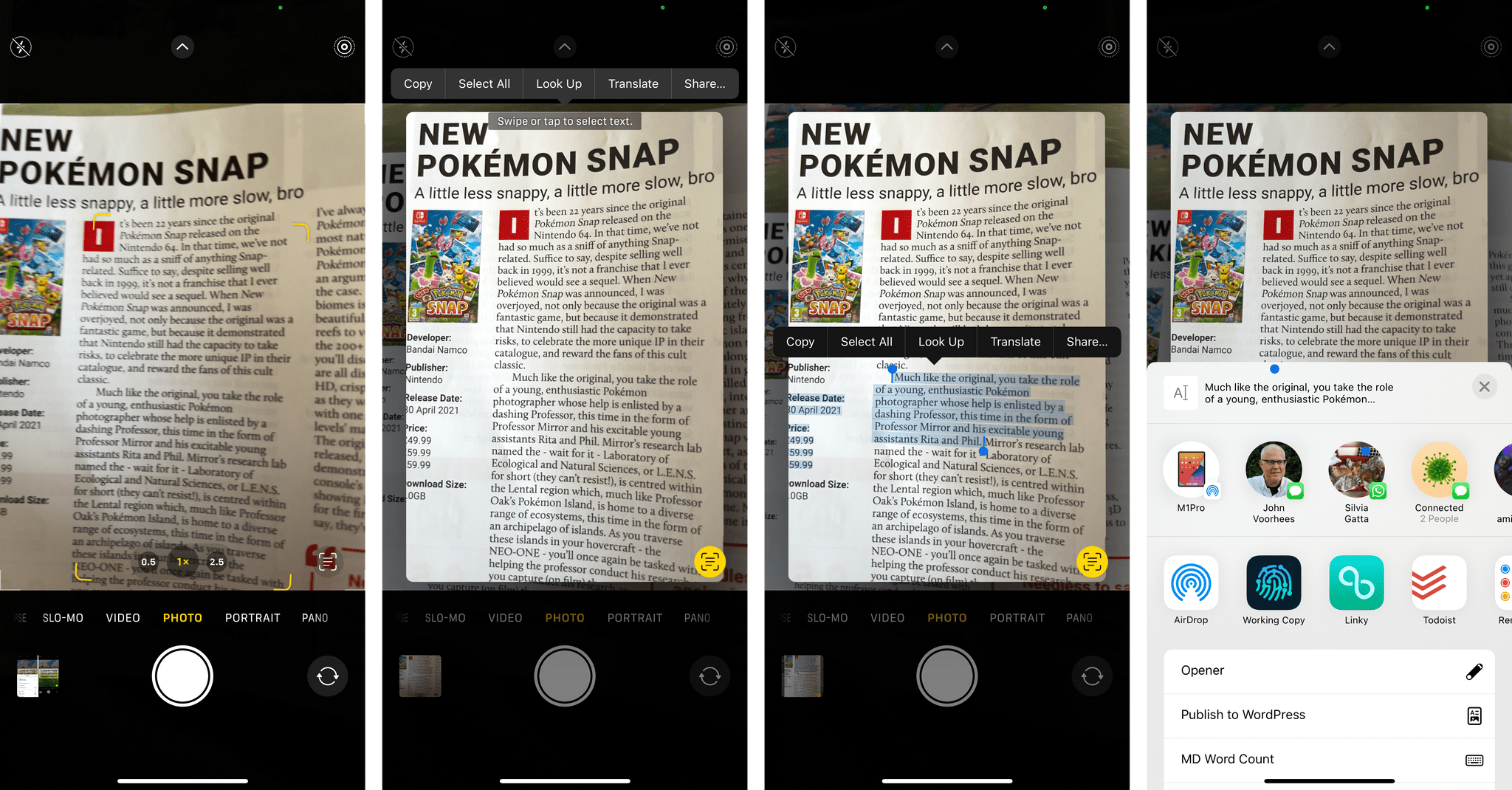

With Live Text, your iPhone’s camera turns into a real-time scanner that can recognize text anywhere, in multiple languages, as you’re holding your iPhone’s camera up to a page, sign, book, or whatever may contain some text. Live Text works offline with on-device processing, and it’s been integrated system-wide in the Photos app, Quick Look previews, Safari, and more. Live Text is, in my opinion, the most technically remarkable feature of iOS 15; once you see it in action, you’ll want to have it on your phone too.

Here’s how Live Text works: every time you’re taking a picture of something that has text in it (we’ve all done this with receipts, documents, business cards, etc.), and if you have an iPhone XS or later, you’ll see the viewfinder focus on recognized text – in real-time – and a document button appear in the lower-right corner of the Camera UI. Press the button, and you’ll freeze the frame for the recognized text.

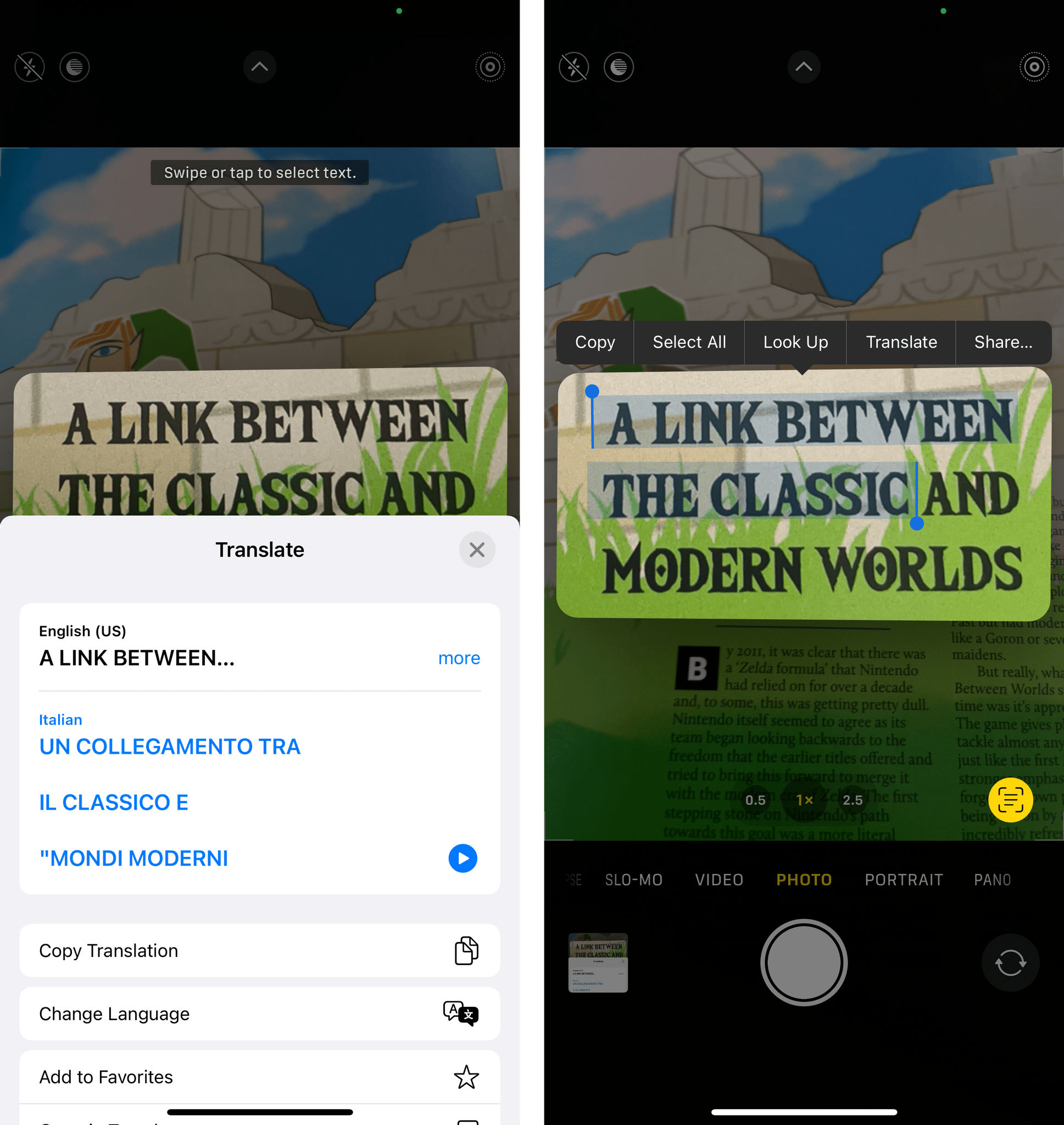

This would be cool enough as a way to freeze-frame documents and inspect them more carefully, but Apple went far beyond that with Live Text. The text recognized by the Camera is actual text you can select, copy, share, and even translate inline with the system-wide translation offered by the Translate app. Imagine this common scenario: you take a picture of a sign or menu in a foreign country, and you need to understand what it means. Previously, you would have had to go find a third-party app for this on the App Store, or maybe run a manual Google Translate query.

With iOS 15 and Live Text, here’s what you do: hold up the menu in front of you, tap the document button, then tap Translate in the Live Text preview. Done.

Because Live Text returns plain, selectable text in an instant, consider all the potential variations of the workflow I described above. You can use Live Text as built-in OCR in the Camera app to take a picture of a full document, select all its text, and copy it to the clipboard. You no longer need a scanner app for that. You could scan the page of a book you’re reading, highlight a specific word, and find out its meaning with the Look Up button. You don’t need a third-party dictionary app for that anymore, either. And the best part is: when you freeze-frame with Live Text, you don’t have to save the picture to your photo library: you can just select text, do what you need to do with it, and dismiss Live Text without saving anything.

You can use Live Text to select text, copy it, translate it, look it up, and even share it with extensions.

The integration of Live Text with the live Camera preview is outstanding, but it doesn’t stop there. Live Text has been integrated throughout iOS as a way to find, select, and share text in multiple places and apps.

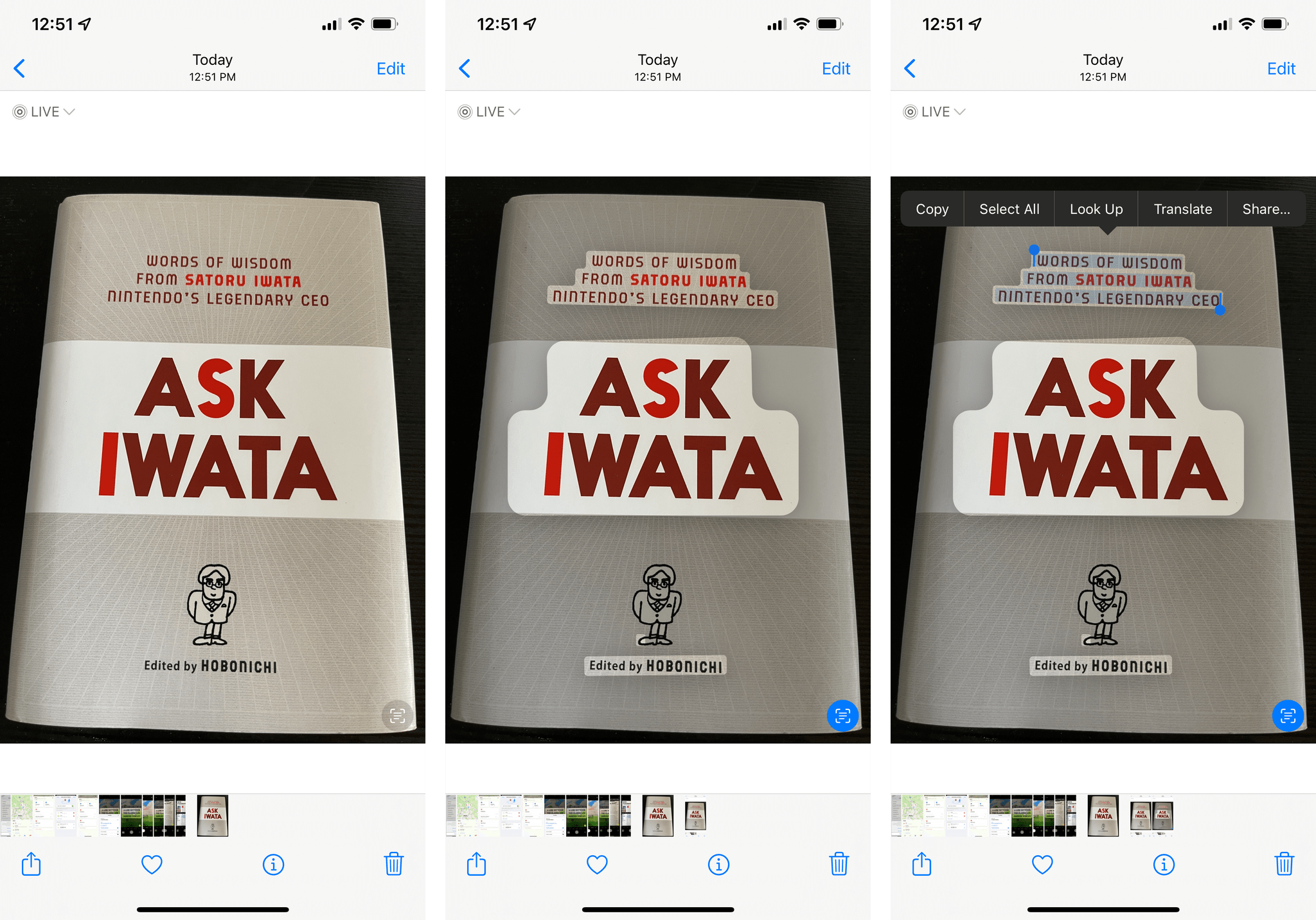

First, there’s the Photos app. In any photo that contains text, you can just hold down your finger (or pointer, since this part also works on iPad) to select text and display the copy and paste menu with additional options. This used to require dedicated OCR apps; now, you can do it in Photos by dragging your finger across an image. If you want to see all the text recognized in a photo, you can tap the Live Text button in the lower-right corner, which will highlight all text found in the image.

In Photos, you can highlight text found in images and perform the same actions supported in the Camera app.

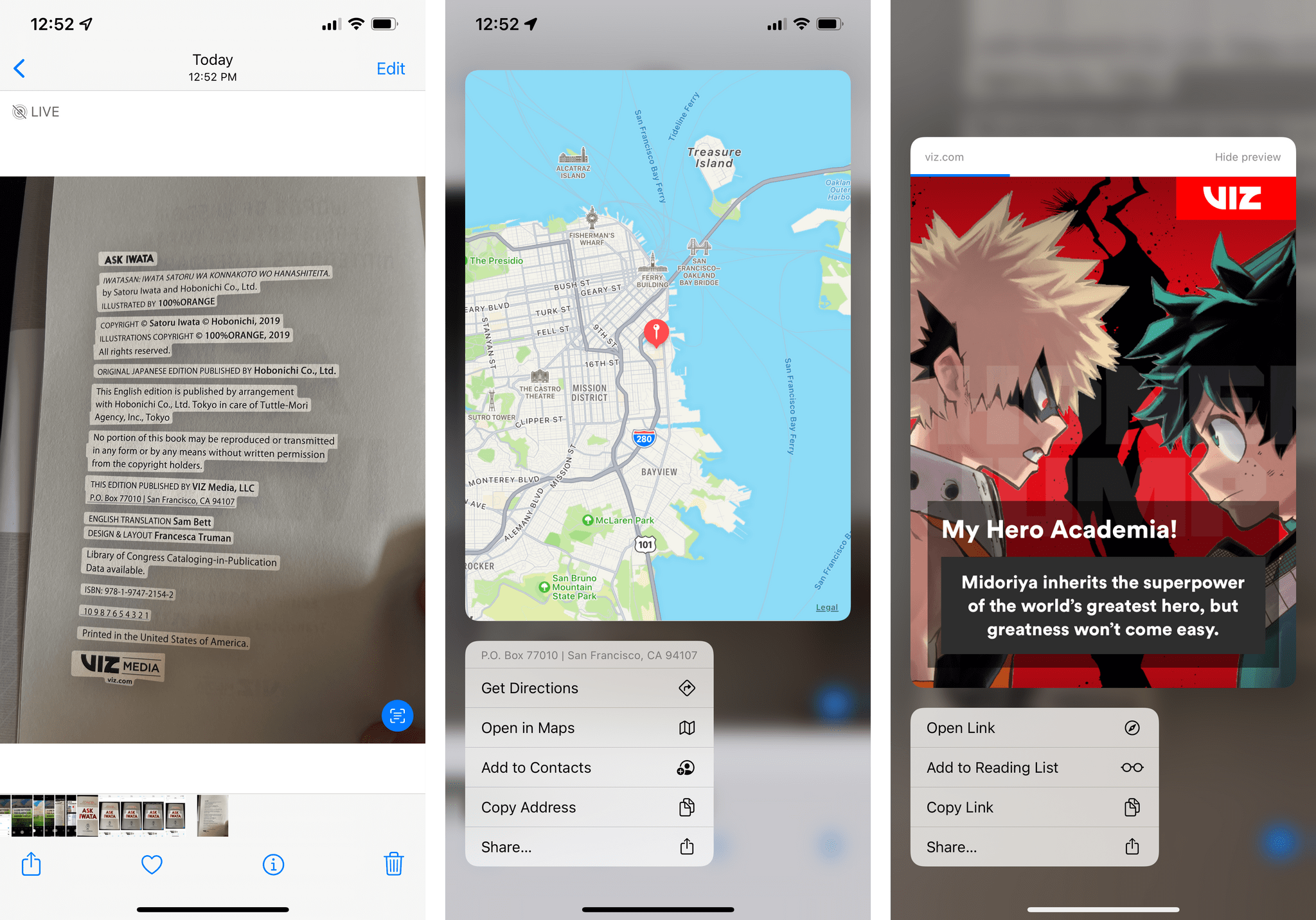

What’s even more impressive here is that data detectors, a long-standing iOS feature that was most recently adopted by Notes handwriting, are fully supported by Live Text too. This means that if a phone number, URL, street address, flight number, date, or other “special” bits of text are found by Live Text, they will also be underlined so you can tap on them and take action immediately. You see where this is going? If you’ve taken a picture of a business card, you can tap the phone number and call it from Photos thanks to Live Text. Taken a screenshot of a date? Tap it in Photos, and you can create a calendar event for it. You can even long-press URLs you have photographed to preview them with a Safari view inside Photos.

With data detectors, underlined text found by Live Text becomes a tappable item that integrates with other iOS features. In this case, I was able to preview an address and a URL inside Photos.

You don’t have to save an image to the Photos app to access these data detectors. By far, the easiest way to use Live Text in iOS and iPadOS 15 is to take a screenshot of something, open the Markup screenshot UI, then tap Live Text. All data detectors will work here, and when you’re done, you can just delete the screenshot.

Live Text integration in the screenshot markup UI.

This kind of seamless integration between hardware and software – the on-device processing, data detectors, text selection, translations – is where Apple’s ecosystem strategy shines.

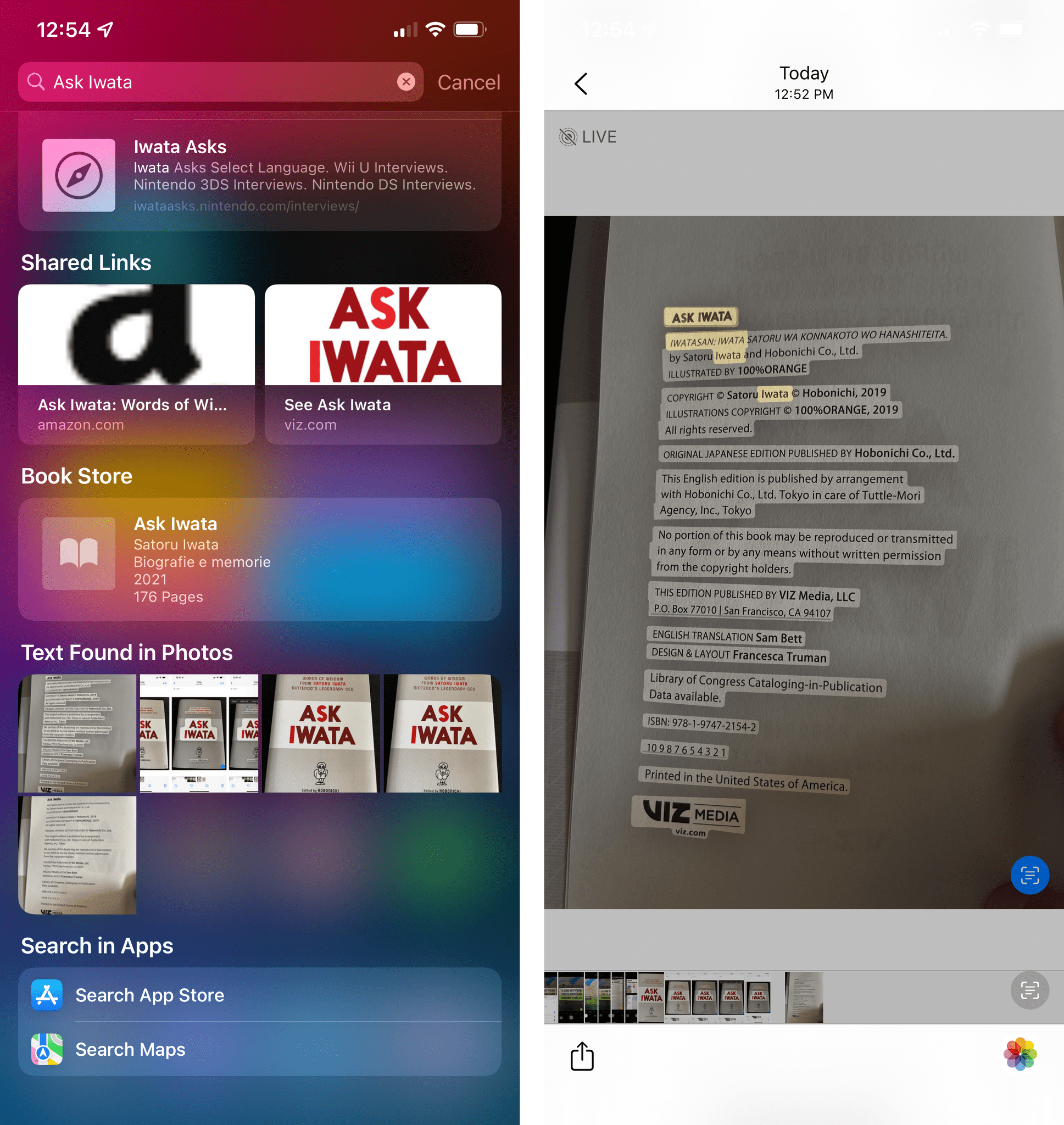

But I’m not done. Text found by Live Text in Photos can be searched from Spotlight. If you remember having taken a picture of something a while back, you can just pull down on your Home Screen, search, and find the matching image in Photos in a couple of seconds. It’s pretty amazing.

What if you need to transcribe the text at which you’re looking, but don’t want to do so manually using the iPhone’s keyboard? Apple thought of this aspect too for iOS 15. Tap your finger once in any editable text field in any app, and you’ll see a new Live Text button in the copy and paste menu (it’s also labeled ‘Scan Text’). Press it, and the keyboard will turn into a camera view that scans for text in real-time and updates it inside the text field as you move the camera around. You can tap an ‘Insert’ button to confirm scanned text, or you can freeze-frame it and select text manually. This is a fantastic time-saving tool that is best appreciated in action:

Inserting text into a text field using the camera and Live Text. Magic.

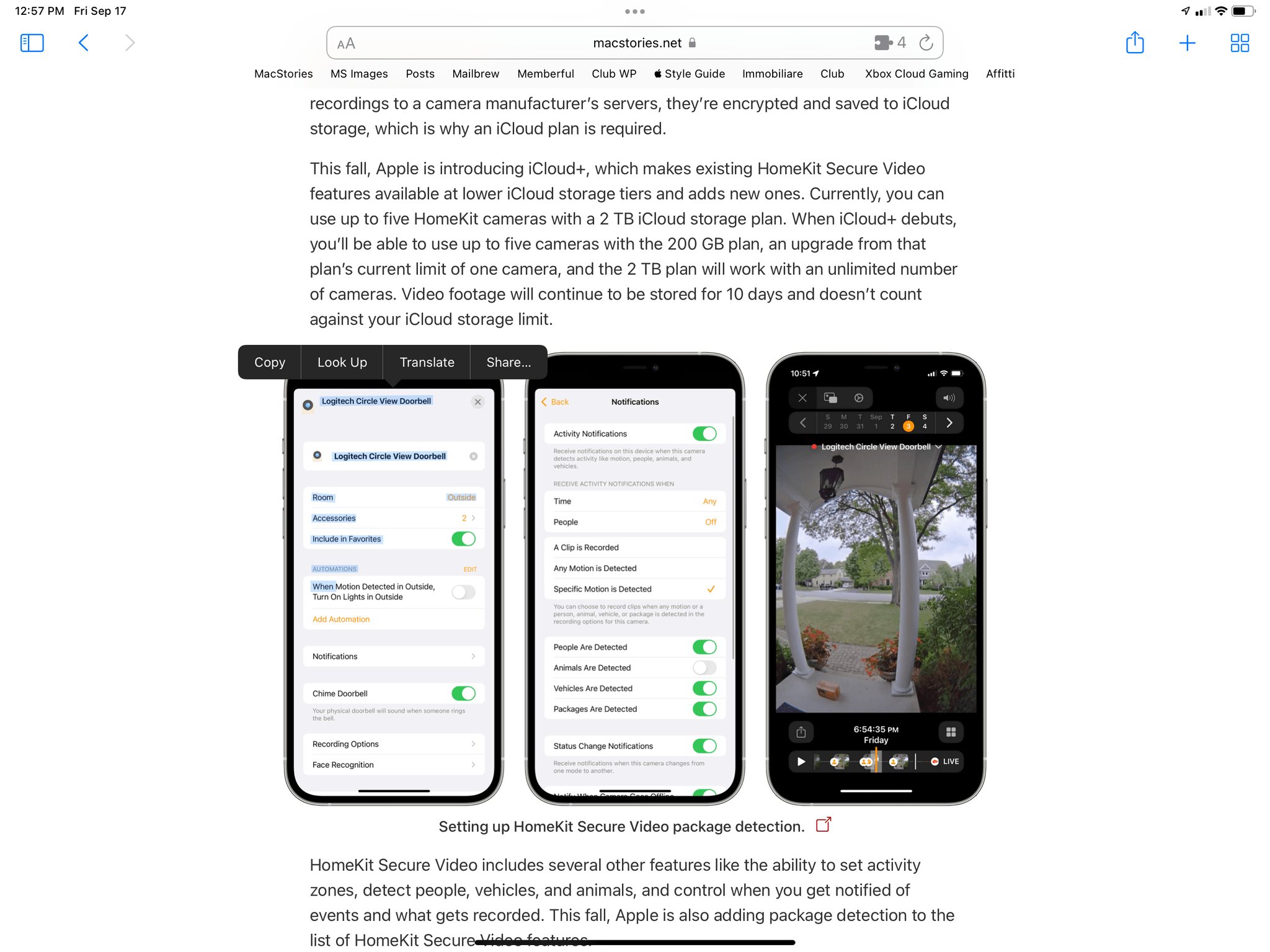

Live Text has even been integrated with Quick Look previews in apps and images embedded in webpages open in Safari. In the browser, you can just swipe your finger over any image that contains text, and you’ll be able to select it; data detectors work for Safari images too. Alternatively, you can long-press an image and hit ‘Show Text’ to open a standalone Quick Look preview for the image featuring a standard Live Text button.

If you don’t want to use Live Text, you can disable it in Settings ⇾ General ⇾ Language and Region.

In case it’s not clear by now, I think Live Text is an incredible feature that Apple engineers should be proud of. Live Text isn’t just “cool” (although it is!) – it’s a genuinely useful, empowering feature that will help a lot of people who, for a variety of reasons, need an easier way to scan, type, or paste text.

I love Live Text. If you need help convincing a relative to update to iOS 15, run a demo of Live Text for them.

Other Camera Changes

There are some other changes to the Camera app in iOS 15 I want to point out:

Zoom during QuickTake video. When you’re shooting a QuickTake video by holding down the shutter button, you can now swipe up and down to zoom. You’ve probably been doing this for years now with Instagram and Snapchat. Now you can do it with the Camera app too. Which means this kind of video is now possible:

Ginger would probably be upset if she knew about this video.

New UI for scanning QR codes. There’s a brand new, more powerful interface for scanning QR codes in the Camera app that lets you preview the content of a code without opening it in the associated app. Apple did this by adding a badge that, for instance, shows you the domain of a URL-based code or the name of a contact embedded in the code. There’s also a new QR button in the lower-right corner that appears when codes are recognized by the Camera, which you can press to access a context menu with different options. These new features make the Camera the best QR code scanner you can use on iOS – even better than Apple’s own, built-in Code Scanner app.

Scanning QR codes in iOS 15.

Preserve settings for exposure, night mode, and portrait zoom. If you want to keep your exposure adjustment, night mode, or portrait zoom permanently set to a specific mode, you can now do so from the Preserve Settings screen of Settings ⇾ Camera. Notably, this lets you keep night mode persistently disabled if you never want to use it.

Photos

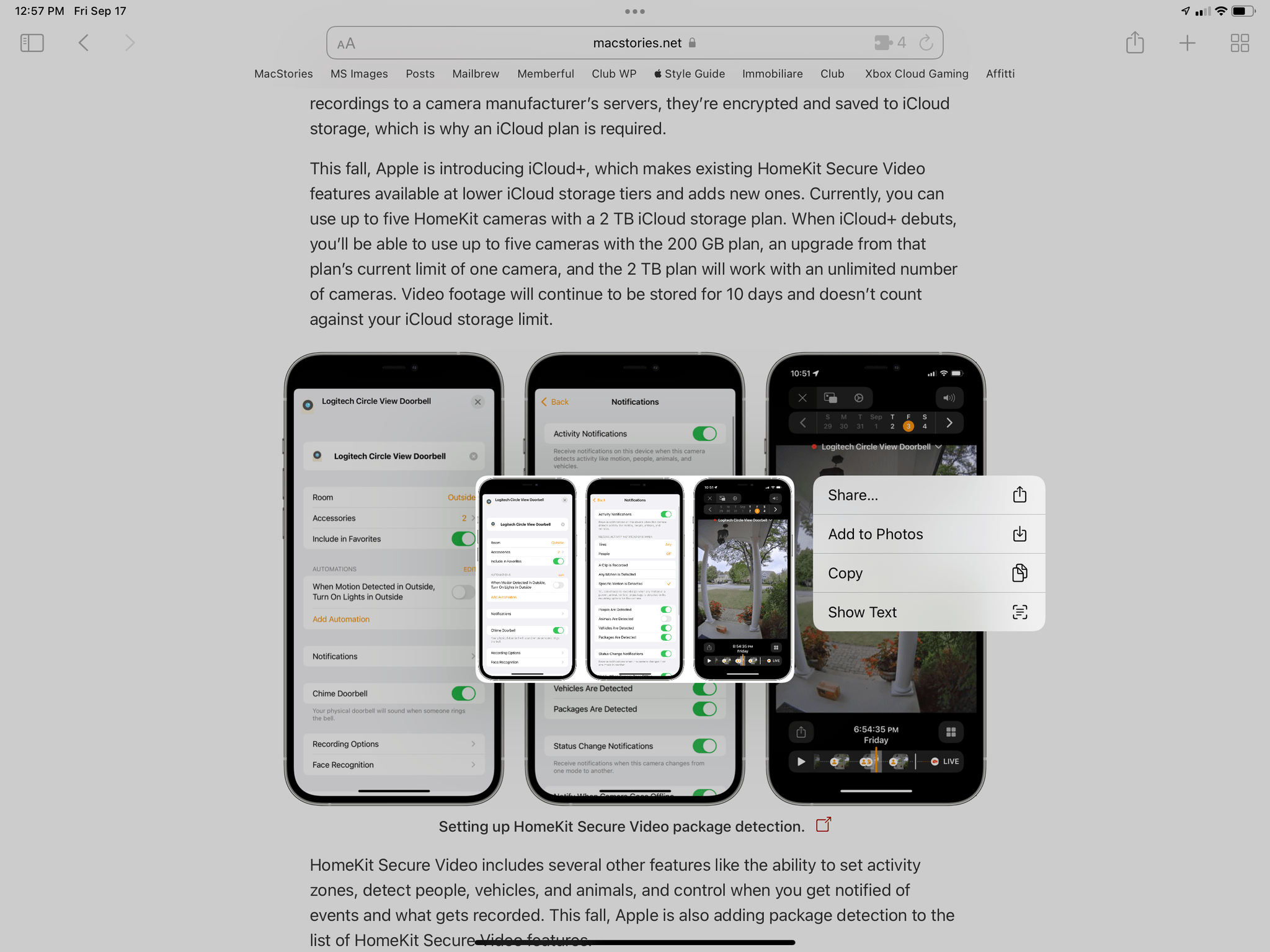

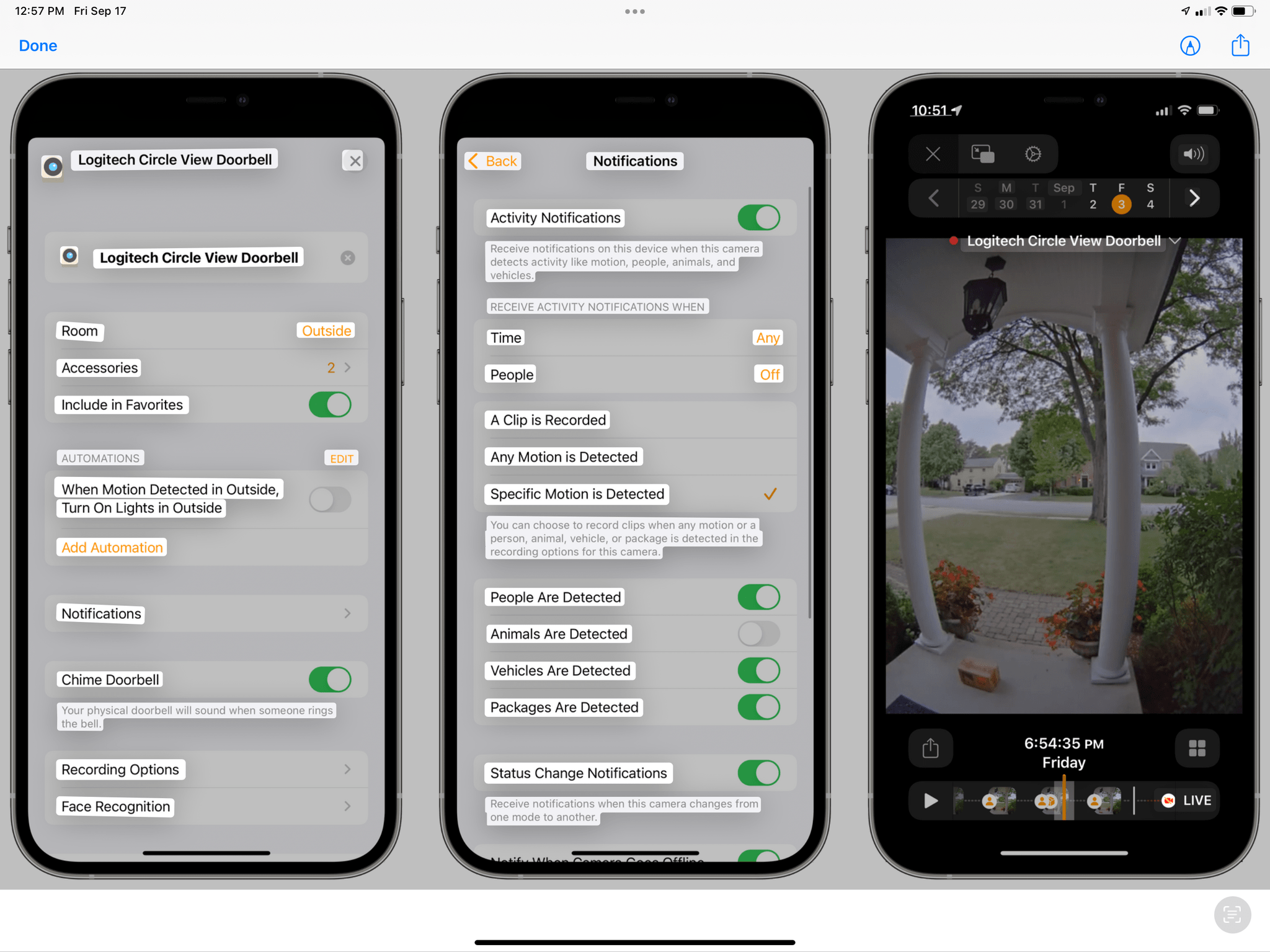

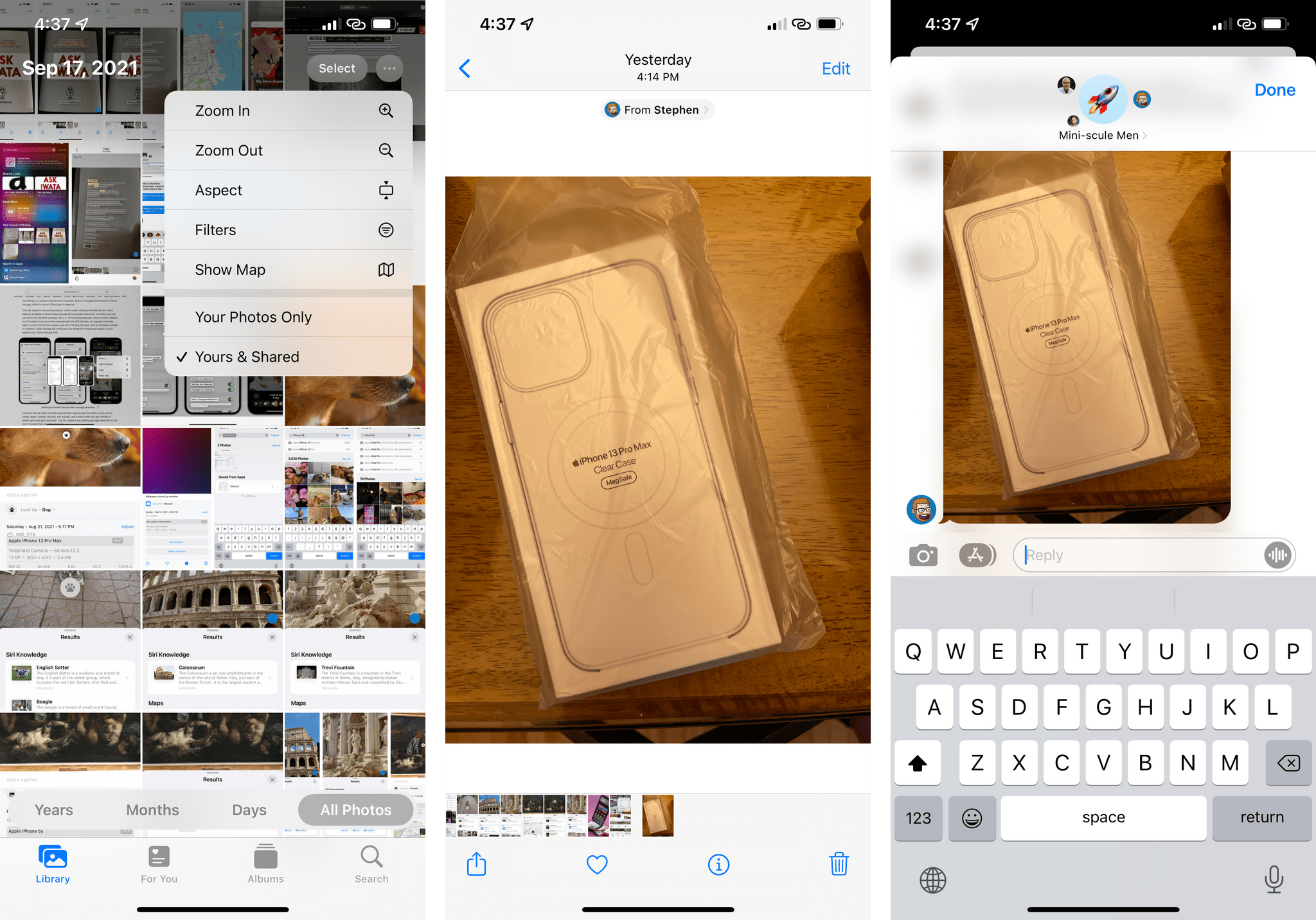

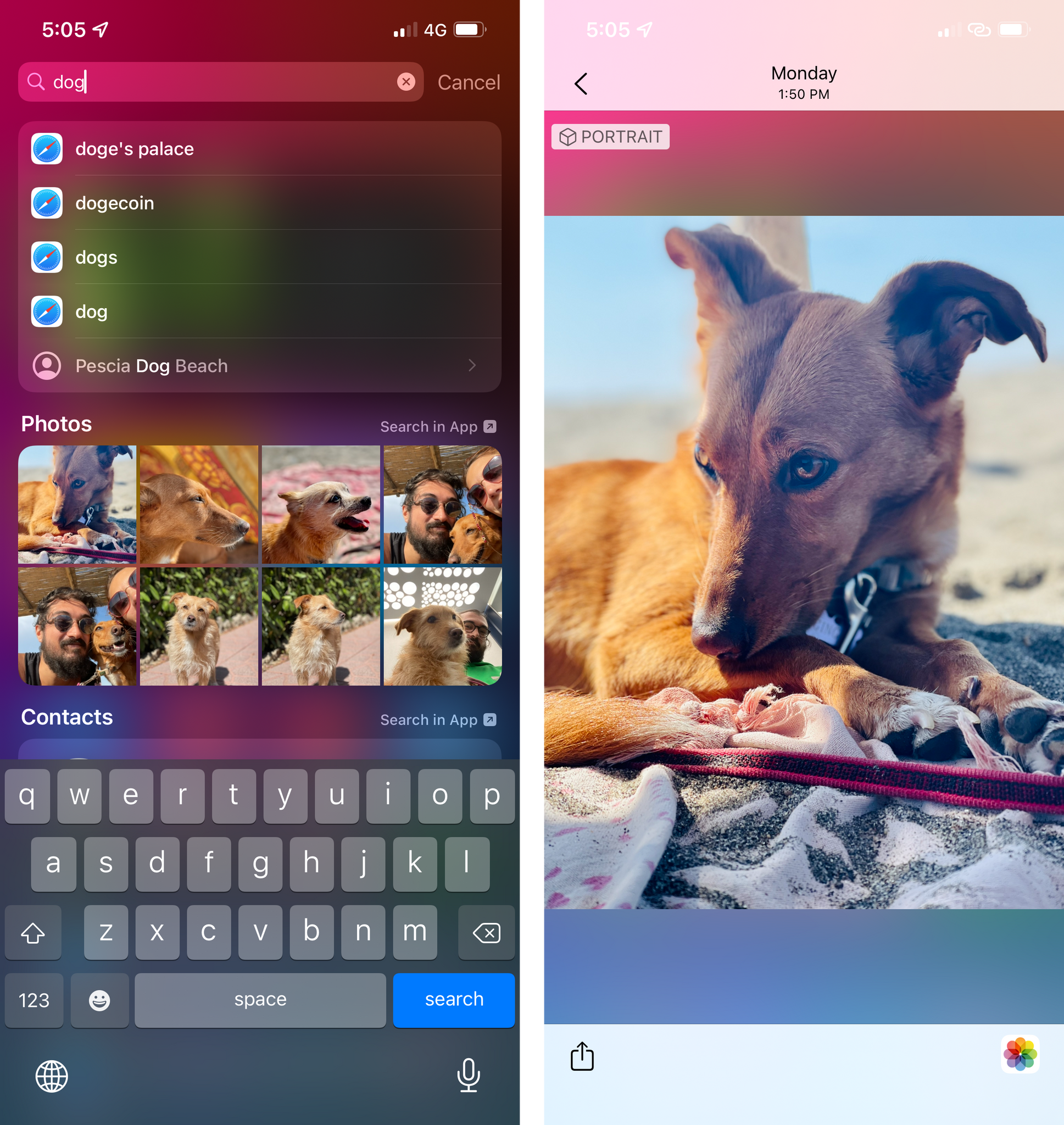

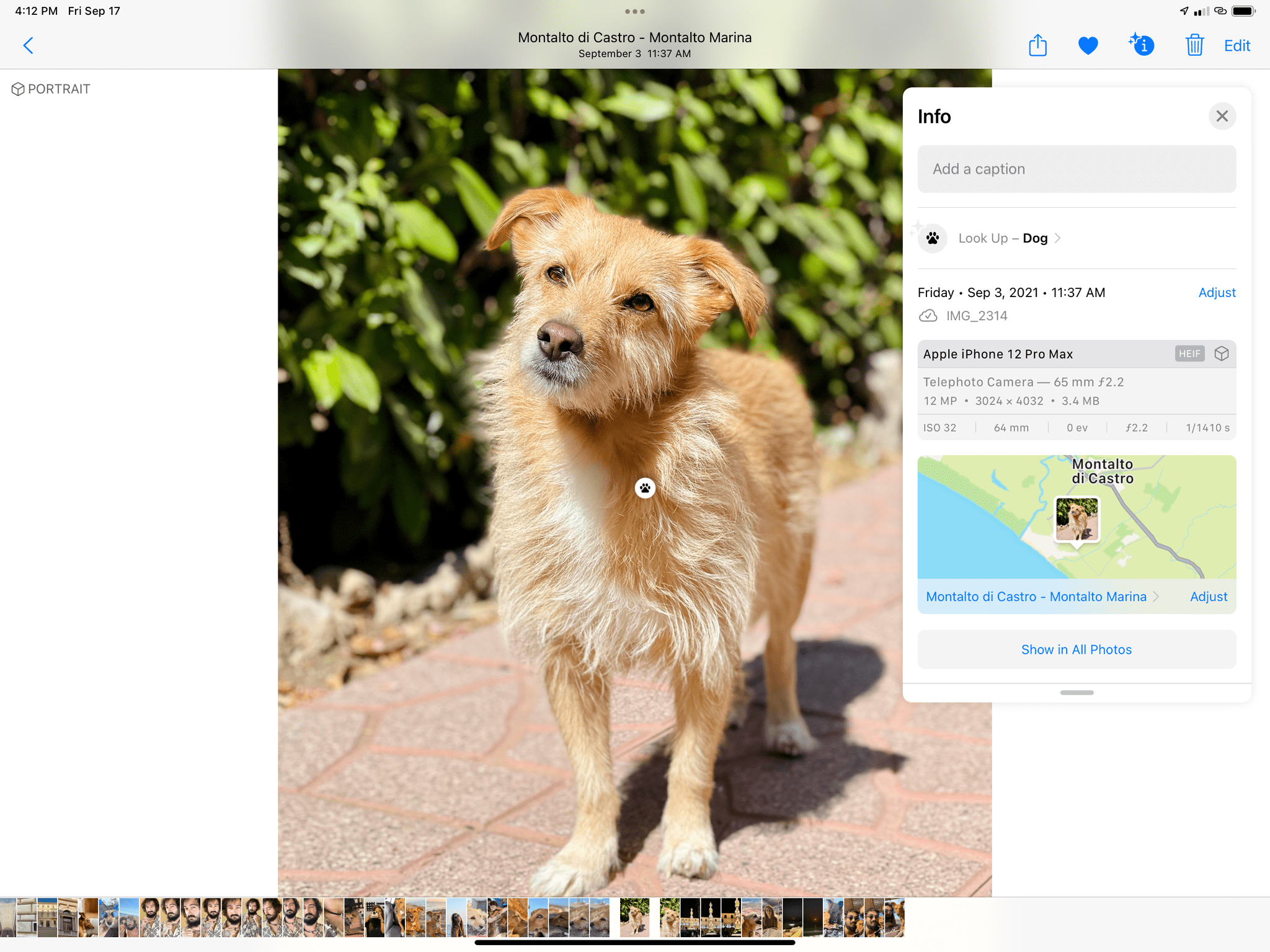

Besides Live Text, the most relevant changes to Photos this year involve integration with Look Up and, at long last, the ability to view rich metadata for images inside the app.

Tap the new ‘i’ button or swipe up on a photo, and you’ll see the new metadata panel. This feature has long been available on macOS, and it’s now on iPhone and iPad too.[14] You can view the filename of an image, its timestamp, file format, location, and even camera model and lens used to capture it. For the first time, Apple is exposing EXIF metadata natively in the Photos app, and I’ve enjoyed being able to easily double-check whether a great photo in my library was taken with the wide or telephoto camera, for example.

On iPadOS 15, the panel is floating and resizable (see the pulling indicator). Could this kind of floating accessory become an API for developers next year?

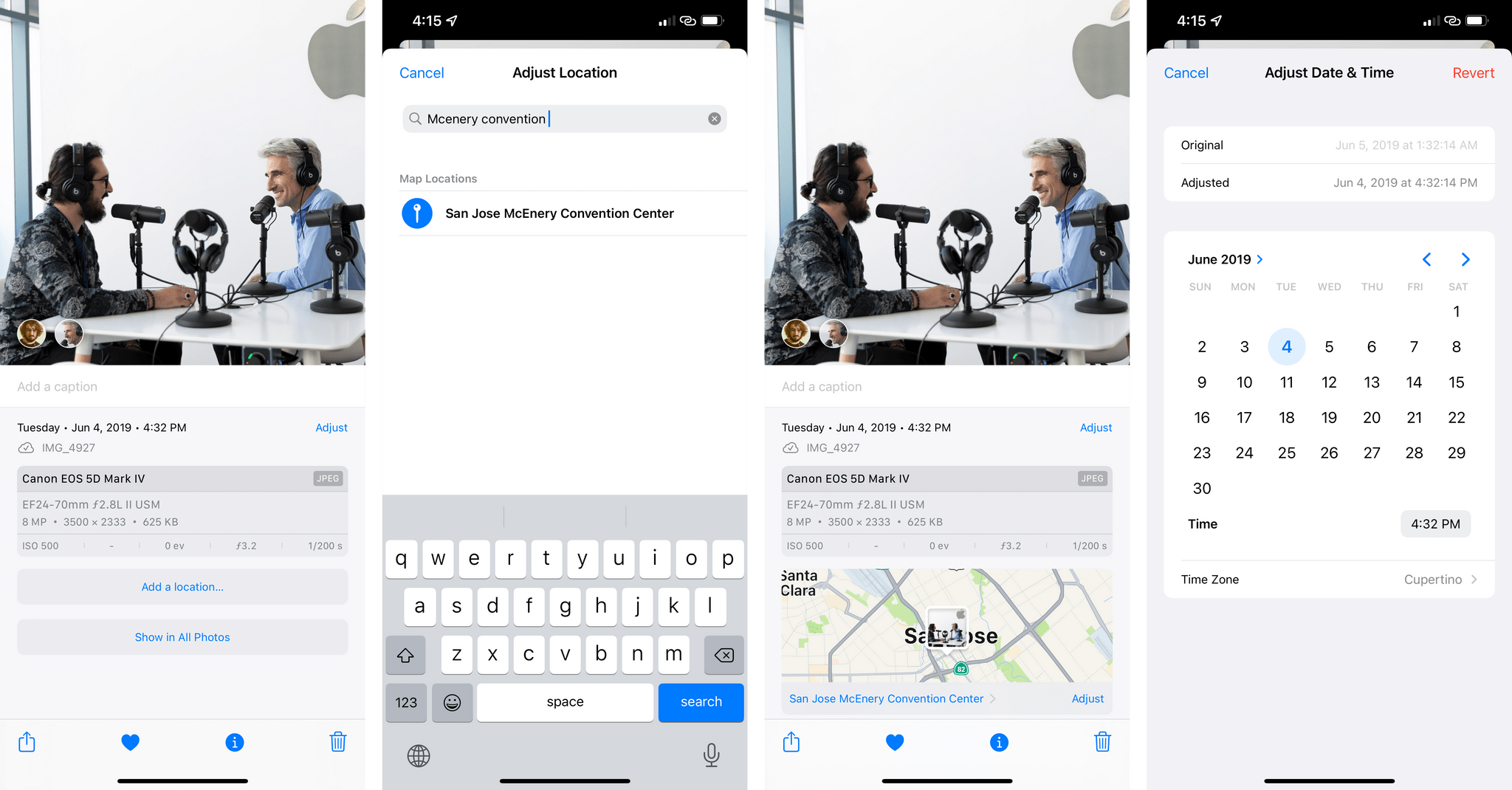

The Info panel also lets you manually adjust dates and locations: this is particularly useful for old photos that may not have metadata attached or pictures that don’t have GPS data embedded inside them. Now you can add, remove, and modify dates and locations with a native ‘Adjust’ button in Photos.

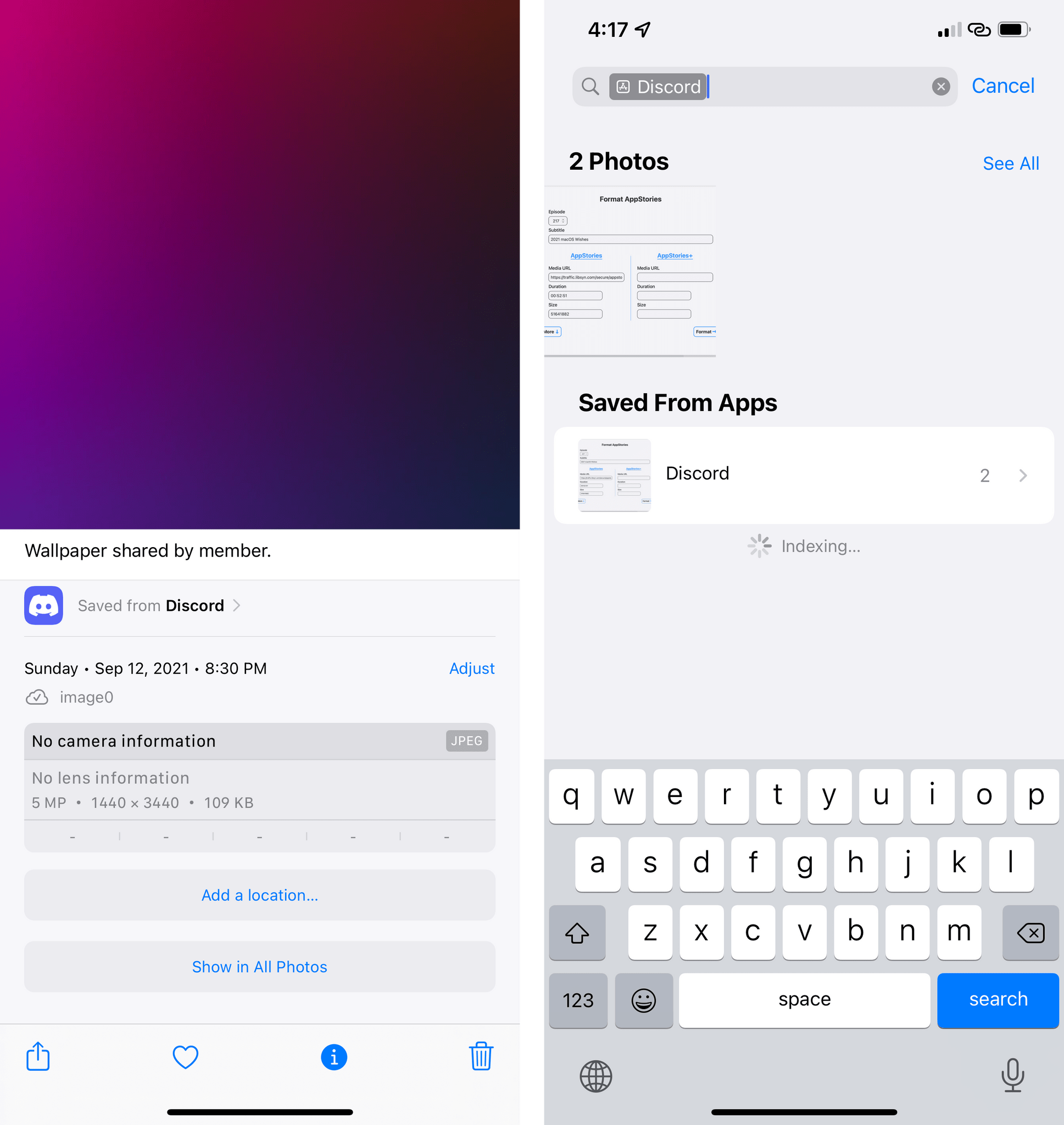

Notably, the metadata panel even tells you if an image was saved from a particular app on your device. This is part of a broader initiative in Photos for iOS 15, which can now identify the “source app” used to save a photo in your library and lets you search by it. Type ‘Safari’ in Photos’ search field, for instance, and you’ll find all images saved from the web; type ‘Discord’, and you’ll find all the silly memes your friends sent you that you also saved.

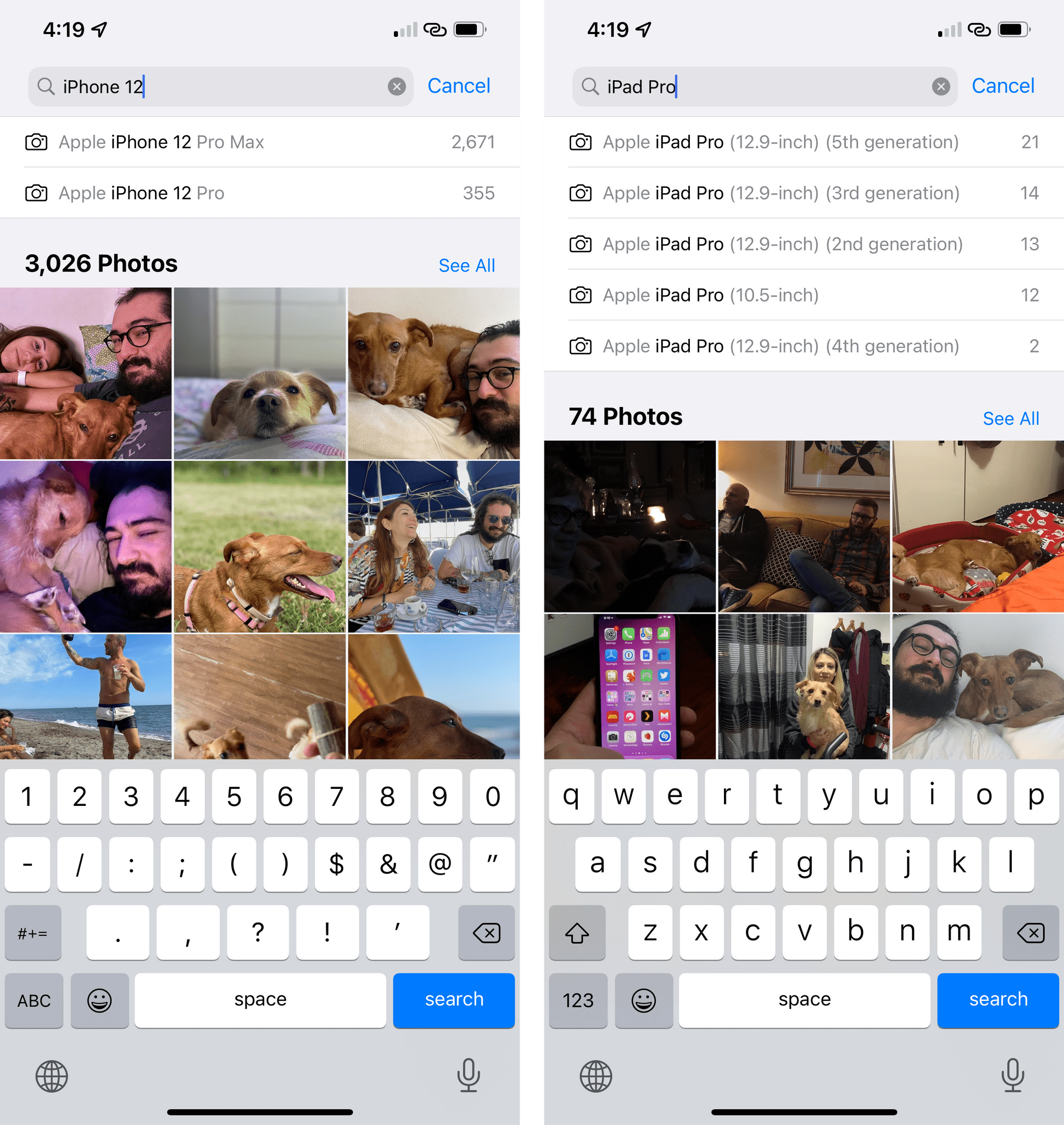

The same feature also works for camera names: type ‘iPhone’ in search, and Photos will let you browse images captured from specific iPhone models. I like this option as a way to quickly compare photos across multiple generations of iPhones.

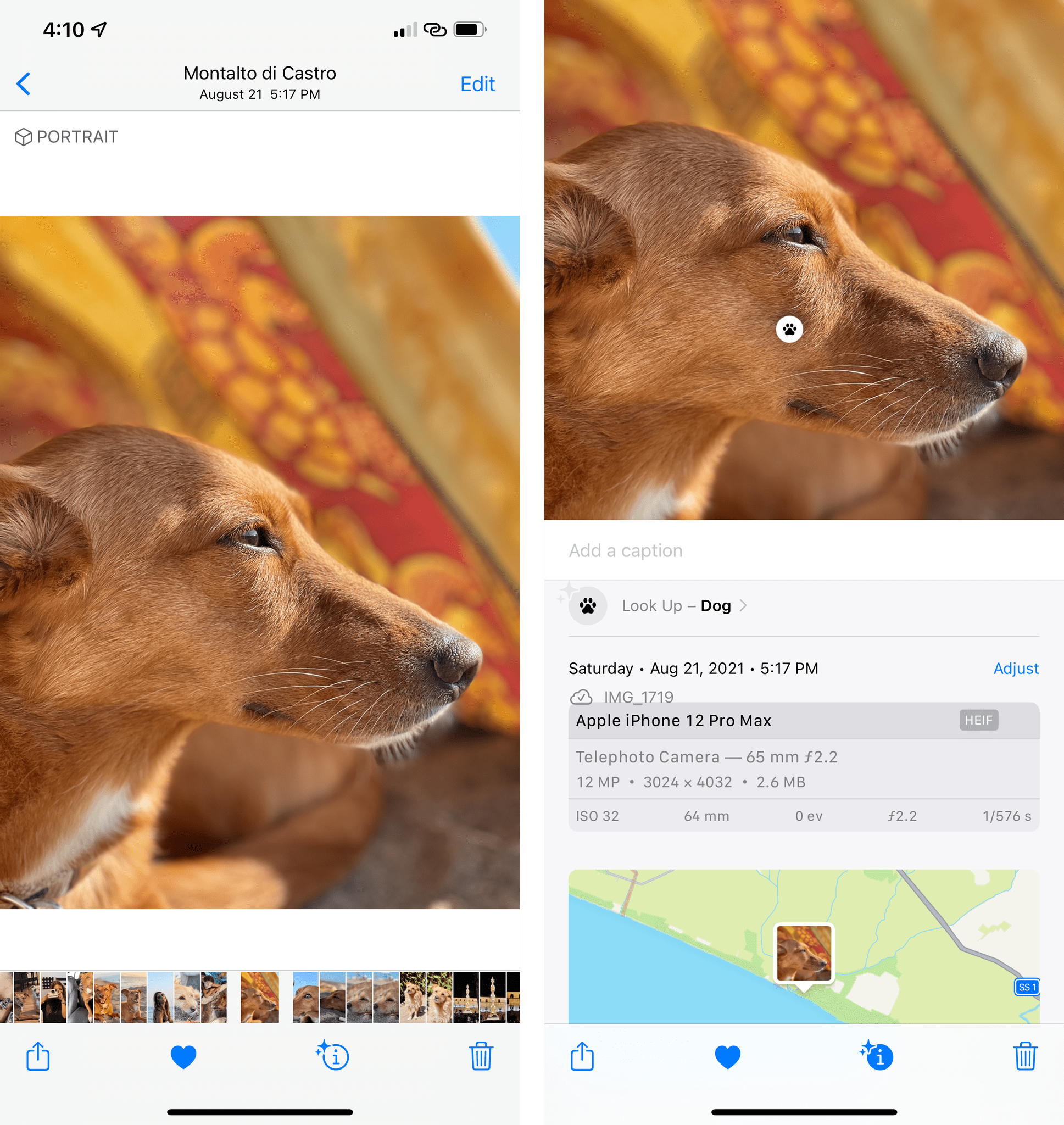

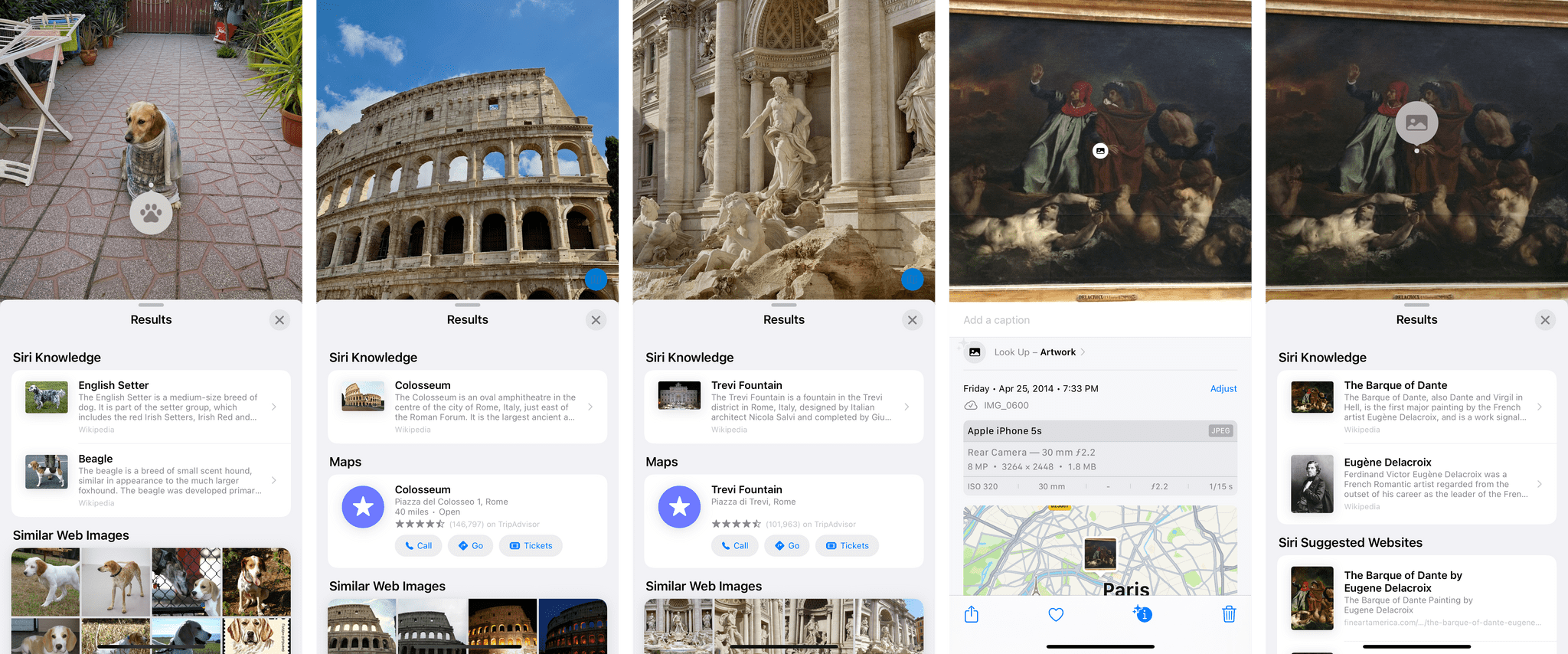

The other noteworthy addition is the integration between Photos and Look Up. According to Apple, the system’s Look Up engine can now find information such as popular art, books, landmarks around the world, flowers, and breeds of pets in your photos. Results are, as you’d expect, fairly hit or miss, but when Look Up works, it’s quite impressive. I was able to find the names of paintings I photographed at the Louvre museum years ago, confirm that my friends’ dog Wendy is, indeed, a Golden Retriever, and read Wikipedia’s entry for the Colosseum, which was featured in some of my photos.

When Look Up – which is powered by Siri knowledge – doesn’t know what’s in an image, results tend to be pretty generic and not that useful. My dog Ginger, for example, isn’t of any particular breed, but Look Up insists on showing me entries for Vizsla and Dachshund, neither of which looks anything like Ginger. There are also similar images from the web, which are…somewhat random, to be honest, so I’m not sure why they’re there. Still, it’s fun to play around with Look Up and see what it discovers in the Photos app.

Other Photos Changes

There are some other changes to the Photos app in iOS 15 worth covering:

Shared with You. By integrating with Messages’ Shared with You functionality, the app can show you photos shared with you alongside other images in your library. In theory, Shared with You should surface photos you care about, such as the ones where you’re also the subject, but I turned this off immediately: the way I see it, my photo library is mine, and I don’t want photos sent by my contacts to end up in it. You can turn off Shared with You for Photos from Settings ⇾ Messages ⇾ Shared with You; alternatively, you can keep it enabled but filter what you see in Photos by tapping More ⇾ Your Photos Only in the app.

Updates to the photo picker. In iOS 15, apps that request access to the native photo picker can now configure it for ordered selection with numeric badges. This can be especially useful for those times when you want to upload photos to a service in a specific order. The Photos picker in the Messages app now uses this method in iOS 15.

Improved people recognition. According to Apple, the Photos app can now detect people in extreme poses, wearing accessories, or with a partially-occluded face. Maybe I’m not cool enough to have photos of people in extreme poses on my iPhone, but I haven’t been able to tell the difference from iOS 14 here.

Open photos from Spotlight. You can now search for photos that match specific metadata (or text found via Live Text) from Spotlight. When you click an image from search results, it opens inline with a full-screen preview, and there’s a button to reopen it directly in the Photos app.

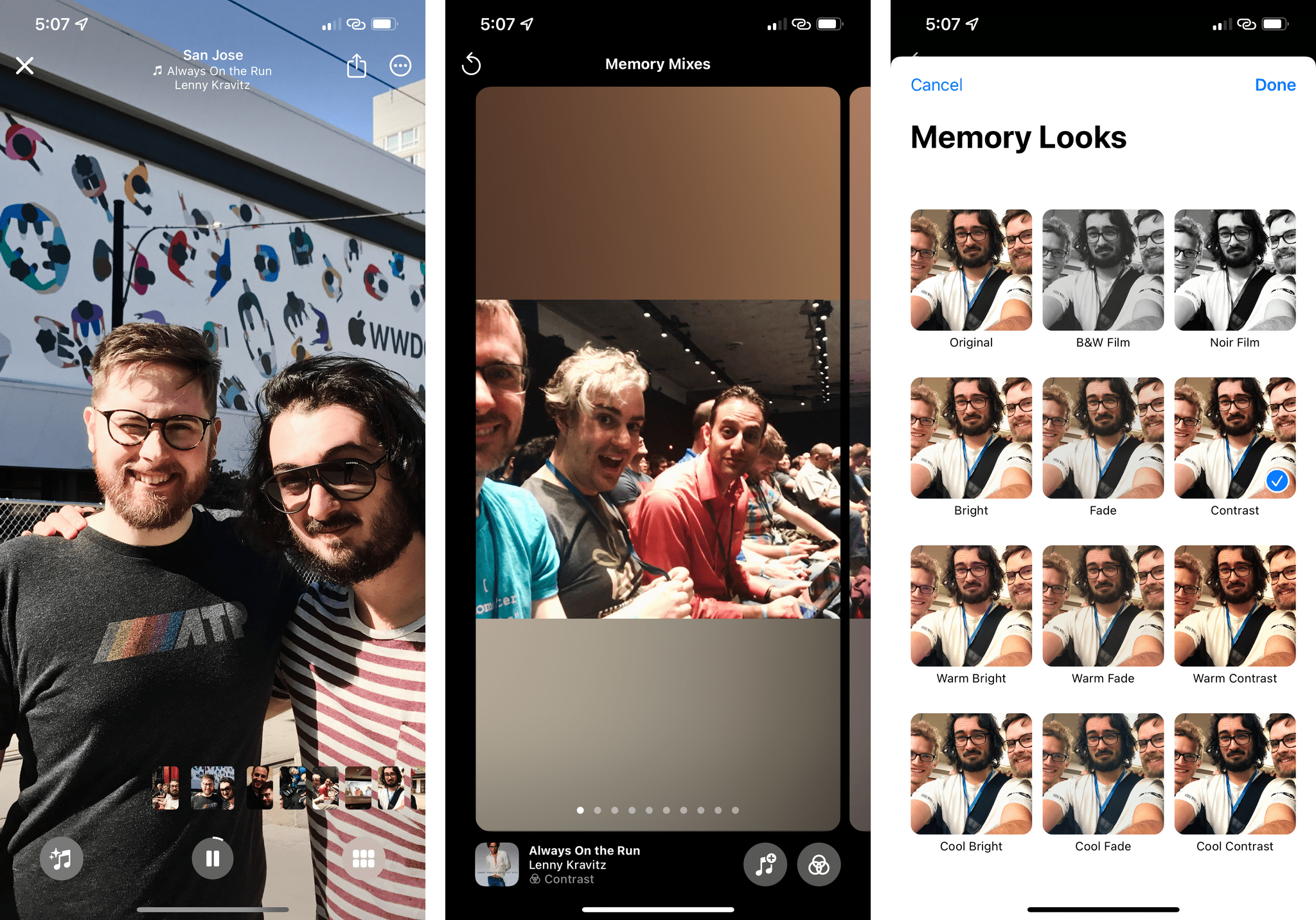

Updates to Memories. Apple continues to invest a lot of engineering and marketing resources in Memories, the feature of the For You page that lets you generate animated slideshows from your photos and videos. I’ve never seen a single person in real life use this functionality, but it has to be popular, otherwise I don’t know why Apple would care about it so much.

In iOS 15, a new option called Memory Mixes lets you try out different soundtracks for a memory video, and you can even change the appearance of the memory with effects called Memory Looks. For the first time, you can also choose any song from Apple Music as the soundtrack for a memory, but the system won’t allow you to share a video with Apple Music songs in it due to copyright reasons.

The company also claims “expert film and TV music curators” selected a set of default songs for Memories, which are combined with your music tastes, the contents of your photos, their original date, and their location to recommend songs that were popular when and where you captured an image or video.

I don’t know how to put this: either these curators need a crash course in music industry news, or the algorithm is way off on my iPhone. For a memory video created for WWDC 2019, the Memory Mixes feature suggested Lenny Kravitz’s 1991 song Always on the Run and Eurythmics’ 1983 hit Sweet Dreams (Are Made of This). Let’s just say that my iPhone’s Neural Engine was feeling nostalgic and wrap it up here.

Messages

Much to my surprise, changes to the Messages app this year do not improve upon the confusing thread interface launched last year, performance of search, or the way iMessage deals with group chats and voice messages. Instead, we’re only getting some Memoji updates and a system-wide feature Apple is heavily pushing as an iMessage-exclusive perk this year: Shared with You.

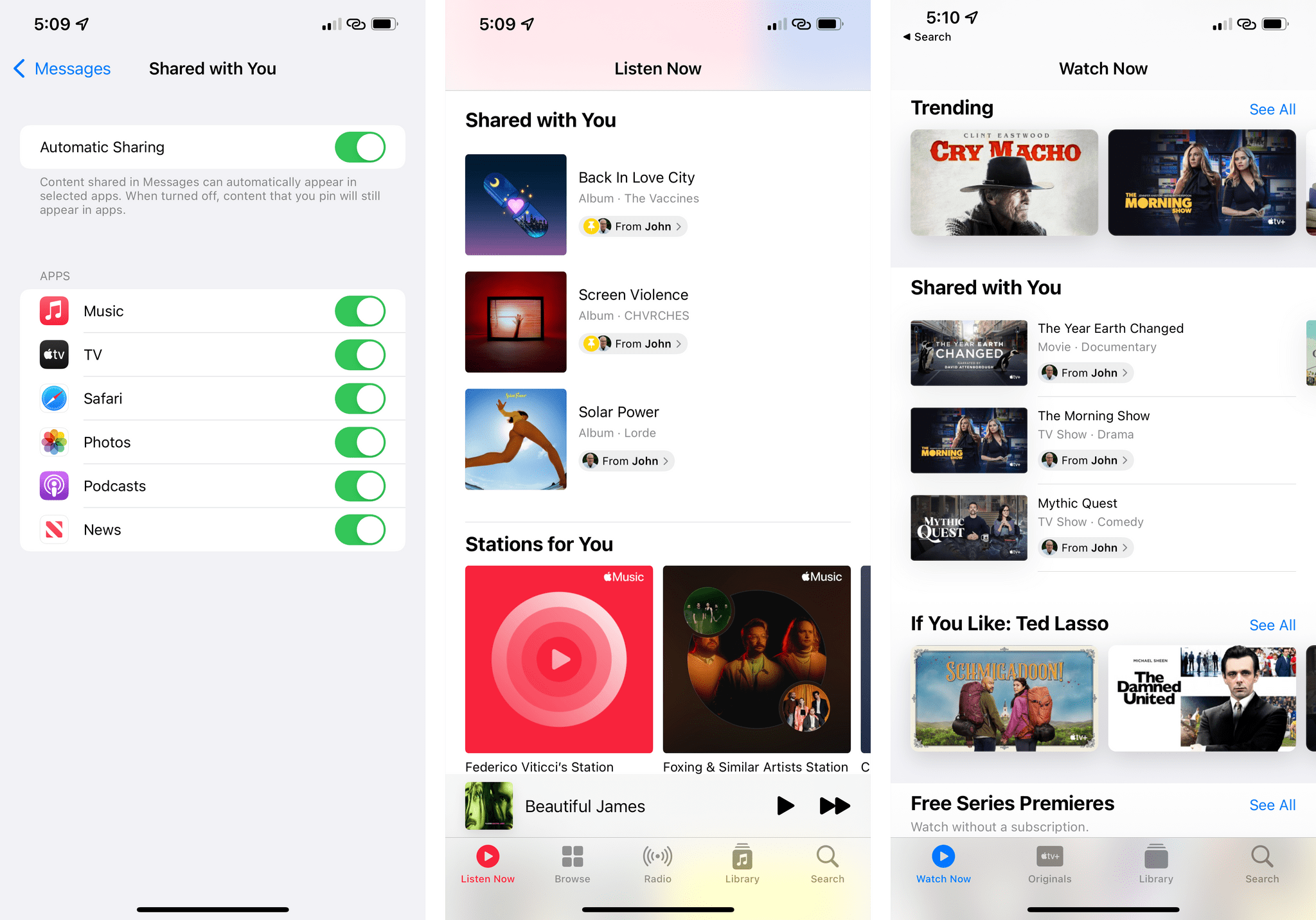

Shared with You

Think of Shared with You as an inbox for things your friends send you on iMessage that is scattered throughout the system and split up across multiple apps. Your spouse sent you a link to a TV show on Apple TV+? That link ends up in the Shared with You section of the TV app. Your roommate discovered a cool new band and sent you a link to their EP on iMessage? That link will be featured in the Shared with You section of the Music app. And so forth for all the apps that support Shared with You in iOS 15, which are:

- Music

- TV

- Safari

- Photos

- Podcasts

- News

The idea behind Shared with You is ingenious, and it fits with Apple’s broader vision for its ecosystem in that iMessage serves as an aggregator for links to different media services all managed by Apple. I think it’s a clever idea; I’m just not sure how useful it’ll be in practice.

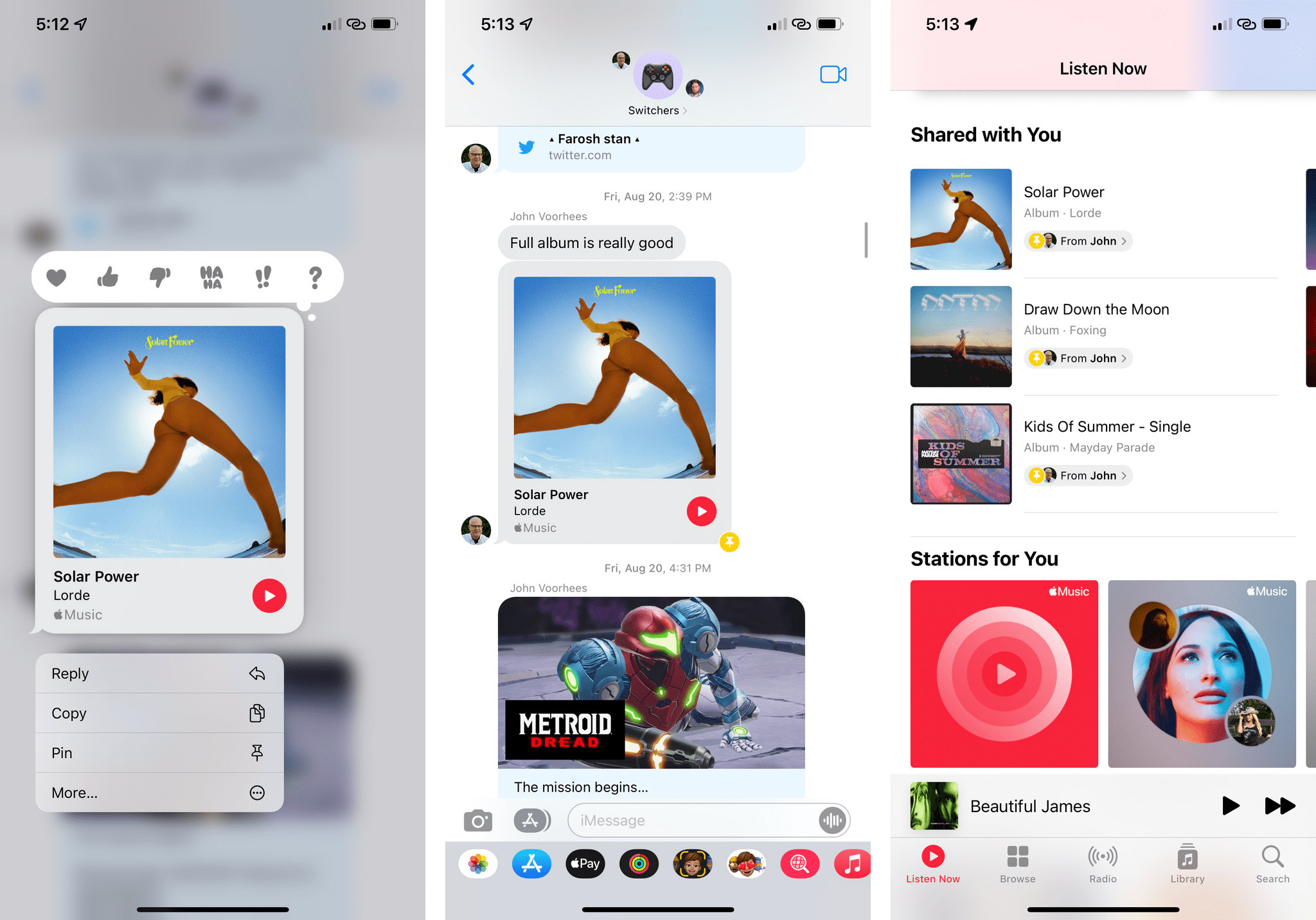

I’ve already covered how Shared with You appears in other apps, so I don’t want to repeat that here, but I want to point out a few implementation details worth your attention. First, the advantage of Shared with You over, say, saving links to read-later or bookmarking services is that you have a two-way integration between the ‘media’ app and iMessage. In every Shared with You section, you’ll see the item that was shared with you and a ‘From’ label that indicates who sent it to you. This label is a button you can tap to reopen an iMessage popup that shows you the item in the original context of the message that was shared with you.

This is my favorite aspect of Shared with You since it allows me to “catch up” with items shared with me at my own pace and follow up with my friends about them days later. I do this in the only app where I keep Shared with You enabled, which is Music: once I’ve had the time to listen to something John sent me, I can re-surface the original message, even days after it was sent to me, and reply to it. No other bookmarking service has this kind of integration, which only Apple can offer thanks to its lock-in effect between services.

The other detail of Shared with You I appreciate is how you can choose to make it work automatically for all links, enable it for some apps only, or set it to manual mode. In Settings ⇾ Messages ⇾ Shared with You, you’ll find an Automatic Sharing toggle that is turned on by default. This means that Shared with You is always active and enabled for all compatible apps. If you want, you can disable Shared with You for specific apps if you’re not interested in them, but the automatic content sharing will continue to work.

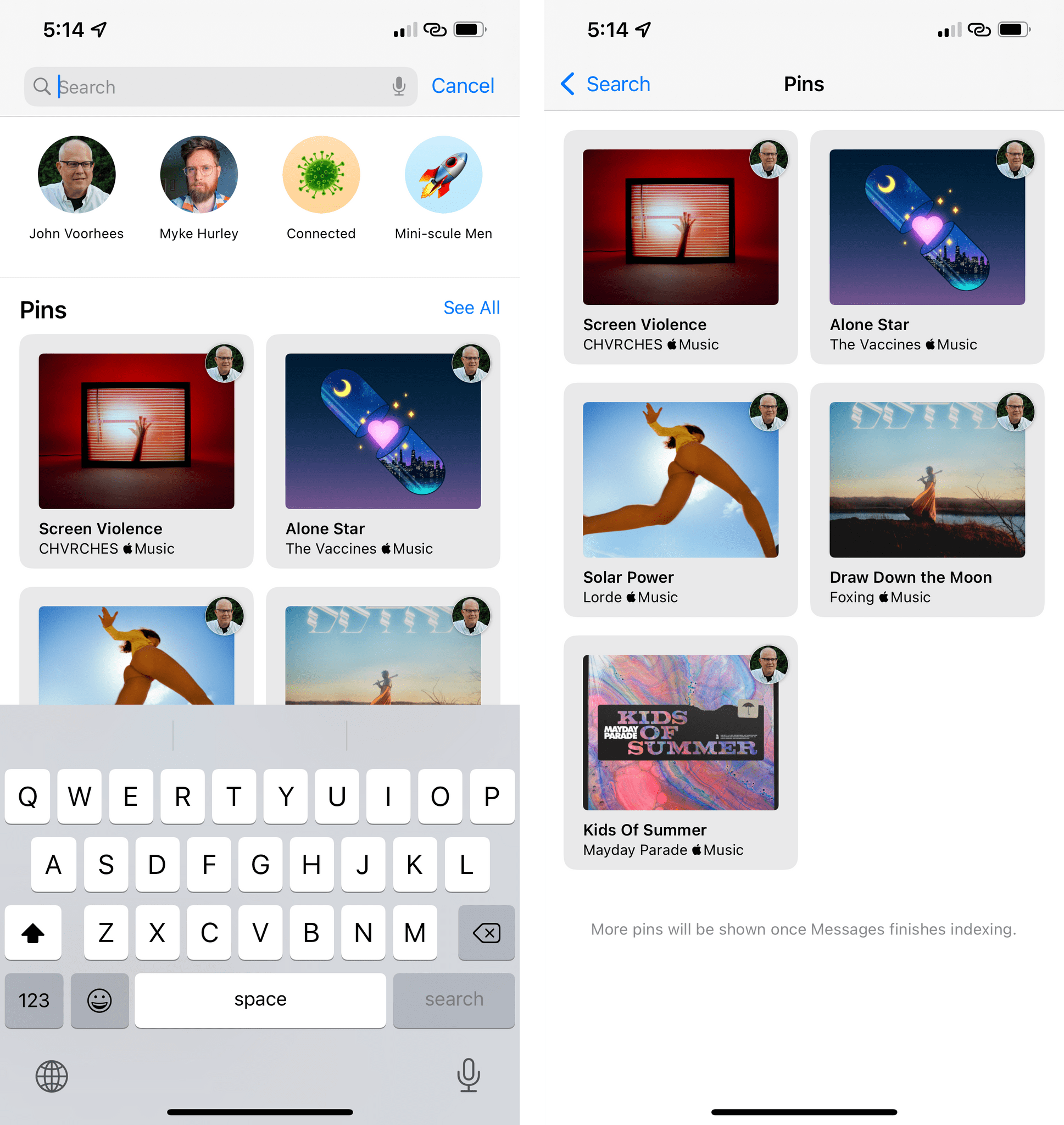

Alternatively, you can turn off Automatic Sharing and Shared with You will be off by default. But – and here’s the feature I like – you can still take advantage of Shared with You by manually pinning items you want to save for later. To do this, you have to long-press a link in an iMessage conversation and select the new ‘Pin’ option. Pins are, effectively, manual overrides for when Shared with You is disabled, which is how I like to use this feature. I keep it always turned off, but when my friends send me something I want to see later in Apple’s media apps, I manually pin it so it can be saved in Shared with You until I’m done with it.

There are two more details I want to mention. You can view all your pins by selecting the Search field at the top of the Messages app:

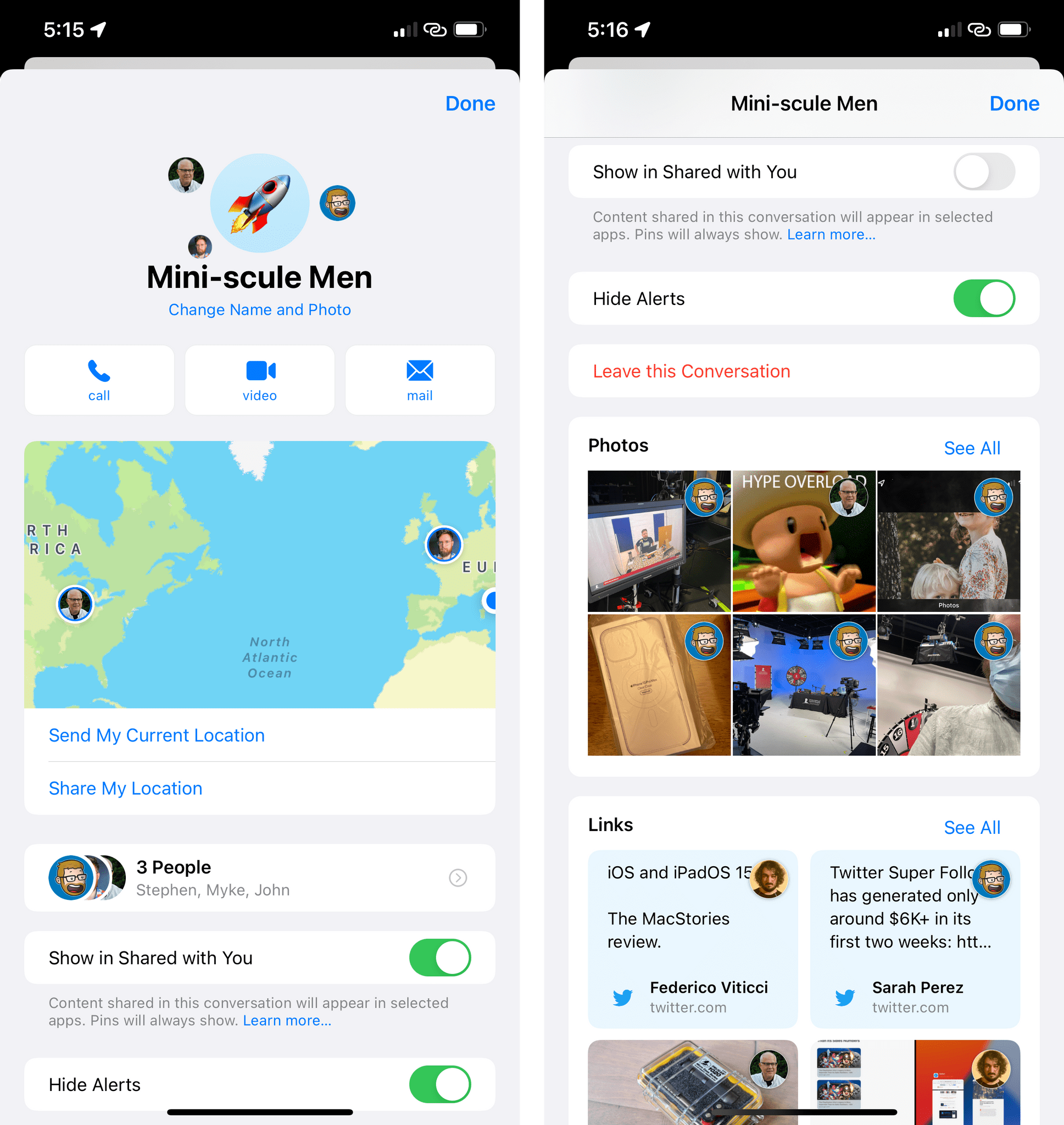

And you can also override Shared with You for individual conversations and group threads by hitting the profile photo at the top of the app and unchecking ‘Show in Shared with You’.

Other Messages Changes

There are some other changes to the Messages app in iOS 15 worth covering:

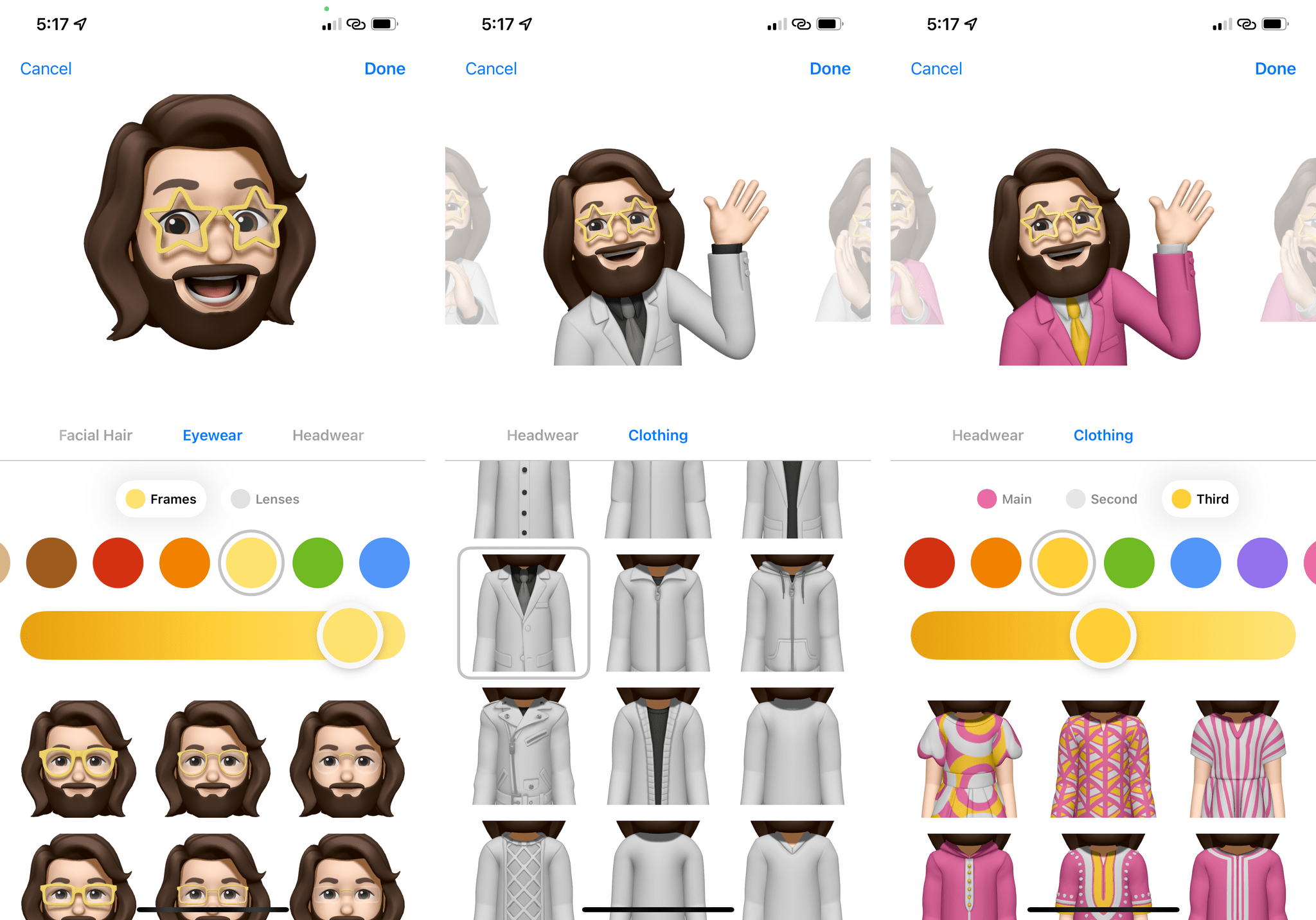

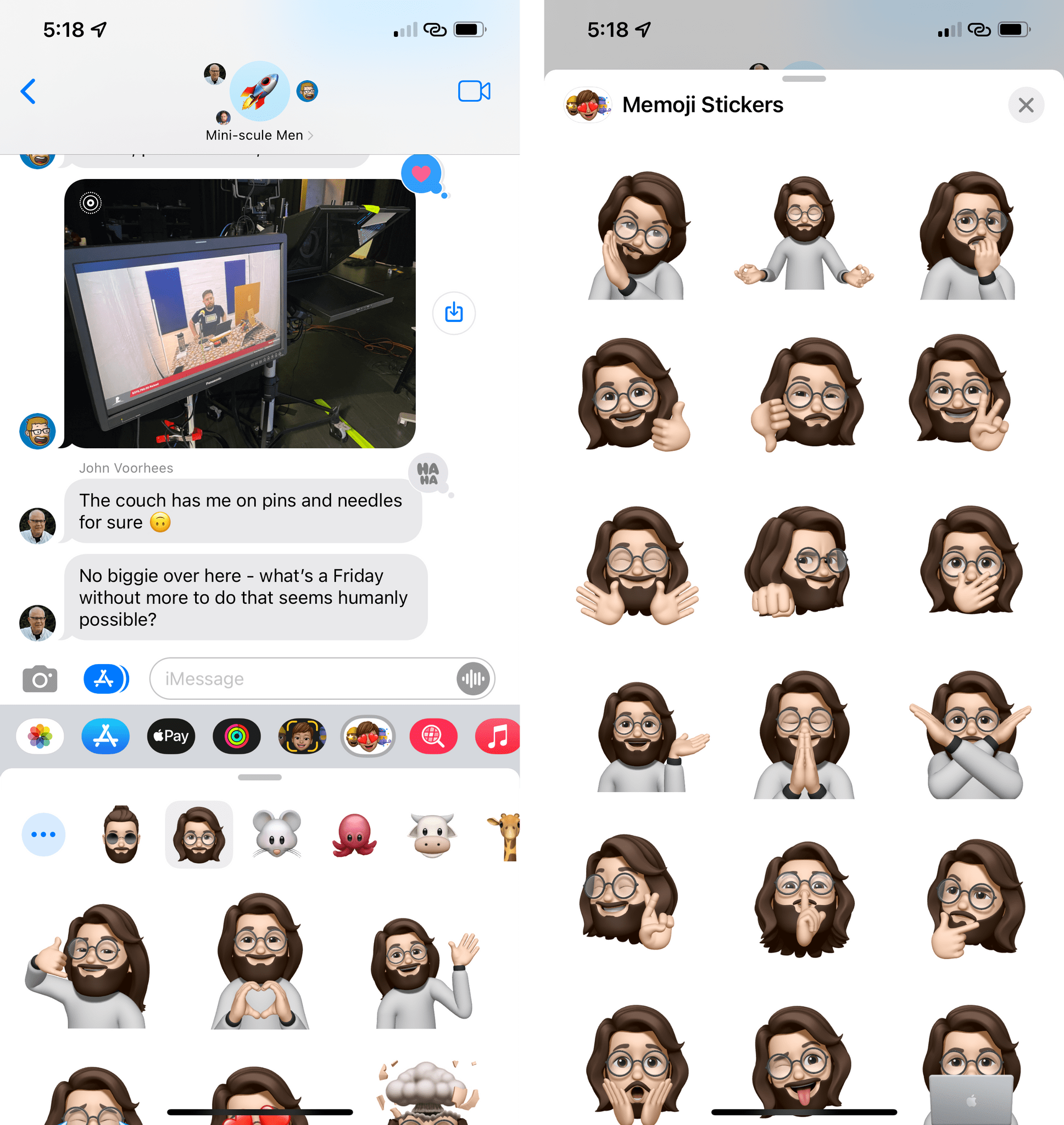

Memoji updates. I’d be remiss if I didn’t mention Apple’s annual updates to Memoji. For the first time, you can select an outfit for your Memoji character: there are over 40 clothing options to choose from, which will be reflected when your character is displayed in stickers. You can select up to three different colors for your outfit; the multi-color option has been extended to headwear as well this year.

There are new glasses to choose from (heart, star, and retro-shaped options), new accessibility options we previously detailed (cochlear implants, oxygen tubes, and soft helmets), and you can select a different color for your left eye and right eye.

You’ll also find nine new iMessage stickers featuring your Memoji in some…interesting poses:

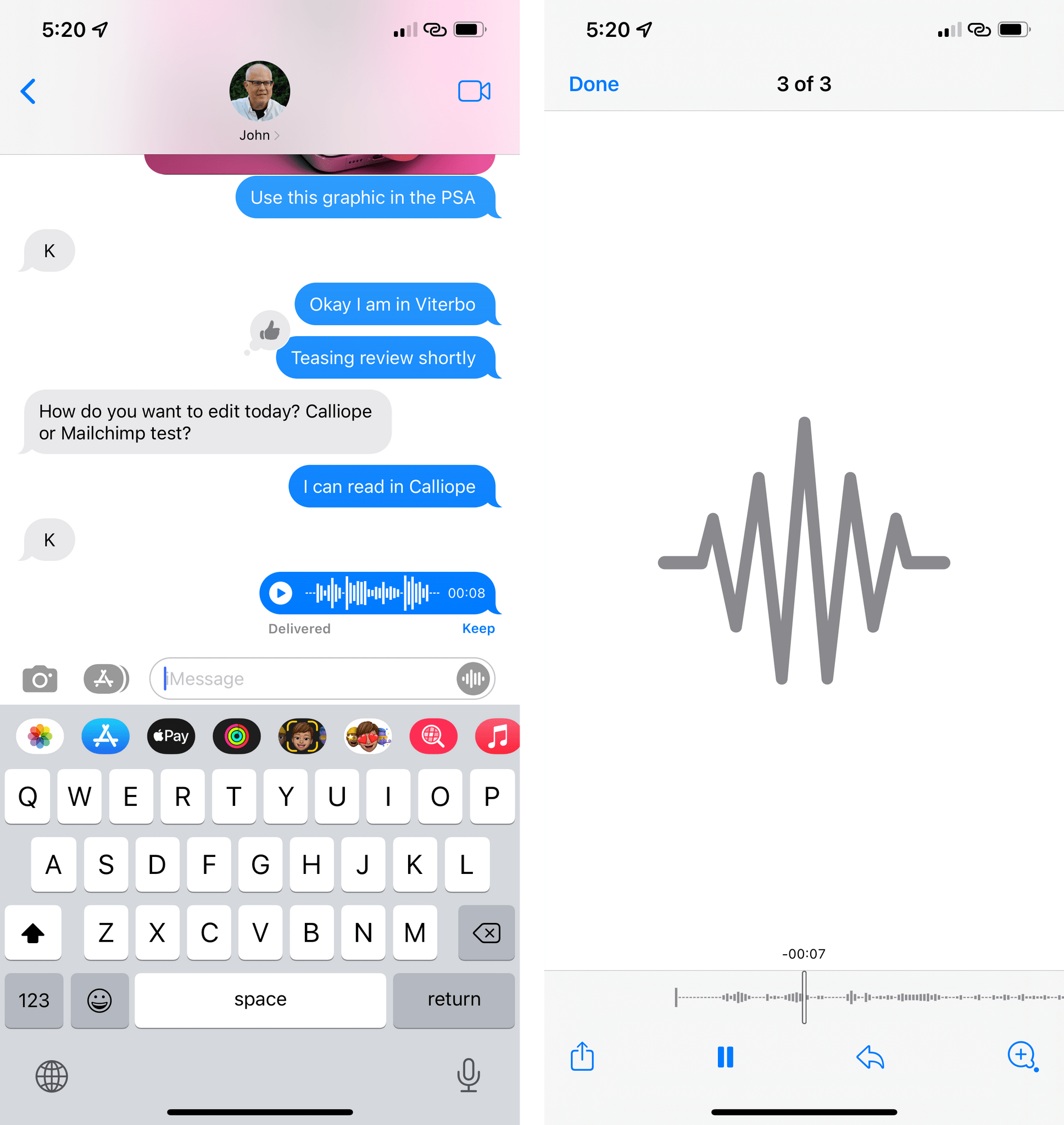

Voice messages and updated previews. I shared my appreciation for voice messages earlier this year, and although Apple’s implementation in iMessage still isn’t as good as WhatsApp’s, it’s getting better. In iOS 15, you can long-press a voice message and open it with an audio player in full-screen. The player is a native Quick Look preview, so you can play, pause, and scrub through audio as always.

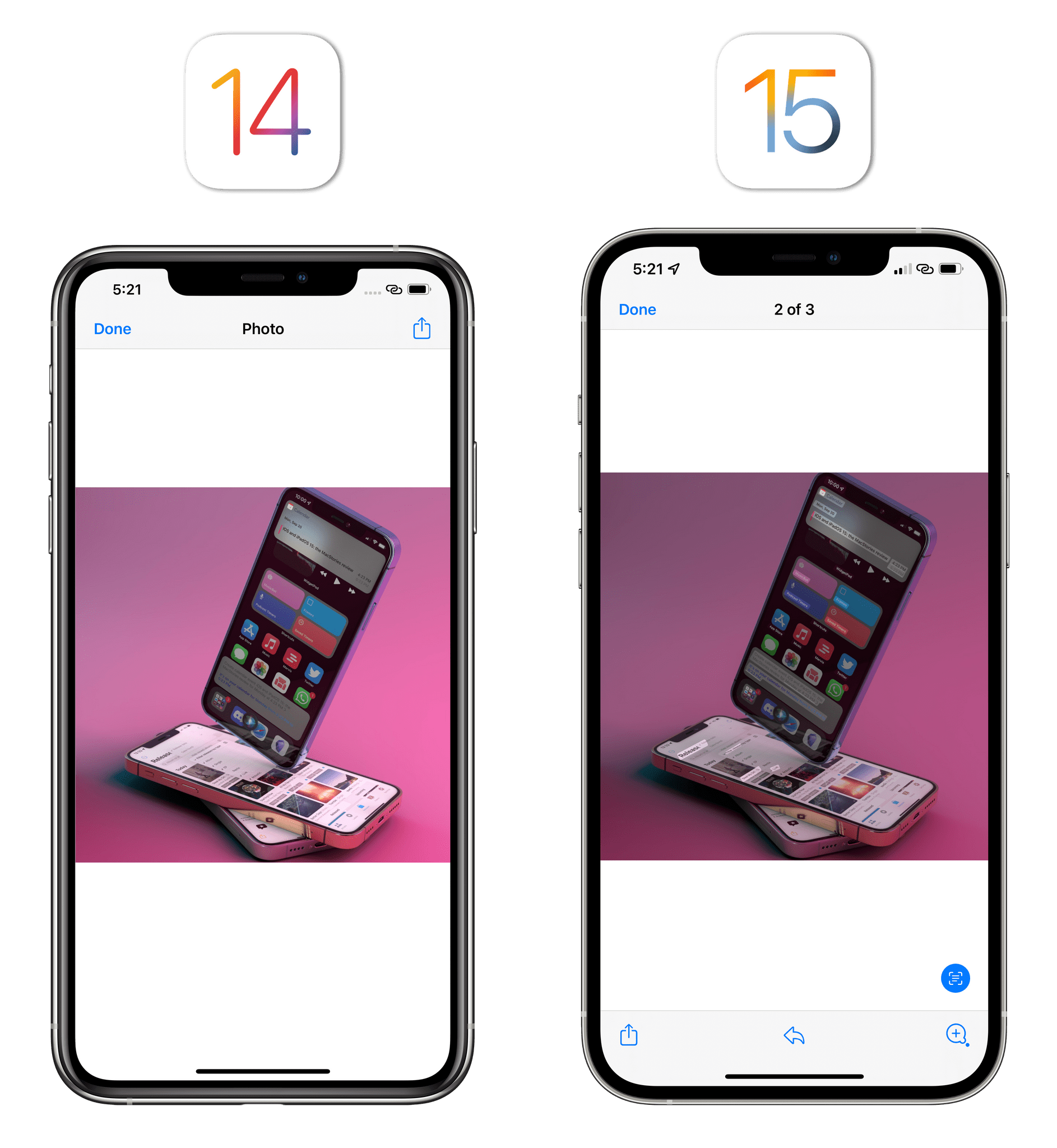

Also new in iOS 15: Quick Look previews in iMessage have been updated with the ability to reply to the original message or add a Tapback reaction from the full-screen view, which I find very convenient.

Improved Quick Look previews in iOS 15 even support Live Text (which can find text in all sorts of orientations, as seen here).

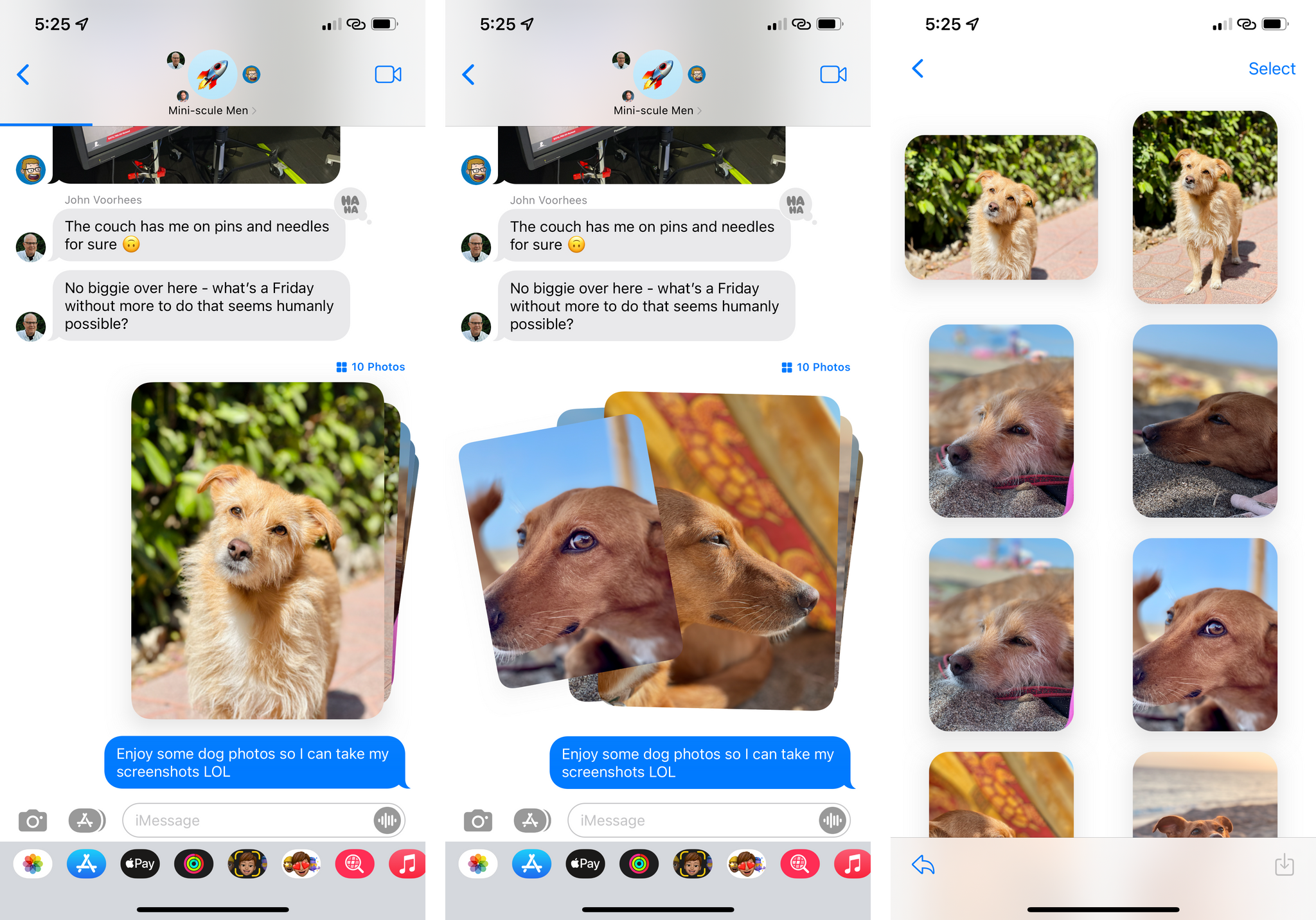

Photo stacks. Lastly, Apple has introduced a new way to preview multiple photos sent by someone as a single message. Instead of taking over the entire iMessage transcript, multiple photos will be collected in a single “deck of cards” that only takes up the space of a single photo in the conversation.

You can quickly swipe through these large thumbnails – just like cards – to preview them, which results in a lovely animation I want everyone to appreciate.

There’s also a download button that lets you add all photos to your library with one tap and a label you can tap to view all photos as a grid.

I feel like this is the kind of feature that wants to solve a very specific problem (friends and family sending you all the photos of you they have from a particular event), and it’s well done.

[14] This is a floating 'Info' panel on iPadOS. Interesting design decision!