Keyboard

Some fascinating features went into the system keyboard this year. Although I continue to think that typing on Android is generally more accurate than on iOS, I also appreciate the integrations between different system services that are appearing in the iOS keyboard.

For people like me, there is a big change this year: bilingual keyboards. Now, don’t get me wrong. It was already possible to type in multiple languages on iOS without having to manually switch keyboards every time. Here’s how I described iOS 10’s support for multilingual typing eight years ago:

> In iOS 10, Apple is taking the first steps to build a better solution: you can now type in multiple languages from one keyboard without having to switch between international layouts. You don’t even have to keep multiple keyboards installed: to type in English and French, leaving the English one enabled will suffice. Multilingual typing appears to be limited to selected keyboards, but it works as advertised, and it’s fantastic.
>
> The idea is simple enough: iOS 10 understands the language you’re typing in and adjusts auto-correct and QuickType predictions on the fly from the same keyboard. Multilingual typing supports two languages at once, it doesn’t work with dictation, but it can suggest emoji in the QuickType bar for multiple languages as well.

In iOS 18, the feature has been simplified and has reached its logical conclusion: you can now set up one software keyboard in Settings that contains two languages. The system doesn’t have to remember languages per-contact or per-app. It just lets you switch between words of different languages as you type, mid-sentence, without correcting you for using words that don’t belong to one of the two languages.

I can’t even begin to describe how much of a positive impact this small addition to the iOS typing experience has had on my life lately. Bilingual users will relate. I text my partner dozens of times each day using a weird – but familiar to us – combination of Italian and English, because that’s just the person I’ve become negli ultimi anni. With iOS 18, the system is much more flessibile about accepting mixed sentences with words from two linguaggi; it can even dynamically switch between two languages in the same sentence, without underlining parts of it as errori grammaticali. It’s remarkable.

As I said above, I still feel like autocorrect and predictive text are generally better on Android, especially for longer strings of text in just one language, but for iMessage? The bilingual keyboard Apple built is terrific for short messages that mix and match languages. Apple did build the unified keyboard I wanted after all.

Beyond languages, the keyboard’s growing supply of smart QuickType suggestions now includes mathematical operations powered by Math Notes. Type any simple or complex operation in any text field, and after entering the equal sign, you’ll see a suggestion above the keyboard to solve it for you.

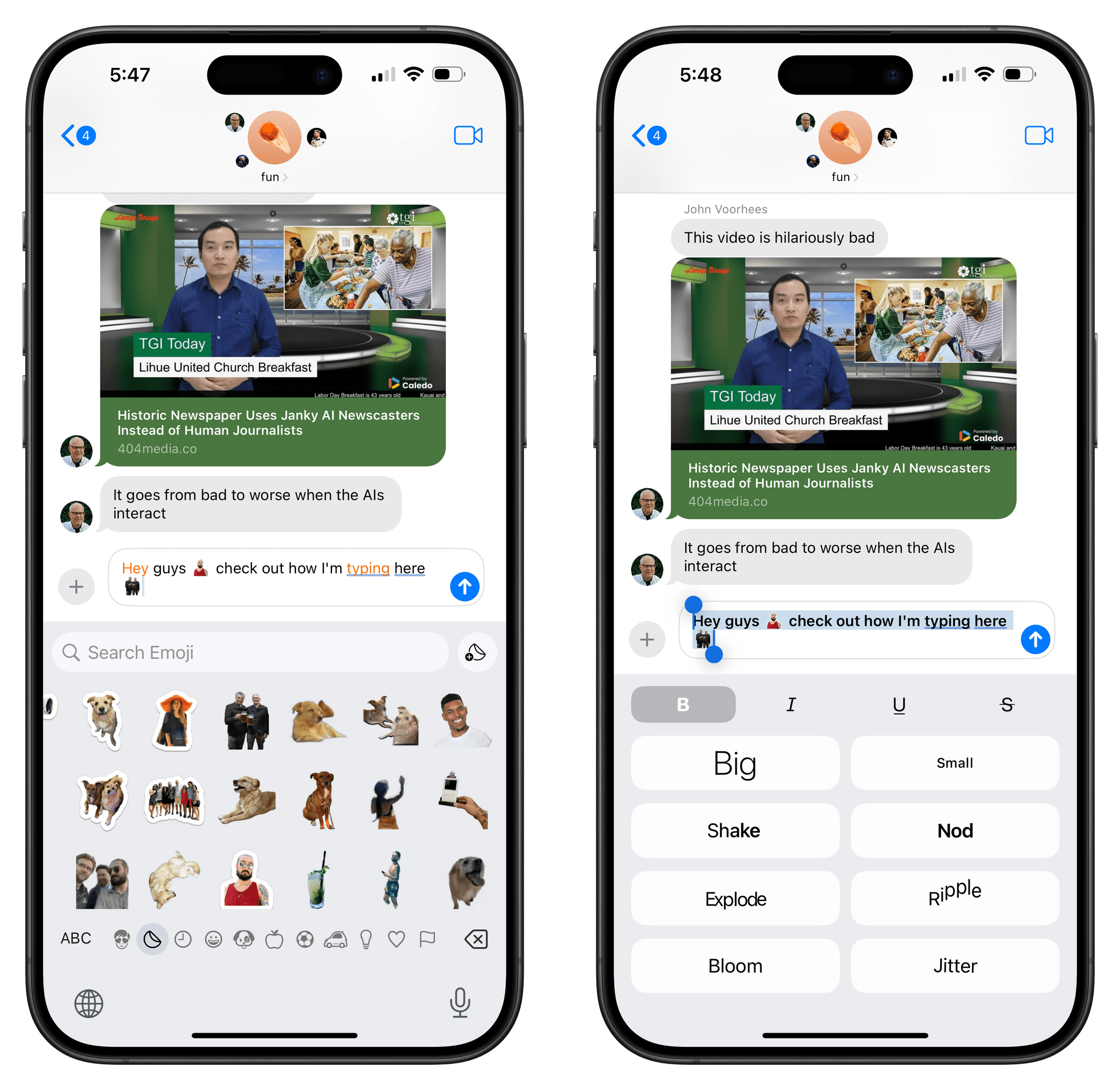

Additionally, in preparation for Genmoji, Apple has rolled out support for inserting stickers and Memoji inline with text, just like any other regular emoji. This is what I mean:

I mention Genmoji because the fact that stickers already work with this system is no coincidence. For the upcoming Genmoji feature, Apple created a new technology called Adaptive Glyph that allows tiny images to coexist on the same line as attributed text. Based on a square image format that supports multiple resolutions, alignment metrics for different scripts, and metadata for accessibility, this API lets stickers and Genmoji be displayed alongside text, attributed strings, and even HTML. They’re not Unicode characters; they’re something else entirely that Apple built specifically for its platforms.

I tested this feature in Messages, Notes, and Mail (three apps that support attributed text), and it worked perfectly. It’ll be interesting to see if third-party apps and social media clients will be able to integrate with this tech down the road, especially looking ahead to the launch of Genmoji.

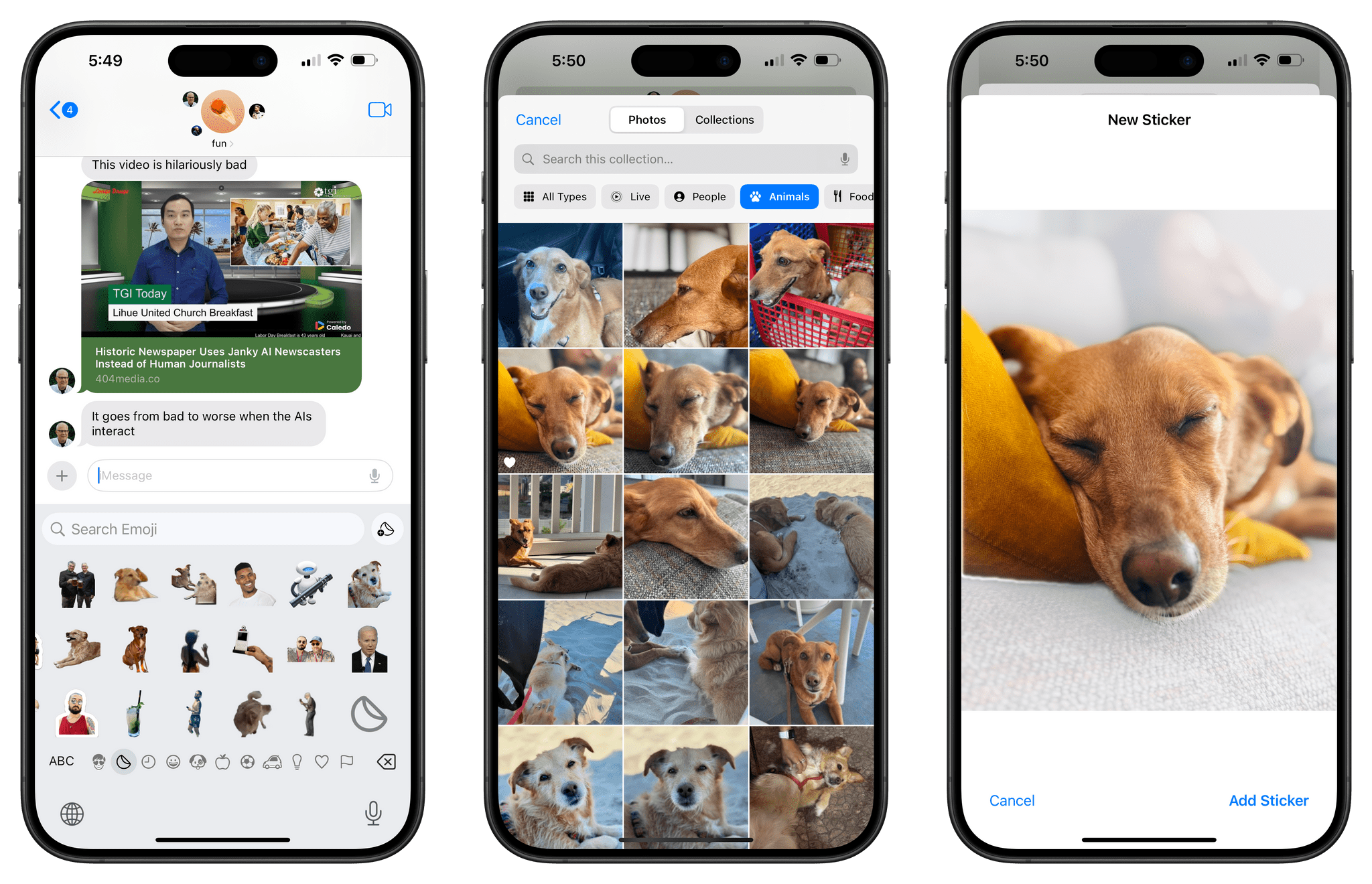

Speaking of stickers, I should note that it is now possible to create a new sticker directly from the keyboard’s sticker panel by pressing the ‘+’ button. Doing this will bring up the image picker, and the system will take care of isolating the subject of your selected image and making a sticker out of it.

You can now create stickers directly from the unified keyboard by tapping the button next to the search bar (left).

Screen Sharing with Remote Control

The ability to share your screen with someone else over FaceTime was introduced as part of the SharePlay rollout in iOS and iPadOS 15.1. In iOS and iPadOS 18, the screen sharing feature is getting a major update with the ability to tap and draw on someone else’s screen and even ask to control their device remotely.

Let’s remember how it all began. Here’s what I wrote three years ago, which still holds up very well today:

> In my tests with screen sharing in FaceTime over Wi-Fi, image quality from John’s iPad was great, with minimal degradation that did not prevent me from reading small text such as text inside widgets on John’s Home Screen. When I was watching John’s screen, I could still see him in a floating window in the corner of the FaceTime UI.
>
> I had a feeling this would be the case, but after testing it, I’m convinced that screen sharing will be the most popular and useful SharePlay feature for all Apple users. Screen sharing built into iOS and iPadOS will be incredible for tech support with family members or friends who are having issues with their devices; starting with iOS 15.1, you can just tell them to hop on FaceTime, press the rectangle icon, and you’ll be able to see their screen. Whoever thought of building this feature inside FaceTime at Apple should get an award. I love it, and I look forward to solving my mom’s iPhone problems with it.

Over the past three years, I did end up solving my mom’s iPhone problems remotely (and Silvia’s mom’s, too) thanks to screen sharing in FaceTime. But you know what was missing? The ability to say, “I’ll just do it myself”, or, “tap the thing I’m pointing at”. The updated screen sharing fixes all of this, and once again, I think it’s going to be an outstanding addition for all of us who serve as remote IT people for our parents.

When you start observing another person’s screen in iOS 18, the system will tell you that you can now tap and draw on their screen. Taps are drawn as purple circles that disappear quickly – they’re subtle, but noticeable.

Drawings are also represented by purple ink, which then disappears into a cloud of magic dust that’s reminiscent of Digital Touch, Safari’s Distraction Control or, if you will, the Thanos snap. Pick your favorite reference.

With remote control, you take over the other person’s device until they interact with it again (which can be a tap or even taking a screenshot on their end).

After pressing the control button in screen sharing, the other person will be asked if they know you and want to grant you the ability to control the screen:

Once they approve, you’ll be staring at the other person’s screen…on your screen, with the ability to remotely use the device. The first time you swipe up on the other device’s Home indicator and watch it return to the Home Screen, remotely, it can be quite freaky. There is a bit of lag, obviously, but it’s completely acceptable. Remember: this feature is supposed to replace clunky VNC clients with terrible image quality, high latency, and laborious installation flows. The fact that this just works in FaceTime, for free, with multiple levels of protection for the person who shares their screen is, honestly, incredible.

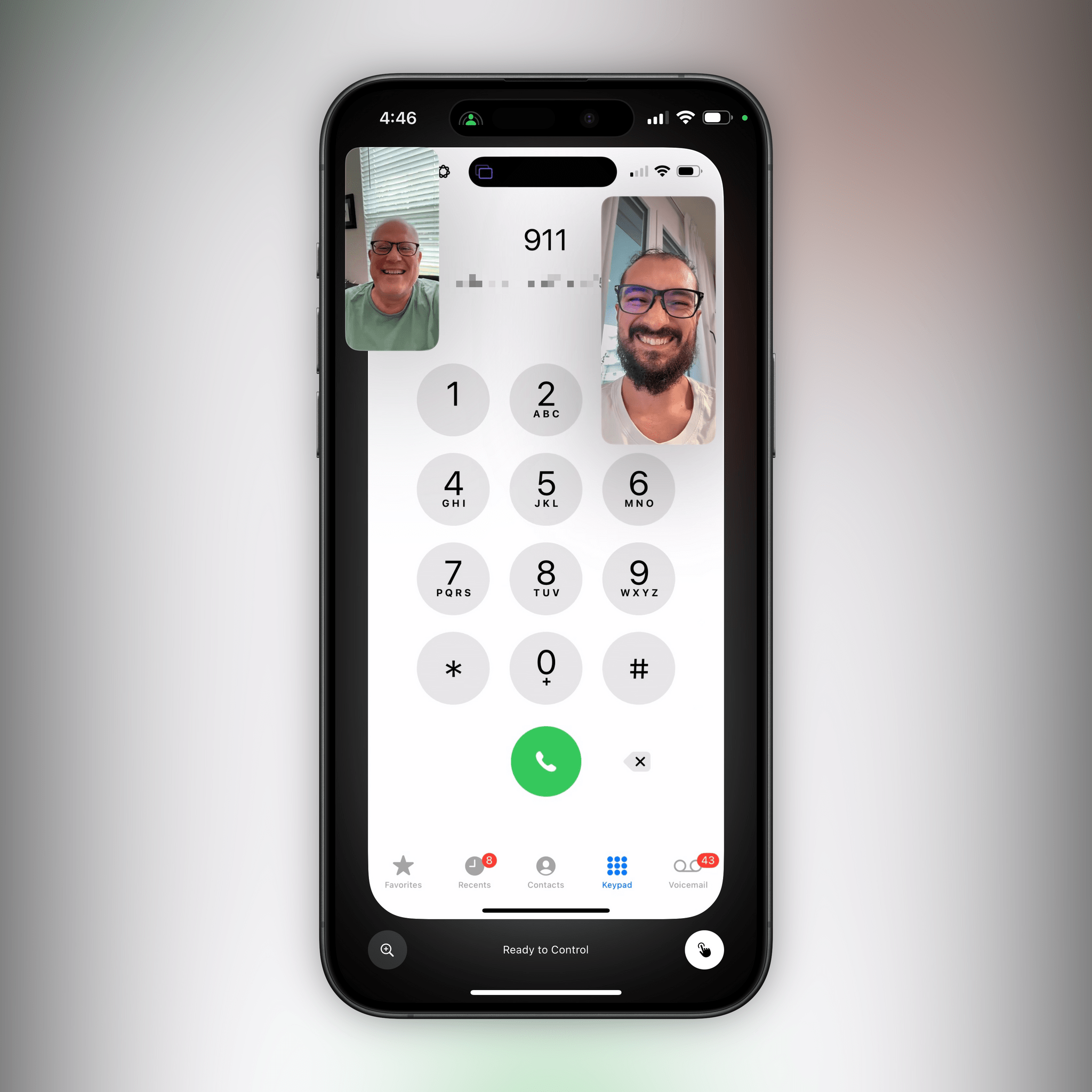

I was able to test remote control with John over FaceTime, and I noticed some interesting details in Apple’s implementation. I was able to open John’s Camera app, have him move the phone to look around his office, and remotely press the shutter button. I couldn’t, however, type in Spotlight search or view the Passwords app: when I opened it, I got a message telling me that I had to wait for John to proceed.

These restrictions indicate that Apple thought about the potential for misuse of this functionality against relatives or friends who may not know all the details of their phones. I found it amusing, however, that I was able to open the Phone app and dial 911.[^10] I didn’t fumble around to find out if it would have worked, but boy was I tempted.

I look forward to fixing my mom’s Wi-Fi connection and updating her apps remotely thanks to the addition of remote control in FaceTime’s screen sharing.

Live Activities

Finally, there are a couple of additions to Live Activities I want to mention. When I was setting up my Apple Watch after a reset earlier this summer, I noticed a Live Activity pop up after I closed the Watch app, showing me the sync progress for the Apple Watch:

I’m sure you’ve seen this elsewhere, but Apple has designed a beautiful, ridiculously over-the-top custom Live Activity for…the flashlight. When you turn the flashlight on, you’ll see a special Live Activity in the Dynamic Island that you can interact with. Swipe up and down on the flashlight to change its intensity, or swipe horizontally to control its cone of light.

This Live Activity didn’t have to go so hard, and I love it. As long as I have this weird and powerful flashlight, I don’t need Apple Intelligence.

[^10]: On a more serious note, I have to wonder if the ability to call 911 while controlling another person's device is actually a feature. What are the chances that Apple designed this option for someone who is unable to speak but can tap the screen to accept a FaceTime call, and who wants to let you control their device to call emergency services (which can determine the original device's location)? I couldn't find out, but I wouldn't be surprised to hear Apple thought about this. Or perhaps it's just bad design.