Hipstamatic, a photo sharing app for the iPhone that allows users to apply vintage/analog effects and filters to their photos, has become the first app to directly integrate with Instagram. The popular iPhone-only sharing service, now boasting over 27 million users and on the verge of releasing an Android app, has so far allowed third-party developers to integrate their apps with the Instagram API to only visualize a user’s photos or feed. The API hasn’t allowed for the creation of real Instagram clients for other devices, in that uploading could be done exclusively using Instagram’s own app.

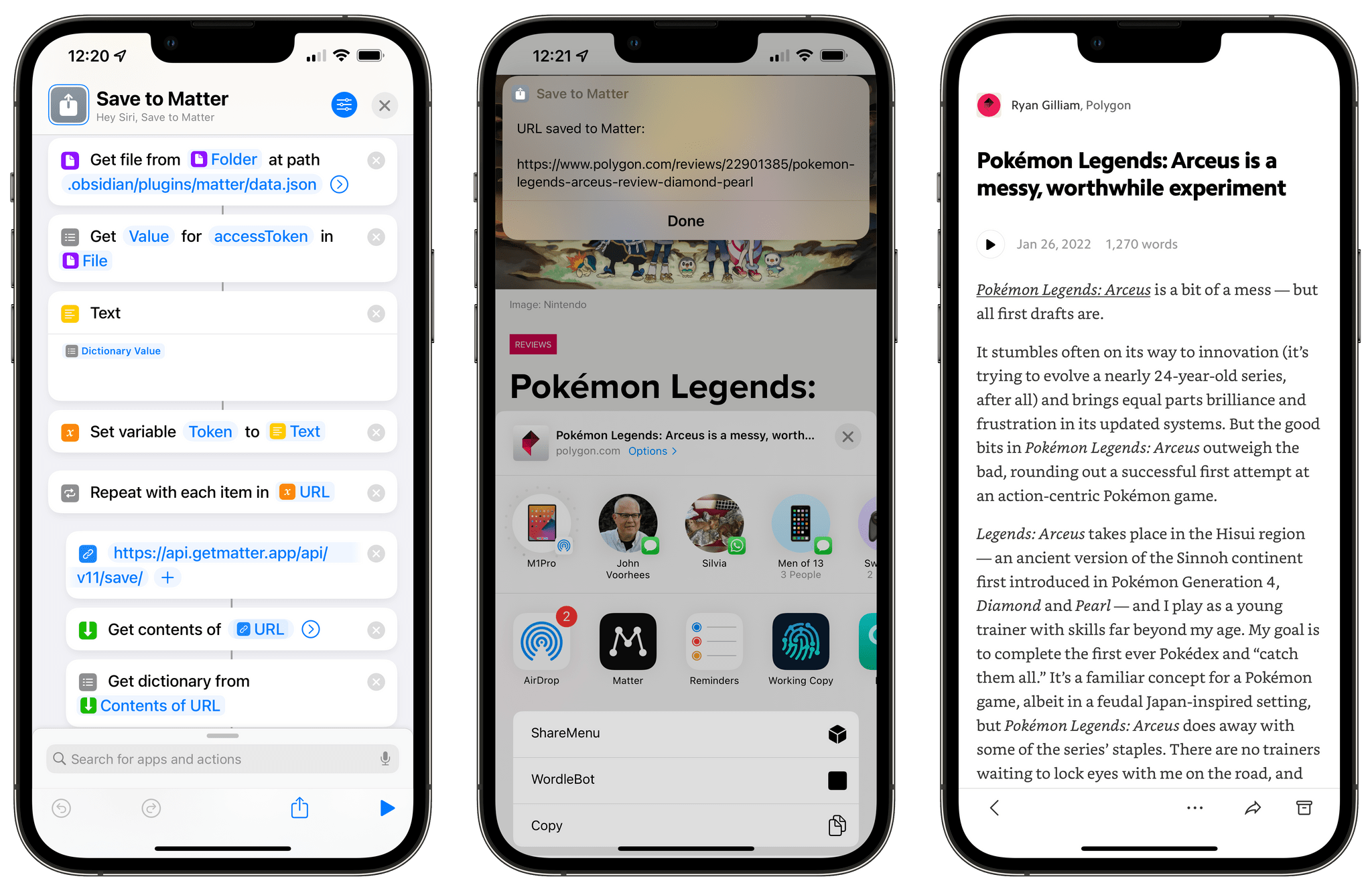

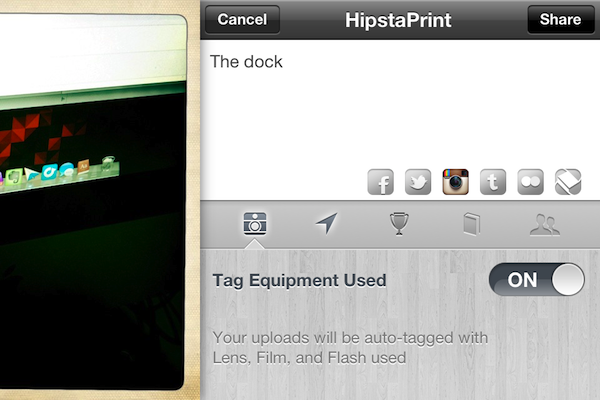

Today, however, an update to Hipstamatic and a collaboration between the two services, first reported by Fast Company, might signal an important change in Instagram’s direction and nature as a photo sharing service. The new Hipstamatic, available now on the App Store, comes with a redesigned “HipstaShare” system to send photos to various social networks including Facebook, Twitter, and Flickr. Among the supported services, a new Instagram option now enables you to log into your Instagram account and upload photos directly within Hipstamatic, without leaving the app. There is no “forwarding” of files to the Instagram app, nor does Hipstamatic ask you to download the Instagram app from the App Store – this is true uploading to Instagram done by a third party, via the API.

Unlike most photo sharing apps these days, Hipstamatic puts great focus on recreating the analog experience of shooting photos and carefully selecting the equipment you’d like to shoot with. With a somewhat accurate representation of vintage films, lenses, camera cases, and flash units, Hipstamatic wants to appeal to the kind of user base that is not simply interested in capturing a fleeting moment and sharing it in seconds; rather, as famous appearances in publications like The New York Times confirm, the Hipstamatic crowd is more of a passionate gathering of 4 million users looking to spend minutes, if not hours, trying to achieve the perfect setup for each occasion, spending one dollar at a time on in-app purchases that unlock different filters and “parts” of the cameras supported in Hipstamatic. Unlike Instagram or, say, Camera+, Hipstamatic isn’t built to shoot & share; the ultimate goal is undoubtedly sharing, but it’d be more appropriate to describe Hipstamatic’s workflow as “set up, shoot, then share”.

Hipstamatic seems to have realized, however, that sharing can’t be relegated to a simple accessory that takes second place behind the app’s custom effects and unlockable items. Whilst in-app purchases and fancy graphics may have played an important role in driving Hipstamatic’s success so far, apps can’t go without a strong sharing and social foundation nowadays, and since its launch two years ago, Instagram has seen tremendous growth for an iPhone-only app. With this update, Instagram and Hipstamatic are doing each other a favor: Instagram gets to test the waters with an API that now allows for uploading through other clients that support similar feature sets; Hipstamatic maintains its existing functionality, but adds a new social layer that plugs natively into the world’s hottest photo sharing startup.

Looking at the terms of the “deal” (I don’t think any revenue sharing is taking place between the two parties), it appears both sides got the perks they wanted. This native integration comes with an Instagram icon in Hipstamatic’s new sharing menu, which, when tapped, will let you log into your account. Once active, each “Hipstaprint” (another fancy name for photos) can be shared on a variety of networks, with Facebook even supporting friend tagging. You can upload multiple photos at once if you want, too. In the sharing panel, you can optionally decide to activate “equipment tagging” – this option will, alongside the client’s information, include #hashtags for the lens, film and other equipment that you use in your Hipstamatic camera.

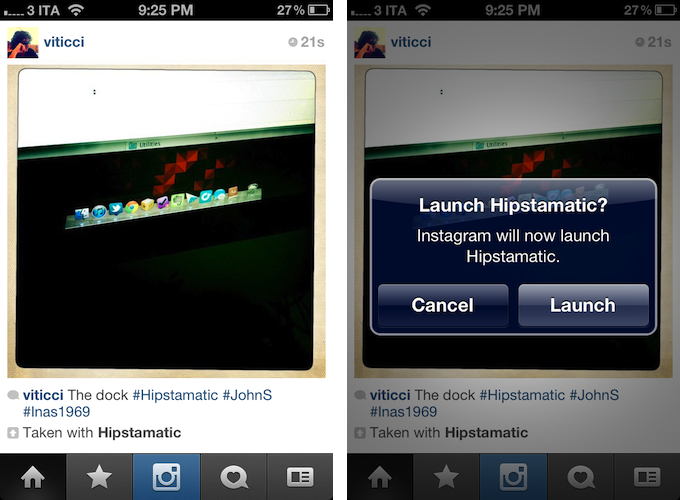

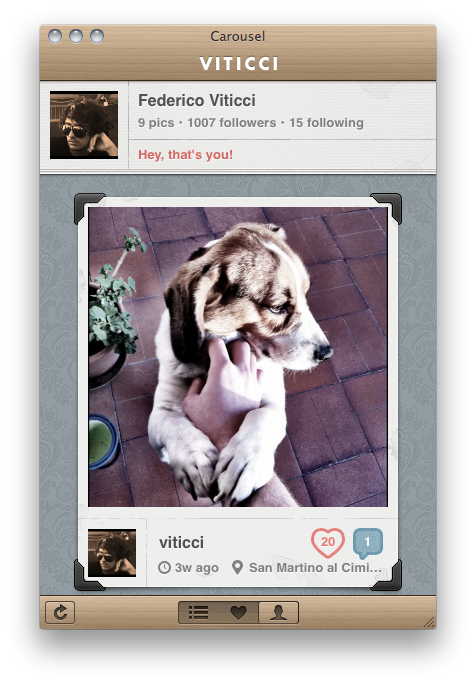

On Instagram’s side, things get a little more interesting. Hipstamatic photos get uploaded respecting Instagram’s photo sizes, and they get a border around the image to, I guess, indicate their “print” nature. Together with the title, Instagram will display the aforementioned tags for equipment, and a “Taken with Hipstamatic” link that, when tapped, will ask you to launch Hipstamatic. If you don’t have Hipstamatic installed on your iPhone, this link will take you to the App Store page for the app.

Overall, what really intrigues me about this collaboration isn’t the Hipstamatic update per se – version “250” of the app is solid and well-built, but I don’t use Hipstamatic myself on a regular basis, as I prefer more direct tools like Instagram, Camera+, or even the Facebook app for iOS. What I really think could be huge, both for the companies involved and the users, is the API that Hipstamatic is leveraging here. Hipstamatic is doing the right thing: sharing has become a fundamental part of the mobile photo taking process, and it would be foolish to ignore Instagram’s popularity and come up with a whole new network.

Instagram, on the other hand, is taking an interesting path (no pun intended) that, sometime down the road, might turn what was once an iPhone app into a de facto option for all future social sharing implementations. A few months from now, would it be crazy to think Camera+ could integrate with Instagram to offer native uploads? Or to imagine built-in support for Instagram photo uploads in, say, iOS, Twitter clients, and other photo apps? I don’t think so. Just as “taken with Hipstamatic” stands out in today’s Instagram feeds, “Upload to Instagram” doesn’t sound too absurd at this point.