Tim Hardwick, writing for MacRumors, on a strange limitation of the Apple Intelligence rollout earlier this week:

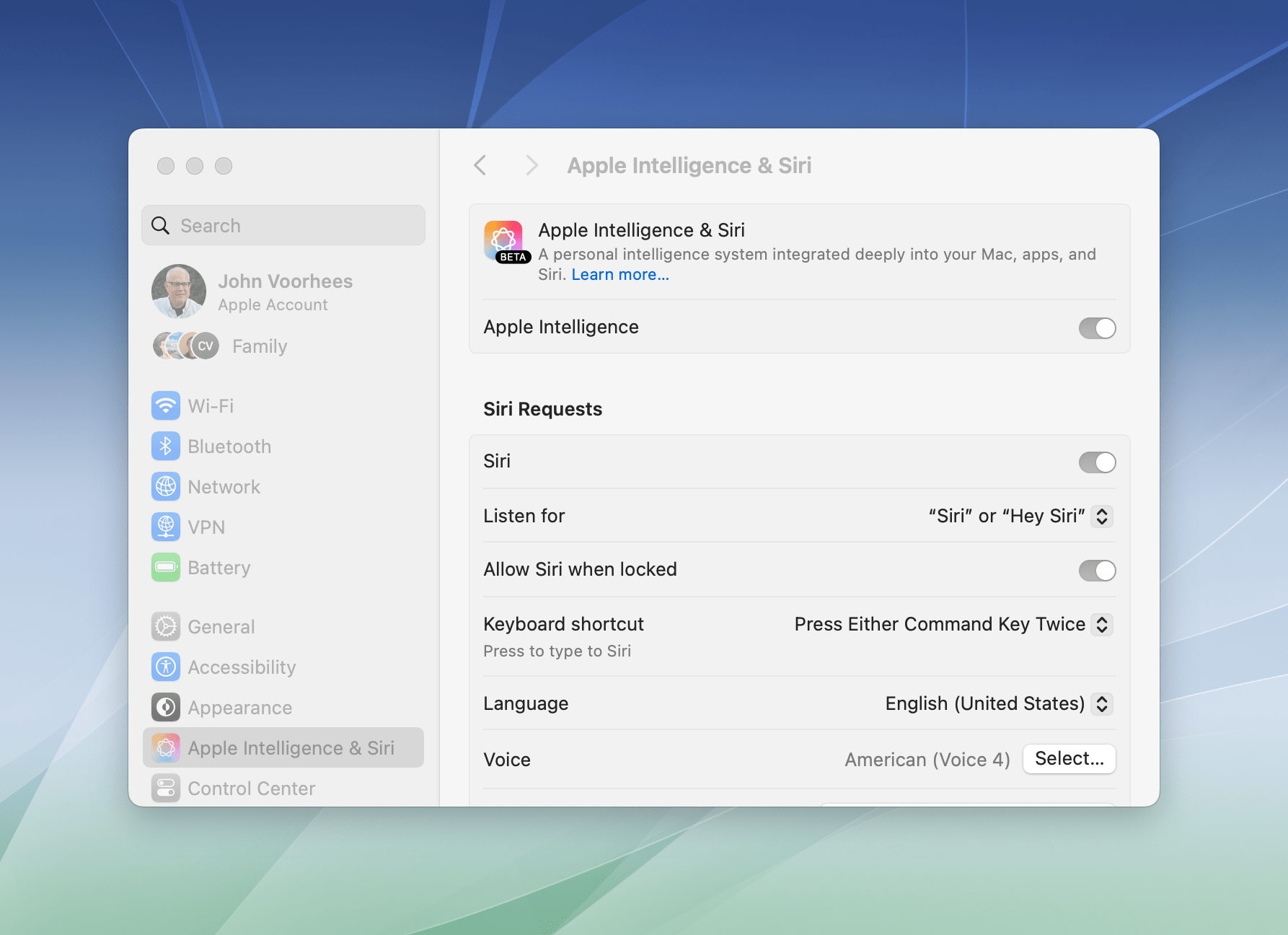

Apple’s new Mail sorting features in iOS 18.2 are notably absent from both iPadOS 18.2 and macOS Sequoia 15.2, raising questions about the company’s rollout strategy for the email management system.

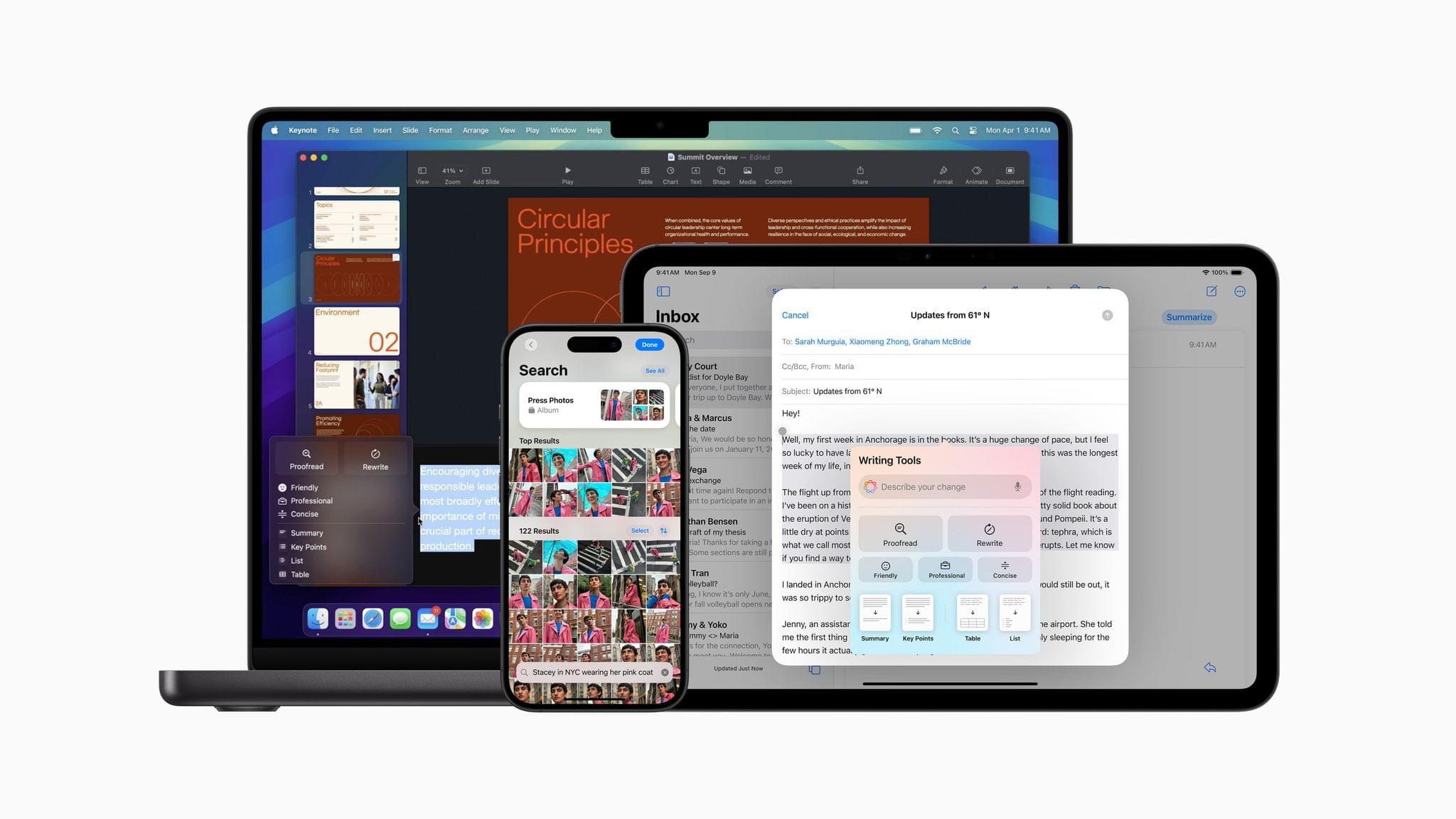

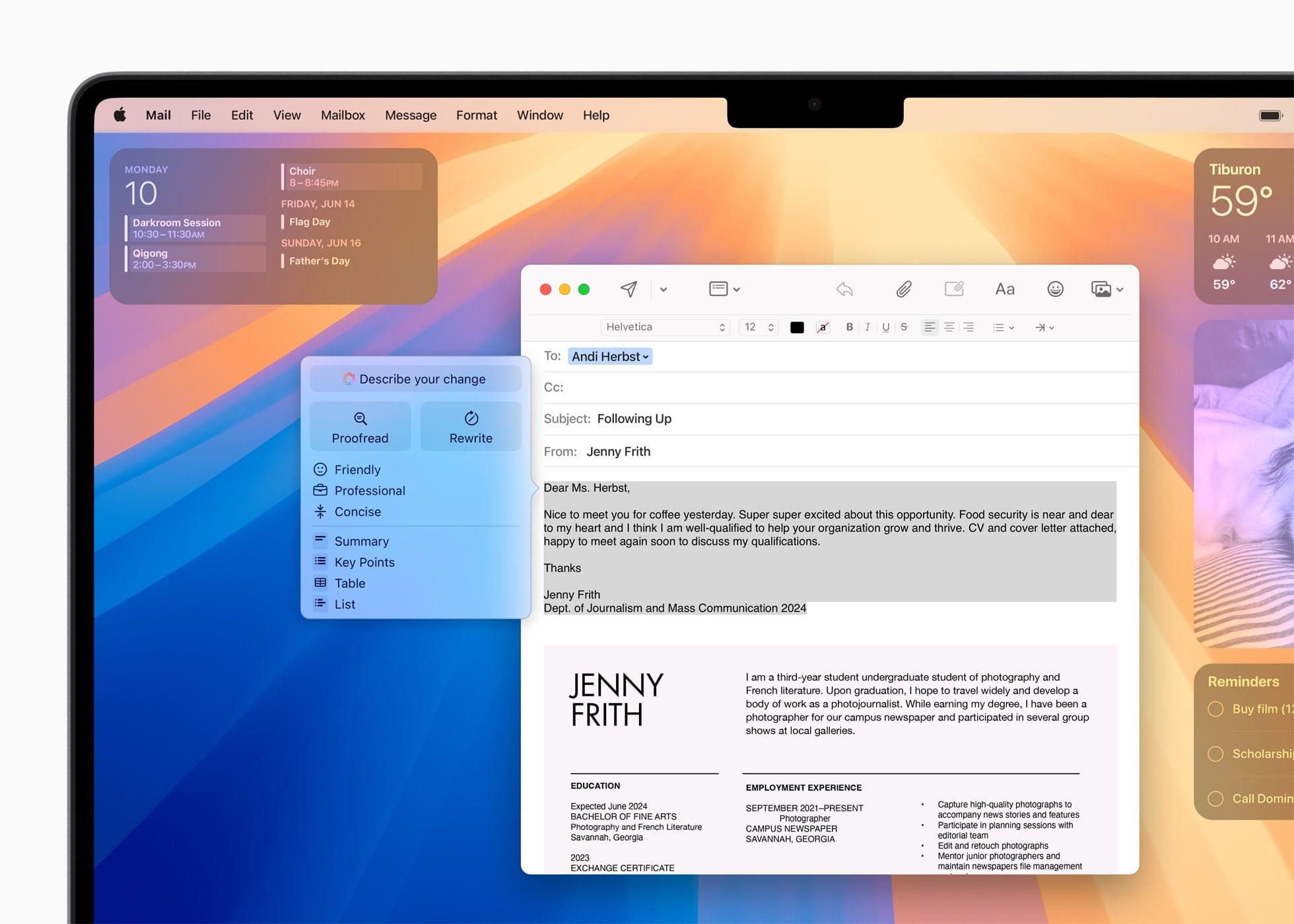

The new feature automatically sorts emails into four distinct categories: Primary, Transactions, Updates, and Promotions, with the aim of helping iPhone users better organize their inboxes. Devices that support Apple Intelligence also surface priority messages as part of the new system.

Users on iPhone who updated to iOS 18.2 have the features. However, iPad and Mac users who updated their devices with the software that Apple released concurrently with iOS 18.2 will have noticed their absence. iPhone users can easily switch between categorized and traditional list views, but iPad and Mac users are limited to the standard chronological inbox layout.

This was so odd during the beta cycle, and it continues to be the single decision I find the most perplexing in Apple’s launch strategy for Apple Intelligence.

I didn’t cover Mail’s new smart categorization feature in my story about Apple Intelligence for one simple reason: it’s not available on the device where I do most of my work, my iPad Pro. I’ve been able to test the functionality on my iPhone, and it’s good enough: iOS occasionally gets a category wrong, but (surprisingly) you can manually categorize a sender and train the system yourself.

(As an aside: can we talk about the fact that a bunch of options, including sender categorization, can only be accessed via Mail’s…Reply button? How did we end up in this situation?)

I would very much prefer to use Apple Mail instead of Spark, which offers smart inbox categorization across platforms but is nowhere near as nice-looking as Mail and comes with its own set of quirks. However, as long as smart categories are exclusive to the iPhone version of Mail, Apple’s decision prevents me from incorporating the updated Mail app into my daily workflow.