iPhone photography has come a long way in the past 13 years. The original iPhone had a 2 MP camera that produced images of 1600 x 1200 pixels. Today, the iPhone 12 Pro's wide-angle camera has a 12 MP sensor that captures shots of 4032 x 3024 pixels.

Hardware advancements have played a big role in iPhone photography, but so has software. The size of an iPhone and the laws of physics limit hardware advances, so each year's gains are smaller than the last. As a result, Apple and other phone makers have turned to computational photography, bringing the power of modern SoCs to bear to improve the quality of iPhone images with software.

Computational photography has advanced rapidly, pushed forward by the increasingly capable chips inside our iPhones. Every time you take a photo with your iPhone, it's actually taking several, stitching them together, using AI to compute adjustments that make the image look better, and presenting you with a final product. The process feels instantaneous, but it's the result of many steps that begin even before you press the shutter button.

However, the simplicity and efficiency of computational photography come with a tradeoff. The pipeline that runs from the moment you press the Camera app's shutter button until you see the finished image involves a long series of steps, and each of those steps involves judgment calls that apply someone else's taste about how the photo should look.

Apple has made great strides in computational photography in recent years, but it also means someone else’s taste is being applied to your images. Source: Apple.

In many circumstances, the Camera app's editorial choices result in great photos, but not always. The trouble is that your ability to tweak images captured in compressed file formats is limited. A more flexible alternative is to shoot in a RAW file format, which preserves more data and allows a greater range of editing options, but the friction of editing RAW images often isn't worth it. The Camera app is good enough most of the time, so we tolerate the shots that don't look great.

However, what if you could have the best of both worlds? What if you could capture a lightweight, automatically adjusted photo and an editing-friendly RAW image at the same time, letting you pick the right one for each shot? If you like the JPEG or HEIC image produced by Apple's computational photography workflow, you could keep it, but you could always fall back to the RAW version when you want more editing latitude. That way, you could rely on the editorial choices baked into iOS when you like the results but retain control for the times when you don't.

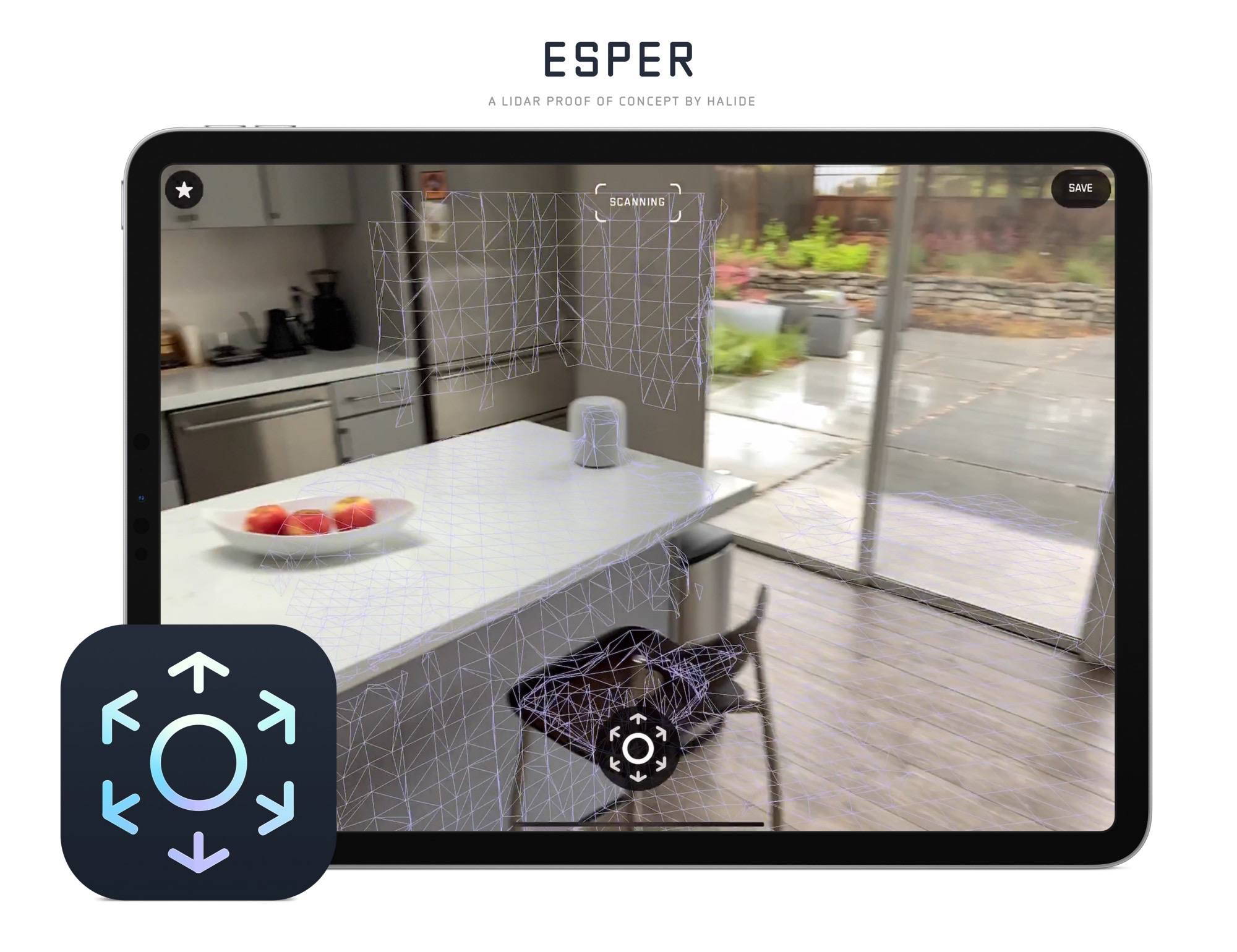

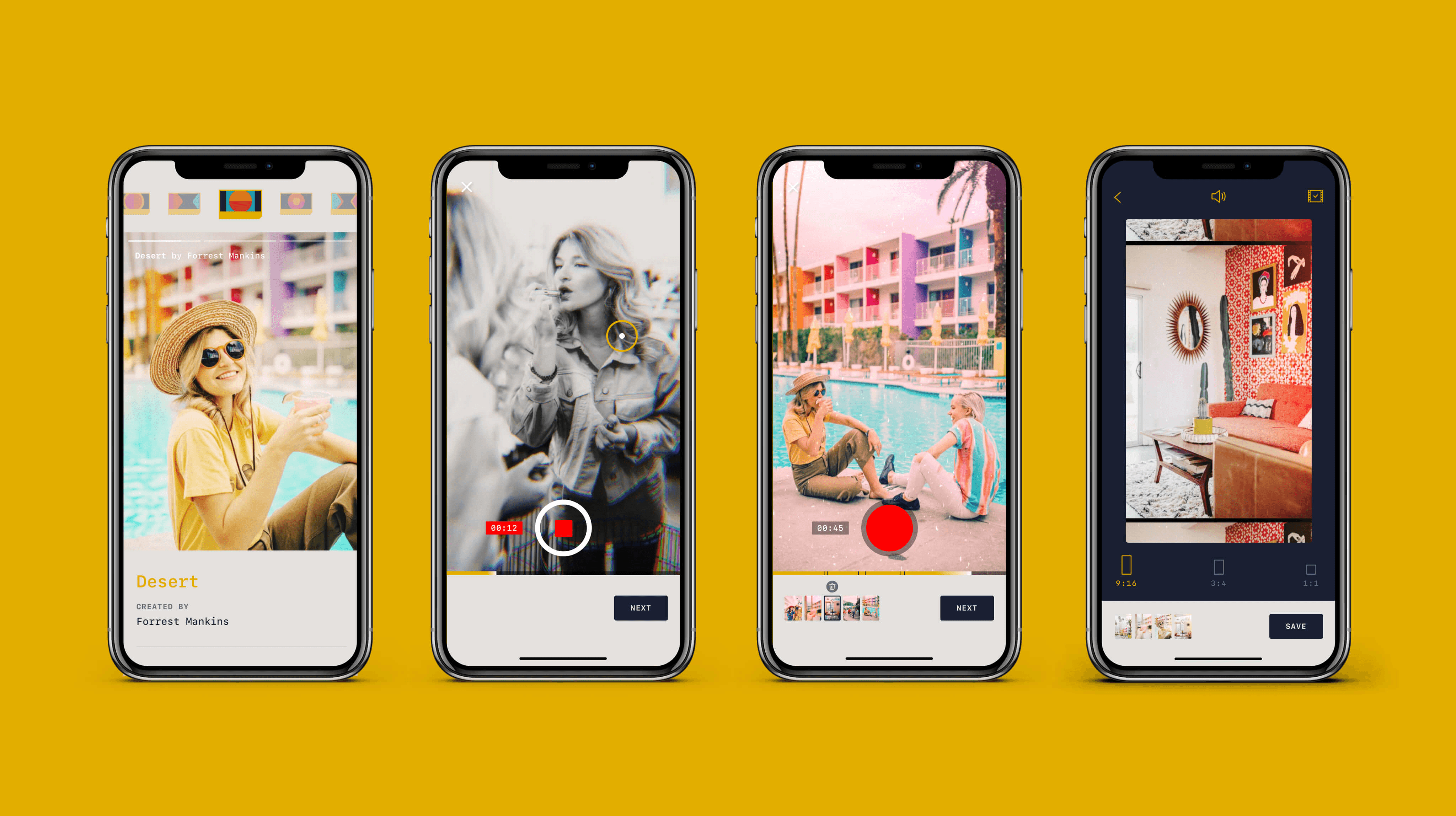

That's what Halide Mark II by Lux sets out to accomplish. Halide is a MacStories favorite that we've covered many times in the past, but Mark II is something special. The update is an ambitious reimagining of what was already a premier camera app, building on what came before but with a simpler, easier-to-learn UI. Halide Mark II puts more control than ever into the hands of photographers while also making it easy to achieve beautiful results with minimal effort. Halide also seeks to educate through a combination of design and upcoming in-app photography lessons.

By and large, Halide succeeds. Photography is a notoriously complex, jargon-heavy field, and it's still possible to get bogged down fretting over which settings are best in which circumstances. However, of any camera app I've used, Halide provides the most effective bridge from point-and-shoot photography to something far more sophisticated. The result is a camera app that gives iPhone photographers control over the images they shoot, is a pleasure to use, and encourages them to learn more and grow as photographers.

![](https://cdn.macstories.net/001/IMG_0551-1603223192212.jpg)