We first reviewed Halide, the third-party camera app by Ben Sandofsky and Sebastiaan de With, when it debuted in the summer of 2017 as a powerful and elegant alternative to Apple’s Camera app, one that fully embraced RAW photography and advanced controls in an intuitive interface. We later showcased Halide’s iPhone X update as one of the most thoughtful approaches to adapting for the device’s Super Retina Display; to this day, Halide is a shining example of how the iPhone X’s novel form factor can aid, rather than hinder, complex app UIs.

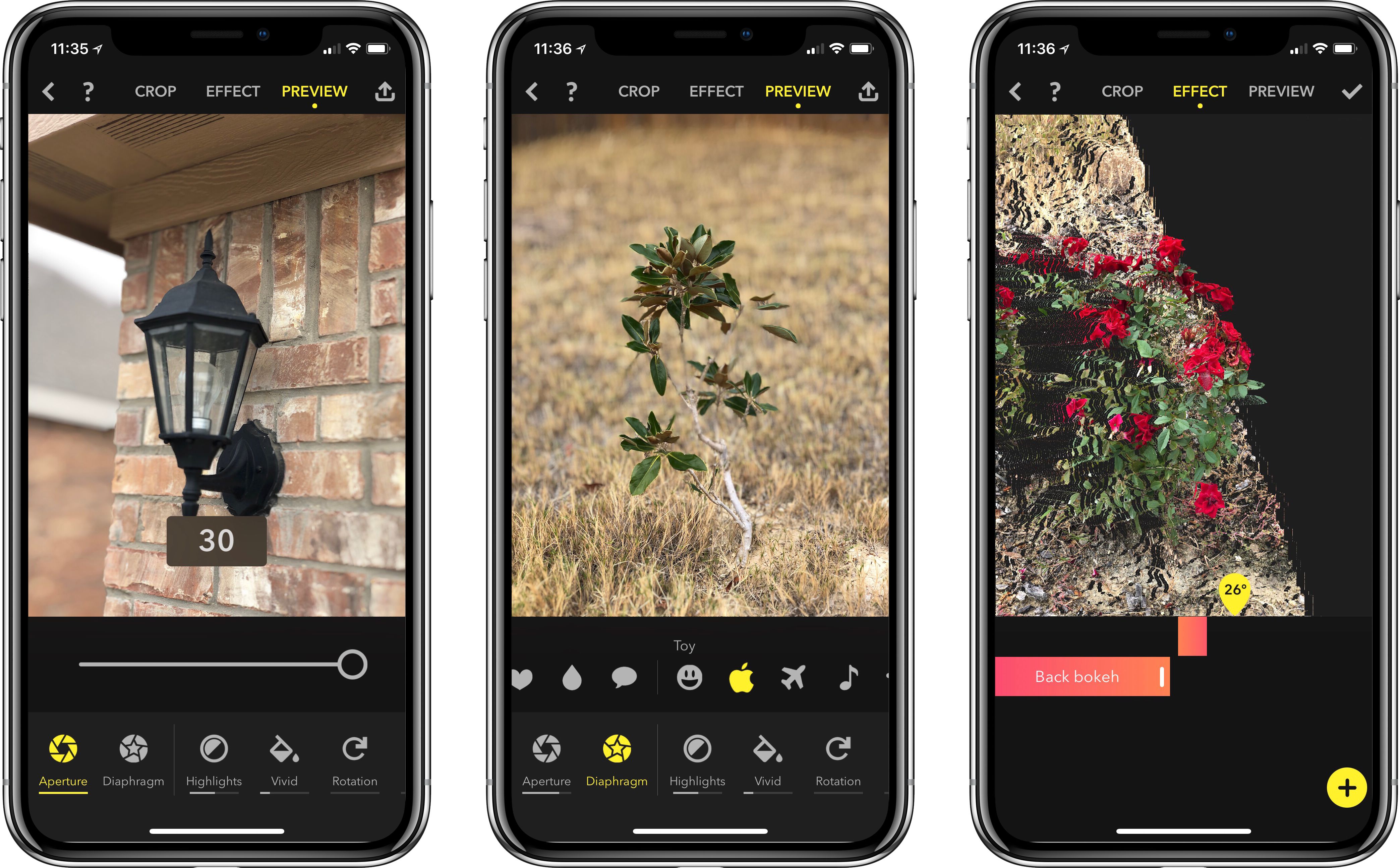

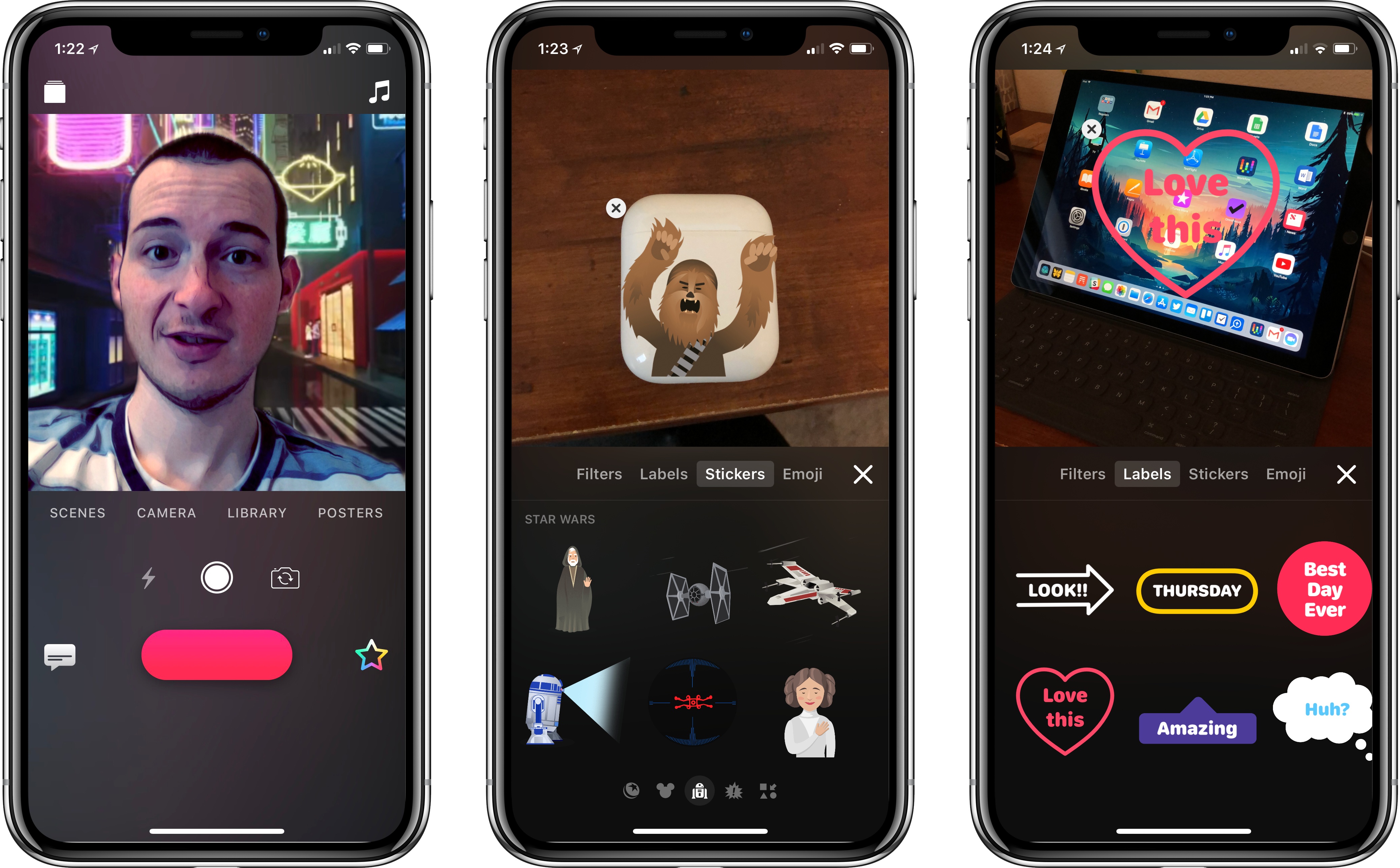

While Halide was already regarded as an appealing alternative to Apple’s stock app for professional photographers and RAW-curious iPhone users (something that designer de With covered in depth in his excellent guide), it lacked a handful of key features of the modern iPhone photography experience. Sandofsky and de With want to close some of these gaps with today’s 1.7 update, which focuses on bringing the power of Portrait mode to Halide, supporting the iPhone X’s TrueDepth camera system, and extending the app’s integrations via a special ARKit mode, new export options, and native integration with the popular Darkroom photo editing tool.