Just over a year ago, I wrote about the poor performance of TestFlight, the app that App Store developers rely on for beta testing their own apps. Today, thanks to a couple of rounds of Feedback submissions, TestFlight is working better than before, but it’s not fixed. With WWDC around the corner, I thought I’d provide a quick update and share a few suggestions for fixes and features I’d like to see Apple implement.

One of the benefits of writing about TestFlight last year was that it became clear to me that, although my use of the app was unique, I wasn’t alone. Other writers who test a lot of apps and super fans who love trying the latest versions of their favorite apps got in touch sharing similar experiences, which convinced me that the issue was related to the number of betas I had in TestFlight. My experience was one of the worst, but with others in a similar boat, I took the time to file a Feedback report to see if there was anything that could be done to improve TestFlight.

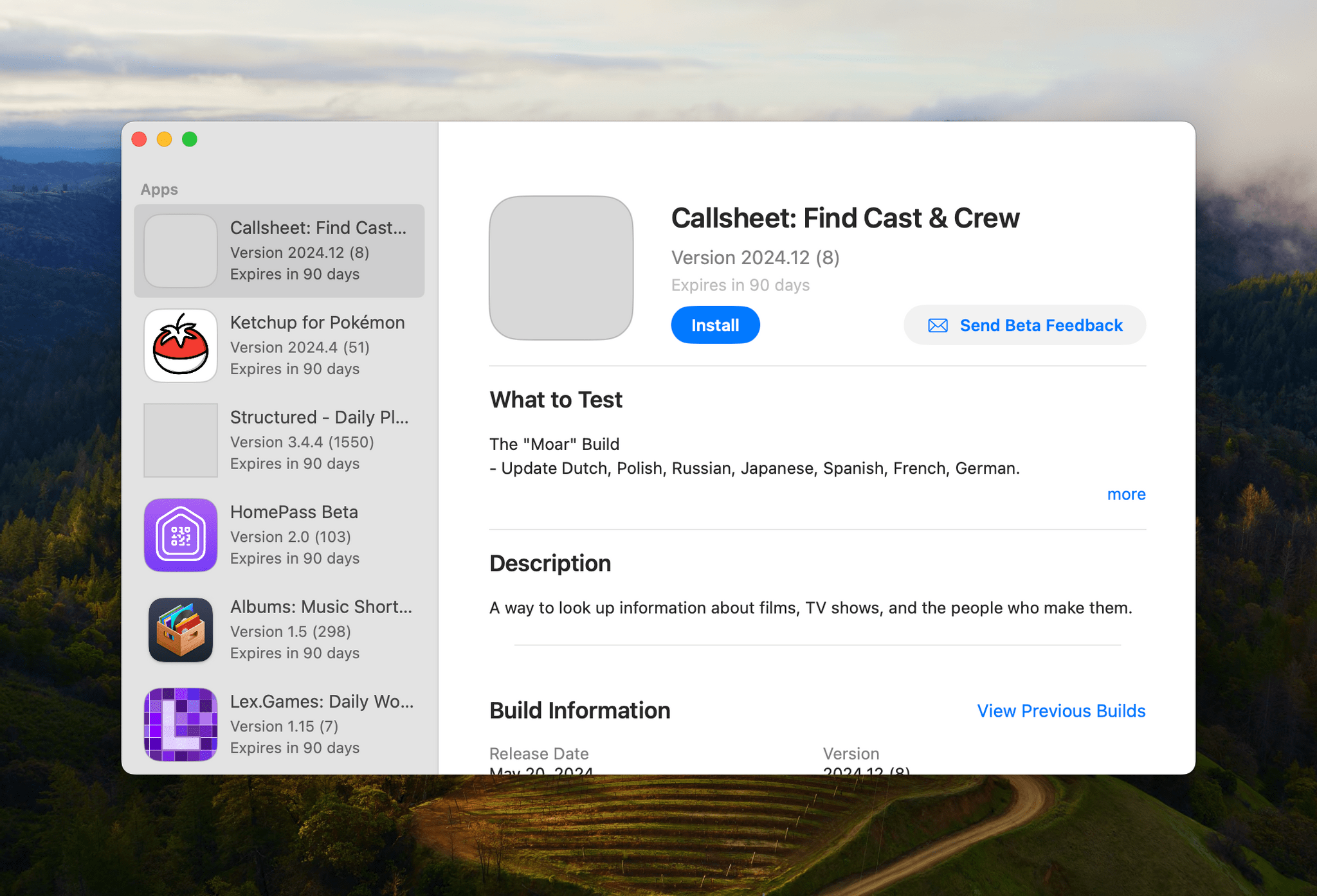

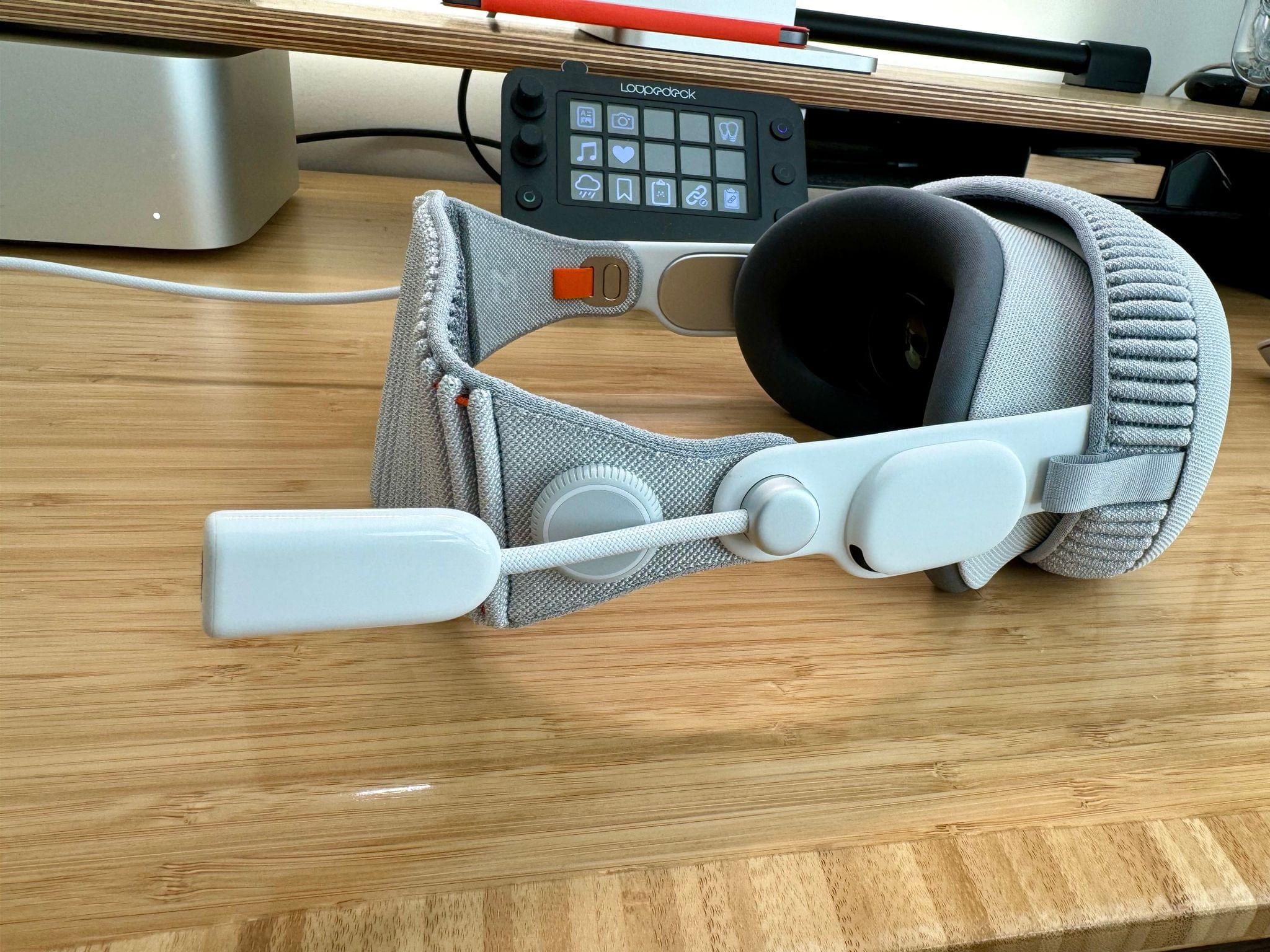

That initial Feedback attempt ultimately went nowhere. Then, I got busy and resigned myself to getting by as best I could. However, getting by was no longer an option as the Vision Pro’s release date approached and added a significant number of new betas to my TestFlight collection. By March, the Mac version of TestFlight had stopped working entirely. With apps lined up in my review queue, that posed a problem I couldn’t work around.

I removed inactive betas using my iPhone and removed myself from testing as many active betas as I could bear. However, nothing worked, so I filed another report with the black box known as Feedback. Fortunately, this time, it worked. After some back-and-forth sharing logs and screen recordings of TestFlight failing to load any content, I received a message that something had been adjusted on Apple’s end to shake things loose. Just like that, TestFlight was working again, although sluggishly.

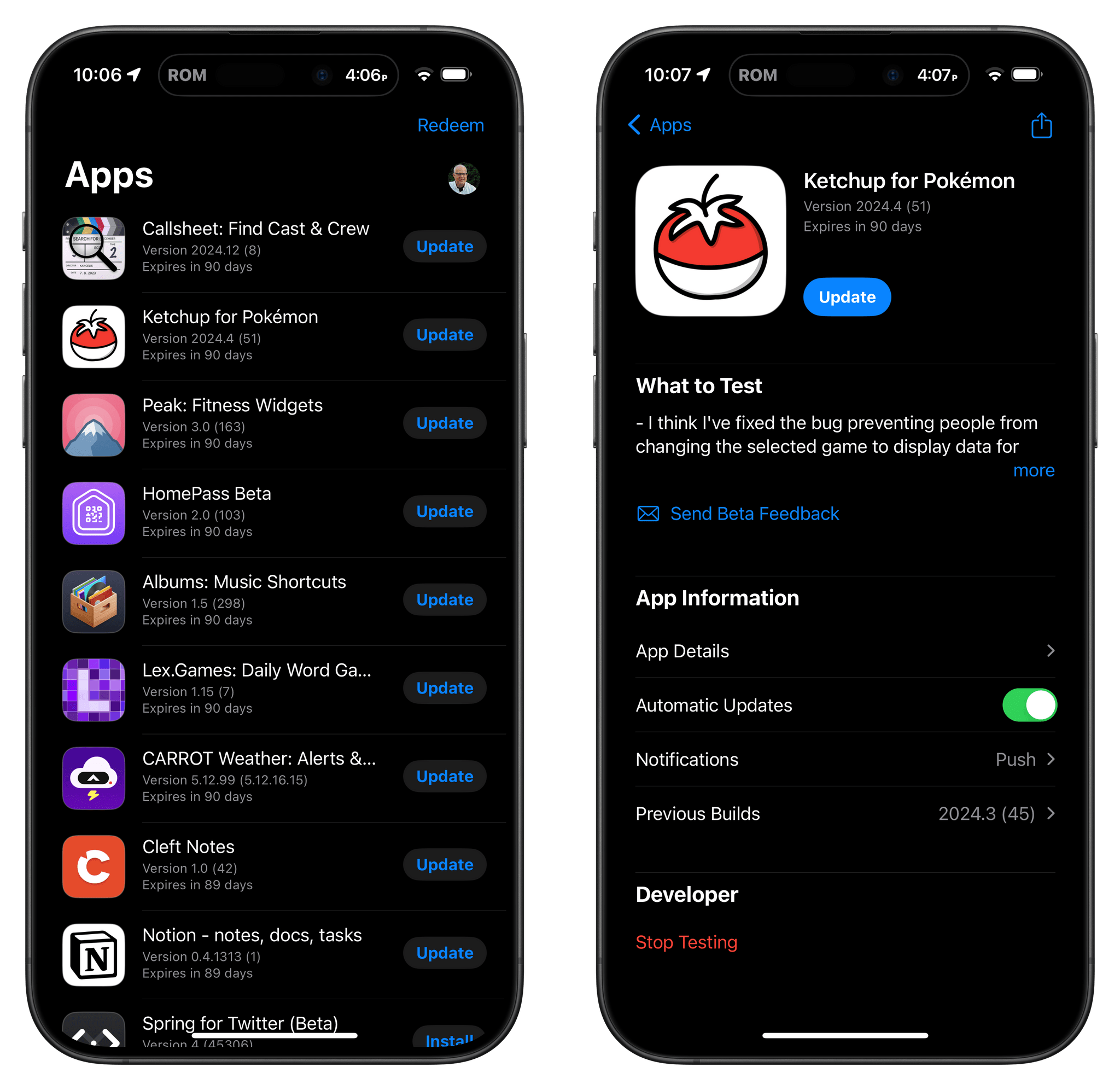

My immediate problem is fixed, and I’ve been managing old betas more carefully to avoid a repeat of what happened on the Mac. However, it’s clear that TestFlight needs more than just the quick fix that solved the worst of my problems. First of all, although TestFlight works again on my Mac, it’s slow to load on all OSes and clearly needs work to handle larger beta collections more gracefully. And there’s a lot of other low-hanging fruit that would make managing large beta collections better on every OS, including:

- the addition of a search field to make it easier to quickly locate a particular app

- sorting by multiple criteria like developer, app name, and app category

- filtering to allow users to only display installed or uninstalled betas

- a single toggle in the Settings app to turn off all existing and future email notifications of new beta releases

- attention to the automatic installation of beta updates, which has never worked consistently for me

- a versioning system that allows users to see whether the App Store version of an app has caught up to its beta releases

- automatic installation of betas after an OS update or ‘factory restore’ because currently, those apps’ icons are installed, but they are not usable until they’re manually re-installed from TestFlight one by one

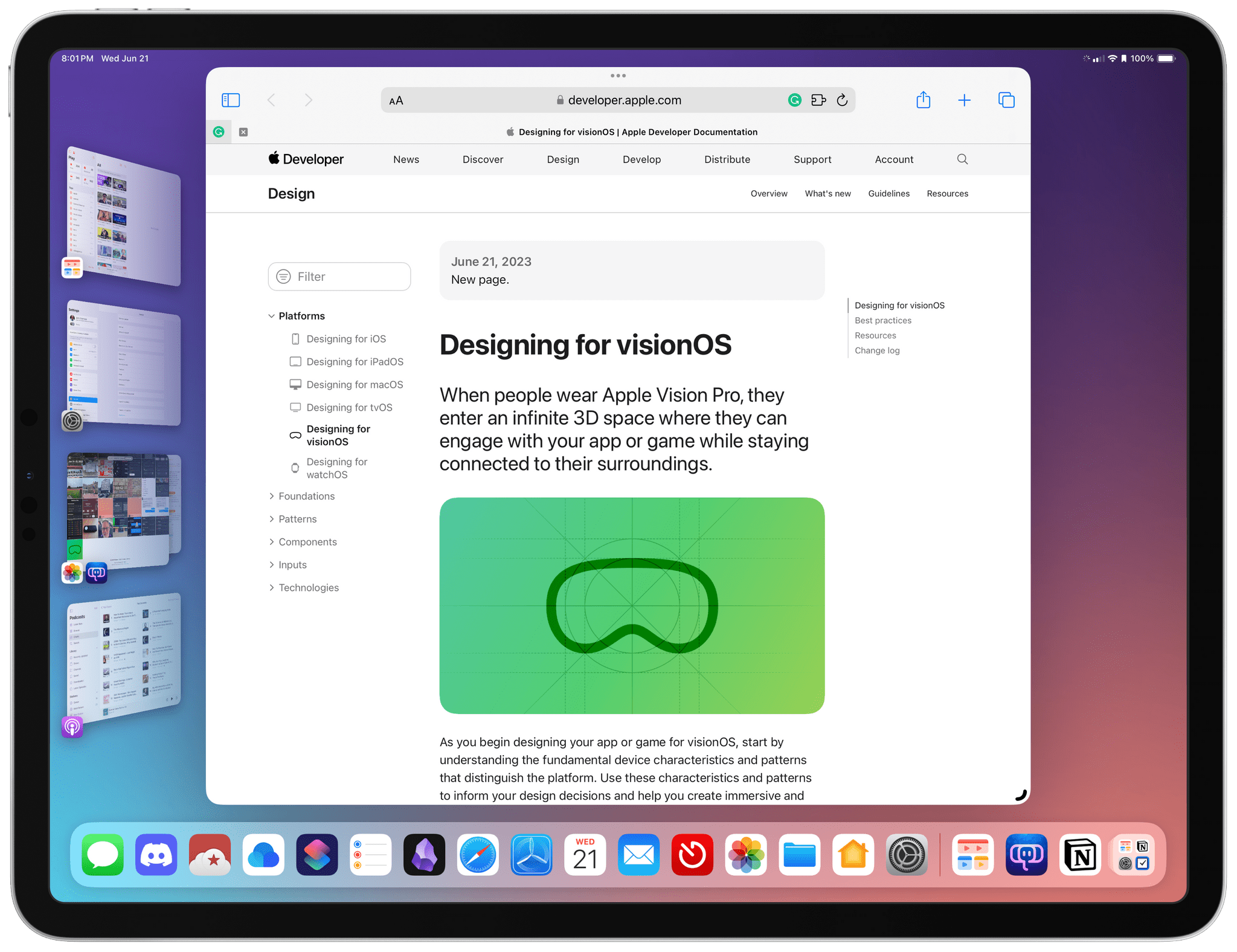

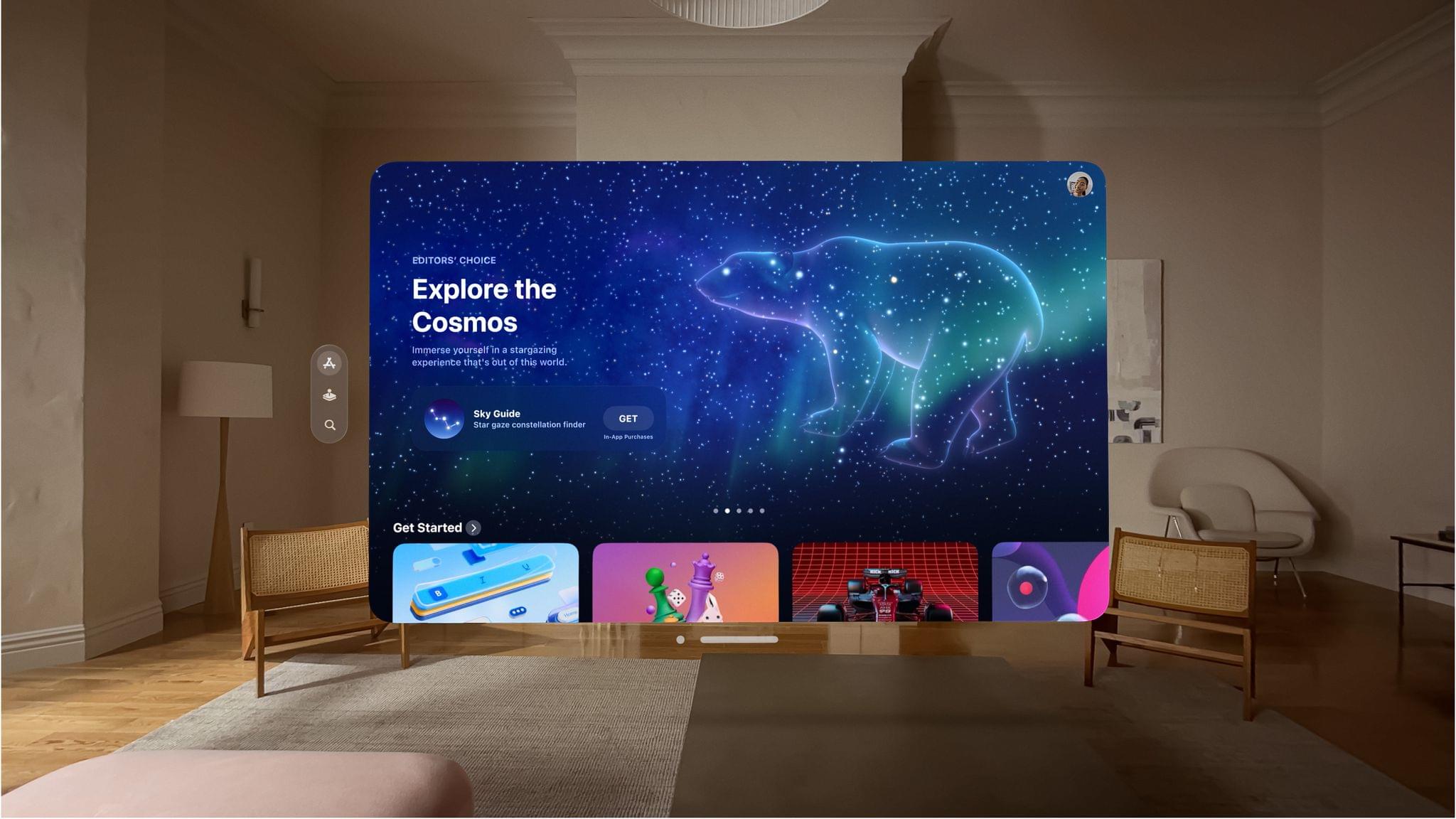

It’s time for Apple to spend some time updating TestFlight beyond the band-aid fix that got it working again for me. It’s been a full decade since Apple acquired TestFlight. Today, the app is crucial to iOS, iPadOS, watchOS, and visionOS development, and while it’s not as critical to macOS development, it’s used more often than not by Mac developers, too. Apple has gone to great lengths to explain the benefits of its developer program to justify its App Store commissions generally and the Core Technology Fee in the EU specifically. TestFlight is just one piece of that program, but it’s an important one that has been neglected for too long and no longer squares with the company’s professed commitment to developers.