MacStories readers and listeners of Connected are no strangers to my criticism of Google's Docs suite on iOS. For months, the company has been unable to properly support the iPad Pro and new iOS 9 features, leaving iOS users with an inferior experience riddled with inconsistencies and bugs.

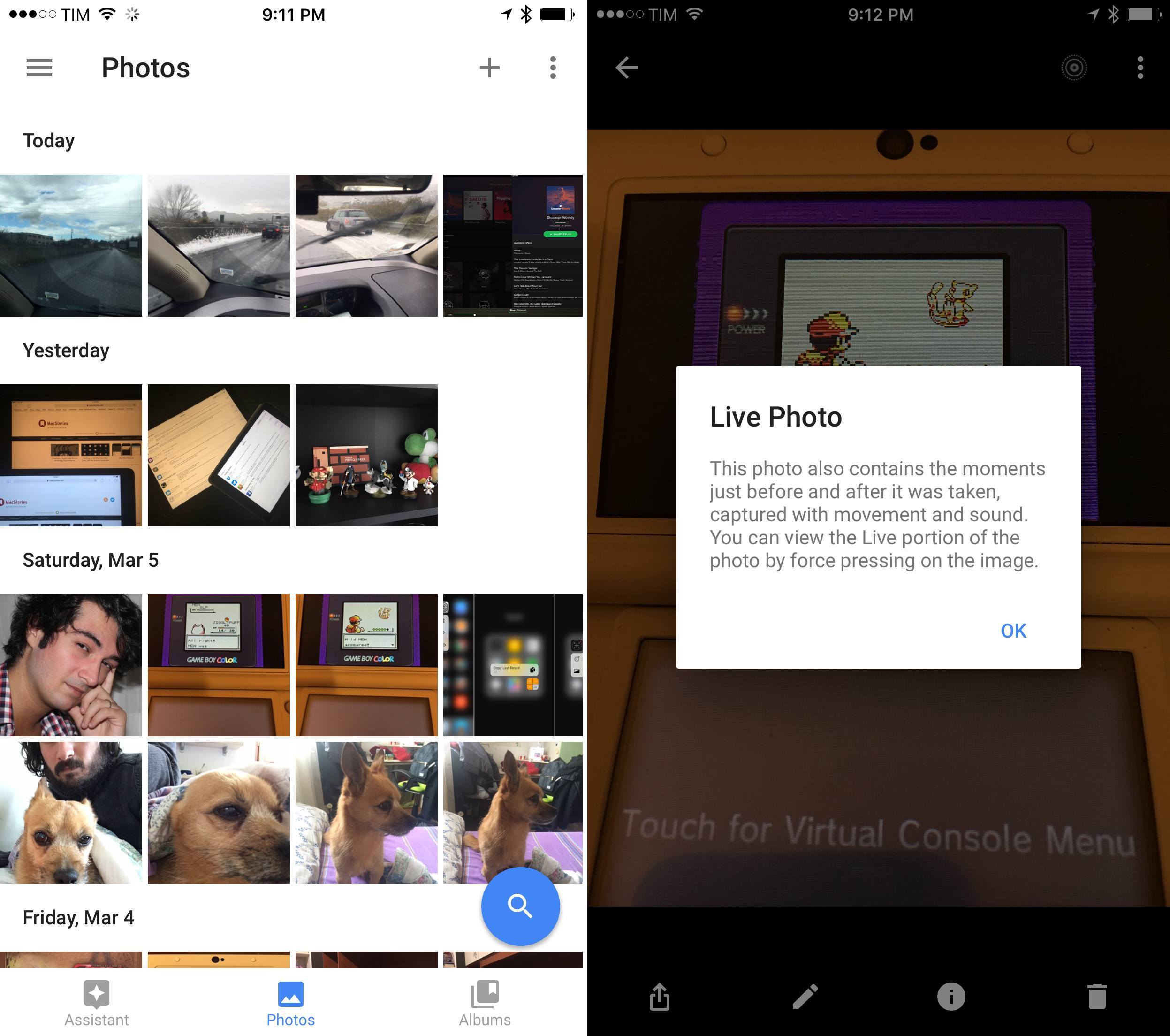

Earlier today, Google brought native iPad Pro resolution support to their Docs apps, meaning you'll no longer have to use stretched-out apps with an iPad Air-size keyboard on your iPad Pro. While this is good news (no one likes to use iPad apps in compatibility mode with a stretched UI), the updates lack a fundamental feature of the post-iOS 9 world: multitasking with Slide Over and Split View. Unlike the recently updated Google Photos app, Docs, Sheets, and Slides can't be used alongside other apps on the iPad, which makes it harder to work efficiently with Google apps on iOS 9.

Today's Google app updates highlight a major problem I've had with Google's iOS software in the past year. One of the long-held beliefs in the tech industry is that Google excels at web services, while Apple makes superior native apps. In recent years, though, many have also noted that Google was getting better at making apps faster than Apple was getting better at web services. Some even said that Google had built a great ecosystem of iOS apps.

Today, Google's iOS apps are no longer great. They're mostly okay, and they fall short in many ways – one of which1 is an unwillingness to recognize that adopting new iOS technologies is an essential step in building solid iOS experiences. The services are still amazing; the apps are too often a downright disappointment.2

No matter the technical reason behind the scenes, a company the size of Google shouldn’t need four months (nine if you count WWDC 2015) to ship a partial compatibility update for iOS 9 and the iPad Pro. Google have only themselves to blame for their lack of attention and failure to deliver modern iOS apps.