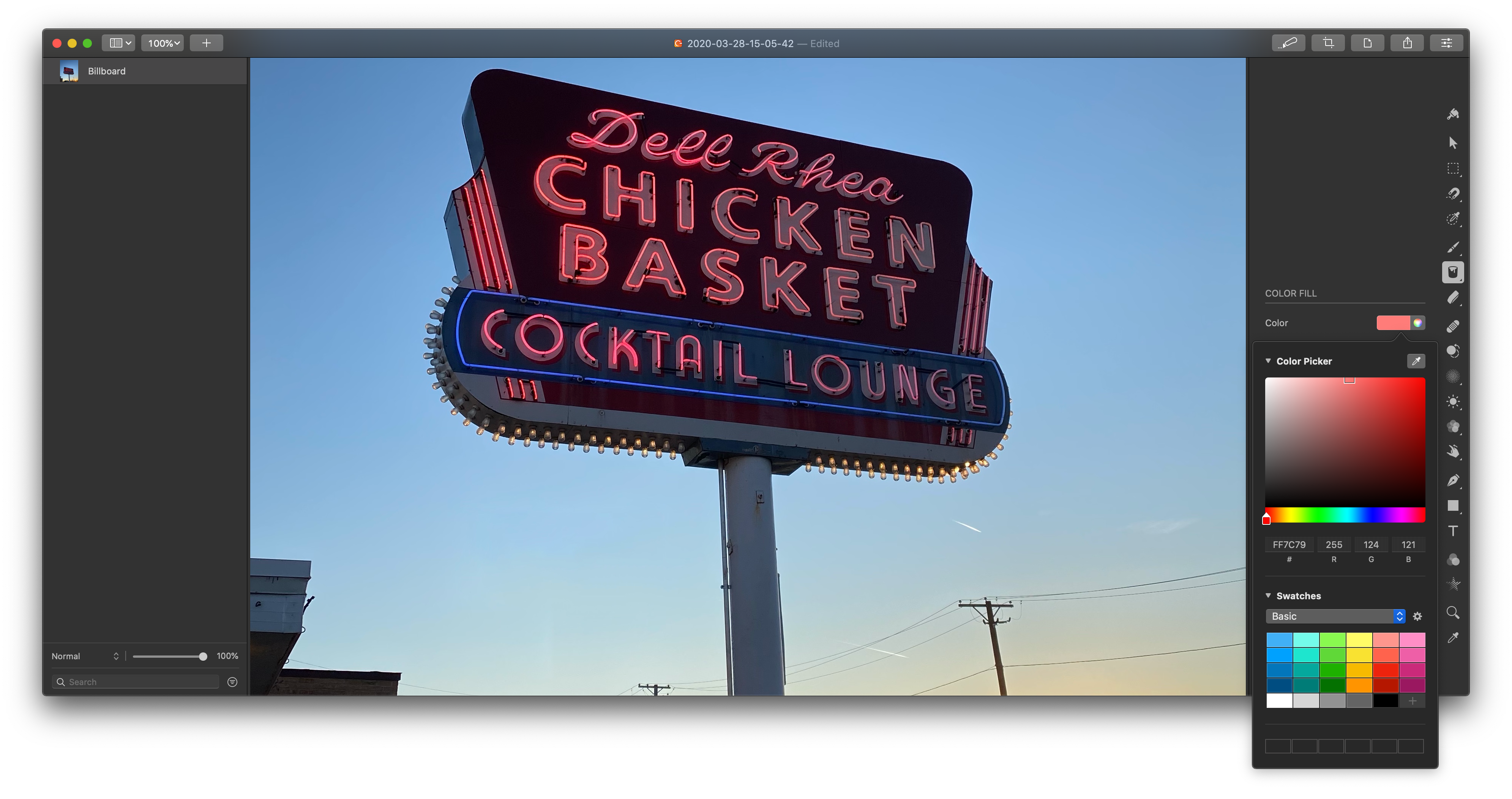

Pixelmator Pro 1.6 has been released with an all-new color picker that streamlines color management in the app, plus an improved way to select multiple objects in an image.

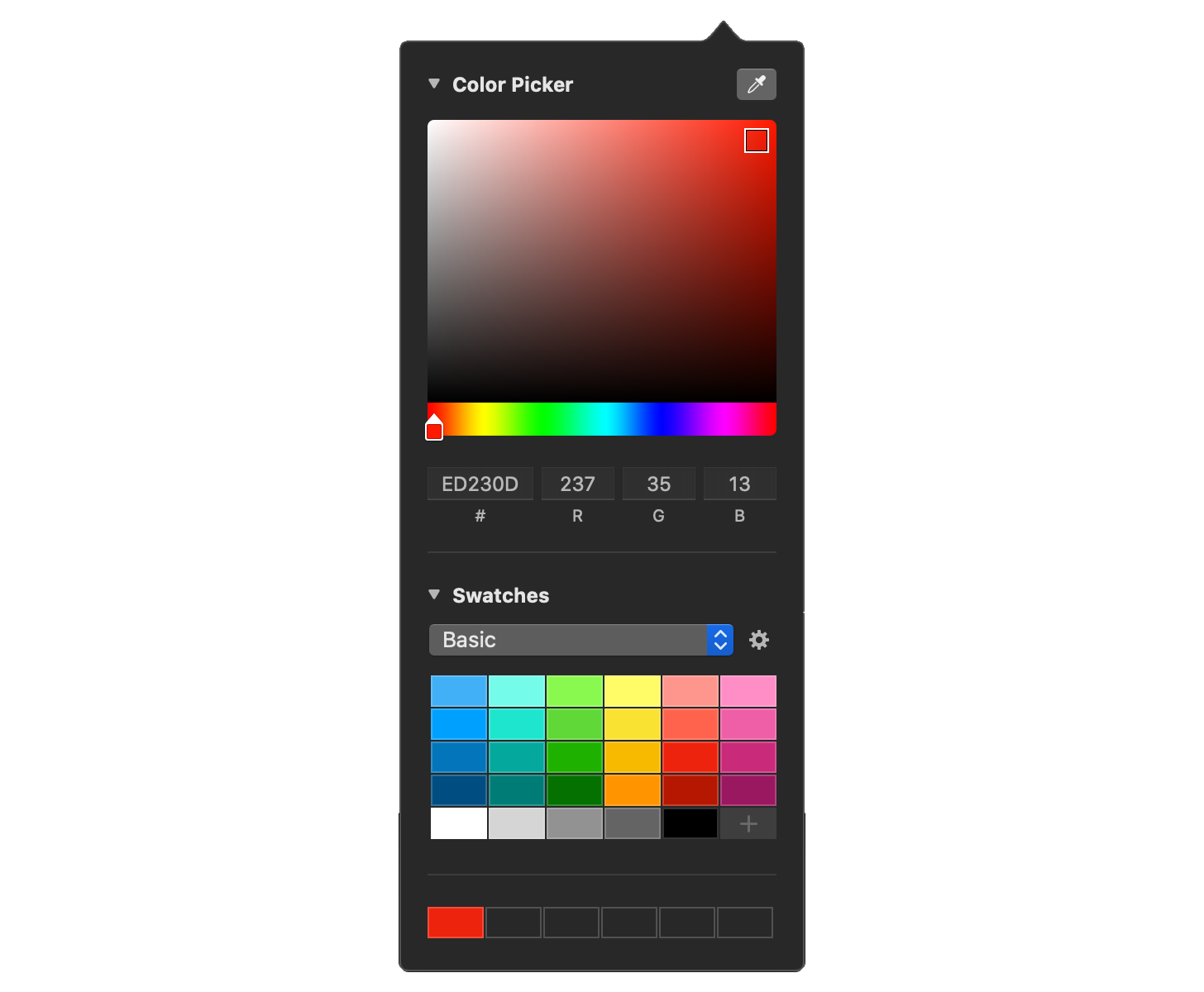

The lion’s share of Pixelmator Pro 1.6 is focused on the app’s new color picker, which consolidates multiple tools in one place. The color square section of Pixelmator Pro’s color picker lets users pick a hue and adjust its saturation and brightness. It also displays HEX and RGB values, provides for the creation of color swatch collections, and shows the six most recently used colors.

There’s also a dedicated color picker tool at the bottom of the tools on the right-hand edge of the app’s window. With the new tool open, the eyedropper remains active, improving the process of picking colors. The new color picker tool also keeps your swatches and color-picking settings available in the sidebar.

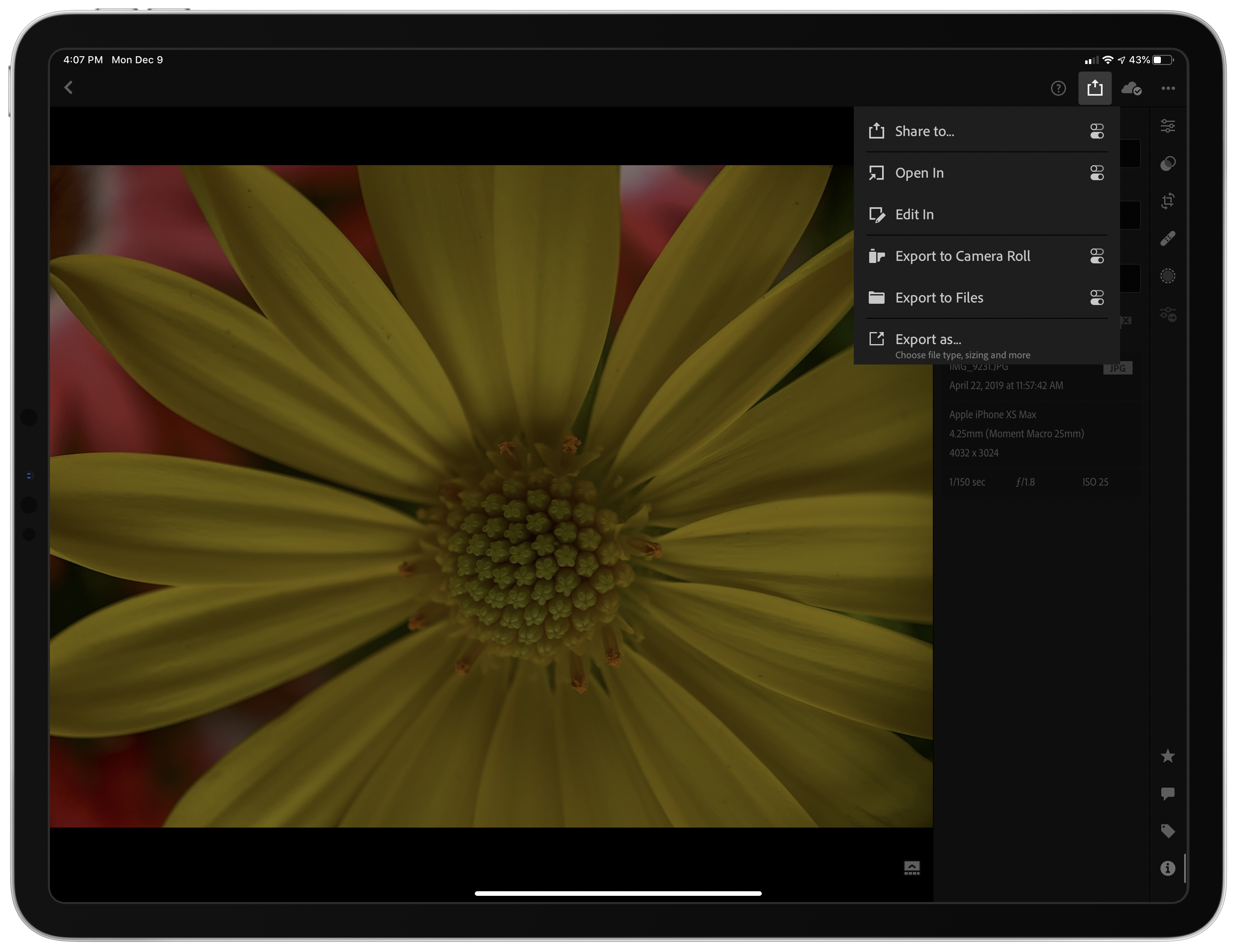

Pixelmator Pro 1.6 includes a number of other refinements too. Among them, multiple objects can now be selected simply by dragging over them, and there’s a new tool that makes it easier to replace missing fonts in a project.

I’ve only spent a short time with Pixelmator Pro 1.6 so far, but even with my limited use, the new color tools are a clear improvement. Consolidating everything into a custom picker makes selecting and managing colors vastly simpler than in the past. To learn more, check out the Pixelmator team’s post, which has additional details about the update.

Pixelmator Pro is available as a free update on the Mac App Store.