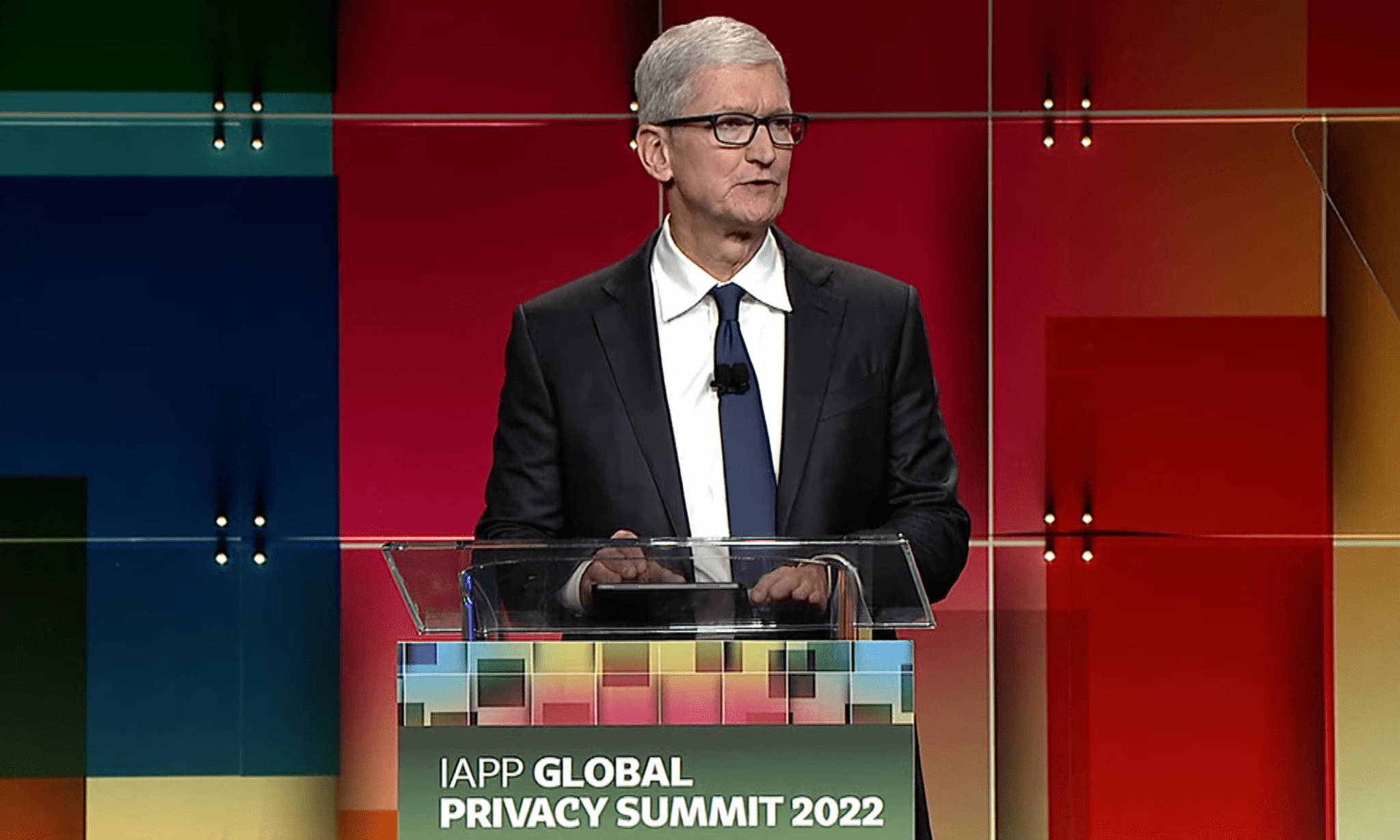

Today at the International Association of Privacy Professionals’ Global Privacy Summit, Apple CEO Tim Cook delivered a keynote speech on privacy. The IAPP’s mission is to act as a resource for privacy professionals to help them in their efforts to manage information privacy risks and protect data for their organizations.

Cook’s speech, which was livestreamed on the IAPP’s YouTube channel, began with a recap of Apple’s efforts to protect user privacy, including App Tracking Transparency, and alluded to the “A Day in the Life of Your Data” white paper the company published early last year.

Cook told the assembled crowd that Apple supports privacy regulation, including GDPR in the EU and privacy laws in the US. However, Cook also expressed concern about the unintended consequences that laws being considered in the US and elsewhere might cause, calling out sideloading proposals in particular. Cook said that although Apple supports competition, alternate app stores and sideloading are not the solution because they would open devices up to apps that sidestep the company’s tracking protections and could expose users to malware.

Concluding his remarks, Cook called on tech companies and governments to work together to fashion policies that don’t undermine user privacy. Ending on an optimistic note, Cook told the gathering that although the world is at a pivotal moment for privacy, the end of privacy as we know it is not inevitable.