iWatch Potential

Bruce “Tog” Tognazzini, Apple employee #66 and founder of the Human Interface Group, has published a great post on the potential of the “iWatch” – a so-called smartwatch Apple could release in the near future (via MG Siegler). While I haven’t been exactly excited by the features offered by current smartwatches – namely, the Pebble and other Bluetooth-based watches – the possibilities explored by Bruce made me think about a future ecosystem where, essentially, the iPhone will “think” in the background and the iWatch will “talk” directly to us. I believe that having bulky smartwatches with high-end CPUs won’t be nearly as important as ensuring a reliable, constant connection between lightweight wearable devices and the “real” computers in our pockets – smartphones.

The entire post is worth a read, so I’ll just highlight a specific paragraph about health tracking:

Having the watch facilitate a basic test like blood pressure monitoring would be a god-send, but probably at prohibitive cost in dollars, size, and energy. However, people will write apps that will carry out other medical tests that will end up surprising us, such as tests for early detection of tremor, etc. The watch could also act as a store-and-forward data collector for other more specialized devices, cutting back the cost of specialized sensors that would then need be little more than a sensor, a Blue Tooth chip, and a battery. Because the watch is always with us, it will be able to deliver a long-term data stream, rather than a limited snapshot, providing insight often missing from tests administered in a doctor’s office.

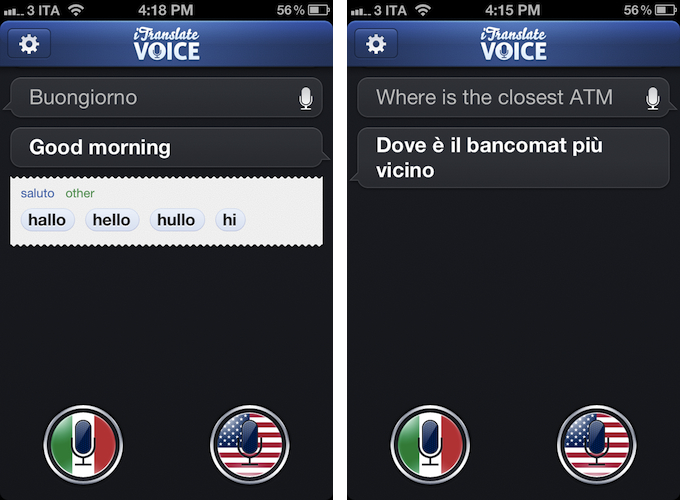

Dealing with all sorts of blood, temperature, and pressure tests on a regular basis, I can tell you that building data sets that span weeks and months – “archives” of a patient with graphs and charts, for instance – still involves too much friction. Monitoring blood pressure is still done with dedicated devices that most people don’t know how to operate. But imagine accurate, industry-certified, low-energy sensors capable of monitoring this kind of data and sending it back automatically to an iPhone for further processing, and you can see how friction could be removed while a) making people’s lives better and b) building data sets that don’t require any user input (you’d be surprised to know how much information can be extrapolated from the combination of “simple” tests like blood pressure monitoring and body temperature).

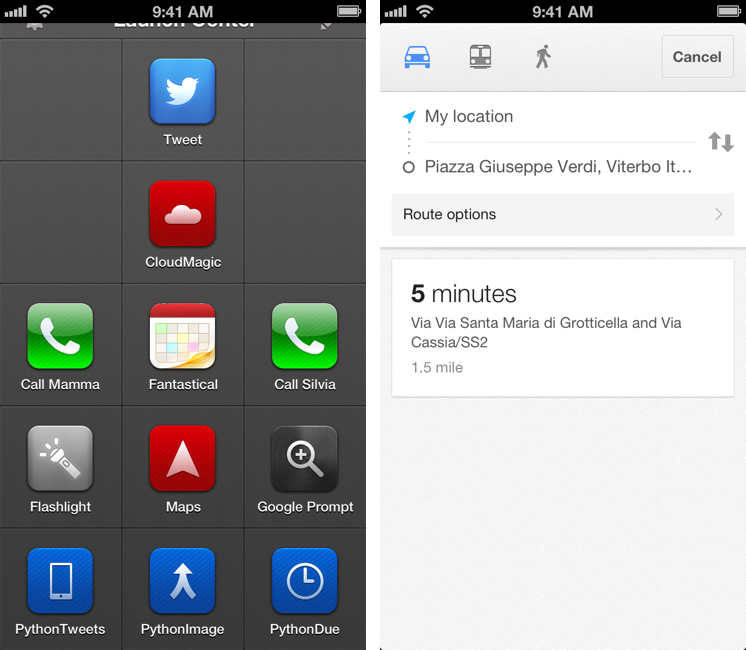

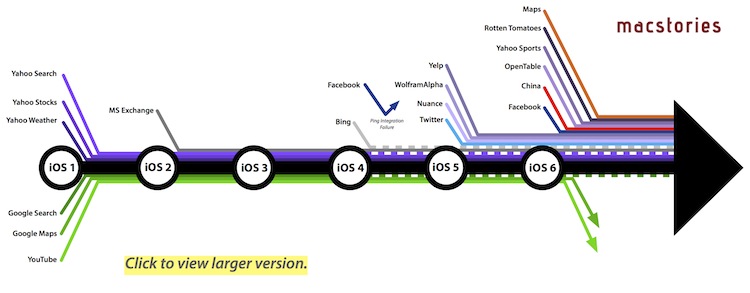

The health aspect of a possible “iWatch” is just one side of a device that Apple may or may not release any time soon. While I’m not sure about some of the ideas proposed by Bruce (passcode locks seem overly complex when the devices themselves could have biometric scanners built in; Siri conversations in public still feel awkward and the service is far from responsive, especially on 3G), I believe others are definitely in the realm of the technologically feasible and actually beneficial to users (and Apple). Imagine crowdsourced data from the iWatch applied to Maps, or the iWatch being able to “tell us” about upcoming appointments or reminders when we’re driving so we won’t have to reach for an iPhone (combine iWatch vibrations and an “always-on” display with Siri Eyes Free and you get the idea).

As our iPhones grow more powerful and connected with each generation, I like to think that, in a not-so-distant future, some of that power will be used to compute data from wearable devices that have a more direct connection to us and the world around us.