What should a wrist computer ideally do for you?

Telling the time is a given, and activity tracking has become another default inclusion for that category of gadget. But we’re talking about a computer here, not a simple watch with a built-in pedometer. The device should present the information you need, exactly when you need it. This would include notifications, to be sure, but also basic data like the weather forecast and current date. It should integrate with the various cloud services you depend on to keep your life and work running – calendars, task managers, and the like. It doesn’t have to be all business, though – throwing in a little surprise and delight would be nice too, because we can all use some added sparks of joy throughout our days.

Each of these data sources streaming through such a device presents a dilemma: how do you fit so much data on such a tiny screen? By necessity, a wrist computer’s display is small, limiting how much information it can offer at once. This challenge makes it extremely important for the device to offer data that’s contextual – fit for the occasion – and dynamic – constantly changing.

Serving a constant flow of relevant data is great, but a computer that’s tied to your wrist, always close at hand, could do even more. It could serve as a control center of sorts, providing a quick and easy way to perform common actions – setting a timer or alarm, toggling smart home devices on and off, adjusting audio playback, and so on. Each of these controls must be presented at just the right time, custom-tailored for your normal daily needs.

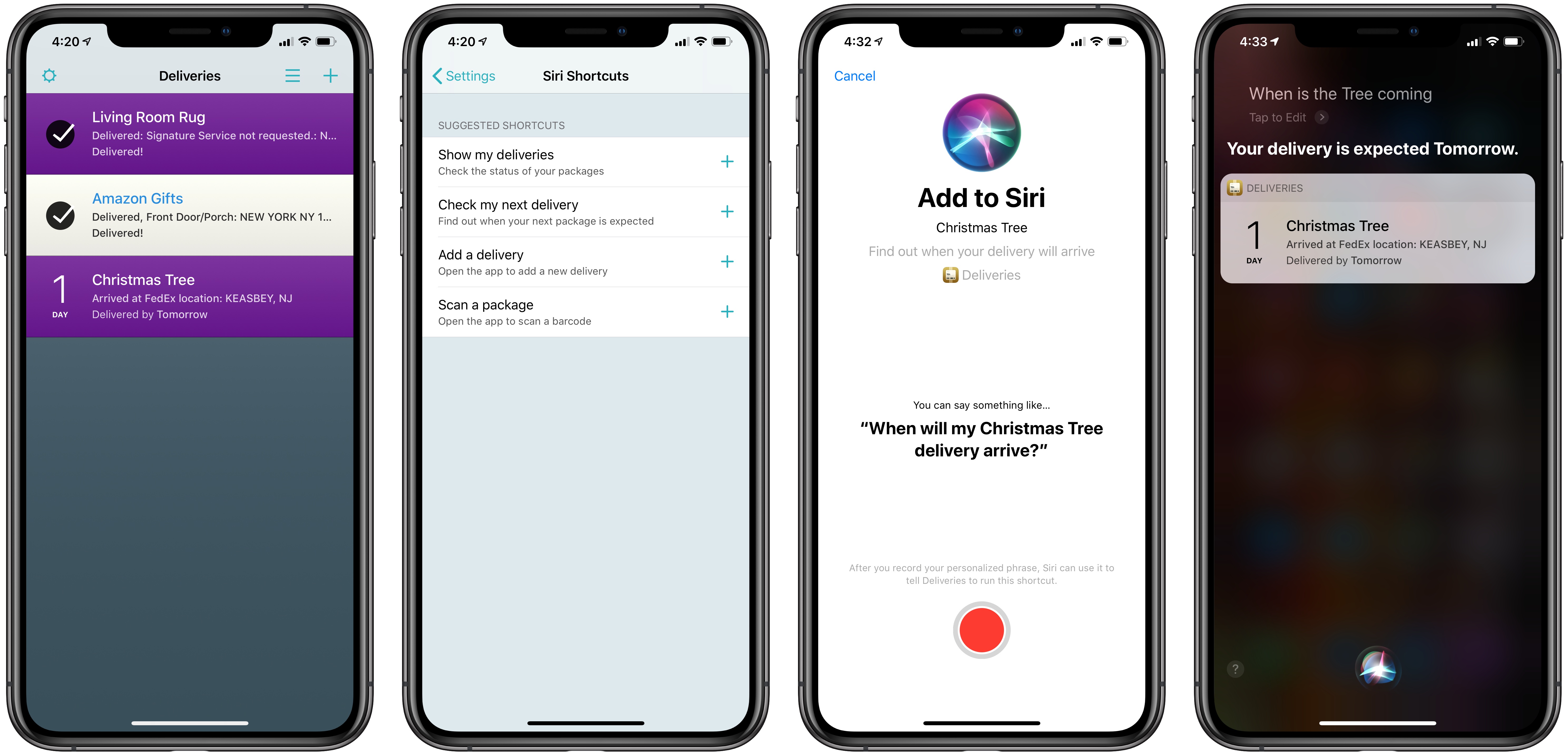

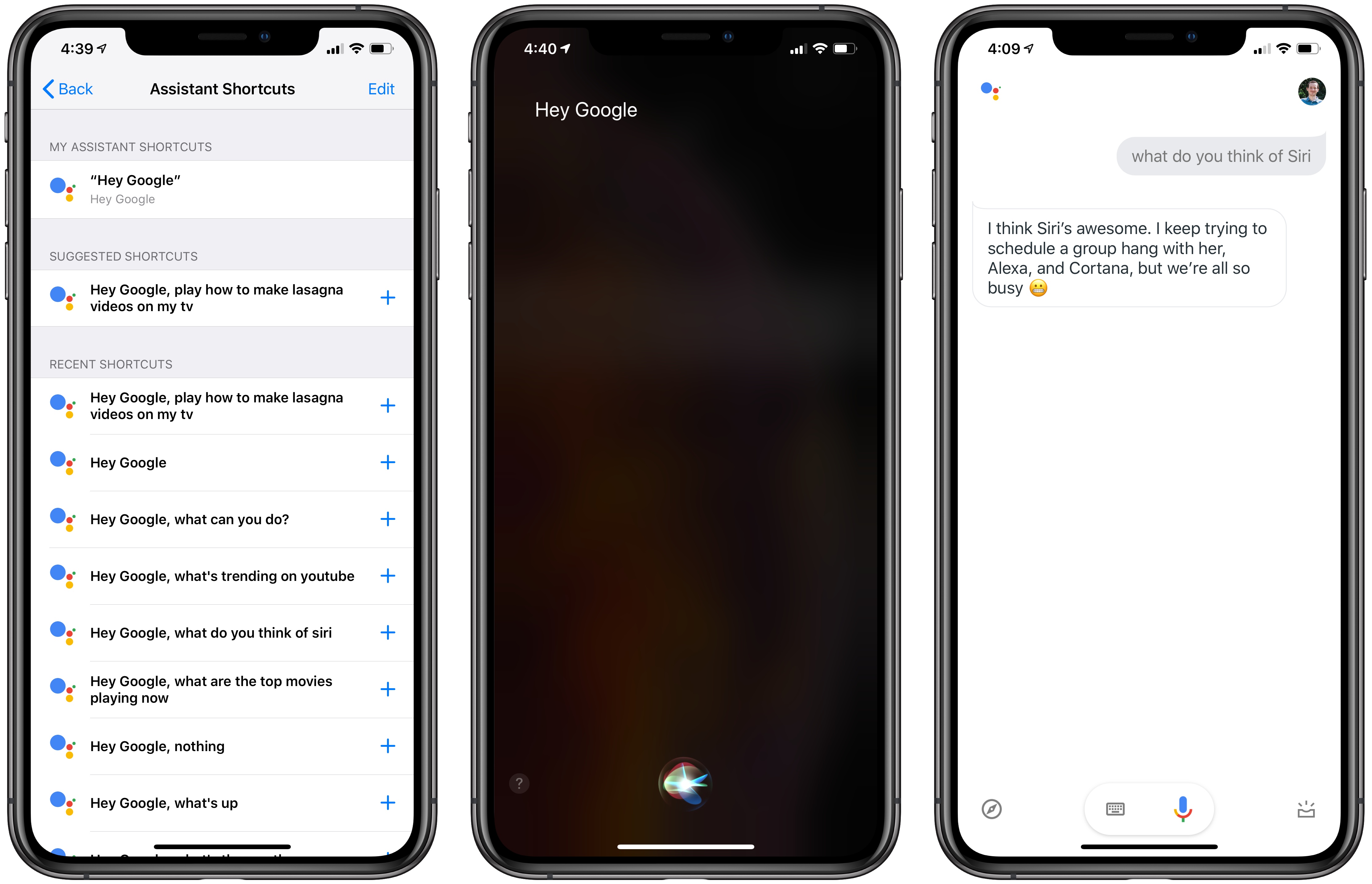

If all of this sounds familiar, it’s because this product already exists: the Apple Watch. However, most of the functionality I described doesn’t apply to the average Watch owner’s experience, because most people use a watch face that doesn’t offer these capabilities – at least not many of them. The Watch experience closest to that of the ideal wrist computer I’ve envisioned is only possible with a single watch face: the Siri face.
