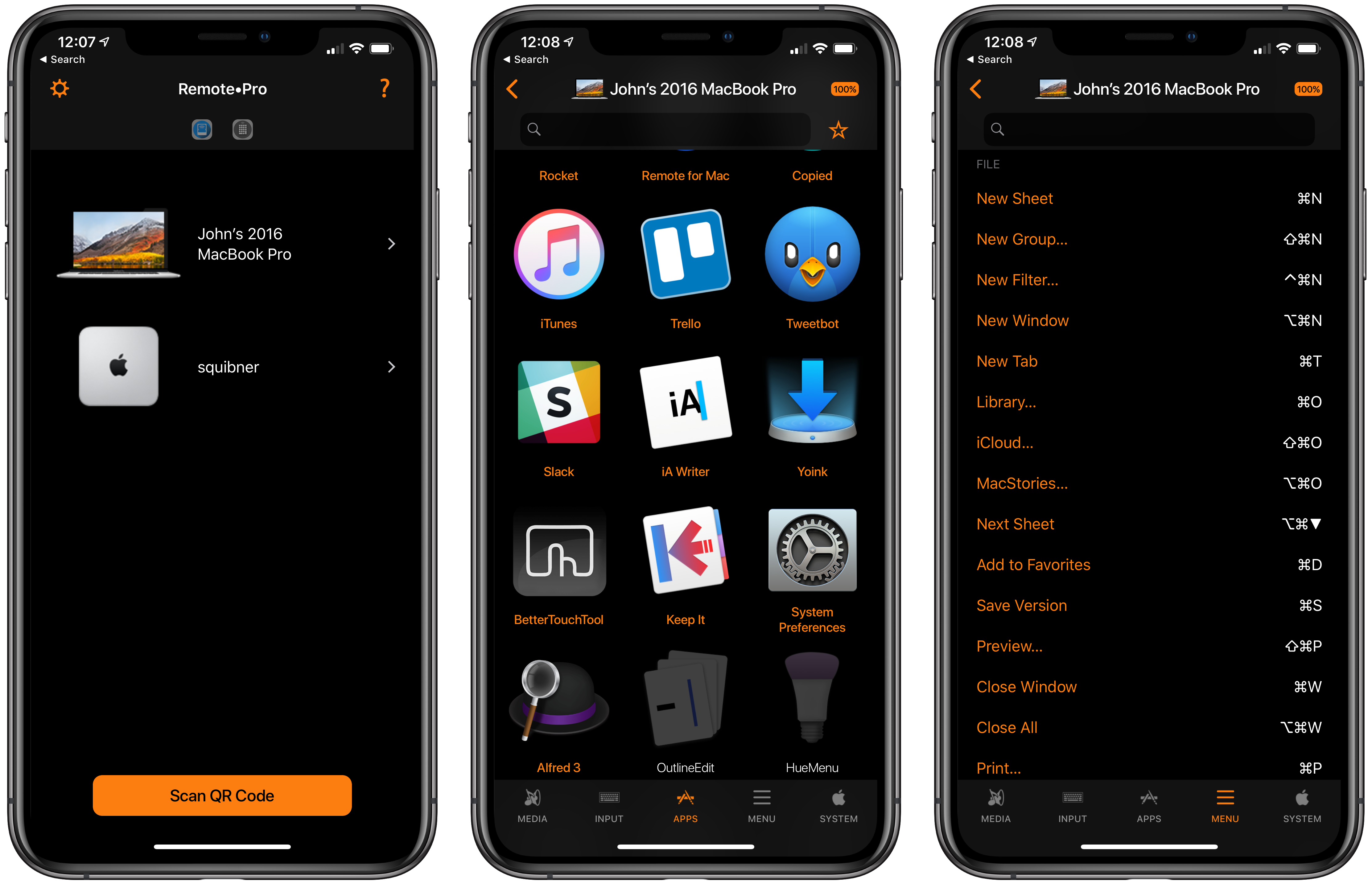

Evgeny Cherpak’s iOS app, Remote Control for Mac, has been updated with Siri shortcut support, which opens up some interesting ways to control a Mac with shortcuts. I’ve been using the app’s new Siri shortcuts for about a week and, as I covered on AppStories today, the shortcuts I’ve created that incorporate Remote’s functionality are already ones that I use every day.

Posts tagged with "siri"

Running a Mac from an iPad or iPhone with Remote Control for Mac

HomeCam 1.5 Adds Shortcuts to View Live HomeKit Camera Feeds in Siri, Search, and the Shortcuts App

I previously covered HomeCam, a HomeKit utility by indie developer Aaron Pearce, as a superior way to watch live video streams from multiple HomeKit cameras. In addition to a clean design and straightforward approach (your cameras are displayed in a grid), what set HomeCam apart was the ability to view information from other HomeKit accessories located in the same room as a camera and control nearby lights without leaving the camera UI. Compared to opening cameras in Apple’s clunky Home app, HomeCam is a nimble, must-have utility for anyone who owns multiple HomeKit cameras and wants to tune into their video feeds quickly. With the release of iOS 12, HomeCam is gaining one of the most impressive and useful implementations of Siri shortcuts I’ve seen on the platform yet.

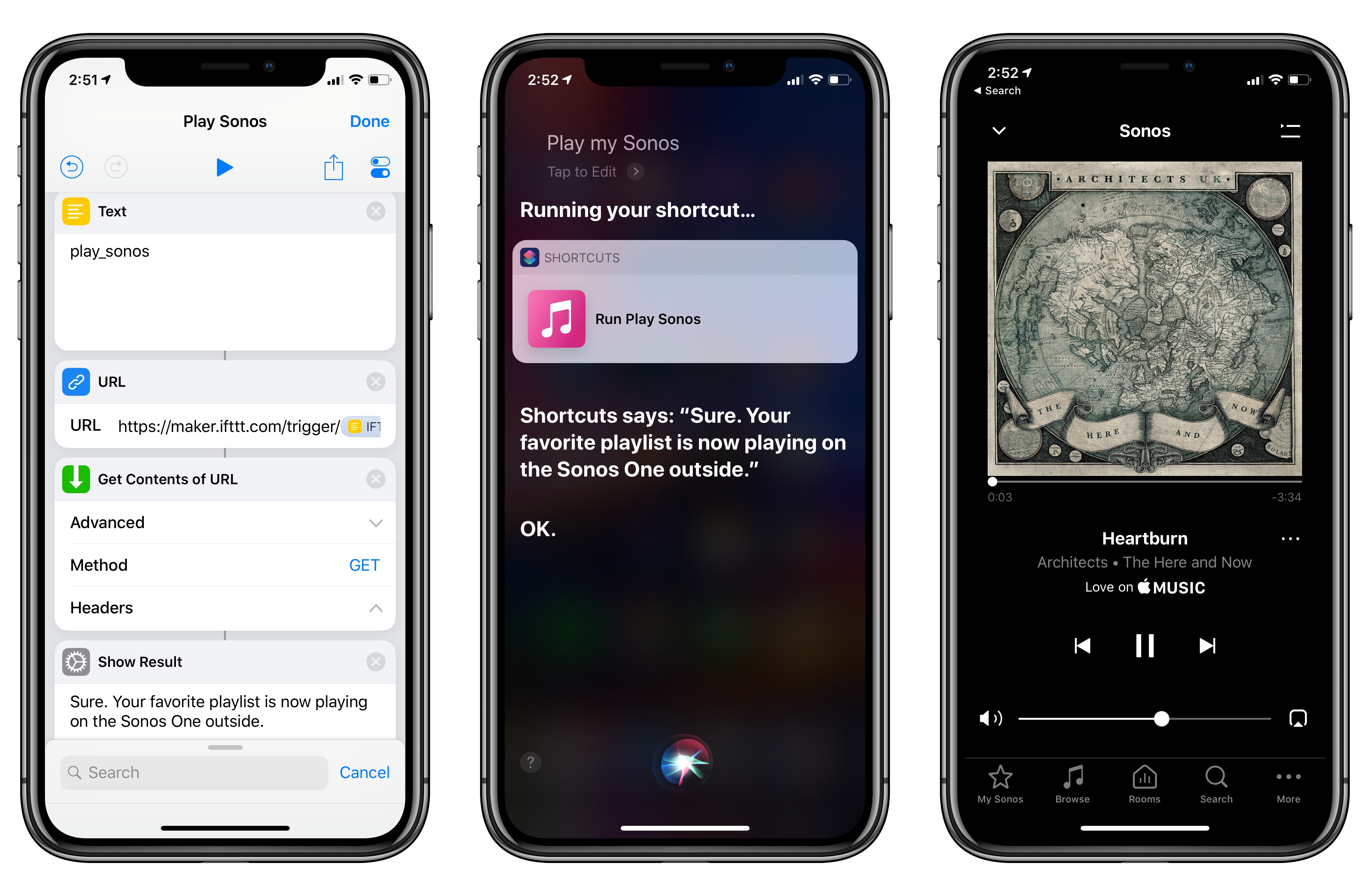

How to Trigger IFTTT Applets with iOS 12’s New Shortcuts App and Siri

Among the actions that didn’t make the transition from Workflow to the new Shortcuts app for iOS 12, built-in support for triggering IFTTT applets (formerly known as “recipes”) is perhaps the most annoying omission. With just a few taps, Workflow’s old ‘Trigger IFTTT Applet’ action allowed you to assemble workflows that combined the power of iOS integrations with IFTTT’s hundreds of supported services. The IFTTT action acted as a bridge between Workflow and services that didn’t offer native support for the app, such as Google Sheets, Spotify, and several smart home devices.

Fortunately, there’s still a way to integrate the just-released Shortcuts app with IFTTT. The method I’m going to describe below involves a bit more manual setup because it’s not as nicely integrated with Shortcuts as the old action might have been. In return, however, you’ll unlock the ability to trigger IFTTT applets using Siri on your iOS devices, Apple Watch, and HomePod – something that was never possible with Workflow’s original IFTTT support. Let’s take a look.
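This excerpt stops before the setup itself. One common way to bridge Shortcuts and IFTTT is IFTTT's Webhooks service, and it is assumed here rather than taken from the article. An applet whose trigger is "Receive a web request" fires when something sends an HTTP request to a URL of the form `https://maker.ifttt.com/trigger/{event}/with/key/{key}`. That request can optionally carry a JSON body with up to three values, `value1` through `value3`. In Shortcuts, that request would come from the Get Contents of URL action. The Python sketch below only illustrates the shape of the request. The event name `log_song`, the key `YOUR_KEY`, and the helpers `build_ifttt_request` and `trigger_ifttt` are hypothetical placeholders, not part of any IFTTT SDK.

```python
import json
import urllib.parse
import urllib.request

# Trigger endpoint of IFTTT's Webhooks service (assumed approach, see lead-in).
IFTTT_WEBHOOKS_BASE = "https://maker.ifttt.com/trigger"


def build_ifttt_request(event, key, value1=None, value2=None, value3=None):
    """Return (url, body) for an IFTTT Webhooks trigger (hypothetical helper).

    `event` is the event name chosen in the applet's "Receive a web request"
    trigger; `key` is your personal Webhooks key. Only the optional values
    that are actually provided end up in the JSON body.
    """
    url = "{}/{}/with/key/{}".format(
        IFTTT_WEBHOOKS_BASE,
        urllib.parse.quote(event, safe=""),  # escape spaces etc. in the event name
        urllib.parse.quote(key, safe=""),
    )
    payload = {
        name: value
        for name, value in (("value1", value1), ("value2", value2), ("value3", value3))
        if value is not None
    }
    return url, json.dumps(payload).encode("utf-8")


def trigger_ifttt(event, key, **values):
    """POST the event to IFTTT; needs network access and a real key."""
    url, body = build_ifttt_request(event, key, **values)
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status, response.read().decode("utf-8")


# Usage with hypothetical placeholders (no network call is made here):
url, body = build_ifttt_request("log_song", "YOUR_KEY", value1="Song title")
print(url)   # https://maker.ifttt.com/trigger/log_song/with/key/YOUR_KEY
print(body)  # b'{"value1": "Song title"}'
```

Keeping URL construction separate from the network call makes the request easy to inspect before wiring the same URL and body into a shortcut. Calling `trigger_ifttt` for real requires your own Webhooks key.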

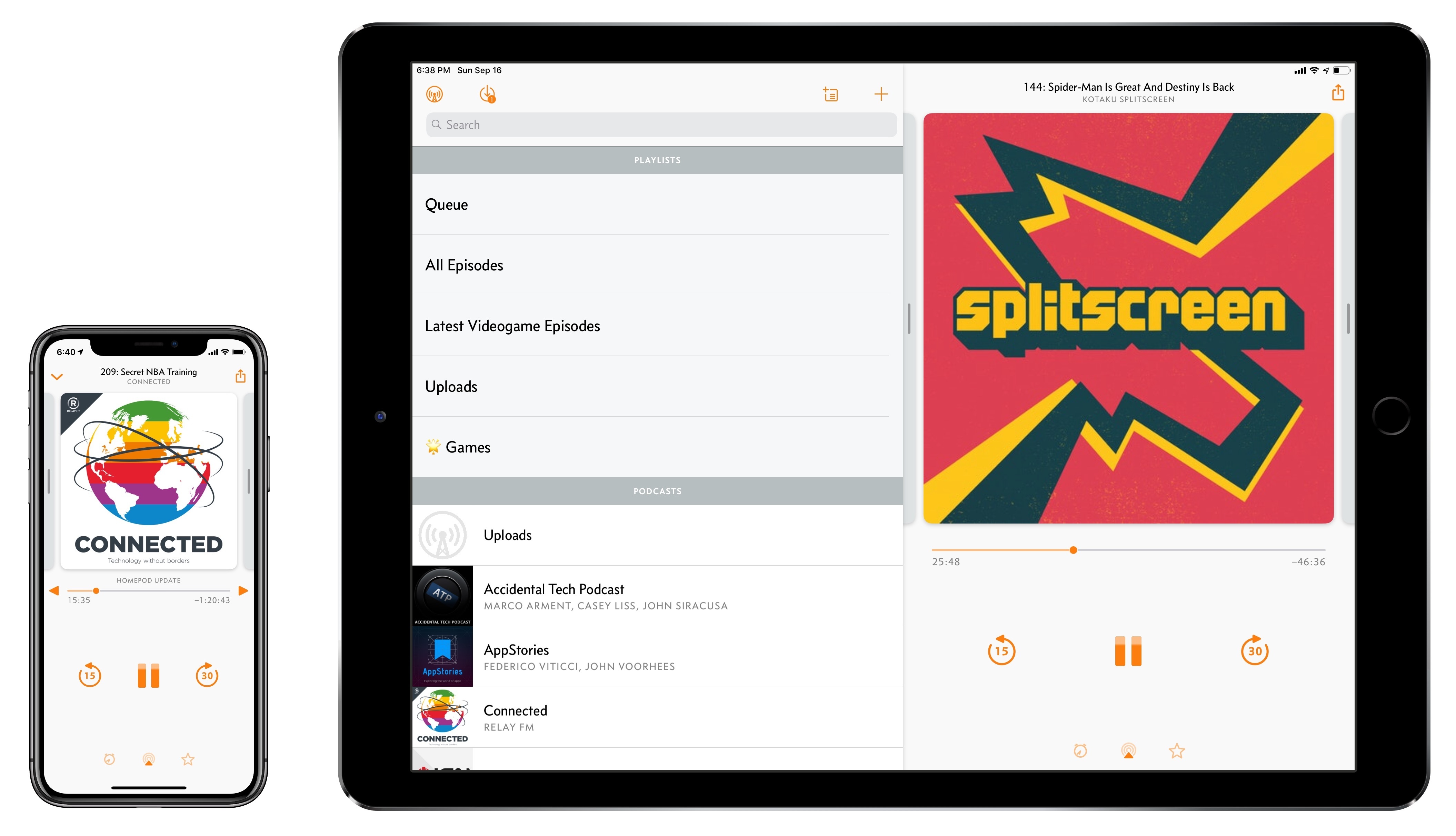

Overcast 5: Redesigned Now Playing Screen, Search, Siri Media Shortcuts, and More

Overcast, Marco Arment’s popular podcast client for iPhone and iPad, received a major update today to version 5. While I’ve long praised Apple’s work on its built-in Podcasts app for iOS – particularly since getting three HomePods and leveraging Podcasts’ support for AirPlay 2 – I also recognize the appeal of Overcast’s advanced features and powerful audio effects. Sprinkled throughout Overcast’s release history are design details and enhancements big and small that make it a sophisticated, versatile client for podcast aficionados who don’t want to settle for a stock app. From this standpoint, despite welcome improvements to Podcasts in iOS 12, changes in Overcast 5 make it an even more attractive option that has caused me to second-guess my decision to embrace Apple’s native app.

Tom Gruber, Co-Founder of Siri, Retires from Apple

The Information reports that Tom Gruber, Apple’s head of the Siri Advanced Developments group, has retired to pursue personal interests including photography and ocean conservation. Gruber joined Apple as part of the company’s acquisition of Siri in 2010 along with his co-founders Dag Kittlaus and Adam Cheyer, who had left Apple in 2011 and 2012, respectively. In addition to Gruber, The Information reports that Vipul Ved Prakash, Apple’s head of search, has left the company. Apple confirmed both departures to The Information.

Siri, which Apple incorporated into iOS in 2011, has been through recent leadership changes as it has fallen behind voice assistants like Amazon’s Alexa and Google Assistant. In 2017, Craig Federighi, Apple’s Senior Vice President of Software Engineering, took over oversight of Siri from Eddy Cue. Just this past May, Apple hired John Giannandrea, Google’s former Chief of Search and Artificial Intelligence, to be Apple’s Chief of Machine Learning and AI Strategy. Last week, Giannandrea showed up on the leadership page on Apple.com, and, according to a TechCrunch story, the Siri team now reports to him.

With all of Siri’s co-founders now gone from Apple, it will be interesting to see in what direction Giannandrea and the Siri team take the company’s voice assistant.

Shortcuts: A New Vision for Siri and iOS Automation

In my Future of Workflow article from last year (published soon after the news of Apple’s acquisition), I outlined some of the probable outcomes for the app. The more optimistic one – the “best timeline”, so to speak – envisioned an updated Workflow app as a native iOS automation layer, deeply integrated with the system and its built-in frameworks. Based on Apple’s announcements at WWDC, my conversations with developers at the conference, and other details I’ve heard about Shortcuts while there, it appears that the brightest scenario is indeed coming true in a matter of months.

On the surface, Shortcuts the app looks like the full-blown Workflow replacement heavy users of the app have been wishfully imagining for the past year. But there is more going on with Shortcuts than the app alone. Shortcuts the feature, in fact, reveals a fascinating twofold strategy: on one hand, Apple hopes to accelerate third-party Siri integrations by leveraging existing APIs as well as enabling the creation of custom SiriKit Intents; on the other, the company is advancing a new vision of automation through the lens of Siri and proactive assistance from which everyone – not just power users – can reap the benefits.

While it’s still too early to comment on the long-term impact of Shortcuts, I can at least attempt to understand the potential of this new technology. In this article, I’ll try to explain the differences between Siri shortcuts and the Shortcuts app, as well as answer some common questions about how much Shortcuts borrows from the original Workflow app. Let’s dig in.

Apple Hires John Giannandrea, Google’s Chief of Search and Artificial Intelligence→

According to The New York Times, Apple has hired John Giannandrea, Google’s chief of search and artificial intelligence. In a memo obtained by The Times, Tim Cook said:

> “Our technology must be infused with the values we all hold dear,” Mr. Cook said in an email to staff members obtained by The New York Times. “John shares our commitment to privacy and our thoughtful approach as we make computers even smarter and more personal.”

Giannandrea joined Google in 2010 as part of the company’s acquisition of Metaweb and is credited with infusing artificial intelligence across Google’s product line. Giannandrea will report directly to Apple CEO Tim Cook.

This is a huge ‘get’ for Apple and comes fast on the heels of reports that the company is hiring over 100 engineers to improve Siri.

Erasing Complexity: The Comfort of Apple’s Ecosystem

Every year soon after WWDC, I install the beta of the upcoming version of iOS on my devices and embark on an experiment: I try to use Apple’s stock apps and services as much as possible for three months, then evaluate which ones have to be replaced with third-party alternatives after September. My reasoning for going through these repetitive stages on an annual basis is simple: to me, it’s the only way to build the first-hand knowledge necessary for my iOS reviews.

I’ve also spent the past couple of years testing and switching back and forth between non-Apple hardware and services. I think every Apple-focused writer should try to expose themselves to different tech products to avoid the perilous traps of preconceptions. Plus, besides the research-driven nature of my experiments, I often preferred third-party offerings to Apple’s because I felt they provided me with something Apple was not delivering.

Since the end of last year, however, I’ve been witnessing a gradual shift that has made me realize my relationship with Apple’s hardware and software has changed. I’ve progressively gotten deeper into the Apple ecosystem, and I no longer feel underserved by some aspects of it.

Probably for the first time since I started MacStories nine years ago, I feel comfortable using Apple’s services and hardware extensively not because I’ve given up on searching for third-party products, but because I’ve tried them all. And ultimately, none of them made me happier with my tech habits. It took me years of experiments (and a lot of money spent on gadgets and subscriptions) to realize that, for a variety of reasons, I had found a healthy tech balance by consciously deciding to embrace the Apple ecosystem.

Siri Struggles with Commands Handled by the Original 2010 App→

Nick Heer at Pixel Envy tested how well Siri in 2018 handles the commands given to the voice assistant in a 2010 demo video. The video takes Siri, which started as a stand-alone, third-party app, through a series of requests like ‘I’d like a romantic place for Italian food near my office.’ Just a couple of months after the video was published, Siri was acquired by Apple, and the team behind it, including the video’s narrator, Tom Gruber, began integrating Siri into iOS.

That was eight years ago. Inspired by a tweet, Heer tested how well Siri performs when given the same commands today. As Heer acknowledges, the results will vary depending on your location, and the test is by no means comprehensive, but Siri’s performance is an eye-opener nonetheless.

> What’s clear to me is that the Siri of eight years ago was, in some circumstances, more capable than the Siri of today. That could simply be because the demo video was created in Silicon Valley, and things tend to perform better there than almost anywhere else. But it’s been eight years since that was created, and over seven since Siri was integrated into the iPhone. One would think that it should be at least as capable as it was when Apple bought it.

Eight years is an eternity in the tech world. Siri has been fairly criticized recently for gaps in the domains it supports and their balkanization across different platforms, but Heer’s tests are a reminder that Siri still has plenty of room for improvement in how it handles existing domains too. Of course, Siri can do things in 2018 that it couldn’t in 2010, but it still struggles with requests that require an understanding of contexts like location or the user’s last command.

Voice-controlled assistants have become a highly competitive space. Apple was one of the first to recognize their potential with its purchase of Siri, but the company has allowed competitors like Amazon and Google to catch up and pass it in many respects. The issues with Siri aren’t new, but that’s the heart of the problem. Given the current competitive landscape, 2018 feels like a crucial year for Apple to sort out Siri’s long-standing limitations.