On the heels of a feature story in Wired last week, Apple executives and engineers opened up about how Siri works in interviews with Fast Company. As the publication explains, a narrative has emerged that Apple's AI work lags behind other companies' efforts because of its dedication to user privacy.

Speaking to Fast Company, Apple's Greg Joswiak disagrees:

“I think it is a false narrative. It’s true that we like to keep the data as optimized as possible, that’s certainly something that I think a lot of users have come to expect, and they know that we’re treating their privacy maybe different than some others are.”

Joswiak argues that Siri can be every bit as helpful as other assistants without accumulating a lot of personal user data in the cloud, as companies like Facebook and Google are accustomed to doing. “We’re able to deliver a very personalized experience … without treating you as a product that keeps your information and sells it to the highest bidder. That’s just not the way we operate.”

The article offers concrete examples of how Siri works, and of the advances made since its introduction, in more detail than Apple has shared before.

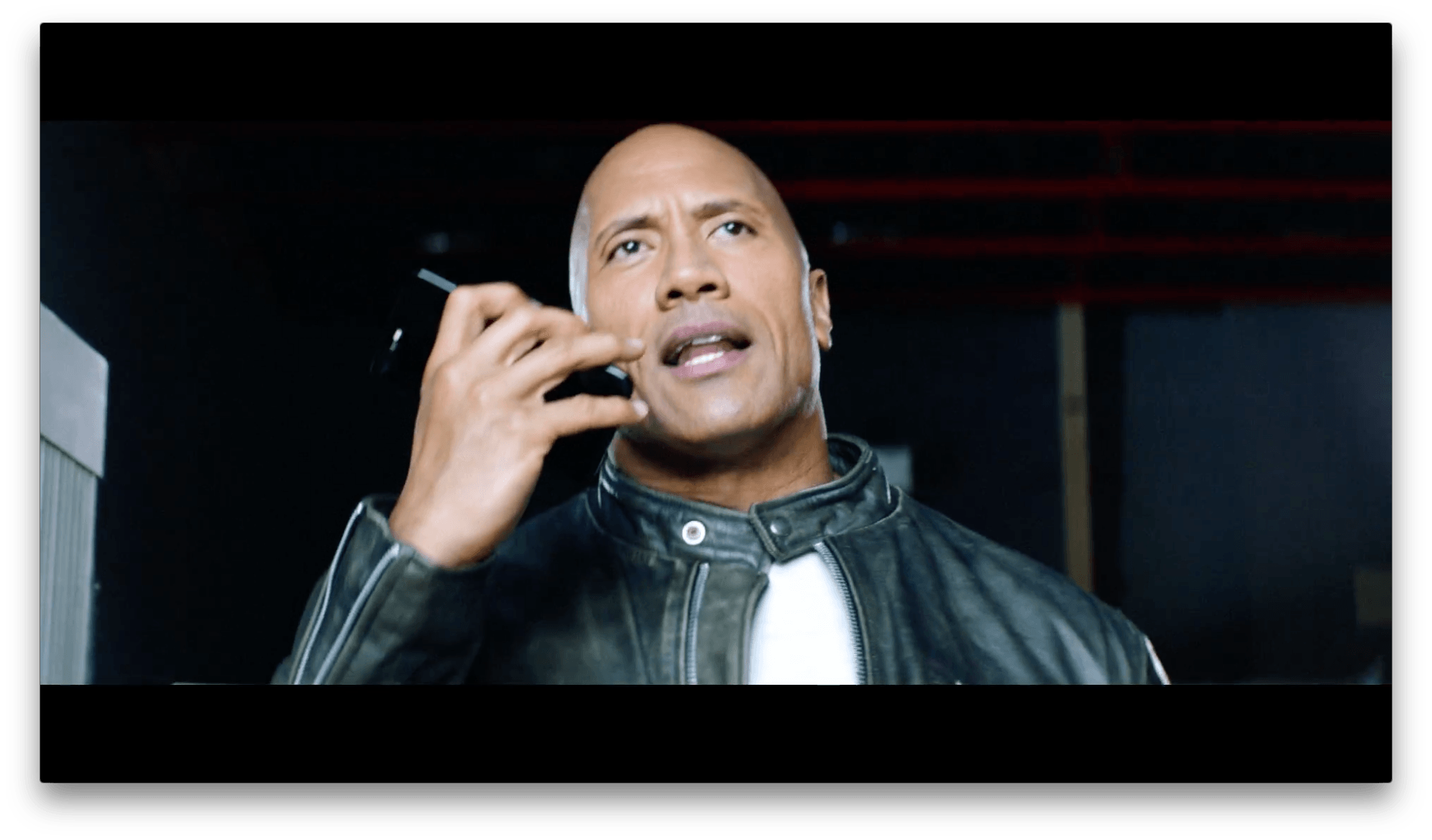

Perceptions of Siri's effectiveness and of Apple's machine learning research are one area where the company's culture of secrecy has hurt it. Apple seems to have recognized this and has made a concerted effort to turn those perceptions around with interviews like the ones in Wired and Fast Company. Apple employees have also begun discussing the company's machine learning and AI initiatives more publicly, through outlets like its recently introduced journal and through presentations. Apple even enlisted The Rock to help it get the word out about Siri's capabilities. Competition for virtual personal assistant supremacy has heated up, and Apple has signaled it has no intention of being left out or backing down.