Steven Levy, writing for Backchannel, interviewed Apple’s Phil Schiller for the tenth anniversary of the iPhone’s introduction:

“If it weren’t for iPod, I don’t know that there would ever be iPhone,” he says. “It introduced Apple to customers that were not typical Apple customers, so iPod went from being an accessory to Mac to becoming its own cultural momentum. During that time, Apple changed. Our marketing changed. We had silhouette ads with dancers and an iconic product with white headphones. We asked, ‘Well, if Apple can do this one thing different than all of its previous products, what else can Apple do?’”

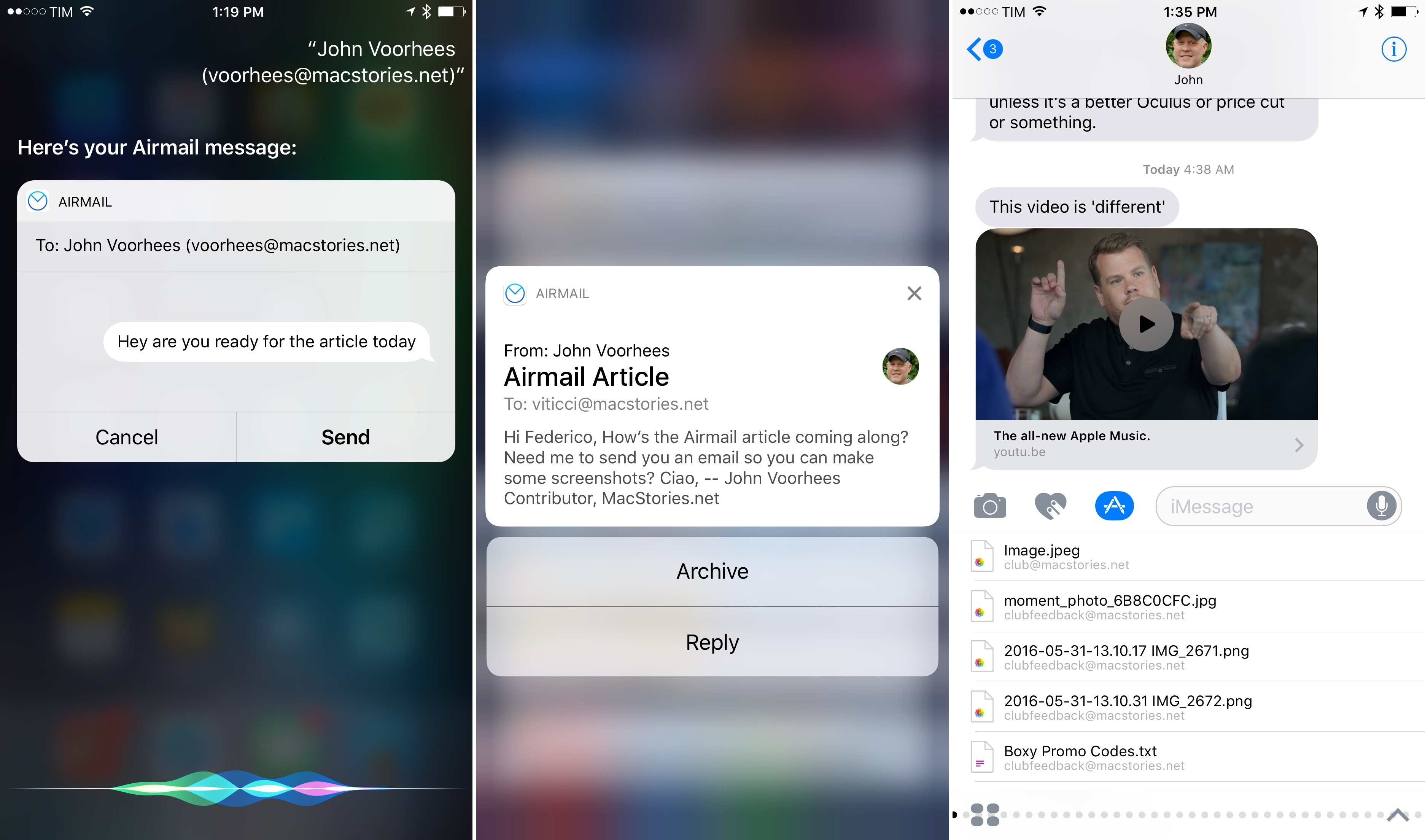

In the story, Schiller also makes an interesting point about Siri and conversational interfaces after being asked about Alexa and competing voice assistants:

“That’s really important,” Schiller says, “and I’m so glad the team years ago set out to create Siri. I think we do more with that conversational interface than anyone else. Personally, I still think the best intelligent assistant is the one that’s with you all the time. Having my iPhone with me as the thing I speak to is better than something stuck in my kitchen or on a wall somewhere.”

[…]

“People are forgetting the value and importance of the display,” he says. “Some of the greatest innovations on iPhone over the last ten years have been in display. Displays are not going to go away. We still like to take pictures and we need to look at them, and a disembodied voice is not going to show me what the picture is.”