Siri Vs. Google Voice Search, Four Months Later

Rob Griffiths, comparing Siri to Google Voice Search at Macworld:

Because of the speed, accuracy, and usefulness of Google’s search results, I’ve pretty much stopped using Siri. Sure, it takes a bit of extra effort to get started, but for me, that effort is worth it. Google has taken a key feature of the iOS ecosystem and made it seem more than a little antiquated. When your main competitor is shipping something that works better, faster, and more intuitively than your built-in solution, I’d hope that’d drive you to improve your built-in solution.

When the Google Search app was updated with Voice Search in October 2012, I concluded by saying:

Right now, the new Voice Search won’t give smarter results to international users, and it would be unfair to compare it to Siri, because they are two different products. Perhaps Google’s intention is to make Voice Search a more Siri-like product with Google Now, but that’s another platform, another product, and, ultimately, pure speculation.

When Clark Goble posted his comparison of Siri Vs. Google Voice Search in November, I summed up my thoughts on the “usefulness” of both voice input solutions:

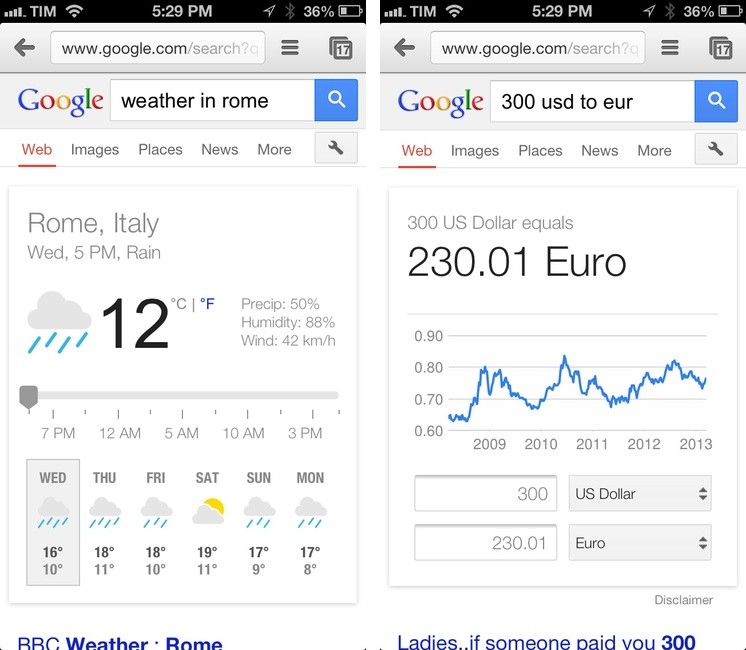

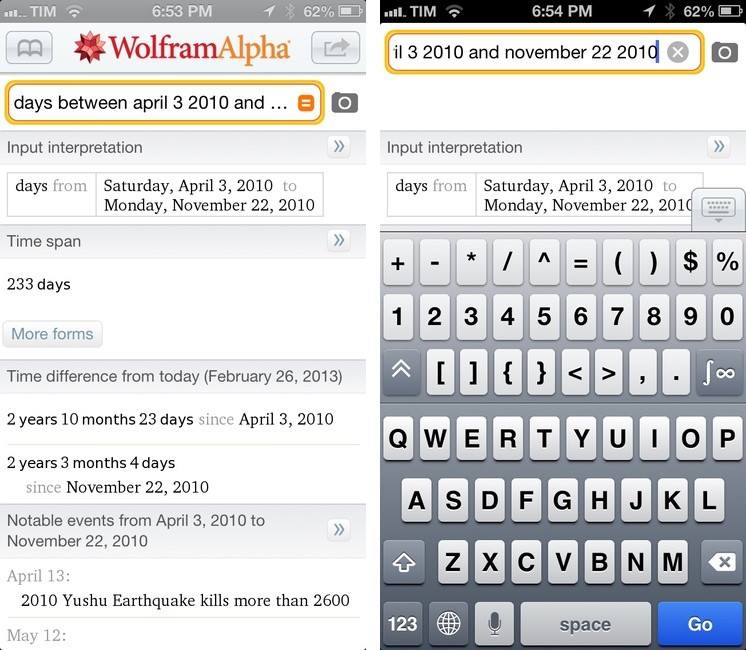

I’m always around a computer or iOS device, and the only times when I can’t directly manipulate a UI with my hands is when I’m driving or cooking. I want to know how Siri compares to Google in letting me complete tasks such as converting pounds to grams and texting my girlfriend, not showing me pictures of the Eiffel Tower.

From my interview with John Siracusa:

And yet the one part of Google voice search that Google can control without Apple’s interference — the part where it listens to your speech and converts it to words — has much better perceptual performance than Siri. Is that just a UI choice, where Apple went with a black box that you speak into and wait to see what Siri thinks you said? Or is it because Google’s speech-to-text service is so much more responsive than Apple’s that Google could afford to provide much more granular feedback? I suspect it’s the latter, and that’s bad for Apple. (And, honestly, if it’s the former, then Apple made a bad call there too.)

Now, four months after Google Voice Search launched, I still think Google’s implementation is, from a user experience standpoint, superior. While it’s nice that Siri says things like “Ok, here you go”, I just want to get results faster. I don’t care if my virtual assistant has manners: I want it to be neutral and efficient. Is Siri’s distinct personality a key element to its success? Does the way Siri is built justify the fact that Google Voice Search is almost twice as fast as Siri? Or are Siri’s manners just a way to give some feedback while the software is working on a process that, in practice, takes more seconds than Google’s?

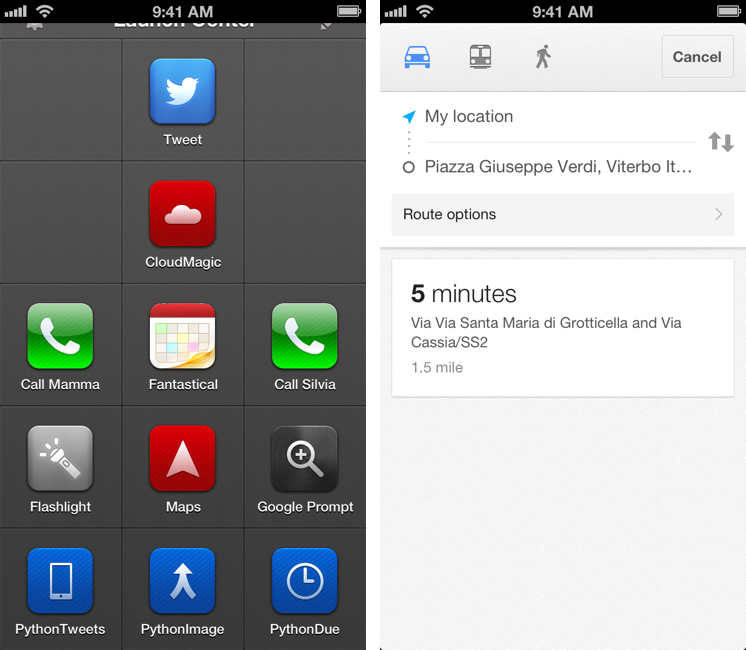

I still believe that Siri’s biggest advantage remains its deep connection with the operating system. Siri is faster to invoke and it can directly plug into apps like Reminders, Calendar, Mail, or Clock. Google can’t parse your upcoming schedule or create new calendar events for you. It’s safe to assume Apple’s policy will always preclude Google from having that kind of automatic, invisible, seamless integration with iOS.

But I have been wondering whether Google could ever take the midway approach and offer a voice-based “assistant” that also plays by Apple’s rules.

Example: users can’t set a default browser on iOS, but Google shipped Chrome as an app; the Gmail app has push notifications; Google Maps was pulled from iOS 6 and Google released it as a standalone app. What’s stopping Google from applying the same concept to a Google Now app? Of course, such an app would be a “watered down” version of Google Now for Android, but it could still request access to your local Calendar and Reminders like other apps can; it would be able to look into your Contacts and location; it would obviously push Google+ as an additional sharing service (alongside the built-in Twitter and Facebook). It would use the Google Maps SDK and offer to open web links in Google Chrome. Search commands would be based on Voice Search technology, but results wouldn’t appear in a web view under a search box – it would be a native app. The app would be able to create new events with or without showing Apple’s UI; for Mail.app and Messages integration, it would work just like Google Chrome’s Mail sharing: it’d bring up a Mail panel with the transcribed version of your voice command.

Technically, I believe this is possible – not because I am assuming it, but because other apps are doing the exact same thing, only with regular text input. See: Drafts. What I don’t know is whether this would be in Google’s interest, or if Apple would ever approve it (although, if based on publicly-available APIs and considering Voice Search was approved, I don’t see why not).

If such an app ever comes out, how many people would, like Rob, “pretty much stop using Siri”? How many would accept the trade-off of a less integrated solution in return for speed and more reliability?

An earlier version of this post stated that calendar events can’t be created programmatically on iOS. That is possible without having to show Apple’s UI, as apps such as Agenda and Fantastical have shown.