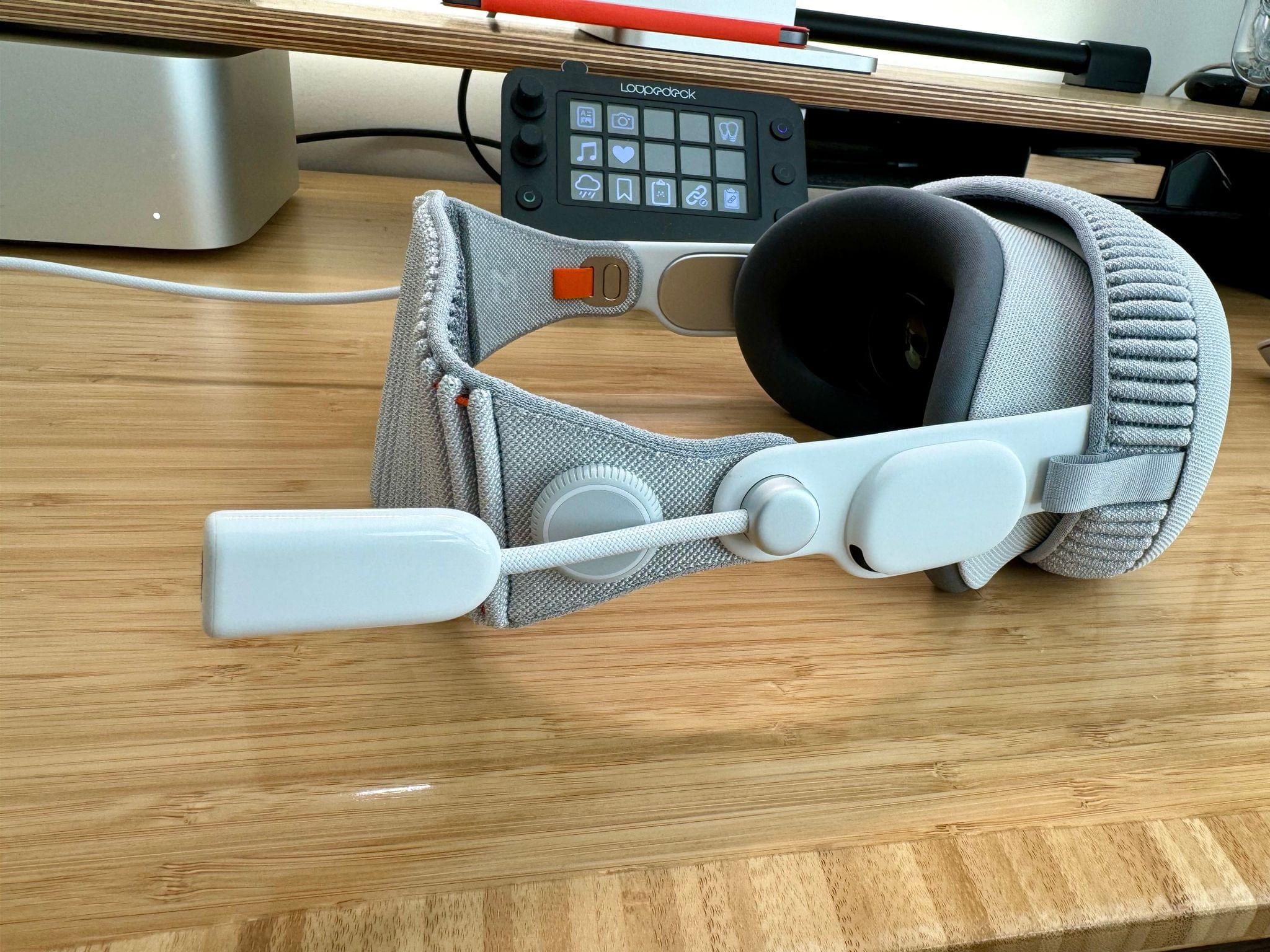

Parchi is a new visionOS sticky note app by Vidit Bhargava, the maker of the dictionary app LookUp. The app is a straightforward but handy utility for jotting down a quick note using your voice.

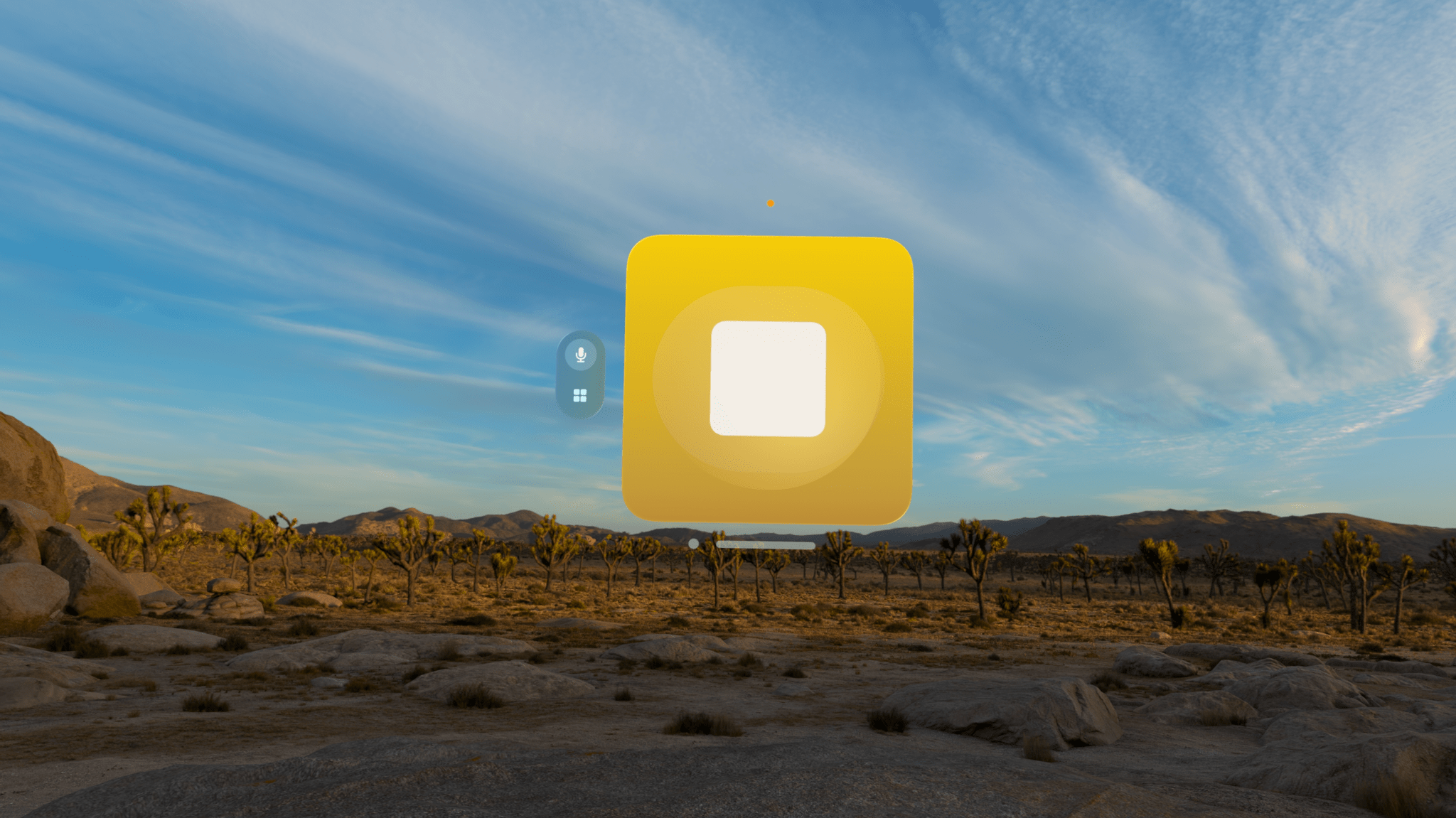

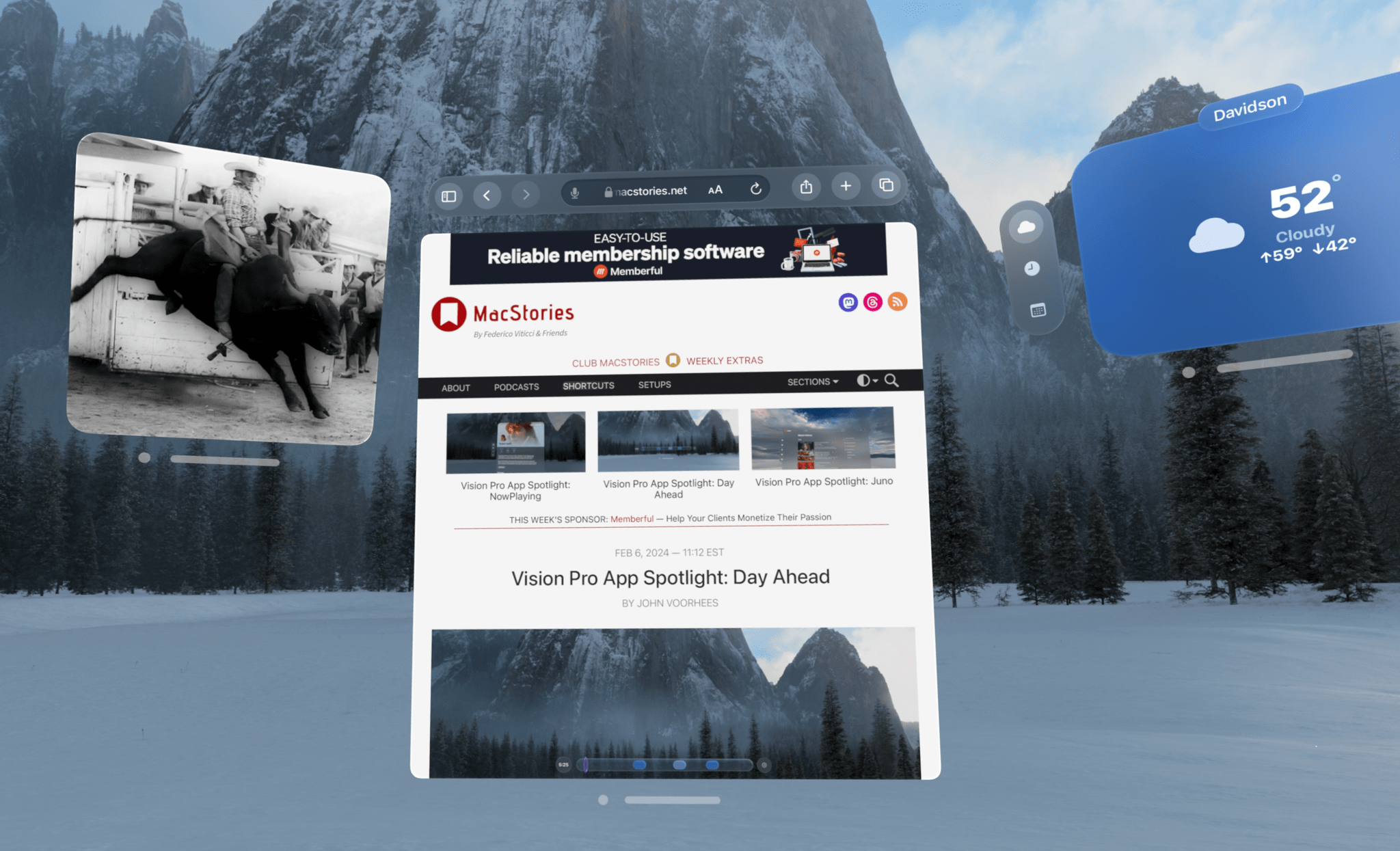

When you launch the app, you’re greeted by a bright yellow gradient window with a big record button in the middle. Tap it and start speaking to record a note. Tap again to stop, and your audio recording will transform into a yellow sticky note with your recording transcribed into text. Once created, you can drag notes around your environment, leaving them in places where they will serve as valuable reminders. In the bottom corners of the note are buttons to play back your audio and delete the note.

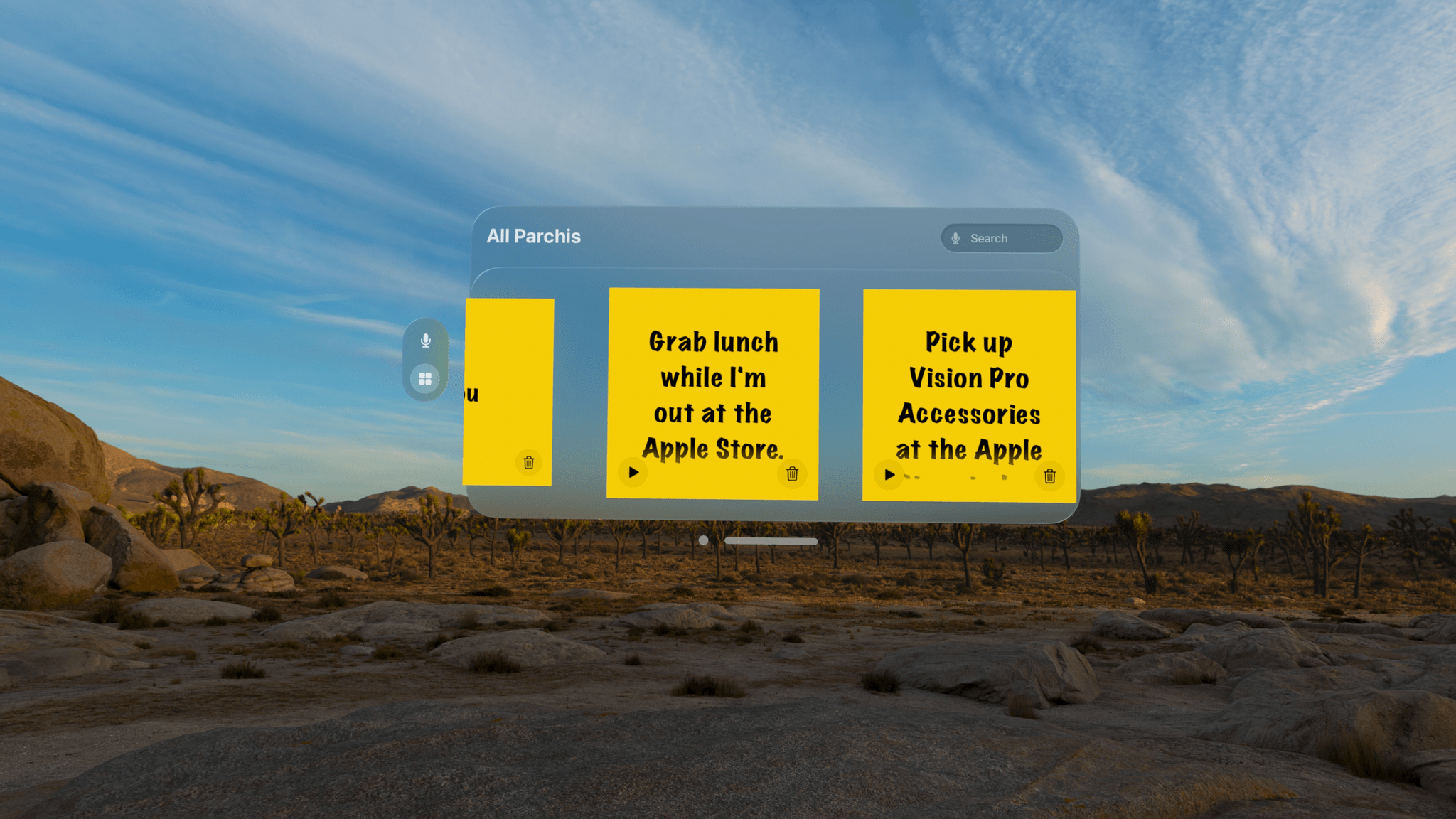

You can also access all of your notes from the app’s main UI by switching from the ‘Parchi Recorder’ view to ‘All Parchis,’ which displays your notes in a horizontally scrolling collection. The ‘All Parchis’ view also includes a search field for finding particular notes in a large collection.

I love Parchi’s simplicity and ease of use, but I’d like to see it expanded a little too. The smallest note size is still somewhat large. It would be great if I could shrink notes even further, with the text transforming into a play button when there isn’t enough room to display it. I’d also like to see Siri and Shortcuts support added to make it possible to create a note without first opening the app. Finally, a copy button would make Parchi a better complement to task managers and other apps by allowing text to be moved elsewhere as needed.

Overall, though, I’ve really enjoyed using Parchi. It’s the kind of quick interaction I appreciate when I’ve got something on my mind that I want to jot down without interrupting the flow of whatever else I’m doing.